Synergistic Use of LiDAR and APEX Hyperspectral Data for High-Resolution Urban Land Cover Mapping

Abstract

1. Introduction

2. Materials and Methods

2.1. Data and Study Area

2.1.1. APEX Hyperspectral Imagery and Image Preprocessing

2.1.2. LiDAR and Derived Features

2.1.3. Very High-Resolution Orthophotos and Other Ancillary Data

2.2. Methodology

2.2.1. Classification Scheme and Sampling Strategy

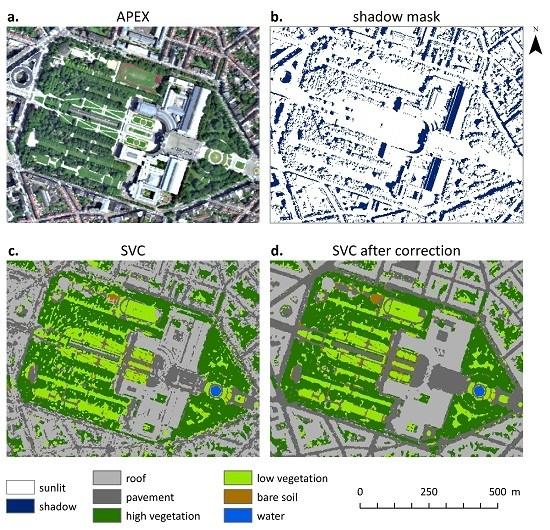

2.2.2. Shadow Detection

2.2.3. Support Vector Classification

2.2.4. LiDAR Post-Classification Correction

2.2.5. Accuracy Assessment

3. Results

3.1. Shadow Detection

3.2. SVC Land-Cover Mapping

3.3. LiDAR Post-Classification Correction

4. Discussion

4.1. Shadow Detection

4.2. SVC Land-Cover Mapping

4.3. LiDAR Post-Classification Correction

5. Conclusions

Supplementary Materials

Acknowledgments

Author Contributions

Conflicts of Interest


| Level 1 | Level 2 | Sunlit Polygons | Sunlit Pixels | Shaded Polygons | Shaded Pixels |
|---|---|---|---|---|---|
| roof | 1. red ceramic tile | 25 | 247 | | |
| | 2. dark ceramic tile | 35 | 307 | 16 | 99 |
| | 3. dark shingle | 33 | 280 | 26 | 150 |
| | 4. bitumen | 29 | 260 | 12 | 121 |
| | 5. fiber cement | 28 | 306 | 11 | 92 |
| | 6. bright roof material | 27 | 311 | 14 | 122 |
| | 7. hydrocarbon roofing | 30 | 308 | 12 | 115 |
| | 8. gray metal | 15 | 143 | | |
| | 9. green metal | 20 | 216 | | |
| | 10. paved roof | 28 | 302 | 11 | 99 |
| | 11. glass | 13 | 153 | | |
| | 12. gravel roofing | 30 | 322 | | |
| | 13. green roof | 24 | 258 | | |
| | 14. solar panel | 28 | 309 | | |
| pavement | 15. asphalt | 27 | 313 | 17 | 158 |
| | 16. concrete | 29 | 328 | 17 | 159 |
| | 17. red concrete pavers | 28 | 312 | 14 | 105 |
| | 18. railroad track | 29 | 300 | 12 | 91 |
| | 19. cobblestone | 20 | 229 | 10 | 100 |
| | 20. bright gravel | 19 | 195 | 13 | 135 |
| | 21. red gravel | 28 | 309 | 10 | 112 |
| | 22. tartan | 17 | 198 | | |
| | 23. artificial turf | 26 | 307 | 9 | 112 |
| | 24. green surface | 25 | 282 | | |
| high vegetation | 25. high vegetation | 27 | 312 | 14 | 107 |
| low vegetation | 26. low vegetation | 27 | 306 | 14 | 133 |
| bare soil | 27. bare soil | 31 | 342 | | |
| water° | 28. water° | n/a | n/a | n/a | n/a |

| Mapping Result | Sunlit PCC | Sunlit Overall Kappa | Shaded PCC | Shaded Overall Kappa |
|---|---|---|---|---|
| SVC (before correction) | 0.81 | 0.80 | 0.67 | 0.65 |
| LiDAR correction (height) | 0.85 | 0.84 | 0.71 | 0.69 |
| LiDAR correction (slope) | 0.84 | 0.83 | 0.68 | 0.65 |
| LiDAR correction (roughness) | 0.81 | 0.81 | 0.67 | 0.65 |
| LiDAR correction (all) | 0.88 | 0.87 | 0.71 | 0.69 |
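The table above summarizes each mapping result by PCC and overall kappa. As a reading aid, the following minimal sketch shows how both statistics are conventionally derived from a confusion matrix. It assumes that PCC is the proportion of validation samples on the matrix diagonal and that "overall kappa" is the standard Cohen's kappa used in remote-sensing accuracy assessment; the function name `pcc_and_kappa` and the 2×2 example matrix are hypothetical and are not taken from the study.

```python
from typing import Sequence, Tuple


def pcc_and_kappa(cm: Sequence[Sequence[int]]) -> Tuple[float, float]:
    """Return (PCC, Cohen's kappa) for a square confusion matrix.

    Hypothetical helper for illustration. Rows are taken as mapped labels
    and columns as reference labels; both statistics are unaffected if the
    roles are swapped.
    """
    k = len(cm)
    if k == 0 or any(len(row) != k for row in cm):
        raise ValueError("confusion matrix must be square and non-empty")
    n = sum(sum(row) for row in cm)
    if n == 0:
        raise ValueError("confusion matrix contains no samples")

    # Observed agreement: share of samples on the diagonal (the PCC).
    p_o = sum(cm[i][i] for i in range(k)) / n

    # Agreement expected by chance, from the row and column marginals.
    row_tot = [sum(row) for row in cm]
    col_tot = [sum(cm[i][j] for i in range(k)) for j in range(k)]
    p_e = sum(r * c for r, c in zip(row_tot, col_tot)) / (n * n)
    if p_e == 1.0:
        raise ValueError("kappa is undefined when chance agreement equals 1")

    # Kappa rescales observed agreement relative to chance agreement.
    kappa = (p_o - p_e) / (1.0 - p_e)
    return p_o, kappa


if __name__ == "__main__":
    # Illustrative two-class matrix (not study data).
    pcc, kappa = pcc_and_kappa([[45, 5], [10, 40]])
    print(f"PCC = {pcc:.2f}, kappa = {kappa:.2f}")
```

For the illustrative matrix, the sketch returns a PCC of 0.85 and a kappa of 0.70: chance agreement is 0.5, so kappa is (0.85 − 0.5)/(1 − 0.5). With many classes, as in the 27-class scheme here, chance agreement is typically small, which is consistent with the kappa values in the table above sitting at or just below the corresponding PCC.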

| Land Cover | SVC | Cor. (Height) | Cor. (Slope) | Cor. (Roughness) | Cor. (All) |
|---|---|---|---|---|---|
| 1. red ceramic tile | 0.82 | 0.82 | 0.88 | 0.82 | 0.88 |
| 2. dark ceramic tile | 0.81 | 0.95 | 0.95 | 0.81 | 0.99 |
| 3. dark shingle | 0.51 | 0.51 | 0.94 | 0.50 | 0.94 |
| 4. bitumen | 0.77 | 0.79 | 0.90 | 0.76 | 0.93 |
| 5. fiber cement | 0.85 | 0.84 | 0.79 | 0.85 | 0.75 |
| 6. bright roof material | 0.55 | 0.64 | 0.55 | 0.55 | 0.63 |
| 7. reflective hydrocarbon | 0.75 | 0.90 | 0.81 | 0.73 | 0.95 |
| 8. gray metal | 0.82 | 0.83 | 0.78 | 0.82 | 0.76 |
| 9. green metal | 0.95 | 0.97 | 0.97 | 0.95 | 0.97 |
| 10. paved roof | 0.87 | 0.89 | 0.87 | 0.87 | 0.88 |
| 11. glass | 0.91 | 0.89 | 0.91 | 0.91 | 0.89 |
| 12. gravel roofing | 0.71 | 0.81 | 0.69 | 0.70 | 0.83 |
| 13. extensive green roof | 0.84 | 1.00 | 0.83 | 0.84 | 1.00 |
| 14. solar panel | 1.00 | 1.00 | 0.96 | 1.00 | 0.95 |
| 15. asphalt | 0.90 | 0.98 | 0.92 | 0.95 | 0.92 |
| 16. concrete | 0.52 | 0.71 | 0.62 | 0.51 | 0.71 |
| 17. red concrete pavers | 0.83 | 1.00 | 0.78 | 0.79 | 1.00 |
| 18. railroad track | 0.83 | 0.70 | 0.80 | 0.98 | 0.98 |
| 19. cobblestone | 0.59 | 0.75 | 0.53 | 0.62 | 0.69 |
| 20. bright gravel | 0.90 | 0.72 | 0.90 | 0.90 | 0.72 |
| 21. red gravel | 0.96 | 0.96 | 1.00 | 0.96 | 0.89 |
| 22. tartan | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
| 23. artificial turf | 0.98 | 0.98 | 0.93 | 0.98 | 0.97 |
| 24. green surface | 1.00 | 1.00 | 1.00 | 1.00 | 1.00 |
| 25. high vegetation | 1.00 | 0.85 | 1.00 | 1.00 | 1.00 |
| 26. low vegetation | 0.80 | 0.94 | 0.80 | 0.80 | 0.94 |
| 27. bare soil | 0.93 | 0.85 | 0.92 | 0.93 | 0.85 |

| Land Cover | SVC | Cor. (Height) | Cor. (Slope) | Cor. (Roughness) | Cor. (All) |
|---|---|---|---|---|---|
| 1. red ceramic tile | 0.47 | 0.57 | 0.53 | 0.47 | 0.55 |
| 2. dark ceramic tile | 0.72 | 0.67 | 0.60 | 0.72 | 0.57 |
| 3. dark shingle | 0.28 | 0.36 | 0.48 | 0.28 | 0.49 |
| 4. bitumen | 0.51 | 0.75 | 0.42 | 0.44 | 0.94 |
| 5. fiber cement | 1.00 | 0.82 | 0.82 | 1.00 | 0.80 |
| 6. bright roof material | 0.65 | 0.85 | 0.60 | 0.65 | 0.81 |
| 7. reflective hydrocarbon | 0.77 | 0.93 | 0.96 | 0.77 | 0.86 |
| 8. gray metal | 1.00 | 1.00 | 0.80 | 1.00 | 0.84 |
| 15. asphalt | 0.41 | 0.51 | 0.48 | 0.46 | 0.57 |
| 16. concrete | 0.24 | 0.30 | 0.25 | 0.18 | 0.25 |
| 18. railroad track | 0.95 | 0.96 | 0.98 | 0.95 | 0.98 |
| 19. cobblestone | 0.43 | 0.37 | 0.45 | 0.38 | 0.34 |
| 20. bright gravel | 0.88 | 0.69 | 0.88 | 0.89 | 0.70 |
| 21. red gravel | 0.87 | 0.74 | 0.98 | 0.87 | 0.81 |
| 23. artificial turf | 0.66 | 0.76 | 0.69 | 0.66 | 0.72 |
| 25. high vegetation | 0.42 | 0.50 | 0.39 | 0.72 | 0.94 |
| 26. low vegetation | 0.94 | 0.98 | 0.95 | 0.94 | 0.98 |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Priem, F.; Canters, F. Synergistic Use of LiDAR and APEX Hyperspectral Data for High-Resolution Urban Land Cover Mapping. Remote Sens. 2016, 8, 787. https://doi.org/10.3390/rs8100787
