Mapping Crop Planting Quality in Sugarcane from UAV Imagery: A Pilot Study in Nicaragua

Abstract

1. Introduction

2. Methods

2.1. Study Site and Aerial Campaign

2.2. UAV System

2.3. Image Mosaicking and Geo-Referencing

2.4. Stolf Method

2.5. Processing

2.5.1. Reference Information

2.5.2. Classification

2.5.3. Linear Gap Percentage and Crop Planting Quality Maps

- (i) We compared boxplots of Gap percentage and Linear Gap percentage by “Crop Planting Quality Levels” to explore visually whether the image-derived information is appropriate for defining “Crop Planting Quality Levels”.

- (ii) We fitted a linear regression model of “Linear Gap percentage” vs. “Gap percentage”.
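Step (ii) can be illustrated with a minimal ordinary least-squares sketch. It assumes that Linear Gap percentage is the response and Gap percentage the predictor; the function name `fit_line` and the sample values are hypothetical and are not data from the study.

```python
from statistics import mean


def fit_line(x, y):
    """Ordinary least-squares fit of y = intercept + slope * x.

    Returns (intercept, slope, r_squared).
    """
    if len(x) != len(y) or len(x) < 2:
        raise ValueError("x and y must have the same length (at least 2)")
    mx, my = mean(x), mean(y)
    sxx = sum((xi - mx) ** 2 for xi in x)
    syy = sum((yi - my) ** 2 for yi in y)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    if sxx == 0 or syy == 0:
        raise ValueError("x and y must each contain at least two distinct values")
    slope = sxy / sxx
    intercept = my - slope * mx
    r_squared = sxy ** 2 / (sxx * syy)
    return intercept, slope, r_squared


# Hypothetical per-cell values for illustration only (NOT study data).
gap_pct = [20.0, 30.0, 40.0, 50.0]         # predictor: Gap percentage
linear_gap_pct = [2.0, 10.0, 18.0, 26.0]   # response: Linear Gap percentage

intercept, slope, r_squared = fit_line(gap_pct, linear_gap_pct)
print(f"intercept={intercept:.3f} slope={slope:.3f} R2={r_squared:.3f}")
```

In practice each pair would come from one grid cell, with the Gap percentage taken from the classified image and the Linear Gap percentage from the interactive measurement.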

3. Results

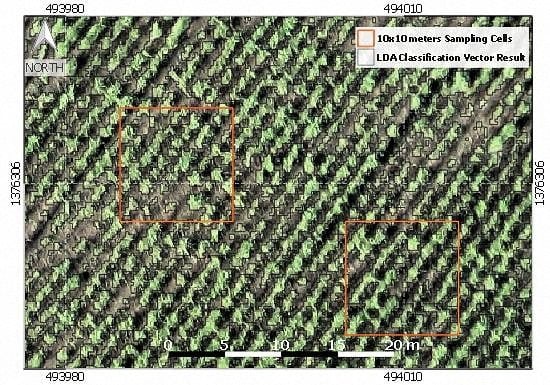

3.1. Classification

3.2. Classification and Crop Planting Quality Levels

- Classification by means of LDA results in a binary map of “sugarcane” and “gap”, with an overall accuracy of 97% when estimated by cross-validation and 92% when estimated on an independent sub-sample;

- For grid cells of 10 m × 10 m, the interactive Linear Gap percentage is linearly related to the Gap percentage based on the classification (R² = 0.91, p << 0.1), which lets us apply Stolf’s standard thresholds to label Gap percentage as Crop Planting Quality Levels;

- The map of Crop Planting Quality is calculated from a 10 m × 10 m grid of Gap percentage derived from the classification. Crop Planting Quality from this grid agrees with Crop Planting Quality based on the interactive Linear Gap percentage (Spearman correlation 0.92).
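The gridding step in the last point can be sketched as follows. This is a minimal illustration, not the authors' workflow: it assumes the binary map is available as a 2D array of 0/1 values (1 = gap) and that the cell side in pixels, `cell_px`, has been derived from the image resolution (for instance, 10 m divided by the pixel size). The function name and the tiny mask are hypothetical.

```python
def gap_percentage_grid(gap_mask, cell_px):
    """Aggregate a binary gap mask (1 = gap, 0 = sugarcane) into square cells.

    gap_mask: list of equal-length rows of 0/1 values.
    cell_px:  side of a grid cell, in pixels.
    Returns a 2D list with the percentage of gap pixels in each cell.
    Cells at the right and bottom edges may be smaller than cell_px.
    """
    if cell_px < 1:
        raise ValueError("cell_px must be a positive integer")
    n_rows, n_cols = len(gap_mask), len(gap_mask[0])
    grid = []
    for r0 in range(0, n_rows, cell_px):
        grid_row = []
        for c0 in range(0, n_cols, cell_px):
            block = [
                gap_mask[r][c]
                for r in range(r0, min(r0 + cell_px, n_rows))
                for c in range(c0, min(c0 + cell_px, n_cols))
            ]
            grid_row.append(100.0 * sum(block) / len(block))
        grid.append(grid_row)
    return grid


# Hypothetical 4 x 4 mask aggregated into 2 x 2 pixel cells (NOT study data).
mask = [
    [1, 1, 0, 0],
    [1, 0, 0, 0],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
grid = gap_percentage_grid(mask, cell_px=2)
print(grid)  # [[75.0, 0.0], [0.0, 100.0]]
```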

4. Discussion

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| UAV | Unmanned Aerial Vehicle |
| UAS | Unmanned Aerial System |
| RPAS | Remotely Piloted Aircraft System |
| FAO | Food and Agriculture Organization of the United Nations |
| RS | Remote Sensing |
| RGB | Red-Green-Blue color composite |
| CCD | Charge-Coupled Device |
| QGIS | Quantum Geographic Information System |
| RMSE | Root Mean Square Error |
| LDA | Linear Discriminant Analysis |
| CLAHE | Contrast Limited Adaptive Histogram Equalization |
| RTK | Real Time Kinematic |
| SfM | Structure from Motion |
| CSM | Crop Surface Model |


| Gap Percentage | Planting Quality | Observations |
|---|---|---|
| 0–10 | Excellent | Exceptional germination conditions |
| 10–20 | Normal | Most common type observed |
| 20–35 | Subnormal | Possibility of renewal may be considered |
| 35–50 | Bad | Renewal should be considered |
| >50 | Very bad | Renewal/Replanting |
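The thresholds in the table above can be applied with a small lookup, sketched here. The table leaves shared boundaries (10, 20, 35) ambiguous; this sketch assumes upper bounds are inclusive, which matches the last row (">50" implies that exactly 50 is still "Bad"). The function name `planting_quality` is hypothetical.

```python
# (upper bound in percent, label); upper bounds are treated as inclusive,
# which is an assumption for the shared boundaries 10, 20 and 35.
STOLF_LEVELS = [
    (10.0, "Excellent"),
    (20.0, "Normal"),
    (35.0, "Subnormal"),
    (50.0, "Bad"),
    (100.0, "Very bad"),
]


def planting_quality(gap_pct):
    """Return the planting quality label for a gap percentage in [0, 100]."""
    if not 0.0 <= gap_pct <= 100.0:
        raise ValueError("gap percentage must be between 0 and 100")
    for upper, label in STOLF_LEVELS:
        if gap_pct <= upper:
            return label
    raise AssertionError("unreachable: the last upper bound is 100")


for value in (5.0, 20.0, 27.5, 50.0, 62.0):
    print(value, planting_quality(value))
```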

| Photo-interpretation | Image Classification | | |
|---|---|---|---|
| | Regular Soil or Withered Sugarcane | Fresh Sugarcane | Dark Soil |
| Regular soil or withered sugarcane | 172 | 0 | 0 |
| Fresh sugarcane | 9 | 109 | 0 |
| Dark soil | 10 | 0 | 0 |

| Photo-interpretation | Image Classification | |
|---|---|---|
| | Gap | Sugarcane |
| Gap | 182 (64) | 0 (0) |
| Sugarcane | 9 (6) | 109 (15) |
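Overall accuracy follows directly from a confusion matrix as the sum of the diagonal divided by the total count. The sketch below applies this to the binary table above. Reading the plain counts as the cross-validation estimate and the counts in parentheses as the independent sub-sample is an assumption, although the first result does reproduce the 97% reported in the text.

```python
def overall_accuracy(matrix):
    """Fraction of samples on the diagonal of a square confusion matrix."""
    total = sum(sum(row) for row in matrix)
    if total == 0:
        raise ValueError("confusion matrix is empty")
    correct = sum(matrix[i][i] for i in range(len(matrix)))
    return correct / total


# Rows: photo-interpretation (Gap, Sugarcane); columns: image classification.
plain_counts = [[182, 0], [9, 109]]      # assumed: cross-validation
parenthesised_counts = [[64, 0], [6, 15]]  # assumed: independent sub-sample

acc_plain = overall_accuracy(plain_counts)          # 291 / 300 = 0.97
acc_paren = overall_accuracy(parenthesised_counts)  # 79 / 85, about 0.929
print(f"{acc_plain:.3f} {acc_paren:.3f}")
```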

| Statistic | Value | Standard Error | p |
|---|---|---|---|
| Intercept | −15.600 | 2.876 | 1.70 × 10^−50 |
| Slope | 0.814 | 0.054 | 1.85 × 10^−13 |
| RSE | 5.254 | 5.254 | |
| R² | 0.909 | | |
| F | 229.500 (23 d.o.f.) | | 1.85 × 10^−13 |

| Based on Linear Gap Percentage (interactive) | Based on Gap Percentage (Image Classification) | | | | |
|---|---|---|---|---|---|
| | Very Bad | Bad | Sub-Normal | Normal | Excellent |
| Very Bad | 2 | 1 | 0 | 0 | 0 |
| Bad | 1 | 2 | 1 | 0 | 0 |
| Sub-Normal | 0 | 0 | 7 | 0 | 0 |
| Normal | 0 | 0 | 2 | 4 | 1 |
| Excellent | 0 | 0 | 0 | 1 | 3 |
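As a complement to the rank correlation reported in the text, the table above can be summarised by simple agreement rates. The sketch below is an illustrative addition, not a computation from the paper: it counts the cells where both methods assign the same level, and the cells where they differ by at most one level. The function name `agreement` is hypothetical.

```python
LEVELS = ["Very Bad", "Bad", "Sub-Normal", "Normal", "Excellent"]

# Rows: level from the interactive Linear Gap percentage.
# Columns: level from the Gap percentage of the image classification.
quality_matrix = [
    [2, 1, 0, 0, 0],
    [1, 2, 1, 0, 0],
    [0, 0, 7, 0, 0],
    [0, 0, 2, 4, 1],
    [0, 0, 0, 1, 3],
]


def agreement(matrix, tolerance=0):
    """Fraction of cells whose two levels differ by at most `tolerance` steps."""
    total = sum(sum(row) for row in matrix)
    if total == 0:
        raise ValueError("confusion matrix is empty")
    close = sum(
        count
        for i, row in enumerate(matrix)
        for j, count in enumerate(row)
        if abs(i - j) <= tolerance
    )
    return close / total


exact = agreement(quality_matrix)                   # 18 / 25 = 0.72
within_one = agreement(quality_matrix, tolerance=1)  # 25 / 25 = 1.0
print(exact, within_one)
```

All disagreements in the table fall in adjacent levels, which is why the within-one-level rate reaches 1.0 while exact agreement is 0.72.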

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Luna, I.; Lobo, A. Mapping Crop Planting Quality in Sugarcane from UAV Imagery: A Pilot Study in Nicaragua. Remote Sens. 2016, 8, 500. https://doi.org/10.3390/rs8060500
