A Scale-Driven Change Detection Method Incorporating Uncertainty Analysis for Remote Sensing Images

Abstract

1. Introduction

2. Methodology

2.1. Multiscale Segmentation of Difference Image

2.2. Pixel-Based CD Using FCM

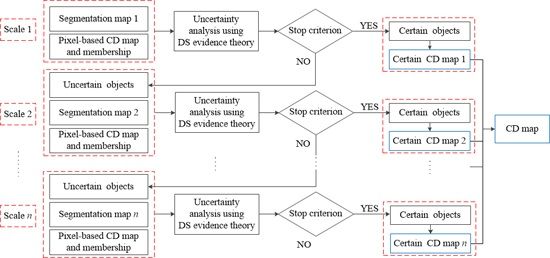

2.3. Scale-Driven CD Incorporating Uncertainty Analysis (SDCDUA)

3. Experimental Results and Analysis

3.1. Experiments of Landsat-7 ETM+ Data Set

3.1.1. Description of Data Set 1

3.1.2. Results and Analysis of Experiment 1

3.2. Experiments of SPOT 5 Data Set

3.2.1. Description of Data Set 2

3.2.2. Results and Analysis of Experiment 2

4. Discussion

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

| Q | Missed Detections: Nm | Missed Detections: Pm (%) | False Alarms: Nf | False Alarms: Pf (%) | Total Errors: Nt | Total Errors: Pt (%) |
|---|---|---|---|---|---|---|
| 32 | 13,187 | 43.5 | 397 | 0.3 | 13,584 | 8.5 |
| 64 | 9357 | 30.9 | 869 | 0.7 | 10,226 | 6.4 |
| 128 | 10,580 | 34.9 | 815 | 0.6 | 11,395 | 7.1 |
| 256 | 12,457 | 41.1 | 566 | 0.4 | 13,023 | 8.1 |
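
The error columns in these tables can be illustrated with a small sketch. It assumes the convention common in the change detection literature, which this excerpt does not state explicitly: Nm counts changed reference pixels labelled unchanged, Nf counts unchanged reference pixels labelled changed, Nt = Nm + Nf, and Pm, Pf, and Pt are Nm, Nf, and Nt expressed as percentages of the changed, unchanged, and total reference pixels, respectively. The function name `change_detection_errors` and the toy maps are hypothetical. The relation Nt = Nm + Nf does agree with, for example, the Q = 64 row above (9357 + 869 = 10,226).

```python
from typing import Dict, Sequence


def change_detection_errors(
    detected: Sequence[int], reference: Sequence[int]
) -> Dict[str, float]:
    """Compare two flattened binary maps (1 = changed, 0 = unchanged).

    Assumed definitions (not taken from the article text):
      Nm: reference-changed pixels detected as unchanged (missed detections)
      Nf: reference-unchanged pixels detected as changed (false alarms)
      Nt: Nm + Nf (total errors)
      Pm, Pf, Pt: Nm, Nf, Nt as percentages of changed, unchanged,
                  and all reference pixels.
    """
    if len(detected) != len(reference):
        raise ValueError("maps must have the same number of pixels")

    n_total = len(reference)
    n_changed = sum(1 for r in reference if r == 1)
    n_unchanged = n_total - n_changed

    nm = sum(1 for d, r in zip(detected, reference) if r == 1 and d == 0)
    nf = sum(1 for d, r in zip(detected, reference) if r == 0 and d == 1)
    nt = nm + nf

    def pct(count: int, base: int) -> float:
        # Guard against an empty class in the reference map.
        return 100.0 * count / base if base else 0.0

    return {
        "Nm": nm, "Pm": pct(nm, n_changed),
        "Nf": nf, "Pf": pct(nf, n_unchanged),
        "Nt": nt, "Pt": pct(nt, n_total),
    }


# Toy 10-pixel example: 4 changed and 6 unchanged reference pixels.
reference_map = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
detected_map = [1, 1, 0, 0, 1, 0, 0, 0, 0, 0]
toy_errors = change_detection_errors(detected_map, reference_map)
print(toy_errors)
```

In the toy example, two changed pixels are missed and one unchanged pixel is flagged, so Nm = 2, Nf = 1, and Nt = 3 under the assumed definitions.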

| Tm | Missed Detections: Nm | Missed Detections: Pm (%) | False Alarms: Nf | False Alarms: Pf (%) | Total Errors: Nt | Total Errors: Pt (%) |
|---|---|---|---|---|---|---|
| 0.6 | 4896 | 16.2 | 1735 | 1.3 | 6631 | 4.2 |
| 0.65 | 4632 | 15.3 | 1779 | 1.4 | 6411 | 4.0 |
| 0.7 | 4632 | 15.3 | 1779 | 1.4 | 6411 | 4.0 |
| 0.75 | 4632 | 15.3 | 1779 | 1.4 | 6411 | 4.0 |
| 0.8 | 4653 | 15.4 | 1774 | 1.4 | 6427 | 4.0 |
| 0.85 | 4653 | 15.4 | 1774 | 1.4 | 6427 | 4.0 |
| 0.9 | 4896 | 16.2 | 1774 | 1.4 | 6670 | 4.2 |

| Methods | Missed Detections: Nm | Missed Detections: Pm (%) | False Alarms: Nf | False Alarms: Pf (%) | Total Errors: Nt | Total Errors: Pt (%) |
|---|---|---|---|---|---|---|
| DRLSE | 9149 | 30.2 | 4021 | 3.1 | 13,440 | 8.4 |
| CV | 6302 | 20.8 | 9858 | 7.6 | 16,160 | 10.1 |
| MLSK | 7483 | 24.7 | 5059 | 3.9 | 12,542 | 7.8 |
| EMAC | 7604 | 25.1 | 4151 | 3.2 | 11,755 | 7.3 |
| OBCD | 9357 | 30.9 | 869 | 0.7 | 10,226 | 6.4 |
| SDCDUA | 4653 | 15.4 | 1774 | 1.4 | 6427 | 4.0 |

| Q | Missed Detections: Nm | Missed Detections: Pm (%) | False Alarms: Nf | False Alarms: Pf (%) | Total Errors: Nt | Total Errors: Pt (%) |
|---|---|---|---|---|---|---|
| 32 | 23,929 | 68.0 | 1705 | 0.8 | 25,634 | 10.6 |
| 64 | 15,503 | 44.1 | 7362 | 3.5 | 22,865 | 9.4 |
| 128 | 13,552 | 38.5 | 10,941 | 5.3 | 24,493 | 10.1 |
| 256 | 30,054 | 85.4 | 1487 | 0.7 | 31,541 | 13.0 |

| Tm | Missed Detections: Nm | Missed Detections: Pm (%) | False Alarms: Nf | False Alarms: Pf (%) | Total Errors: Nt | Total Errors: Pt (%) |
|---|---|---|---|---|---|---|
| 0.6 | 12,345 | 35.1 | 8046 | 3.9 | 20,391 | 8.4 |
| 0.65 | 12,345 | 35.1 | 8247 | 4.0 | 20,592 | 8.5 |
| 0.7 | 12,345 | 35.1 | 8247 | 4.0 | 20,592 | 8.5 |
| 0.75 | 12,345 | 35.1 | 8247 | 4.0 | 20,592 | 8.5 |
| 0.8 | 12,345 | 35.1 | 8247 | 4.0 | 20,592 | 8.5 |
| 0.85 | 12,345 | 35.1 | 8247 | 4.0 | 20,592 | 8.5 |
| 0.9 | 12,954 | 36.8 | 5339 | 2.6 | 18,293 | 7.5 |

| Methods | Missed Detections: Nm | Missed Detections: Pm (%) | False Alarms: Nf | False Alarms: Pf (%) | Total Errors: Nt | Total Errors: Pt (%) |
|---|---|---|---|---|---|---|
| DRLSE | 17,700 | 50.3 | 7480 | 3.6 | 25,180 | 10.3 |
| CV | 9747 | 27.7 | 21,401 | 10.3 | 31,148 | 12.8 |
| MLSK | 12,035 | 34.2 | 16,830 | 8.1 | 28,913 | 11.9 |
| EMAC | 12,633 | 35.9 | 13,921 | 6.7 | 26,554 | 10.9 |
| OBCD | 15,503 | 44.1 | 7362 | 3.5 | 22,865 | 9.4 |
| SDCDUA | 12,954 | 36.8 | 5339 | 2.6 | 18,293 | 7.5 |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Hao, M.; Shi, W.; Zhang, H.; Wang, Q.; Deng, K. A Scale-Driven Change Detection Method Incorporating Uncertainty Analysis for Remote Sensing Images. Remote Sens. 2016, 8, 745. https://doi.org/10.3390/rs8090745
