Improving Spectral Estimation of Soil Organic Carbon Content through Semi-Supervised Regression

Abstract

1. Introduction

2. Theory and Algorithm

2.1. Least Squares Support Vector Machine Regression

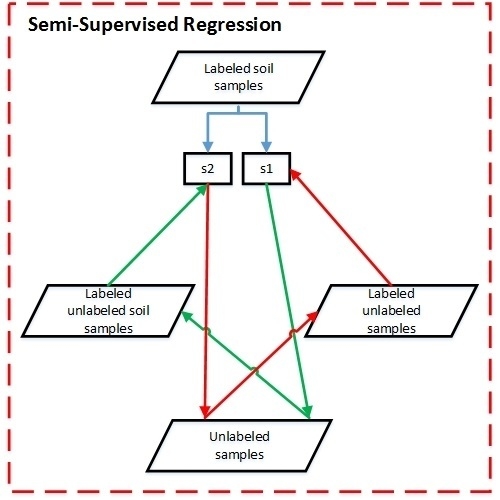

2.2. Semi-Supervised Regression with Co-Training Based on LSSVMR (Co-LSSVMR)

- (1) Copy the labeled set $L$ to $L_1$ and $L_2$.

- (2) Train two LSSVMR regressors, $h_1$ and $h_2$, from $L_1$ and $L_2$ with RBF and RBF4 as their kernel functions, respectively.

- (3) Obtain the labeling sets $U_1$ and $U_2$ from the unlabeled set $U$ using $h_1$ and $h_2$, respectively.

- (4) Add the most confidently labeled sample $x_1$ of $U_1$ to $L_2$, and remove $x_1$ from $U_1$ and $U_2$. $x_1$ is the sample that results in the largest reduction of the RMSECV of $h_1$.

- (5) Add the most confidently labeled sample $x_2$ of $U_2$ to $L_1$, and remove $x_2$ from $U_1$ and $U_2$. $x_2$ is the sample that results in the largest reduction of the RMSECV of $h_2$.

- (6) Retrain $h_1$ and $h_2$, and update the labeling values of $U_1$ and $U_2$ with $h_1$ and $h_2$, respectively.

- (7) Repeat Steps (4)–(6) until neither $L_1$ nor $L_2$ changes.

- (8) Select a refined model for each regressor ($h_1$ and $h_2$) according to the model selection criterion.

- (9) For a sample to be estimated, take the average of the estimations of the two refined models as the final estimation.
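The steps above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the authors' implementation: the class `LSSVMR`, the functions `co_lssvmr` and `predict_avg`, and the hyperparameter values are hypothetical names and settings chosen for the example. The second regressor uses an RBF kernel with a different bandwidth as a stand-in for RBF4, whose definition is not given in this section. RMSECV is taken here to be the leave-one-out RMSE, computed in closed form from the LS-SVM linear system. Step (8) is omitted because the model selection criterion is not specified here, so the regressors from the last iteration are returned.

```python
import numpy as np

def rbf(A, B, sigma2):
    """Gaussian RBF kernel matrix between the rows of A and the rows of B."""
    d2 = ((A[:, None, :] - B[None, :, :]) ** 2).sum(-1)
    return np.exp(-d2 / sigma2)

class LSSVMR:
    """Least squares SVM regression with a bias term (illustrative only)."""
    def __init__(self, sigma2, gamma=10.0):
        self.sigma2, self.gamma = sigma2, gamma

    def fit(self, X, y):
        n = len(y)
        # Linear system [[K + I/gamma, 1], [1^T, 0]] [alpha; b] = [y; 0]
        C = np.zeros((n + 1, n + 1))
        C[:n, :n] = rbf(X, X, self.sigma2) + np.eye(n) / self.gamma
        C[:n, n] = C[n, :n] = 1.0
        Cinv = np.linalg.inv(C)
        sol = Cinv @ np.append(y, 0.0)
        self.X, self.alpha, self.b = X, sol[:n], sol[n]
        # Closed-form leave-one-out residuals: alpha_i / (C^-1)_ii
        loo = self.alpha / np.diag(Cinv)[:n]
        self.rmsecv = float(np.sqrt(np.mean(loo ** 2)))
        return self

    def predict(self, X):
        return rbf(X, self.X, self.sigma2) @ self.alpha + self.b

def co_lssvmr(XL, yL, XU, sig2=(1.0, 4.0), gamma=10.0, max_iter=20):
    # Step 1: two copies of the labeled set, L1 and L2
    L = [(XL.copy(), yL.copy()), (XL.copy(), yL.copy())]
    # Step 2: two regressors with different kernels (assumed stand-ins)
    h = [LSSVMR(s, gamma).fit(*l) for s, l in zip(sig2, L)]
    pool = XU.copy()  # one shared pool plays the role of U1 and U2
    for _ in range(max_iter):
        changed = False
        for j in (0, 1):  # Steps 4 and 5
            if len(pool) == 0:
                break
            pseudo = h[j].predict(pool)  # Steps 3 and 6: pseudo-labels from h_j
            Xj, yj = L[j]
            # RMSECV of h_j after tentatively adding each pseudo-labeled sample
            cand = [LSSVMR(sig2[j], gamma)
                    .fit(np.vstack([Xj, pool[i:i + 1]]),
                         np.append(yj, pseudo[i])).rmsecv
                    for i in range(len(pool))]
            best = int(np.argmin(cand))
            if cand[best] < h[j].rmsecv:  # keep only samples that reduce RMSECV
                k = 1 - j  # the sample goes to the other regressor's set
                L[k] = (np.vstack([L[k][0], pool[best:best + 1]]),
                        np.append(L[k][1], pseudo[best]))
                pool = np.delete(pool, best, axis=0)
                changed = True
        if not changed:  # Step 7: stop when neither labeled set changes
            break
        h = [LSSVMR(s, gamma).fit(*l) for s, l in zip(sig2, L)]  # Step 6
    return h

def predict_avg(h, X):
    # Step 9: the final estimate is the mean of the two regressors' estimates
    return (h[0].predict(X) + h[1].predict(X)) / 2.0

# Synthetic data for demonstration only (not soil spectra)
rng = np.random.default_rng(0)
X = rng.uniform(-3, 3, size=(60, 1))
y = np.sin(X[:, 0]) + 0.1 * rng.normal(size=60)
models = co_lssvmr(X[:15], y[:15], X[15:45])
pred = predict_avg(models, X[45:])
```

Refitting a model for every candidate sample is the simplest way to score confidence, and it costs one matrix inversion per candidate. That is affordable for a few hundred samples but would need an incremental update for larger unlabeled pools.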

3. Materials and Methods

3.1. Study Area and Field Sampling

3.2. Laboratory Analyses and Measurements

3.3. Spectral Preprocessing and Outlier Detection

3.4. Model Calibration

3.5. Model Evaluation and Comparison

4. Results

4.1. Descriptive Statistics and Reflectance Spectra of Soil Samples

4.2. Sensitivity to the Percentage of Labeled Samples

4.3. Sensitivity to the Number of Labeled Samples

4.4. Sensitivity to the Number of Unlabeled Samples

5. Discussion

6. Conclusions

- (1) Co-LSSVMR generally produces better estimations than LSSVMR when the number of labeled samples is not excessively small (>50), and its gains in accuracy over LSSVMR can exceed 20%.

- (2) SSR requires fewer labeled samples to produce estimations of a given accuracy.

- (3) The usefulness of SSR is sensitive to the numbers of labeled and unlabeled samples. SSR is more likely to improve estimation accuracy when the number of labeled samples is neither excessively small nor excessively large, and when sufficient unlabeled samples are available.

Acknowledgments

Author Contributions

Conflicts of Interest

Appendix A. Least Squares Support Vector Machine Regression (LSSVMR)


| Dataset | N | Min | Max | Mean | Median | Q1 | Q3 | Std. | Skew | Kurtosis |
|---|---|---|---|---|---|---|---|---|---|---|
| Whole | 246 | 0.76 | 45.73 | 11.51 | 10.04 | 5.43 | 15.85 | 6.87 | 1.01 | −0.83 |
| Calibration | 164 | 0.76 | 45.73 | 11.64 | 10.31 | 6.17 | 15.96 | 6.99 | 1.26 | 2.74 |
| Validation | 82 | 2.35 | 27.46 | 11.25 | 9.60 | 8.11 | 20.48 | 6.67 | 0.65 | 1.28 |

© 2017 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Liu, H.; Shi, T.; Chen, Y.; Wang, J.; Fei, T.; Wu, G. Improving Spectral Estimation of Soil Organic Carbon Content through Semi-Supervised Regression. Remote Sens. 2017, 9, 29. https://doi.org/10.3390/rs9010029