Reproducibility and Practical Adoption of GEOBIA with Open-Source Software in Docker Containers

Abstract

1. Introduction

1.1. Motivation

1.2. Reproducible Research

1.3. FOSS for GEOBIA

1.4. Balancing Reproducibility and Customizability for Practical Adoption

1.5. Contribution and Overview

2. Materials and Methods

2.1. Data

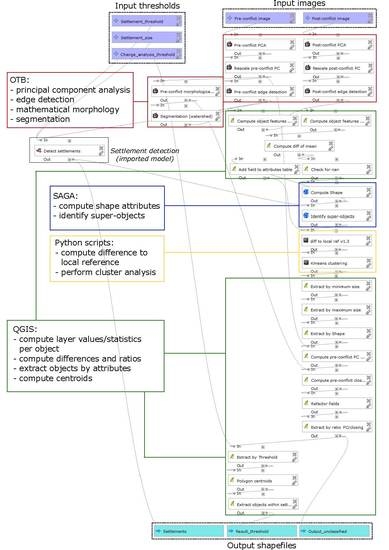

2.2. Example Analysis: Conflict Damage Assessment

2.2.1. Summary

2.2.2. Detect Settlement Areas

2.2.3. Change Analysis within Settlement Areas

2.2.4. Analysis Output

2.3. Implementation and Packaging of QGIS-Based Workflow

2.3.1. Development in the QGIS Modeler

2.3.2. Workspace Preparation

- a subdirectory, data, containing the two georeferenced data files in TIFF format

- a Python script file, model.py, which runs the actual model through the QGIS Python API (Application Programming Interface), following the standalone-script approach described in [61]
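The workspace layout listed above is simple enough to check automatically before it is copied into a container image. The Python sketch below is a hypothetical pre-build helper: the function name check_workspace and the exact rules (exactly two .tif/.tiff files under data, a model.py at the top level) are assumptions drawn from the list. It only inspects the file system and does not run the model or call the QGIS API.

```python
from pathlib import Path
from typing import List


def check_workspace(root: str) -> List[str]:
    """List problems with a workspace laid out as described above (hypothetical helper).

    Expected (assumed) layout:
        <root>/data/       two georeferenced TIFF files (.tif or .tiff)
        <root>/model.py    script that runs the model via the QGIS Python API
    An empty list means the layout matches.
    """
    problems: List[str] = []
    base = Path(root)
    data_dir = base / "data"
    if not data_dir.is_dir():
        problems.append("missing subdirectory 'data'")
    else:
        # Count regular files with a TIFF extension, case-insensitively.
        tiffs = [p for p in data_dir.iterdir()
                 if p.is_file() and p.suffix.lower() in (".tif", ".tiff")]
        if len(tiffs) != 2:
            problems.append(f"expected 2 TIFF files in 'data', found {len(tiffs)}")
    if not (base / "model.py").is_file():
        problems.append("missing script 'model.py'")
    return problems
```

For example, calling check_workspace(".") from the workspace root returns an empty list when the layout matches, and one message per missing or unexpected item otherwise.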

2.3.3. Containerization of the Workspace and Runtime Environment

2.4. InterIMAGE-Based Analysis

3. Results

3.1. Running the Container: Command Line Interface

3.2. Running the Container: Graphical User Interface

3.3. Running InterIMAGE inside Container

3.4. Reproducibility Package

3.5. Reproducible GEOBIA

4. Discussion

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| MDPI | Multidisciplinary Digital Publishing Institute |
| GIS | Geographic Information System |
| GEOBIA | Geographic Object-Based Image Analysis |
| OBIA | Object-Based Image Analysis |
| FOSS | Free and Open-Source Software |
| LULC | Land Use and Land Cover |
| LIDAR | Light Detection and Ranging |
| GUI | Graphical User Interface |
| AAAS | American Association for the Advancement of Science |
| PCA | Principal Component Analysis |
| OTB | Orfeo ToolBox |
| SAGA | System for Automated Geoscientific Analyses |
| API | Application Programming Interface |
| XVFB | X Window Virtual Frame Buffer |
| UAS | Unmanned Aerial Systems |
| HTTP | Hypertext Transfer Protocol |
| HTML | Hypertext Markup Language |

Appendix A

| Object Property | Rule or Threshold | Analysis Step |
|---|---|---|
| Standard deviation of edge layer (pre-conflict) of seed segments | ≥ 0.3 | Settlement detection |
| Proximity of seed segments to each other | ≤ 100 m | Settlement detection |
| Number of seed segments (per settlement) | ≥ 2 | Settlement detection |
| Optional: size of settlement area (after merging of seeds) | ≥ 0 (no default threshold set in this example) | Settlement detection |
| Existence of super-object of class settlement | True (super-object ID > 0) | Change analysis |
| Change of edge intensity | Difference to local reference value ≥ 0.33 | Change analysis |
| Minimum size (area) | 10 m² | Change analysis |
| Maximum size (area) | 60 m² | Change analysis |
| Shape index | ≤ 1.55 | Change analysis |
| Impact of morphological closing (ratio of standard deviation of pre-conflict layer values per object before and after morphological closing) | ≤ 5.5 | Change analysis |
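The change-analysis rules in the table above amount to a conjunction of attribute filters on each hut-level object. The Python sketch below applies them to a single object. It assumes hypothetical attribute names, inclusive size bounds, and the common shape index definition P / (2√(πA)), which equals 1 for a circle; the values produced by SAGA's Polygon shape indices tool may be defined differently. The unsupervised k-means step of the workflow is not reproduced here.

```python
import math
from dataclasses import dataclass


@dataclass
class HutObject:
    """Attributes of one candidate object from the hut-level segmentation.

    All field names are hypothetical stand-ins for attribute-table columns.
    """
    settlement_id: int        # ID of containing settlement super-object (0 = none)
    change_vs_local: float    # difference of edge-intensity change to local reference
    area_m2: float            # object area in square metres
    perimeter_m: float        # object perimeter in metres
    sd_before_closing: float  # std. dev. of pre-conflict layer within the object
    sd_after_closing: float   # same statistic after morphological closing


def shape_index(perimeter: float, area: float) -> float:
    """Assumed definition P / (2 * sqrt(pi * A)); equals 1.0 for a circle."""
    return perimeter / (2.0 * math.sqrt(math.pi * area))


def is_destroyed_dwelling(obj: HutObject) -> bool:
    """Return True if the object passes every change-analysis rule in the table."""
    if obj.settlement_id <= 0:                 # must lie inside a settlement
        return False
    if not 10.0 <= obj.area_m2 <= 60.0:        # min/max size, bounds assumed inclusive
        return False
    if shape_index(obj.perimeter_m, obj.area_m2) > 1.55:
        return False
    if obj.sd_after_closing <= 0.0:            # guard against division by zero (assumption)
        return False
    closing_ratio = obj.sd_before_closing / obj.sd_after_closing
    if closing_ratio > 5.5:                    # impact of morphological closing
        return False
    return obj.change_vs_local >= 0.33         # change relative to local reference
```

For example, a 5 m × 5 m object (25 m², perimeter 20 m) inside settlement 3, with a closing ratio of 2.0 and a change value of 0.4, passes all rules; its shape index is about 1.13.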

| Analysis Step | Algorithm | Stage of Workflow |
|---|---|---|
| Extract first principal component of pre- and post-conflict image | OTB:DimensionalityReduction (pca) | Image processing |
| Rescale both principal components to 8-bit | OTB:Rescale Image | Image processing |
| Edge detection on both layers | OTB:EdgeExtraction (touzi) | Image processing |
| Morphological closing on pre-conflict layer | OTB:GrayScaleMorphologicalOperation (closing) | Image processing |
| Determine extent of raster layer | QGIS:Raster layer bounds | Settlement detection |
| Create chessboard segmentation within extent | QGIS:Create grid | Settlement detection |
| Compute standard deviation of edge layer within segments | QGIS:Zonal statistics | Settlement detection |
| Extract settlement candidate segments according to standard deviation of edge layer | QGIS:Extract by attribute | Settlement detection |
| Create settlement area objects by growing and merging candidate segments that are within proximity (100 m max) of each other (ignore isolated candidates) | QGIS:Fixed distance buffer, QGIS:Multipart to singleparts, SAGA:Polygon shape indices, QGIS:Extract by attribute, QGIS:Fill holes | Settlement detection |
| Create IDs in attribute table and specify field name | QGIS:Add autoincremental field, QGIS:Refactor fields | Settlement detection |
| Create objects on level of single huts | OTB:Segmentation (watershed) | Change analysis |
| Compute mean of edge intensity within objects (pre- and post-conflict) | QGIS:Zonal statistics | Change analysis |
| Calculate difference in mean edge density between pre- and post-conflict (check for NULL) | QGIS:Adv. Python Field Calculator, QGIS:Extract by attribute | Change analysis |
| Compute shape and size properties of objects | SAGA:Polygon shape indices | Change analysis |
| For all sub-objects, get IDs of containing super-objects (settlements) | SAGA:Identity | Change analysis |
| Compute local reference (of change) within settlements and difference of sub-objects to this reference | Python:Difference to local reference v1.3 | Change analysis |
| Compute unsupervised clustering regarding change | Python:Kmeans clustering v2.3 | Change analysis |
| Extract objects by minimum and maximum size | QGIS:Extract by attribute | Change analysis |
| Extract objects by their shape index | QGIS:Extract by attribute | Change analysis |
| Compute statistics of pre-conflict layer per object before and after morphological closing | QGIS:Zonal statistics | Change analysis |
| Calculate ratio of standard deviation values of pre-conflict layer before and after morphological closing | QGIS:Refactor fields | Change analysis |
| Extract objects by ratio value | QGIS:Extract by attribute | Change analysis |
| Extract objects by change value (difference in mean edge density) using pre-defined threshold | QGIS:Extract by attribute | Change analysis |
| Compute centroids of objects extracted by threshold and within settlements | QGIS:Polygon centroids, QGIS:Extract by attribute | Change analysis |
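The settlement-detection steps in the table above (select seed segments by the standard deviation of the edge layer, merge seeds that lie within 100 m of each other, ignore isolated candidates) can be approximated without a GIS by single-linkage grouping of chessboard cells. The Python sketch below does this with a union-find structure on axis-aligned grid cells. It stands in for the buffer, multipart-to-singleparts and extract steps rather than reproducing them; the function names and the box representation are hypothetical.

```python
import math
from typing import Dict, List, Tuple

# Axis-aligned chessboard cell: (xmin, ymin, xmax, ymax) in metres (assumed representation).
Box = Tuple[float, float, float, float]


def box_distance(a: Box, b: Box) -> float:
    """Shortest Euclidean distance between two axis-aligned boxes (0 if they touch or overlap)."""
    dx = max(0.0, b[0] - a[2], a[0] - b[2])
    dy = max(0.0, b[1] - a[3], a[1] - b[3])
    return math.hypot(dx, dy)


def group_settlements(cells: List[Box], edge_sd: List[float],
                      min_sd: float = 0.3, max_gap: float = 100.0,
                      min_seeds: int = 2) -> List[List[int]]:
    """Group seed cells into settlement areas; returns one list of cell indices per settlement.

    1. Seeds are cells whose edge-layer standard deviation is >= min_sd.
    2. Seeds within max_gap metres of each other share a group (single linkage via union-find).
    3. Groups with fewer than min_seeds seeds (isolated candidates) are dropped.
    """
    seeds = [i for i, sd in enumerate(edge_sd) if sd >= min_sd]
    parent: Dict[int, int] = {i: i for i in seeds}

    def find(i: int) -> int:
        while parent[i] != i:
            parent[i] = parent[parent[i]]  # path halving keeps trees shallow
            i = parent[i]
        return i

    for n, i in enumerate(seeds):
        for j in seeds[n + 1:]:
            if box_distance(cells[i], cells[j]) <= max_gap:
                parent[find(i)] = find(j)  # merge the two groups

    groups: Dict[int, List[int]] = {}
    for i in seeds:
        groups.setdefault(find(i), []).append(i)
    return sorted((g for g in groups.values() if len(g) >= min_seeds), key=lambda g: g[0])
```

If seeds were instead buffered by half the proximity distance (50 m) and overlapping buffers merged, the same pairs of cells would be joined as by the distance test here, because two buffers of width d intersect exactly when the shapes are at most 2d apart. The pairwise loop is quadratic in the number of seeds, which is acceptable for a sketch but not for large scenes.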

References

- Bailey, C.W. What Is Open Access? Available online: http://digital-scholarship.org/cwb/WhatIsOA.htm (accessed on 19 December 2016).

- European Commission. Horizon 2020 Open Science (Open Access). Available online: https://ec.europa.eu/programmes/horizon2020/en/h2020-section/open-science-open-access (accessed on 19 December 2016).

- The Commission High Level Expert Group on the European Open Science Cloud. Realising the European Open Science Cloud; European Commission: Brussels, Belgium, 2016.

- Nosek, B.A.; Alter, G.; Banks, G.C.; Borsboom, D.; Bowman, S.D.; Breckler, S.J.; Buck, S.; Chambers, C.D.; Chin, G.; Christensen, G.; et al. Promoting an open research culture. Science 2015, 348, 1422–1425.

- Peng, R.D. Reproducible research and Biostatistics. Biostatistics 2009, 10, 405–408.

- Markowetz, F. Five selfish reasons to work reproducibly. Genome Biol. 2015, 16, 274.

- Kraker, P.; Dörler, D.; Ferus, A.; Gutounig, R.; Heigl, F.; Kaier, C.; Rieck, K.; Šimukovič, E.; Vignoli, M.; Aspöck, E.; et al. The Vienna Principles: A Vision for Scholarly Communication in the 21st Century. Mitteilungen der Vereinigung Österreichischer Bibliothekarinnen und Bibliothekare 2016, 3, 436–446.

- Sandve, G.K.; Nekrutenko, A.; Taylor, J.; Hovig, E. Ten Simple Rules for Reproducible Computational Research. PLoS Comput. Biol. 2013, 9, e1003285.

- Gentleman, R.; Temple Lang, D. Statistical Analyses and Reproducible Research. J. Comput. Graph. Stat. 2007, 16, 1–23.

- Goodman, S.N.; Fanelli, D.; Ioannidis, J.P.A. What does research reproducibility mean? Sci. Transl. Med. 2016, 8, 341ps12.

- Amitrano, D.; Di Martino, G.; Iodice, A.; Riccio, D.; Ruello, G. A New Framework for SAR Multitemporal Data RGB Representation: Rationale and Products. IEEE Trans. Geosci. Remote Sens. 2015, 53, 117–133.

- Howe, B. Virtual Appliances, Cloud Computing, and Reproducible Research. Comput. Sci. Eng. 2012, 14, 36–41.

- Peng, R.D. Reproducible Research in Computational Science. Science 2011, 334, 1226–1227.

- Lucieer, V.; Hill, N.A.; Barrett, N.S.; Nichol, S. Do marine substrates ‘look’ and ‘sound’ the same? Supervised classification of multibeam acoustic data using autonomous underwater vehicle images. Estuar. Coast. Shelf Sci. 2013, 117, 94–106.

- Dupuy, S.; Barbe, E.; Balestrat, M. An Object-Based Image Analysis Method for Monitoring Land Conversion by Artificial Sprawl Use of RapidEye and IRS Data. Remote Sens. 2012, 4, 404–423.

- Tormos, T.; Dupuy, S.; Van Looy, K.; Barbe, E.; Kosuth, P. An OBIA for fine-scale land cover spatial analysis over broad territories: Demonstration through riparian corridor and artificial sprawl studies in France. In Proceedings of the GEOBIA 2012: 4th International Conference on GEographic Object-Based Image Analysis, Rio de Janeiro, Brazil, 7–9 May 2012.

- Stodden, V.; Miguez, S.; Seiler, J. ResearchCompendia.org: Cyberinfrastructure for Reproducibility and Collaboration in Computational Science. Comput. Sci. Eng. 2015, 17, 12–19.

- Bechhofer, S.; Buchan, I.; De Roure, D.; Missier, P.; Ainsworth, J.; Bhagat, J.; Couch, P.; Cruickshank, D.; Delderfield, M.; Dunlop, I.; et al. Why linked data is not enough for scientists. Future Gen. Comput. Syst. 2013, 29, 599–611.

- Chirigati, F.; Rampin, R.; Shasha, D.; Freire, J. ReproZip: Computational Reproducibility With Ease. In Proceedings of the SIGMOD 2016 International Conference on Management of Data, San Francisco, CA, USA, 26 June–1 July 2016.

- Thain, D.; Ivie, P.; Meng, H. Techniques for Preserving Scientific Software Executions: Preserve the Mess or Encourage Cleanliness? In Proceedings of the 12th International Conference on Digital Preservation (iPRES 2015), Chapel Hill, NC, USA, 2–6 November 2015.

- Open Source Initiative. Open Source Case for Business: Advocacy. Available online: https://opensource.org/advocacy/case_for_business.php (accessed on 19 December 2016).

- Wightman, T. What’s keeping you from using open source software? Available online: https://opensource.com/business/13/12/using-open-source-software (accessed on 19 December 2016).

- Salus, P. A Quarter-Century of Unix; Addison-Wesley: Boston, MA, USA, 1994; pp. 52–53.

- Grippa, T.; Lennert, M.; Beaumont, B.; Vanhuysse, S.; Stephenne, N.; Wolff, E. An Open-Source Semi-Automated Processing Chain for Urban OBIA Classification. In Proceedings of the GEOBIA 2016: Solutions & Synergies, Enschede, The Netherlands, 14–16 September 2016.

- Böck, S.; Immitzer, M.; Atzberger, C. Automated Segmentation Parameter Selection and Classification of Urban Scenes Using Open-Source Software. In Proceedings of the GEOBIA 2016: Solutions & Synergies, Enschede, The Netherlands, 14–16 September 2016.

- Van De Kerchove, R.; Hanson, E.; Wolff, E. Comparing pixel-based and object-based classification methodologies for mapping impervious surfaces in Wallonia using ortho-imagery and LIDAR data. In Proceedings of the GEOBIA 2014: Advancements, Trends and Challenges, Thessaloniki, Greece, 22 May 2014.

- Körting, T.; Fonseca, L.; Câmara, G. GeoDMA—Geographic Data Mining Analyst. Comput. Geosci. 2013, 57, 133–145.

- Costa, G.; Feitosa, R.; Fonseca, L.; Oliveira, D.; Ferreira, R.; Castejon, E. Knowledge-based interpretation of remote sensing data with the InterImage system: Major characteristics and recent developments. In Proceedings of the GEOBIA 2010: Geographic Object-Based Image Analysis, Ghent, Belgium, 29 June–2 July 2010.

- Antunes, R.; Happ, P.; Bias, E.; Brites, R.; Costa, G.; Feitosa, R. An Object-Based Image Interpretation Application on Cloud Computing Infrastructure. In Proceedings of the GEOBIA 2016: Solutions & Synergies, Enschede, The Netherlands, 14–16 September 2016.

- Blaschke, T.; Hay, G.; Kelly, M.; Lang, S.; Hofmann, P.; Addink, E.; Feitosa, R.; van der Meer, F.; van der Werff, H.; van Coillie, F.; et al. Geographic Object-Based Image Analysis—Towards a new paradigm. ISPRS J. Photogramm. Remote Sens. 2014, 87, 180–191.

- Drǎguţ, L.; Tiede, D.; Levick, S. ESP: A tool to estimate scale parameter for multiresolution image segmentation of remotely sensed data. Int. J. Geograph. Inf. Sci. 2010, 24, 859–871.

- Drǎguţ, L.; Csillik, O.; Eisank, C.; Tiede, D. Automated parameterisation for multi-scale image segmentation on multiple layers. ISPRS J. Photogramm. Remote Sens. 2014, 88, 119–127.

- Martha, T.; Kerle, N.; van Westen, C. Segment Optimization and Data-Driven Thresholding for Knowledge-Based Landslide Detection by Object-Based Image Analysis. IEEE Trans. Geosci. Remote Sens. 2011, 49, 4928–4943.

- Hofmann, P. Defuzzification Strategies for Fuzzy Classifications of Remote Sensing Data. Remote Sens. 2016, 8, 467.

- Kohli, D.; Warwadekar, P.; Kerle, N.; Sliuzas, R.; Stein, A. Transferability of Object-Oriented Image Analysis Methods for Slum Identification. Remote Sens. 2013, 5, 4209–4228.

- Hofmann, P.; Blaschke, T.; Strobl, J. Quantifying the robustness of fuzzy rule sets in object-based image analysis. Int. J. Remote Sens. 2011, 32, 7359–7381.

- Knoth, C.; Pebesma, E. Detecting destruction in conflict areas in Darfur. In Proceedings of the GEOBIA 2014: Advancements, Trends and Challenges, Thessaloniki, Greece, 22 May 2014.

- Knoth, C.; Pebesma, E. Detecting dwelling destruction in Darfur through object-based change analysis of very high-resolution imagery. Int. J. Remote Sens. 2017, 38, 273–295.

- Tiede, D.; Füreder, P.; Lang, S.; Hölbling, D.; Zeil, P. Automated Analysis of Satellite Imagery to provide Information Products for Humanitarian Relief Operations in Refugee Camps - from Scientific Development towards Operational Services. Photogramm. Fernerkund. Geoinf. 2013, 2013, 185–195.

- Giada, S.; De Groeve, T.; Ehrlich, D.; Soille, P. Information extraction from very high resolution satellite imagery over Lukole refugee camp, Tanzania. Int. J. Remote Sens. 2003, 24, 4251–4266.

- Al-Khudhairy, D.; Caravaggi, I.; Giada, S. Structural damage assessments from Ikonos data using change detection, Object-level Segmentation, and Classification Techniques. Photogramm. Eng. Remote Sens. 2005, 71, 825–837.

- Witmer, F. Remote sensing of violent conflict: Eyes from above. Int. J. Remote Sens. 2015, 36, 2326–2352.

- Wolfinbarger, S. Remote Sensing as a Tool for Human Rights Fact-Finding. In The Transformation of Human Rights Fact-Finding; Alston, P., Knuckey, S., Eds.; Oxford University Press: New York, NY, USA, 2016; pp. 463–477.

- Knoth, C.; Nüst, D. Enabling reproducible OBIA with open-source software in docker containers. In Proceedings of the GEOBIA 2016: Solutions & Synergies, Enschede, The Netherlands, 14–16 September 2016; Kerle, N., Gerke, M., Lefèvre, S., Eds.; University of Twente Faculty of Geo-Information and Earth Observation (ITC): Enschede, The Netherlands, 2016.

- American Association for the Advancement of Science. Appendix A: Darfur, Sudan and Chad Imagery Characteristics. Available online: http://www.aaas.org/page/appendix-darfur-sudan-and-chad-imagery-characteristics (accessed on 19 December 2016).

- Lang, S.; Tiede, D.; Hölbling, D.; Füreder, P.; Zeil, P. Earth observation (EO)-based ex post assessment of internally displaced person (IDP) camp evolution and population dynamics in Zam Zam, Darfur. Int. J. Remote Sens. 2010, 31, 5709–5731.

- Wei, Y.; Zhao, Z.; Song, J. Urban building extraction from high-resolution satellite panchromatic image using clustering and edge detection. In Proceedings of the 2004 IEEE International Geoscience and Remote Sensing Symposium, Anchorage, AK, USA, 20–24 September 2004.

- De Kok, R.; Wezyk, P. Principles of full autonomy in image interpretation. The basic architectural design for a sequential process with image objects. In Object-Based Image Analysis. Spatial Concepts for Knowledge-Driven Remote Sensing Applications; Blaschke, T., Lang, S., Hay, G.J., Eds.; Springer: Berlin, Germany, 2008; pp. 697–710.

- Touzi, R.; Lopes, A.; Bousquet, P. A statistical and geometrical edge detector for SAR images. IEEE Trans. Geosci. Remote Sens. 1988, 26, 764–773.

- Tewkesbury, A.P.; Comber, A.J.; Tate, N.J.; Lamb, A.; Fisher, P.F. A critical synthesis of remotely sensed optical image change detection techniques. Remote Sens. Environ. 2015, 160, 1–14.

- OTB Development Team. The ORFEO Tool Box Software Guide. Available online: https://www.orfeo-toolbox.org//packages/OTBSoftwareGuide.pdf (accessed on 27 June 2016).

- Sulik, J.; Edwards, S. Feature extraction for Darfur: Geospatial applications in the documentation of human rights abuses. Int. J. Remote Sens. 2010, 31, 2521–2533.

- Lang, S.; Blaschke, T. Landschaftsanalyse Mit GIS; Ulmer: Stuttgart, Germany, 2007; pp. 241–243.

- Forman, R.; Godron, M. Landscape Ecology; Wiley: New York, NY, USA, 1986; pp. 106–108.

- QGIS Development Team. QGIS Geographic Information System. Available online: http://qgis.osgeo.org (accessed on 24 June 2016).

- Graser, A.; Olaya, V. Processing: A Python Framework for the Seamless Integration of Geoprocessing Tools in QGIS. ISPRS Int. J. Geo-Inf. 2015, 4, 2219–2245.

- Rossum, G. Python Reference Manual. Available online: http://www.python.org/ (accessed on 19 December 2016).

- Inglada, J.; Christophe, E. The Orfeo Toolbox remote sensing image processing software. In Proceedings of the 2009 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Cape Town, South Africa, 12–17 July 2009.

- Conrad, O.; Bechtel, B.; Bock, M.; Dietrich, H.; Fischer, E.; Gerlitz, L.; Wehberg, J.; Wichmann, V.; Böhner, J. System for Automated Geoscientific Analyses (SAGA) v. 2.1.4. Geosci. Model Dev. 2015, 8, 1991–2007.

- Jones, E.; Oliphant, T.; Peterson, P. SciPy: Open Source Scientific Tools for Python. Available online: http://www.scipy.org/ (accessed on 27 June 2016).

- PyQGIS Developer Cookbook. Using PyQGIS in standalone scripts. Available online: http://docs.qgis.org/testing/en/docs/pyqgis_developer_cookbook/intro.html#using-pyqgis-in-standalone-scripts (accessed on 27 June 2016).

- Nüst, D. Docker Container for QGIS Models on GitHub. Available online: https://github.com/nuest/docker-qgis-model (accessed on 24 November 2016).

- Loukides, M. What is DevOps? Infrastructure as Code; O’Reilly Media: Sebastopol, CA, USA, 2012.

- Boettiger, C. An introduction to Docker for reproducible research, with examples from the R environment. ACM SIGOPS Oper. Syst. Rev. 2015, 49, 71–79.

- Stavish, T. Docker for QGIS. Available online: https://hub.docker.com/r/toddstavish/qgis (accessed on 24 November 2016).

- Stavish, T. Docker for OTB. Available online: https://hub.docker.com/r/toddstavish/orfeo_toolbox (accessed on 24 November 2016).

- Sutton, T. Docker for QGIS Desktop. Available online: https://hub.docker.com/r/kartoza/qgis-desktop (accessed on 24 November 2016).

- GNU Project. GNU Bash. Available online: https://www.gnu.org/software/bash/ (accessed on 24 November 2016).

- Wiggins, D.P. XVFB Documentation. Available online: https://www.x.org/releases/X11R7.6/doc/man/man1/Xvfb.1.xhtml (accessed on 24 November 2016).

- Baatz, M.; Schäpe, A. Multiresolution segmentation—An optimization approach for high quality multi-scale image segmentation. In Angewandte Geographische Informations-Verarbeitung XII; Strobl, J., Blaschke, T., Griesebner, G., Eds.; Wichmann: Karlsruhe, Germany, 2000; pp. 12–23.

- Nüst, D.; Knoth, C. Docker-Interimage: Running the Latest InterIMAGE Linux Release in A Docker Container with User Interface. Available online: http://zenodo.org/record/55083 (accessed on 6 July 2016).

- Antunes, R.; Bias, E.; Brites, R.; Costa, G. Integration of Open-Source Tools for Object-Based Monitoring of Urban Targets. In Proceedings of the GEOBIA 2016: Solutions & Synergies, Enschede, The Netherlands, 14–16 September 2016.

- Passo, D.; Bias, E.; Brites, R.; Costa, G.; Antunes, R. Susceptibility mapping of linear erosion processes using object-based analysis of VHR images. In Proceedings of the GEOBIA 2016: Solutions & Synergies, Enschede, The Netherlands, 14–16 September 2016.

- Friis, M. Docker on Windows Server 2016 Technical Preview 5. Available online: https://blog.docker.com/2016/04/docker-windows-server-tp5/ (accessed on 19 December 2016).

- Nüst, D.; Knoth, C. Data and code for: Reproducibility and Practical Adoption of GEOBIA with Open-Source Software in Docker Containers. Available online: https://doi.org/10.5281/zenodo.168370 (accessed on 19 December 2016).

- GNU Project. What is free software? Available online: https://www.gnu.org/philosophy/free-sw.en.html (accessed on 24 November 2016).

- Marwick, B. 1989-Excavation-Report-Madjebebe. Available online: https://doi.org/10.6084/m9.figshare.1297059.v2 (accessed on 24 November 2016).

- Ram, K. Git can facilitate greater reproducibility and increased transparency in science. Source Code Biol. Med. 2013, 8, 7.

- Nüst, D.; Konkol, M.; Schutzeichel, M.; Pebesma, E.; Kray, C.; Przibytzin, H.; Lorenz, J. Opening the Publication Process with Executable Research Compendia. D-Lib Mag. 2017.

- Tiede, D.; Lang, S.; Hölbling, D.; Füreder, P. Transferability of OBIA rulesets for IDP camp analysis in Darfur. In Proceedings of the GEOBIA 2010: Geographic Object-Based Image Analysis, Ghent, Belgium, 29 June–2 July 2010.

- Baker, M. Muddled meanings hamper efforts to fix reproducibility crisis. Nat. News 2016.

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license ( http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Knoth, C.; Nüst, D. Reproducibility and Practical Adoption of GEOBIA with Open-Source Software in Docker Containers. Remote Sens. 2017, 9, 290. https://doi.org/10.3390/rs9030290
