1. Introduction

Accuracy assessment is an acknowledged requirement in the process of creating and distributing thematic maps [1]. It is a necessary condition for the comparison of research results and the appropriate use of map products. However, despite the fact that the need to carry out (and document) accuracy assessment is recognized by the scientific community, further attention to rigorous assessment is still needed [2]. A general framework of good practices for accuracy assessment has been described in [3,4] in order to guide the validation effort. However, as mentioned in [5], Geographic Object-Based Image Analysis (GEOBIA) validation has its own characteristics. It is, therefore, necessary to adjust some of the good practices of the general framework for the specific quality assessment of GEOBIA results. These practices depend on the type of geospatial database that is going to be extracted from the image analysis.

There are at least three important stages in the assessment process leading to the design of a geographic database: (i) observation, (ii) relating the observation to a conceptual model and (iii) representing the data in formal terms [6]. In the first stage, the surveyor must decide whether the observation is a clearly defined or definable entity, or if it is a continuum, i.e., a smoothly-varying surface. The first situation refers to an exact entity, called the discrete entity in Burrough [6] or the spatial object in Bian [7]. In this study, they will be named spatial entities. The second situation refers to a (continuous) field, and it is not well represented by GEOBIA [7]. However, viewing the world as either a continuum or as a set of discrete entities is always a matter of judgment. At the level of the empirical, one-to-one scale geographic world, it is indeed not possible to decide if the world is made up of discrete, indivisible elementary entities, or if it is a continuum with different properties at different locations. On one side, houses, trees and humans are the non-subdivisible smallest units for most purposes of geographic-scale modeling and study; on the other side, extensive entities such as oceans, prairies, forests and geological formations may be subdivided within very wide limits and still maintain their identity [8]. A third category has therefore been added, which is called spatial region [7] or the object with an indeterminate boundary [6]. The term spatial region used in this paper represents a mass of individuals that can be conceptualized both as a continuous field and as a discrete entity, which is often the case for land cover. This duality is also found for their coding in a database: they can be discretized as vector polygons with boundaries based on a general agreement (which are represented as lines without width, but which are fuzzy in reality), or they can be represented as an arbitrary grid specifying the proportion of each individual entity at each pixel (without boundaries) [7]. The three conceptual models (spatial entities, spatial regions and continuous field) are illustrated in Figure 1. The third model (continuous field) will not be discussed further in this paper.

GEOBIA is often considered a new image classification paradigm in remote sensing [9]. It encompasses a large set of tools that incorporate the knowledge of a set of (usually) adjacent pixels to derive object-based information. GEOBIA is primarily applied to Very High Resolution (VHR) images, where spatial entities/regions are visually composed of many pixels and where it is possible to visually validate them [9]. Most of the time, these groups of pixels (called image-segments in Hay and Castilla [10]) are derived from automated image segmentation (e.g., [11]) or superpixel algorithms [12] before the classification stage. In addition to the frequently-used segmentation/classification workflow, some machine learning algorithms have been developed that recognize groups of pixels as a whole [13], and polygons are sometimes taken from ancillary data (e.g., [14,15]). In any case, GEOBIA relates to the conceptual model of geo-objects, defined as a bounded geographic region that can be identified for a period of time as the referent of a geographic term [16]. Geo-objects, which encompass spatial entities or spatial regions, are represented (and analyzed) as points, lines or (most of the time) polygons. GEOBIA then assigns a single set of attributes to each polygon as a whole, in contrast to pixel-based classification, where each individual pixel is classified. The polygons used in GEOBIA are thus intrinsically considered as homogeneous in terms of the label [10,17,18], even when those labels are fuzzy [19].

According to Bian [7], polygons are a good representation for spatial entities and a reasonable one for spatial regions, which might have indeterminate boundaries. Although many geographic-scale and anthropogenic entities have boundaries, the most precise boundaries existing in the geographic world are immaterial: these are administrative and property boundaries [8]. At the other extreme are the self-defining boundaries of social, cultural and biological territories. Extracting the boundaries is often part of the GEOBIA process, which then contributes to the discretization process. The quality of the boundaries should therefore most of the time be considered in addition to the thematic accuracy.

There is a wide range of applications of GEOBIA, and good validation practices depend on the selected application. In this study, we will focus on thematic (categorical) geographic databases using the polygon representation of spatial entities or regions, as opposed to the pixel-based approach where the support of the analysis is the (sub)pixel captured by a sensor. With those thematic products, where a single category is assigned to each polygon (i.e., thematic maps), the accuracy of the labels assigned to the geo-objects is of primary concern. However, in addition to the thematic accuracy assessment, spatial quality should also be taken into account for GEOBIA [20]. The structural and positional quality of the polygons are therefore addressed, as suggested by [5]. The comparison of segmentation algorithms before the classification stage is, however, outside the scope of this paper, as this study will consider the quality of the final results, notwithstanding the specific numerical methods that were involved for their production. The proposed framework assesses thematic and geometric errors using distinct indices in order to identify the different sources of uncertainty for the derived product. Synthetic indices mixing the two types of errors (e.g., [21,22]) are therefore not discussed.

2. General Accuracy Assessment Framework

The general accuracy assessment framework defined in [3,4] is still applicable to most GEOBIA results. The standard practice consists of reporting the map accuracy as an error matrix when the map and reference classifications are based on categories [23]. Recommendations about the quality assessment are commonly divided into three components that are equally important for drawing rigorous conclusions and that should be reported to end users, namely the analysis, the response design and the sampling design.

Analysis includes the quality indices to be used to address the objectives of the mapping project, along with the method to estimate those indices [4]. Quality indices that are directly interpretable as probabilities of encountering certain types of misclassification errors or correct classifications should be selected in preference to quality indices not interpretable as such [24]. In order to derive unbiased estimates of these indices, the probability of selecting each sampling unit should be considered.

Response design is the protocol for determining the ground condition (reference) classification of a sampled spatial unit (pixel, block or polygon) [25]. The fundamental basis of an accuracy assessment is a location-specific comparison between the map classification and the “reference” classification [1]. The response design includes the choice of a type of sampling unit and the rules that allow the operator to decide if a sampling unit was correctly labeled (or not).

The sampling design is the way a representative subset of the geospatial database is selected to perform the accuracy assessment. In practice, it is indeed impossible to have precise (low uncertainty) and exact (unbiased) information that exhaustively covers the study area [4,25], except when using synthetic data. A subset of the geo-objects is, therefore, selected in order to estimate the accuracy of the map. The subset should be drawn with a probabilistic method, in order to be representative of the whole study area [23]. The sample can be stratified (i) to increase the proportion of samples in error-prone areas in order to reduce the variance of the estimator [26,27] and/or (ii) to balance the number of sampling units for each category in order to avoid large variances of estimation for low frequency classes [4].
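As an illustration of option (ii), the following minimal sketch draws an equal number of polygons at random within each map class and records the inclusion probability of every selected unit, which the analysis step needs in order to remain unbiased. The function name `stratified_sample`, the equal allocation per class and the toy labels are assumptions made for the example; they are not prescribed by the framework.

```python
import random
from collections import defaultdict

def stratified_sample(labels, n_per_class, seed=0):
    """Draw up to n_per_class polygon ids at random within each map class.

    labels: dict mapping polygon id -> map class (used as stratum).
    Returns (sample, inclusion_prob), where inclusion_prob[pid] is the
    probability that polygon pid was selected within its stratum.
    """
    rng = random.Random(seed)
    strata = defaultdict(list)
    for pid, cls in labels.items():
        strata[cls].append(pid)
    sample, inclusion_prob = [], {}
    for cls, ids in sorted(strata.items()):
        k = min(n_per_class, len(ids))
        for pid in rng.sample(sorted(ids), k):
            sample.append(pid)
            inclusion_prob[pid] = k / len(ids)
    return sample, inclusion_prob

# Hypothetical map: 90 forest polygons and 10 water polygons.
labels = {i: ("forest" if i < 90 else "water") for i in range(100)}
sample, prob = stratified_sample(labels, n_per_class=5)
water_ids = [pid for pid in sample if labels[pid] == "water"]
```

With this allocation, the rare class (water) is sampled with a much higher inclusion probability than the frequent one, so the two strata must be weighted accordingly when map-level indices are estimated.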

As discussed above, GEOBIA can address two different conceptual models: spatial regions or spatial entities. Furthermore, GEOBIA can have different goals. In an attempt to provide pragmatic guidelines, four types of GEOBIA applications are considered in this paper:

Wall-to-wall mapping: This is the most common application, which results in thematic maps proposing a complete partition of the study area into classified polygons, such as for land cover or land use maps.

Entity detection: GEOBIA is used to inventory well-defined geo-objects (such as cars [28], buildings [29], single trees [30] or animals [31]). These entities can be sparsely distributed on a background (e.g., cars on a road) or agglutinated (e.g., trees in a dense forest).

Entity delineation: The goal is the delineation of selected spatial entities (e.g., buildings [32], vine parcels [33], crop fields [34]) with a focus on the precision of their boundaries.

Enhanced pixel classification: GEOBIA is used to improve image classification at the pixel level by reducing the within-class variability (speckle removal) or by computing additional characteristics (texture, structure, context) [35,36,37].

In the following sections, specific GEOBIA issues will be addressed with respect to these four types of applications, when relevant. Section 3 focuses on the way to analyze the confusion matrix to estimate relevant quality indices. Section 4 is related to the response design and addresses the choice of the sampling unit (pixel or polygon). The methods to create a subset of polygonal sampling units are then described in Section 5. Finally, Section 6 addresses further issues related to the scale of the GEOBIA application, as well as specific geometric errors linked to the segmentation process.

3. Analysis of Quality Indices

For a pixel-based validation, the proportion of correctly classified pixels (count-based classification accuracy) is equal to the proportion of the area that is correctly classified (area-based map accuracy). In other words, if one knows how many pixels are misclassified, one also knows the area of the map that is misclassified. Indeed, standard validation procedures usually assume that all pixels have the same area (this assumption makes sense at a small scale factor, but a rigorous pixel-based quality assessment over large areas should take into account that some coordinate systems do not preserve equal areas). Polygons, however, do not necessarily have the same area, and their differences in size can be very large in some landscapes (e.g., a landscape including buildings and forests at the same time). In terms of the proportion of the area of the map that is incorrectly classified, an error for a large polygon obviously has more impact than an error for a smaller one. This variable area must be taken into account for an unbiased estimate of some accuracy indices.

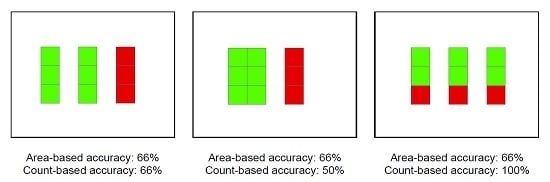

Figure 2 illustrates the differences between count-based and area-based accuracy on simplified examples, and it emphasizes the need to select indices that are meaningful for the purpose of the study. On one side, count-based accuracy (Section 3.1) should be used for the assessment of spatial entity detection, and on the other side, area-based accuracy (Section 3.2) should be used for spatial region mapping and for the classification of pixels. In the case of spatial entity delineation, the geometric precision is usually more important than the thematic accuracy. Nevertheless, the automated delineation could sometimes misclassify some of the spatial entities. Count-based indices can then be useful to estimate the probability of missing entities.
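The difference between the two viewpoints can be made concrete with a toy example. The sketch below assumes a hypothetical map made of one large misclassified polygon and nine small correctly classified ones; the areas and the function name `count_and_area_accuracy` are illustrative only.

```python
def count_and_area_accuracy(polygons):
    """polygons: list of (area, is_correct) tuples, one per polygon."""
    n_correct = sum(1 for _, ok in polygons if ok)
    count_acc = n_correct / len(polygons)
    correct_area = sum(area for area, ok in polygons if ok)
    total_area = sum(area for area, _ in polygons)
    return count_acc, correct_area / total_area

# Hypothetical map: one large misclassified forest polygon (91 area units)
# and nine small, correctly classified buildings (1 area unit each).
polygons = [(91.0, False)] + [(1.0, True)] * 9
count_acc, area_acc = count_and_area_accuracy(polygons)
```

In this example, nine polygons out of ten are correctly labeled, yet only 9% of the mapped area is correct, which is why the index must be chosen according to the purpose of the product.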

3.1. Accuracy of Spatial Entity Detection

In the case of entity detection, the validation process focuses on correct detection rates. In a binary classification, the response design identifies four possible cases: (i) the True Positives (TP) are the entities that are correctly detected by the method; (ii) the False Positives (FP) are the polygons that are incorrectly detected as entities; (iii) the False Negatives (FN) are entities that are not detected by the method; and (iv) the True Negatives (TN) are the correctly undetected polygons. Indices based on true and false detections are widely used in computer science and related thematic disciplines. However, GEOBIA detection does not solely rely on the classification of entities. Indeed, for polygons that have been classified as a spatial entity, over-segmentation (more than one image-segment for one spatial entity) and under-segmentation (more than one spatial entity encapsulated inside one image-segment) contribute to the false positives and the false negatives, respectively. In these situations, the number of subparts minus one should therefore be counted as false positives, and the number of encapsulated geo-objects minus one should be added to the false negatives.
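The counting rule for over- and under-segmentation can be sketched as follows. The example assumes that the response design has already grouped overlapping image-segments and reference entities, so that each group is summarized by a pair (number of detected segments, number of reference entities). The `min`/`max` generalization to groups containing several segments and several entities at once is an assumption of this sketch, not a rule stated in the text.

```python
def detection_counts(groups):
    """groups: list of (n_segments, n_entities) pairs, one per matched group.

    (1, 1) -> one true positive
    (k, 1) -> one true positive and k - 1 false positives (over-segmentation)
    (1, m) -> one true positive and m - 1 false negatives (under-segmentation)
    (1, 0) -> one false positive; (0, 1) -> one false negative
    """
    tp = fp = fn = 0
    for n_segments, n_entities in groups:
        tp += min(n_segments, n_entities)
        fp += max(n_segments - n_entities, 0)
        fn += max(n_entities - n_segments, 0)
    return tp, fp, fn

# Hypothetical outcome of a matching step.
groups = [(1, 1), (3, 1), (1, 4), (1, 0), (0, 1)]
tp, fp, fn = detection_counts(groups)
```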

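In the binary case, e.g., detection of dead trees in a dense population of trees, Powers [38] suggests three indices to summarize the information of the contingency table that is relevant for the comparison between methods, namely the informedness (Equation (1)), the markedness (Equation (2)) and the Matthews’ correlation coefficient (MCC, Equation (3)), with:

$$\mathrm{informedness} = \frac{TP}{TP + FN} + \frac{TN}{TN + FP} - 1 \tag{1}$$

$$\mathrm{markedness} = \frac{TP}{TP + FP} + \frac{TN}{TN + FN} - 1 \tag{2}$$

$$\mathrm{MCC} = \frac{TP \cdot TN - FP \cdot FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}} \tag{3}$$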
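A minimal sketch of these three indices, computed from the four counts of a binary contingency table with their standard definitions, is given below. The counts are hypothetical, and the function assumes that none of the marginal totals is zero.

```python
import math

def binary_indices(tp, fp, fn, tn):
    """Informedness, markedness and Matthews' correlation coefficient."""
    sensitivity = tp / (tp + fn)   # true positive rate
    specificity = tn / (tn + fp)   # true negative rate
    ppv = tp / (tp + fp)           # positive predictive value
    npv = tn / (tn + fn)           # negative predictive value
    informedness = sensitivity + specificity - 1
    markedness = ppv + npv - 1
    mcc = (tp * tn - fp * fn) / math.sqrt(
        (tp + fp) * (tp + fn) * (tn + fp) * (tn + fn)
    )
    return informedness, markedness, mcc

# Hypothetical counts, e.g., dead trees detected among living trees.
informedness, markedness, mcc = binary_indices(tp=40, fp=10, fn=20, tn=130)
```

The squared Matthews' correlation coefficient equals the product of informedness and markedness, which provides a convenient consistency check for an implementation.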

However, in most spatial entity detection contexts, TN is indeterminate because it would correspond to the background, which is not of the same nature as the spatial entities and is usually not countable (e.g., cows (spatial entities) in a pasture (spatial region)). In those cases, the count-based user accuracy (UA, also called positive predictive value, Equation (4)) and the count-based producer accuracy (PA, also called sensitivity, Equation (5)) provide a better summary of the truncated confusion matrix, where:

$$UA = \frac{TP}{TP + FP} \tag{4}$$

$$PA = \frac{TP}{TP + FN} \tag{5}$$

It is furthermore easy to show that the users’ and producers’ accuracies for the various classes are equal to the corresponding markedness and informedness for these classes when TN tends to infinity (i.e., if all pixels of the background were considered as TN).
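The limit can be written out explicitly. Using the standard definitions of markedness and informedness in terms of the four counts of the contingency table, and treating the number of true negatives (TN) as a free parameter while the other counts stay fixed:

```latex
\begin{aligned}
\text{markedness} &= \frac{TP}{TP+FP} + \frac{TN}{TN+FN} - 1
  \;\xrightarrow[\;TN \to \infty\;]{}\; \frac{TP}{TP+FP}
  \quad \text{(user accuracy)},\\[4pt]
\text{informedness} &= \frac{TP}{TP+FN} + \frac{TN}{TN+FP} - 1
  \;\xrightarrow[\;TN \to \infty\;]{}\; \frac{TP}{TP+FN}
  \quad \text{(producer accuracy)}.
\end{aligned}
```

Both limits follow from the fact that TN/(TN+FN) and TN/(TN+FP) tend to one when TN grows while FN and FP remain finite.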

In addition, Count Accuracy (CA) has been widely used in remote sensing applications, such as tree counting [39] or animal counting. It consists of dividing the number of detections (TP and FP) by the number of geo-objects in the reference, with:

$$CA = \frac{TP + FP}{TP + FN} \tag{6}$$

While this index provides meaningful information at the plot level for documenting, e.g., the density of trees in a parcel or the total number of animals in a landscape, it does not provide location-specific information because commission and omission errors cancel each other out in the aggregation [40]. We therefore recommend computing CA on a set of randomly selected regions of equal areas, in order to provide an estimate of its variance in addition to its mean value.
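The recommendation can be sketched as follows, assuming that true positives, false positives and false negatives have been counted separately in each of a few randomly selected regions of equal area. The counts are hypothetical and the function name `count_accuracy` is an illustrative choice.

```python
from statistics import mean, variance

def count_accuracy(tp, fp, fn):
    """Number of detections divided by the number of reference geo-objects."""
    return (tp + fp) / (tp + fn)

# Hypothetical (TP, FP, FN) counts in three regions of equal area.
regions = [(18, 2, 4), (25, 5, 0), (9, 1, 6)]
ca_values = [count_accuracy(*counts) for counts in regions]
ca_mean = mean(ca_values)
ca_var = variance(ca_values)  # sample variance across regions

# Commission and omission errors cancel out in the aggregation:
# no entity is correctly detected here, yet the index equals one.
ca_all_wrong = count_accuracy(tp=0, fp=5, fn=5)
```

The last line illustrates why the index alone is not location-specific: five false detections compensate five missed entities.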

3.2. Accuracy of a Wall-To-Wall Map

For wall-to-wall maps, the area of the map that is correctly classified is the main concern. The primary map accuracy indices suggested by the comparative study of Liu et al. [41], namely overall accuracy, producers’ accuracies and users’ accuracies are recommended. Pixel-based accuracy estimators and their variance can be found in the general good practices from Olofsson et al. [4]. For GEOBIA results, the same indices should be used. However, with polygon sampling units, the estimators of the primary accuracy indices should take the variable size of polygons into account in order to avoid bias and to reduce the variance of the predictors.

In the context of polygon-based estimation of the thematic accuracy, correctly classifying large polygons will contribute more to the map quality than correctly classifying smaller ones. Unbiased predictors of the primary accuracy indices have, therefore, been suggested in Radoux and Bogaert [42]. Under the hypothesis of binary agreement rules and representative samples, these indices use the knowledge about the area of the polygons to predict overall accuracy (Equation (7)), user accuracies (Equation (8)) and producer accuracies (Equation (9)), with:

where N is the total number of polygons in the map, n is the number of sampled polygons and $S_i$ is the area of the i-th polygon. $\delta_{ij}$ and $\hat{\delta}_{ij}$ are binary indicators of the actual and predicted labels j of polygon i. $C_i$ is therefore equal to one, if the i-th polygon was correctly classified, and zero, otherwise. $\hat{p}_j$ is an estimate of the probability to belong to class j, and $\hat{p}$ is an estimate of the classification accuracy ($J$ is the set of all possible classes in the map). The main difference with the classification accuracy estimated with the standard point-based estimators is the inclusion of the size of the polygons in the first term of the numerators of each equation. Furthermore, the area of the non-sampled polygons is also taken into account, so that all information at hand about the polygons is used. Finally, it is worth noting that the second terms of the numerators of Equations (7)–(9) have more influence when the number of polygons is large: the above predictors simplify to the standard estimators when N becomes infinite if the count-based accuracy is not influenced by the size of the polygons.
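The structure described above can be illustrated for the overall accuracy. The sketch below is a reconstruction from the verbal description only and should not be read as the exact expression of [42]: it assumes that the predictor adds the correctly classified area observed in the sample (first term) to the expected correct area of the non-sampled polygons, obtained by multiplying their total area by the count-based accuracy estimated on the sample (second term), and divides the sum by the total map area. The function and variable names are hypothetical.

```python
def predict_overall_accuracy(sampled, unsampled_areas):
    """Area-weighted prediction of the overall accuracy (illustrative sketch).

    sampled: list of (area, is_correct) for the n validated polygons.
    unsampled_areas: areas of the N - n polygons that were not validated.
    """
    n = len(sampled)
    p_hat = sum(1 for _, ok in sampled if ok) / n       # count-based accuracy
    correct_area = sum(a for a, ok in sampled if ok)    # first term: observed
    expected_area = p_hat * sum(unsampled_areas)        # second term: predicted
    total_area = sum(a for a, _ in sampled) + sum(unsampled_areas)
    return (correct_area + expected_area) / total_area

# Hypothetical sample of four polygons and three non-sampled polygons.
sampled = [(10.0, True), (2.0, False), (4.0, True), (4.0, True)]
unsampled = [5.0, 5.0, 10.0]
oa = predict_overall_accuracy(sampled, unsampled)
oa_census = predict_overall_accuracy(sampled, [])
```

When every polygon has been validated, the second term vanishes and the prediction reduces to the proportion of correctly classified area; when the non-sampled polygons dominate, it tends towards the count-based accuracy.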

Relationships between size and classification accuracy have been highlighted in previous studies [42,43] and could impact the predictors. In theory, these relationships can be plugged into Equations (7)–(9) by replacing $\hat{p}$ with a function of the size (Radoux and Bogaert [42]). In practice, it is not trivial to identify this function and to estimate its parameters. A pragmatic solution consists of (i) splitting the sample based on quantiles for the areas of the polygons, then (ii) estimating $\hat{p}_q$ for each size category q and, finally, (iii) multiplying $\hat{p}_q$ by the sum of the polygons’ areas in that size category q. Another solution consists of estimating the area-based accuracy from the sampled polygons only, with:

where n is the number of polygons in the sample. This formula (that can be adapted for both users’ and producers’ accuracy estimates) has a larger variance than Equation (7), but it is unbiased. It is therefore appropriate when the size of the sample is small and $\hat{p}$ is difficult to estimate.
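The two pragmatic solutions can be sketched as follows. Both functions are illustrative reconstructions from the text rather than the exact formulas of [42]: the first one assumes that the size categories are defined by quantiles of the sampled areas and that a separate count-based accuracy is applied to the non-sampled area of each category; the second one is the ratio of the correct area to the total area within the sample. All names and values are hypothetical.

```python
from statistics import quantiles

def sample_only_accuracy(sampled):
    """Area-based accuracy computed from the sampled polygons only."""
    return sum(a for a, ok in sampled if ok) / sum(a for a, _ in sampled)

def predict_oa_by_size(sampled, unsampled_areas, n_classes=2):
    """Overall accuracy with one count-based accuracy per size category."""
    breaks = quantiles([a for a, _ in sampled], n=n_classes)

    def size_class(area):
        return sum(area > b for b in breaks)  # index of the size category

    hits = [0] * n_classes
    counts = [0] * n_classes
    for area, ok in sampled:
        q = size_class(area)
        counts[q] += 1
        hits[q] += int(ok)
    rest = [0.0] * n_classes  # non-sampled area per size category
    for area in unsampled_areas:
        rest[size_class(area)] += area
    # Assumes every size category contains at least one sampled polygon.
    expected = sum(hits[q] / counts[q] * rest[q] for q in range(n_classes))
    correct = sum(a for a, ok in sampled if ok)
    total = sum(a for a, _ in sampled) + sum(unsampled_areas)
    return (correct + expected) / total

# Hypothetical sample in which small polygons are less accurate than large ones.
sampled = [(1.0, True), (2.0, False), (3.0, False), (4.0, True),
           (20.0, True), (30.0, True), (40.0, True), (50.0, True)]
unsampled = [2.0, 2.0, 100.0]
oa_by_size = predict_oa_by_size(sampled, unsampled)
oa_sample = sample_only_accuracy(sampled)
```

In this example the large non-sampled polygon inherits the accuracy observed for large sampled polygons, instead of the pooled accuracy that would be pulled down by the errors on small polygons.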

So far, the best approximation of the prediction variance of the overall accuracy estimator given by Equation (7) is:

which can be used to derive the confidence interval around the predicted overall accuracy index (to the best of our knowledge, there are no simple formulas for the prediction variance of the polygon-based users’ and producers’ accuracies). Equations (7)–(9) will be particularly efficient when the number of polygons in the map is small, and they are at least as efficient as a point-based sampling when the number of polygons is very large [44].

4. Response Design

The response design encompasses all steps of the protocol that lead to a decision regarding the agreement of the reference and map classifications [4]. Response design with GEOBIA is an underestimated issue. Indeed, because classification systems involved in GEOBIA are often complex, not only accuracy issues (e.g., mistaken photo-interpretation), but also precision issues (e.g., difficulty in estimating the proportion of each class of the legend) could affect the quality of the reference dataset.

The sampling unit must be defined prior to specifying the sampling design, and it is not necessarily set by the map representation [25]. In the case of GEOBIA, the use of polygon sampling units is usually recommended [27,45] because the legend is defined at the scale of the polygons and not at the scale of the pixels. However, there is no universal agreement on a best sampling unit [1]. In order to select a type of sampling unit, the conceptual model and the sampling effort must indeed be taken into account.

For spatial entities, polygon sampling units prevail because distinct individual entities are extracted. Individual pixels indeed need to be aggregated at different levels to compose a geo-object. For instance, a high resolution pixel identified as a window could be the windshield of a vehicle or the skylight of a building roof top. The photo-interpretation of very high resolution images, therefore, intrinsically takes context into account to identify spatial entities. The response design should, however, include rules for handling imperfect matches between the reference and the image-segment. Topological relationships, such as intersection or containment, based on the outlines or the centroids, define unambiguous binary agreement rules with a tolerance for delineation errors. Alternatively, an entity may be considered correctly labeled if the majority of its area, as determined from a reference classification, corresponds to the map label [1].
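Two such binary agreement rules can be sketched on rasterized footprints. The example assumes that each image-segment and each reference entity is available as a set of (row, column) cells; the helper `block`, the rule names and the 50% threshold of the majority rule are choices made for the illustration, and a vector implementation would rely on a geometry library instead.

```python
import math

def block(r0, c0, r1, c1):
    """Hypothetical helper: rectangular footprint as a set of (row, col) cells."""
    return {(r, c) for r in range(r0, r1) for c in range(c0, c1)}

def centroid_cell(cells):
    """Cell that contains the centroid (cell centers at integer coordinates)."""
    row = sum(r for r, _ in cells) / len(cells)
    col = sum(c for _, c in cells) / len(cells)
    return math.floor(row + 0.5), math.floor(col + 0.5)

def centroid_match(segment, reference):
    """Containment rule: the segment centroid falls inside the reference entity."""
    return centroid_cell(segment) in reference

def majority_match(segment, reference):
    """Majority rule: more than half of the segment area lies in the reference."""
    return len(segment & reference) / len(segment) > 0.5

reference = block(0, 0, 4, 4)
good_segment = block(1, 1, 4, 4)      # slightly too small, well located
shifted_segment = block(2, 2, 7, 7)   # mostly outside the reference entity
```

Both rules tolerate the imperfect delineation of `good_segment` and reject `shifted_segment`; whichever rule is retained should be stated explicitly in the response design.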

For spatial regions, various classes (e.g., urban area, open forest, mixed forest, orchard) are also defined at the polygon level and would not exist at the scale of a high resolution pixel [46]. Those classes typically (i) represent complex concepts where the context plays a major role for the interpretation or (ii) generalize the landscape. The generalization of the landscape offered by GEOBIA may better represent how land cover interpreters and analysts actually perceive it [36]. The selected legend is then strongly related to the scale at which a spatial region is observed and linked with minimum mapping units, which are not necessarily identical for all classes (e.g., [47]). However, when using polygons as sampling units, one should make sure that the object-based generalization is suitable to represent the variable of interest. Otherwise, pixel sampling units are more relevant.

When polygon sampling units are selected, the rules that define the agreement are usually simpler when the reference data are based on the same polygons as those on the map. Nevertheless, object-based quality assessment could need information about the segmentation quality in addition to the thematic accuracy assessment. As discussed in Section 6.1 and Section 6.2, manually digitized or already existing polygons of reference may then be needed. The use of manually digitized polygons for the assessment of the thematic accuracy is however complex because the aggregation rules are then applied on different supports.

Issues related to the heterogeneity of polygon sampling units are similar to the so-called mixed pixels problem. It is therefore of paramount importance to clearly define the labeling rules in order to avoid ambiguous validation results. There is however no universal set of decision rules that fits all mapping purposes. As a consequence, the same landscape can be described with different legends (e.g., Figure 3), which cannot be compared without a fitness-for-purpose analysis. For the thematic accuracy assessment, the response design links the observed reality to the type of legend that has been selected for the map. Those legends can be categorized into majority-based, rule-based (such as the Land Cover Classification System (LCCS) [48]) or object-oriented:

Majority rules assign a label based on the dominant label inside each polygon. The legend includes the minimum number of classes necessary to represent each type of geo-object that is identified with a smaller scale factor. The labeled polygons are usually interpreted as “pure” geo-objects. This type of legend is, therefore, most suited when GEOBIA aims at identifying spatial entities, but it is frequently used with spatial regions, as well. In this case, the polygons are used to generalize the landscape (e.g., mapping the forest and not the trees) or to avoid classification artifacts. When there are more than two classes, it is worth noting that the majority class could have a proportion that is less than 50%. Majority rules could therefore be a source of confusion.

Rule-based legends (e.g., FAO LCCS rules) are built upon a set of thresholds on the proportion of each land cover within the spatial unit. When correctly applied, those rules generate a comprehensive partition of all of the possible combinations of its constitutive spatial entities. They are well suited to the characterization of complex land cover classes using spatial regions. Each LCCS class is characterized with a unique code that guarantees a good interoperability.

The goal of object-oriented legends is to build human-friendly representations of geo-objects based on the knowledge that people cognitively have about the geospatial domain [49]. The conceptual schemas describing information are linked with formal representations of semantics (i.e., ontologies) [50] in an attempt to capture and organize common knowledge about the problem’s domain. The concepts are often well understood (e.g., an urban area), but difficult to quantitatively define. Land use and ecosystem maps belong to this category. Each concept has a set of properties and relations between spatial entities, which involve geometric and topological relationships.
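The first two types of legends can be contrasted on a single heterogeneous polygon. The sketch below assumes that the reference labels of the pixels inside the polygon are known; the class names and the two tree cover thresholds are hypothetical values chosen for the illustration and are not the LCCS thresholds.

```python
from collections import Counter

def class_proportions(pixel_labels):
    """Proportion of each reference label inside one polygon."""
    counts = Counter(pixel_labels)
    return {label: count / len(pixel_labels) for label, count in counts.items()}

def majority_label(pixel_labels):
    """Majority rule: dominant label and its proportion."""
    props = class_proportions(pixel_labels)
    label = max(props, key=props.get)
    return label, props[label]

def rule_based_label(pixel_labels, closed=0.65, open_=0.15):
    """Rule-based legend using hypothetical thresholds on tree cover."""
    tree_cover = class_proportions(pixel_labels).get("tree", 0.0)
    if tree_cover >= closed:
        return "closed forest"
    if tree_cover >= open_:
        return "open forest"
    return "non-forest"

# Hypothetical polygon with three land cover types.
pixels = ["tree"] * 40 + ["grass"] * 35 + ["built"] * 25
majority = majority_label(pixels)
rule_based = rule_based_label(pixels)
```

Here the majority rule labels the polygon as "tree" although trees cover only 40% of it, whereas the rule-based legend makes the partial cover explicit through the class "open forest".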

When GEOBIA is used as a means to improve classification results at the pixel level, treating a polygon as homogeneous could lead to a biased estimate of the number of pixels in a class. In order to properly assess the impact of GEOBIA on pixel classification, it is therefore suggested to use pixel-based sampling units. Indeed, the alternative method combining object-based thematic accuracy assessment and a measure of the heterogeneity of the polygons is likely to be less cost effective to achieve the same results.

The variance of the overall accuracy estimation with polygon sampling units is smaller than or equal to the variance of a pixel-based validation under the same type of sampling scheme and with the same number of sampling units [51], but there are two disadvantages of using polygon sampling units. Firstly, the response design with polygons is often more complex than with pixels, especially when geometric quality needs to be assessed. Polygon-based validation therefore does not necessarily reduce the total sampling effort. Secondly, polygon sampling units are bound to a given partition of the landscape. They are therefore difficult to reuse for change detection [25] if the same segmentation is not used for all dates.

7. Overview of the Recommended Practices

Details about the quality assessment of GEOBIA products with a crisp classification are provided in the previous sections. From a more pragmatic viewpoint, it is not necessary to estimate all indices in all cases. Table 1 summarizes the recommended practices with respect to the objectives of the GEOBIA described in Section 2.

One first needs to identify if GEOBIA is used to enhance per-pixel classification or if the conceptual model of the geographic database is better represented with polygons. In the first case, pixel sampling units are recommended, because: (i) they simplify the response design and (ii) they do not require specific boundary or structural quality control. Standard methods for the accuracy assessment of pixel-based classification (see [4]) are thus fully applicable in those cases.

When the legend is defined at the scale of the polygons, the thematic accuracy assessment should be based on the polygon sampling units. An appropriate response design should then take the conceptual model into account in order to define the binary agreement between the polygons and the “real world” (see Section 4). Within object-based conceptual models, GEOBIA products are usually either a wall-to-wall map of the landscape into (mainly) spatial regions, or a spatially discontinuous set of one (sometimes several) types of spatial entities. These two types of GEOBIA products should be validated with the set of indices that reflect their purpose.

In case of wall-to-wall mapping, (i) area-based accuracy assessment indices are recommended (Section 3) and (ii) additional information about the precision of the boundaries and the generalization of the content could be needed (Section 6). The response design should be based on the content of each polygon. Due to the variable size of those polygons, the area of each sampling unit has to be taken into account in order to provide an unbiased estimate of the accuracy indices.

Finally, studies dedicated to a set of spatial entities usually aim at either updating large scale vector maps (entity delineation) or inventorying a specific type of entity (entity counting). For the former, thematic accuracy is not a primary concern and the validation should focus on the geometric quality of the polygons (Section 6). For the latter, count-based quality assessment (Section 3.1) should be applied with a response design that addresses possible segmentation errors. However, the quality of the delineation is usually not an issue as long as the spatial entities are correctly detected. In case of poor delineation, the response design should clearly state the matching rules that define when a spatial entity has been detected.