1. Introduction

The relevance of global scale land cover is reflected in its status as an Essential Climate Variable (ECV) [1], providing Plant Functional Types (PFTs) to global climate models [2]. Land cover is also used in applications of ecosystem accounting, conservation, forest and water management, natural hazard prevention and mitigation, monitoring of agricultural policies and economic land use modeling [3,4,5,6,7]. Consequently, many global land cover maps have been produced over the last three decades, initially at a coarse resolution of 8 km [8], then at medium resolutions of 300 m to 1 km [9,10,11] and more recently at a 30 m Landsat resolution [12,13], facilitated by the opening up of the Landsat archive [14] and improvements in data processing and storage [15]. Hence, there has been an increasing trend in the development of higher resolution land cover and land use maps [16]. Taking advantage of the new Sentinel satellites in a multi-sensor approach, even higher resolution global products are starting to appear, e.g., the Global Urban Footprint layer, produced by the German Aerospace Center (DLR) at the highest resolution of 12 m [17].

Even more important is the monitoring of land cover change over time, which is one of the largest drivers of global environmental change [18]. For example, the agriculture, forestry and other land use (AFOLU) sector contributes 24% to global greenhouse gas emissions [19]. Land cover change monitoring is also a key input to a number of the Sustainable Development Goals (SDGs) [20]. New land cover products have appeared recently that capture this temporal dimension, e.g., the ESA-CCI annual land cover products for 1992–2015 [21], the forest loss and gain maps of Hansen [22], the high resolution global surface water layers covering the period 1984–2015 [23] and the Global Human Settlement Layer for 1975, 1990, 2000 and 2014 for monitoring urbanization [24].

As part of the development chain of land cover and land cover change products, accuracy assessment is a key process that consists of three main steps: response design; sampling design; and analysis [25]. The response design outlines the details of what information is recorded, e.g., the land cover classes; the type of data to be collected, e.g., vector or raster; and the scale. The sampling design outlines the method by which the sample units are selected while analysis usually involves the estimation of a confusion matrix and a number of evaluation measures that are accompanied by confidence intervals, estimated using statistical inference [26]. Note that in this paper we use the terms accuracy assessment and validation interchangeably as these terms are used by GOFC-GOLD (Global Observation for Forest Cover and Land Dynamics) and the CEOS Calibration/Validation Working Group (CEOS-CVWG), who have been key proponents in establishing good practice in the validation of global land cover maps as agreed by the international community [27]. Moreover, both accuracy assessment (e.g., [28,29,30]) and validation (e.g., [31,32,33]) have been used in the recent literature.
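To make the idea of an evaluation measure accompanied by a confidence interval concrete, the following minimal Python sketch estimates overall accuracy with an approximate 95% interval. It assumes simple random sampling and a normal (Wald) approximation; the function name `overall_accuracy_ci` and the example counts are hypothetical, and stratified designs would additionally require stratum weights.

```python
import math


def overall_accuracy_ci(n_correct, n_total, z=1.96):
    """Return (estimate, lower, upper) for overall accuracy.

    Assumes simple random sampling and a normal (Wald) approximation;
    z = 1.96 corresponds to an approximate 95% confidence level.
    """
    p = n_correct / n_total
    half_width = z * math.sqrt(p * (1.0 - p) / n_total)
    # Clip the interval to the valid range of a proportion.
    return p, max(0.0, p - half_width), min(1.0, p + half_width)


# Hypothetical example: 80 of 100 sample units agree with the map.
estimate, lower, upper = overall_accuracy_ci(n_correct=80, n_total=100)
print(f"{estimate:.3f} [{lower:.3f}, {upper:.3f}]")
```

For small samples or accuracies close to 0 or 1, the Wald interval is known to behave poorly, so other interval estimators may be preferable.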

In general, the accuracy assessment of land cover products is undertaken using commercial tools or in-house technologies. Increasingly, open source tools are becoming available, e.g., the RStoolbox package in R [34], a QGIS plug-in called “Validation Tool” [35] and spreadsheets that calculate accuracy measures based on a confusion matrix, e.g., [36]. There are also new open source remote sensing tools, e.g., SAGA [37], GRASS [38] and ILWIS [39], among others [40]. However, the use of these tools, and R packages for accuracy assessment in particular, still requires a reasonable level of technical expertise, and in some cases they do not include the full validation workflow. Hence there is a clear need for a simple-to-use, open and online validation tool.

Another key recommendation from the GOFC-GOLD CEOS-CVWG is the need to archive the reference data, making them available to the scientific community. This recommendation is also directly in line with the Group on Earth Observation’s (GEO) Quality Assurance Framework for Earth Observation (QA4EO), which includes transparency as a key principle [41]. Although confusion matrices are sometimes published, e.g., [9,10,42], the reference data themselves are often not shared. There are exceptions, which include: (i) the GOFC-GOLD validation portal [43]; (ii) the global reference data set from USGS [44,45], designed by Boston University and GOFC-GOLD [46]; (iii) a global validation data set developed by Peng Gong’s group at Tsinghua University [47]; and (iv) data collected through Geo-Wiki campaigns [48]. However, the amount of reference data that is not shared far outweighs the data currently being shared. If more data were shared, they could be reused for both calibration and validation purposes, which is one of the recommendations in the GOFC-GOLD CEOS-CVWG guidelines [27]. Although there are issues related to inclusion of existing validation samples within a probability-based design and in terms of harmonization of nomenclatures, a recent study by Tsendbazar et al. [49] showed that existing global reference data sets do have some reuse potential.

The LACO-Wiki online tool is intended to fill both of these identified gaps, i.e., to provide an online platform for undertaking accuracy assessment and to share both the land cover maps and the reference data sets generated as part of the accuracy assessment process. Hence, the aim of this paper is to present the LACO-Wiki tool and to demonstrate its use in the accuracy assessment of GlobeLand30 [13] for Kenya. During this process, we highlight the functionality of LACO-Wiki and provide lessons learned in land cover map validation using visual interpretation of satellite imagery.

2. The LACO-Wiki Tool

LACO-Wiki has been designed as a distributed, scalable system consisting of two main components (Figure 1): (i) the online portal, where the user interaction and initial communications take place; and (ii) the storage servers, where the data are handled, analyzed and published through a Web Map Service (WMS), among other functions. The communication between the portal and the storage servers is implemented using RESTful Web Services. The portal has been built using ASP.NET MVC while the storage component consists of Windows services written in C#. OpenLayers is used as the mapping framework for displaying the base layers (i.e., Google, Bing, OpenStreetMap), the uploaded maps, the samples, and additional layers. In addition, PostgreSQL is used for the database on each of the components, GeoServer and its REST API are used for publishing the data as WMS layers and styling them, the GDAL/OGR library is employed for raster and vector data access, and Hangfire handles job creation and background processing.

An important feature of LACO-Wiki is data security and the management of user access rights. Since the WMS is not exposed to the public, any maps and sample sets are stored securely in the system. Users can choose to share their data sets (i.e., the maps) and sample sets with individual users or openly with all users. However, downloads of the data sets, sample sets and the accuracy reports are only possible for those with proper access rights.

Note that these sharing features relate to the maps uploaded to LACO-Wiki and the intermediate products created in the system, e.g., a validation session, which can be shared with others to aid in the visual interpretation process. This is separate from the user agreement that governs the sharing of interpreted samples in the system. By using the platform, users agree to the terms of use (visible on the “About” page, https://laco-wiki.net/en/About), which state that as part of using LACO-Wiki, they agree to share their reference data. At present there is not much reference data available in the system, but in the future, any user will be able to access the data through a planned LACO-Wiki API (Application Programming Interface).

The LACO-Wiki portal can be accessed via https://www.laco-wiki.net. To log in, a user can either use their Geo-Wiki account after registering at https://www.geo-wiki.org or their Google or Facebook accounts, where the access has been implemented through Open Authorization (OAuth 2.0) technology. The default language for LACO-Wiki is English but it is possible to change to one of 11 other languages: German, French, Spanish, Italian, Portuguese, Greek, Czech, Russian, Ukrainian, Turkish and Bulgarian.

2.1. The Validation Workflow

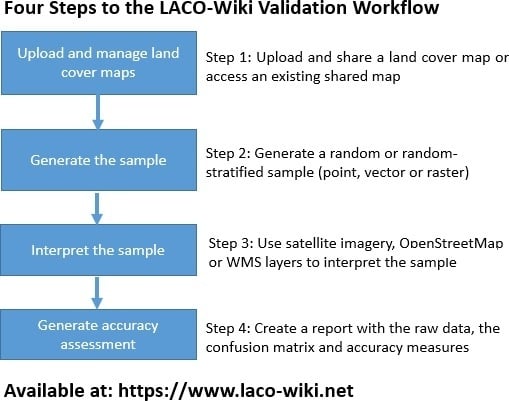

There are four main components to the validation workflow in LACO-Wiki (Figure 2). Each component is outlined in more detail below. These components are shown as menu items at the top of the LACO-Wiki screen (Figure 3).

The section that follows describes the validation workflow encapsulated in LACO-Wiki. This is followed by a description of how the tool can be used in different use cases.

2.1.1. Create and Manage Data

The first step in the workflow is to upload a data set, i.e., a land cover map, to LACO-Wiki (Figure 4). By clicking on the Data menu option (Figure 3), the “Manage your Datasets” screen will appear. All the data sets that the user has uploaded or which are shared with the user will appear in their list. The “Upload a new Dataset” option appears at the bottom of this screen. This will display the “Create a new Dataset” screen (Figure 4). Here, the user must enter a name for the data set and then indicate whether it is categorical, i.e., land cover classes, continuous, e.g., 0–100%, or a background layer that can be used additionally in the validation process such as an aerial photograph of the area. The user must then enter a data set description. Finally, the user can select a raster or vector file in GeoTIFF or shapefile format, respectively. At present the map must be in either the WGS 84 Plate Carrée projection (EPSG:32662) or the European projection ETRS89/ETRS-LAEA (EPSG:3035). The file size that can be uploaded is currently limited to 1 GB. More information on how to handle larger file sizes is presented in Section 2.2. The data set is then created and stored in the LACO-Wiki system.
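The upload constraints described above can be checked before a file is submitted. The following Python sketch is purely illustrative and is not part of LACO-Wiki: the function `check_upload` is hypothetical, it assumes that the EPSG code of the file has already been read with a GIS library, and it assumes that "1 GB" means 2^30 bytes.

```python
ALLOWED_EPSG = {32662, 3035}  # the two projections named in the text
MAX_BYTES = 1024 ** 3         # assumption: "1 GB" interpreted as 2**30 bytes


def check_upload(size_bytes, epsg_code):
    """Return a list of problems; an empty list means the file looks acceptable."""
    problems = []
    if size_bytes > MAX_BYTES:
        problems.append("file exceeds the 1 GB upload limit")
    if epsg_code not in ALLOWED_EPSG:
        problems.append(f"EPSG:{epsg_code} is not a supported projection")
    return problems


# Hypothetical example: a 2 GB raster in geographic coordinates (EPSG:4326).
for problem in check_upload(size_bytes=2 * 1024 ** 3, epsg_code=4326):
    print(problem)
```

A map that fails either check would need to be reprojected, reduced in size or split into regions before uploading.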

By clicking on the data set that was just uploaded, basic metadata about the land cover map will be shown. At this stage the user must indicate the name of the column in the data set that contains the land cover classes or the continuous data. The legend designer is then used to change the default colors assigned to the classes. Some pre-defined legends from different land cover maps are also available to the user. Once the final colors have been chosen, the “Preview Image” window on the “Dataset Details” screen will show the map using these colors and the legend will be displayed below the map (Figure 5). Finally, the user can share the data set with other individual users, share the data with everyone or the data set can remain private to a user.

2.1.2. Generate a Sample

The second step in the workflow (Figure 2) is to generate a sample from a data set. There are two main types of sampling design available: random and stratified. Systematic sampling will be added in the future. In the case of a vector data set, the sample can be a point sample or a vector sample, where objects are chosen either based on the number of objects or based on their area. In the case of a raster data set, the sample can be a point sample or a pixel sample. For a random sample, the user indicates the total sample size desired. For a stratified sample, the number of sample units per class is entered. A sample calculator is currently being implemented that will allow users to determine the minimum number of samples needed. As with the data sets, samples can remain private or they can be shared. A sample can also be downloaded as a KML file for viewing in Google Earth or in a GIS package.
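The difference between the two sampling designs can be sketched in a few lines of Python. This is an illustration under simplifying assumptions, not the LACO-Wiki implementation: the raster is represented as a small nested list of class codes, and the function names `simple_random_sample` and `stratified_random_sample` are hypothetical.

```python
import random
from collections import defaultdict


def simple_random_sample(class_map, n, seed=0):
    """Draw n pixel positions (row, col) without replacement from the whole map."""
    rng = random.Random(seed)
    cells = [(r, c) for r, row in enumerate(class_map) for c in range(len(row))]
    return rng.sample(cells, n)


def stratified_random_sample(class_map, n_per_class, seed=0):
    """Draw up to n_per_class pixel positions from each map class (stratum)."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for r, row in enumerate(class_map):
        for c, value in enumerate(row):
            by_class[value].append((r, c))
    # Rare classes may contain fewer pixels than requested, so cap the size.
    return {
        cls: rng.sample(cells, min(n_per_class, len(cells)))
        for cls, cells in sorted(by_class.items())
    }


# Hypothetical 4 x 4 map with three classes (1 is dominant, 3 is rare).
toy_map = [
    [1, 1, 1, 2],
    [1, 1, 2, 2],
    [1, 1, 1, 3],
    [1, 1, 1, 1],
]
print(simple_random_sample(toy_map, n=5))
print(stratified_random_sample(toy_map, n_per_class=2))
```

The stratified design guarantees that rare classes are represented in the sample, whereas a simple random sample of the same size may miss them entirely.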

2.1.3. Generate a Validation Session and Then Interpret the Sample Using Imagery

Once a sample is generated, the third step in the workflow (Figure 2) is to create a validation session and then interpret the sample. Clicking on the Sample menu option at the top of the LACO-Wiki screen will bring up the “Manage your Samples” screen. This will list all sample sets created by the user or shared by other users. Clicking on one of the sample sets will select it and then basic metadata about that sample will be displayed (see Figure 6).

At the bottom of the screen there will be the option to create a validation session as shown in Figure 6. Figure 7 shows the screen for creating a validation session. In addition to the name and description, the user must select blind, plausibility or enhanced plausibility as the Validation Method. In blind validation, the interpreter does not know the value associated with the layer that they are validating and they choose one class from the legend when interpreting the satellite imagery. In plausibility validation, the interpreter is provided with the land cover class and they must agree or disagree with the value while enhanced plausibility validation allows the interpreter to provide a corrected class or value.

The user must then indicate if the validation session is for the web, i.e., online through the LACO-Wiki interface, or for a mobile phone, i.e., through the LACO-Wiki mobile application for collection of data on the ground, which can complement the visual interpretation of the samples; geotagged photographs can also be collected. For the purpose of this paper, the validation session takes place on the web.

The user then chooses the background layers that will be available for the validation session. Google Earth imagery is always added as a default, but satellite imagery from Bing and an OpenStreetMap layer can also be added. Finally, other external layers can be added via a WMS, e.g., Sentinel-2 layers from Sentinel Hub or aerial photography served by any other WMS.
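For readers unfamiliar with WMS, the sketch below shows how a standard WMS 1.3.0 `GetMap` request for such an external layer is composed. The endpoint `https://example.org/wms` and the layer name `orthophoto` are placeholders and do not refer to an actual service; the helper `wms_getmap_url` is hypothetical and only assembles the query string defined by the WMS standard.

```python
from urllib.parse import urlencode


def wms_getmap_url(base_url, layer, bbox, width=512, height=512, crs="EPSG:3857"):
    """Build a WMS 1.3.0 GetMap URL for one layer and a bounding box."""
    params = {
        "SERVICE": "WMS",
        "VERSION": "1.3.0",
        "REQUEST": "GetMap",
        "LAYERS": layer,
        "STYLES": "",  # empty value requests the server's default style
        "CRS": crs,
        "BBOX": ",".join(str(v) for v in bbox),  # minx, miny, maxx, maxy for EPSG:3857
        "WIDTH": width,
        "HEIGHT": height,
        "FORMAT": "image/png",
    }
    return f"{base_url}?{urlencode(params)}"


# Placeholder endpoint and layer name, for illustration only.
url = wms_getmap_url(
    "https://example.org/wms",
    layer="orthophoto",
    bbox=(4000000, -100000, 4100000, 0),
)
print(url)
```

In practice a mapping framework such as OpenLayers issues these requests automatically once the WMS address and layer name are supplied.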

The validation session is created and the same sharing options are available, i.e., private, shared with individuals or shared with everyone. If an individual validation session is shared with others, then the task can be divided among multiple persons, which is useful when the process is done in teams (e.g., with a group of students). Similarly, multiple copies of the validation session can be generated and shared with different individuals so that multiple interpretations are collected at the same location. This is useful for quality control purposes. Once the validation session has been created, a progress bar will be displayed. The validation session can then be started whereby a user would step through the individual sample units to complete the interpretation.
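When several interpreters label the same sample unit, their answers need to be reconciled. One simple possibility, shown here only as an assumption since the text does not prescribe a rule, is a majority vote that flags ties for review; the function `consensus_label` is hypothetical.

```python
from collections import Counter


def consensus_label(labels):
    """Return the majority class among interpreters, or None if there is a tie."""
    if not labels:
        return None
    counts = Counter(labels).most_common()
    # A tie between the two most frequent classes is left unresolved.
    if len(counts) > 1 and counts[0][1] == counts[1][1]:
        return None
    return counts[0][0]


# Hypothetical interpretations of two sample units by three interpreters.
print(consensus_label(["Forest", "Forest", "Shrubland"]))
print(consensus_label(["Forest", "Shrubland", "Grassland"]))
```

Sample units that return `None` could then be revisited jointly by the interpreters or checked against additional imagery.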

Figure 8 shows an example of a pixel and the underlying imagery from Google Maps, which can be changed to Bing by clicking on the layer icon located on the top right of the image.

The dates of the Bing imagery are listed but to obtain the dates for Google Earth imagery, there is a “Download sample (kmz)” button that automatically displays the location on Google Earth if installed locally on the user’s computer. The validation session is completed once all sample units have been interpreted.

2.1.4. Generate a Report with the Accuracy Assessment

A report is produced in Excel format, which contains options for including the raw data, a confusion matrix and a series of accuracy measures. These accuracy measures are calculated using R routines, which are called by the LACO-Wiki application. At the most basic level, these include the overall accuracy and the class-specific user’s and producer’s accuracy [50]. Overall accuracy is the number of correctly classified sample units, i.e., the sum of the diagonal of the confusion matrix, divided by the total of all elements in the confusion matrix. The user’s accuracy, the complement of the error of commission, estimates the proportion of sample units mapped as a given class that belong to that class according to the reference data, and hence indicates how well the map represents the truth. The producer’s accuracy, the complement of the error of omission, estimates the proportion of reference locations of a given class that are correctly classified in the map. Users can also choose to select Kappa, which was introduced to account for chance agreement and hence is generally lower than overall accuracy [50]. Note that we are not recommending this as a measure of accuracy, in line with the recommendations of the GOFC-GOLD CEOS-CVWG [27] and other research [36], but we also recognize that many people will generate this accuracy measure regardless. As one of the use cases for LACO-Wiki is educational (see Section 2.3.2), learning about the advantages and pitfalls of different accuracy measures could be one use of this tool.
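The basic measures can be reproduced from a confusion matrix with a few lines of code. The Python sketch below is for illustration only (LACO-Wiki itself calls R routines); it assumes that rows hold the map classes and columns the reference classes, that every class occurs at least once in both, and that raw sample counts are used without area weighting. The function name `accuracy_measures` and the example matrix are hypothetical.

```python
def accuracy_measures(matrix):
    """Compute overall, user's and producer's accuracy and Kappa.

    matrix[i][j] = number of sample units mapped as class i whose
    reference class is j (rows: map, columns: reference).
    """
    k = len(matrix)
    total = sum(sum(row) for row in matrix)
    row_totals = [sum(matrix[i]) for i in range(k)]
    col_totals = [sum(matrix[i][j] for i in range(k)) for j in range(k)]
    overall = sum(matrix[i][i] for i in range(k)) / total
    users = [matrix[i][i] / row_totals[i] for i in range(k)]
    producers = [matrix[j][j] / col_totals[j] for j in range(k)]
    # Chance agreement expected from the row and column marginals.
    expected = sum(row_totals[i] * col_totals[i] for i in range(k)) / total ** 2
    kappa = (overall - expected) / (1.0 - expected)
    return {"overall": overall, "users": users, "producers": producers, "kappa": kappa}


# Hypothetical two-class example with 100 sample units.
example = [
    [40, 10],
    [5, 45],
]
print(accuracy_measures(example))
```

In this example the overall accuracy is 0.85 while Kappa is 0.70, which illustrates why Kappa is generally lower than overall accuracy.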

Finally, we offer other measures that have appeared in the literature, i.e., Average Mutual Information (AMI) [51], which measures consistency rather than correctness, and should therefore be used in combination with measures such as overall accuracy. It reflects the amount of information shared between the reference data and the map. Allocation and quantity disagreement [36] are two components of the total disagreement. Allocation disagreement represents the amount of difference between the reference data and the map that reflects a sub-optimal spatial allocation of the classes given the proportion of classes in the reference data set and the map while quantity disagreement captures the less than perfect match between the reference data and the map in terms of the overall quantity. Finally, Portmanteau accuracy includes both the presence and absence of a class in the evaluation and hence provides a measure of the probability that the reference data and the map are the same [52]. The reader is referred to the above references for further information on how these measures are calculated. Additional measures required by the user can be calculated from the confusion matrix. Alternatively, a request can be made to add accuracy measures to LACO-Wiki (contact [email protected]).
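As an example of a measure that can be derived from the confusion matrix, the sketch below computes quantity and allocation disagreement in the form commonly attributed to [36]. It is a simplified illustration that treats the sample counts as unbiased estimates of the population proportions (i.e., no stratum weighting), with rows as map classes and columns as reference classes; the function name `quantity_allocation_disagreement` is hypothetical.

```python
def quantity_allocation_disagreement(matrix):
    """Return (quantity, allocation) disagreement as proportions of the total.

    matrix[i][j] = number of sample units mapped as class i whose
    reference class is j (rows: map, columns: reference).
    """
    k = len(matrix)
    total = sum(sum(row) for row in matrix)
    p = [[value / total for value in row] for row in matrix]
    quantity = 0.0
    allocation = 0.0
    for g in range(k):
        map_share = sum(p[g])                       # proportion mapped as class g
        ref_share = sum(p[i][g] for i in range(k))  # proportion of class g in reference
        quantity += abs(ref_share - map_share)
        allocation += 2.0 * min(map_share - p[g][g], ref_share - p[g][g])
    # Each class-level difference is counted twice across classes, hence the halving.
    return quantity / 2.0, allocation / 2.0


# Hypothetical two-class example with 100 sample units.
q, a = quantity_allocation_disagreement([[40, 10], [5, 45]])
print(f"quantity={q:.2f}, allocation={a:.2f}, total={q + a:.2f}")
```

The two components sum to the total disagreement, which equals one minus the overall accuracy (0.15 in this example).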

2.2. Additional Features in LACO-Wiki

In addition to generating a sample set from a data set within LACO-Wiki, it is possible to generate it outside of LACO-Wiki, e.g., in a GIS package or using a programming language such as R. This is particularly useful where the land cover map is too large to upload, i.e., >1 GB at the current limit, so that the sample must be generated externally. Once the sample set is generated, it can be uploaded to LACO-Wiki and it will then appear in the “Manage your Samples” list. A validation session can then be generated and interpreted as per Section 2.1.3. Users can also convert 32 bit rasters to 8 bit if they exceed the 1 GB limit, or the area could be divided into regions prior to uploading as another way to handle the current file size limitation.

Another useful feature built into LACO-Wiki is the sample calculator, although it is still being tested before release. This option will appear in Step 2 of the workflow, i.e., sample generation, and will allow users to determine the minimum number of samples needed for a specified precision.
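The text does not state which formula the sample calculator uses. As one common possibility, the sketch below applies the standard sample size formula for estimating a proportion under simple random sampling, n = z²p(1 − p)/d², where p is the anticipated accuracy, d the desired half-width of the confidence interval and z the normal quantile. The function name `minimum_sample_size` and its defaults are assumptions made for illustration.

```python
import math


def minimum_sample_size(expected_accuracy=0.5, half_width=0.05, z=1.96):
    """Minimum number of sample units for a target confidence interval half-width.

    Assumes simple random sampling and a large population; p = 0.5 is the
    most conservative choice because it maximizes p * (1 - p).
    """
    p = expected_accuracy
    n = (z ** 2) * p * (1.0 - p) / (half_width ** 2)
    return math.ceil(n)


# Conservative default: about +/- 5 percentage points at roughly 95% confidence.
print(minimum_sample_size())
# A higher anticipated accuracy lowers the required sample size.
print(minimum_sample_size(expected_accuracy=0.85))
```

For a stratified design, the total would additionally have to be allocated across classes, which this sketch does not address.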

2.3. Use Cases for LACO-Wiki

2.3.1. Scientific Research

There are many land cover maps created and published in the scientific literature every year at a range of scales from local to global. For example, Yu et al. [53] assembled a database of 6771 research papers published before 2013 on land cover maps. LACO-Wiki could provide a research tool for accuracy assessment of land cover maps developed as part of ongoing research. As part of the research process, both the maps and the reference data can be shared, creating a potentially vast reference data repository. This in turn could stimulate further research into how these shared reference data could be reused in the calibration and validation of new land cover products. This also means that all data sets will have to comply with basic metadata standards.

2.3.2. Education

One of the main aims behind the development of LACO-Wiki has been its potential use as an educational tool. For example, it can be used within undergraduate remote sensing courses to provide an easy-to-use application for illustrating accuracy assessment as part of lessons on land cover classification. Students would most likely follow the complete workflow, i.e., upload a land cover map that they have created as part of learning about classification algorithms, generate a stratified-random sample, interpret the sample with imagery and then generate the accuracy measures. Lessons might also be focused on the accuracy assessment of a particular land cover product in which case the students might only be assigned a validation session followed by generation of the report on accuracy measures. This might provide an interesting classroom experiment on comparing the results achieved across the class.

2.3.3. Map Production

Map producers around the world could use the tool as one part of their production chain, which could vastly increase the pool of reference data in locations where the data are currently sparse. The use of local knowledge for image interpretation as well as in-house base layers such as orthophotos, which can be added to LACO-Wiki via a WMS, may result in higher quality reference data in these locations, which could, in turn, benefit other map producers when reusing the data.

2.3.4. Accuracy Assessment

LACO-Wiki is currently one of the tools recommended by the European Environment Agency (EEA) for use by EEA member countries in undertaking an accuracy assessment of the local component layers, e.g., the Urban Atlas. Starting in the summer of 2017, LACO-Wiki will be used for this purpose. The private sector could also use LACO-Wiki as part of land cover validation contracts. The sharing features built into LACO-Wiki facilitate completion of validation tasks by teams or can be used to gather multiple interpretations at the same location for quality control. Map users could also carry out independent accuracy assessments of existing products, geared towards their specific application or location.

5. Discussion

One of the criticisms that is often leveled at tools such as LACO-Wiki and Geo-Wiki is the mismatch between the date of the imagery at the sample locations and the date of the land cover map. Hence if satellite imagery from Google Maps and Bing are the only sources of imagery used for sample interpretation, then it is important to record the dates of this imagery and to use the historical archive in Google Earth to support the accuracy assessment. As shown in the exercise undertaken here, more than 83% of the imagery used is within two years of 2010, which is the year to which GlobeLand30 corresponds. Moreover, no images were used that dated from more than six years before or after 2010. Although we did not systematically record examples of where images before and after showed no change, there were a number of examples where this was clearly the case, supporting the use of images from years other than 2010 or close to it. Although the model showed a significant relationship between agreement and the time difference in years between the imagery and 2010, implying that images further away in time from the base year 2010 had an effect on the agreement, this effect is very small.

Figure 13 shows an example of the Google Maps image that appears in LACO-Wiki for 2017 (Figure 13a), the Bing image for 2012 (Figure 13b) and the image for 2010 that can be found in Google Earth (Figure 13c), which together show considerable change over time. If the Bing image had not been available, the need to use the historical archive in Google Earth would have been even more critical.

To undertake an accuracy assessment of GlobeLand30, very high resolution imagery is ideally needed. However, this is not always available. Only Landsat or similar resolution imagery was available in Google Earth 17.8% of the time in both Samples 1 and 2. This situation is made worse when Bing very high resolution imagery is also not available, which occurred 6.1% of the time in Sample 1 and 4.2% of the time in Sample 2. In these latter situations, it is very hard to recognize the land cover types or differentiate between types such as Forest, Shrubland, Grassland, Bareland or Wetland. For this reason we allowed for multiple land cover types as acceptable interpretations when only Landsat or similar resolution imagery was available in Google Earth (Table 4 and Table 7). These sample units should be considered as highly uncertain and ideally require another source of information such as ground-based data, geotagged photographs or orthophotos. Although we checked Google Earth for the presence of Panoramio photographs, there were rarely any present in the locations being interpreted. Note that we manually took the imagery types and presence/absence of Bing imagery into account but ideally this functionality would be added to LACO-Wiki in the future.

The results of the accuracy assessment show that certain classes are more problematic than others in terms of confusion between classes. One clear area of confusion is between the Grassland and Bareland classes. It was sometimes difficult to determine from the imagery alone whether surfaces were vegetated or bare. We therefore used the NDVI tool in Geo-Wiki to examine the mean profiles of Landsat 7 and 8 to distinguish between the Grassland and Bareland classes, and we would recommend that the international validation of GlobeLand30 include such tools to aid the accuracy assessment process.
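For reference, NDVI is computed from near-infrared (NIR) and red reflectance as (NIR − Red)/(NIR + Red), with higher values indicating denser green vegetation. The sketch below computes a mean NDVI over a short series of observations; it does not reproduce the Geo-Wiki tool, and the reflectance values and function names are hypothetical.

```python
def ndvi(nir, red):
    """Normalized Difference Vegetation Index for one observation."""
    denominator = nir + red
    if denominator == 0:
        return None  # undefined when both bands are zero
    return (nir - red) / denominator


def mean_ndvi(observations):
    """Mean NDVI over (nir, red) pairs, skipping undefined values."""
    values = [v for v in (ndvi(nir, red) for nir, red in observations) if v is not None]
    return sum(values) / len(values) if values else None


# Hypothetical surface reflectance pairs (NIR, red) for two sample locations.
vegetated = [(0.45, 0.08), (0.50, 0.10), (0.40, 0.09)]
bare = [(0.30, 0.25), (0.28, 0.24), (0.31, 0.27)]
print(f"vegetated: {mean_ndvi(vegetated):.2f}, bare: {mean_ndvi(bare):.2f}")
```

A persistently low mean NDVI throughout the year would support a Bareland interpretation, whereas a clear seasonal peak would point to Grassland.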

Another source of confusion was between Forest and Shrubland classes, since it was not always possible to see the heights of the trees or shrubs from the images. Where possible we looked at the size of crowns and for the presence of shadows to denote trees but this task was still difficult. The use of a 30% threshold for these two classes also made judgment difficult when the vegetation was sparse and near this threshold. Hence there was additional confusion between these classes and Grassland/Bareland. In some cases the use of a 3 × 3 pixel window took care of this issue but not always. We would suggest that an online gallery containing many different examples of land cover types be developed by the GlobeLand30 team to aid their validation process. We have employed such an approach during our recent SIGMA campaign to crowdsource cropland data [63].

Another source of error may have arisen from a lack of local knowledge. The three interpreters have backgrounds in physical geography (L.S.), agronomy (J.C.L.B.) and forestry (D.S.), and all have experience in remote sensing and visual image interpretation. However, none of these interpreters is from Kenya; they come from different locations around the world, i.e., Canada, Ecuador and Russia. These two samples should now be interpreted by local Kenyans to see whether local knowledge affects the agreement with GlobeLand30. This could have clear implications for the active involvement of GEO members in validating GlobeLand30 for their own countries. The results would also be of interest within the field of visual interpretation.