A Comparative Usability Study of Bare Hand Three-Dimensional Object Selection Techniques in Virtual Environment

Abstract

1. Introduction

2. Materials and Methods

2.1. Participants and Apparatus

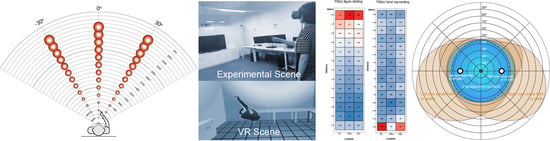

2.2. Experiment 1: Finger Clicking Experiment

2.3. Experiment 2: Finger Ray-Casting Experiment

3. Results

3.1. Interactive Performance Data of Finger Clicking Experiment

3.2. Interactive Performance Data of Finger Ray-Casting Experiment

3.3. Fitts’ Law

3.3.1. Adaptation on the Finger Clicking Experiment

3.3.2. Adaptation on the Finger Ray-Casting Experiment

4. Discussion

4.1. Applicability of Techniques

4.2. Size Adjustment of an Interactive Object Within the Horizontal FOV

4.3. Psychological Coordinates

5. Conclusions and Future Work

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhou, X.; Qin, H.; Xiao, W.; Jia, L.; Xue, C. A Comparative Usability Study of Bare Hand Three-Dimensional Object Selection Techniques in Virtual Environment. Symmetry 2020, 12, 1723. https://doi.org/10.3390/sym12101723
