Continuance Use of Cloud Computing in Higher Education Institutions: A Conceptual Model

Abstract

1. Introduction

2. Background and Related Work

Life Cycle of an Information System

3. Theoretical and Conceptual Background

3.1. IS Continuance Model

3.2. IS Success Model

3.3. IS Discontinuance Model

3.4. TOE Framework

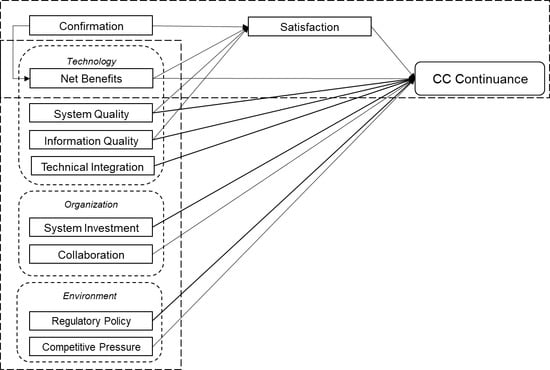

4. Research Model and Hypotheses

5. Methodology

5.1. Research Design

5.2. Instrument Development

5.3. Data Collection

5.4. Data Analysis

5.5. Prototype Development and Evaluation

5.5.1. Establish Prototype Objectives

5.5.2. Define Prototype Functionality

5.5.3. Develop Prototype

5.5.4. Evaluate Prototype

6. Preliminary Results

6.1. Validity and Reliability of the Survey Instrument

6.2. Reflective Measurement Model Evaluation

6.3. Formative Measurement Model Evaluation

7. Discussion and Conclusions

7.1. Theoretical Contributions

7.2. Practical Implications

7.3. Limitations

7.4. Future Research Directions

Author Contributions

Funding

Conflicts of Interest

Appendix A

| Constructs | Reflective/Formative | Measurement Items | Adapted Source | Previous Studies | Theories |
|---|---|---|---|---|---|
| CC Continuance Intention (CCA) | Reflective | (1 = Strongly Disagree to 7 = Strongly Agree) CCA1. Our institution intends to continue using the cloud computing service rather than discontinue it. CCA2. Our institution’s intention is to continue using the cloud computing service rather than use any other means (traditional software). CCA3. If we could, our institution would like to discontinue the use of the cloud computing service (reverse coded). | [101] | [45,72,76] | ECM & ISD |
| Satisfaction (SAT) | Reflective | How do you feel about your overall experience with your current cloud computing service (SaaS, IaaS, or PaaS)? SAT1. Very dissatisfied (1)–Very satisfied (7) SAT2. Very displeased (1)–Very pleased (7) SAT3. Very frustrated (1)–Very contented (7) SAT4. Absolutely terrible (1)–Absolutely delighted (7). | [101] | [45,72,76] | ECM |
| Confirmation (CON) | Reflective | (1 = Strongly Disagree to 7 = Strongly Agree) CON1. Our experience with using cloud computing services was better than what we expected. CON2. The benefits of using cloud computing services were better than we expected. CON3. The functionalities provided by cloud computing services for team projects were better than what we expected. CON4. Cloud computing services support our institution more than expected. CON5. Overall, most of our expectations from using cloud computing services were confirmed. | [101] | [45,72] | ECM |
| Net Benefits (NB) | Formative | Our cloud computing service… NB1. … increases the productivity of end-users. NB2. … increases the overall productivity of the institution. NB3. … enables individual users to make better decisions. NB4. … helps to save IT-related costs. NB5. … makes it easier to plan the IT costs of the institution. NB6. … enhances our strategic flexibility. NB7. … enhances the ability of the institution to innovate. NB8. … enhances the mobility of the institution’s employees. NB9. … improves the quality of the institution’s business processes. NB10. … shifts the risks of IT failures from my institution to the provider. NB11. … lowers the IT staff requirements within the institution to keep the system running. NB12. … improves outcomes/outputs of my institution. | [105,120] | [14,77,78,152] | ECM |
| | | NB13. … has brought significant benefits to the institution. | [116] | | |
| Technical Integration (TI) | Reflective | TI1. The technical characteristics of the cloud computing service make it complex. TI2. The cloud computing service depends on a sophisticated integration of technology components. TI3. There is considerable technical complexity underlying the cloud computing service. | [16] | [14,78] | ISD |
| System Quality (SQ) | Formative | Our cloud computing service… SQ1. … operates reliably and stably. SQ2. … can be flexibly adjusted to new demands or conditions. SQ3. … effectively integrates data from different areas of the institution. SQ4. … makes information easy to access (accessibility). SQ5. … is easy to use. SQ6. … provides information in a timely fashion (response time). SQ7. … provides key features and functionalities that meet the institution’s requirements. SQ8. … is secure. SQ9. … is easy to learn. SQ10. … meets different user requirements within the institution. SQ11. … is easy to upgrade from an older to a newer version. SQ12. … is easy to customize (after implementation, e.g., user interface). | [105,120] | [14,77,78,152] | ISS |
| | | SQ13. Overall, our cloud computing system is of high quality. | [116] | | |
| Information Quality (IQ) | Formative | Our cloud computing service… IQ1. … provides a complete set of information. IQ2. … produces correct information. IQ3. … provides information which is well formatted. IQ4. … provides me with the most recent information. IQ5. … produces relevant information with limited unnecessary elements. IQ6. … produces information which is easy to understand. | [105,120] | [14,77,78,152] | |
| | | IQ7. In general, our cloud computing service provides our institution with high-quality information. | [116] | | |
| System Investment (SI) | Reflective | SI1. Significant organizational resources have been invested in our cloud computing service. SI2. We have committed considerable time and money to the implementation and operation of the cloud-based system. SI3. The financial investments that have been made in the cloud-based system are substantial. | [16] | [14,78] | ISD |
| Collaboration (Col) | Reflective | Col1. Interaction of our institution with employees, industry, and other institutions is easy with the continuance use of the cloud computing service. Col2. Collaboration between our institution and industry is increased by the continuance use of the cloud computing service. Col3. The continuance use of the cloud computing service improves collaboration among institutions. Col4. If our institution continues using the cloud computing service, it can communicate with its partners (institutions and industry). Col5. Communication with the institution’s partners (institutions and industry) is enhanced by the continuance use of the cloud computing service. | [195,196] | [42,142,143,144] | TOE |
| Regulatory Policy (RP) | Reflective | RP1. Our institution is under pressure from some government agencies to continue using the cloud computing service. RP2. The government is providing us with incentives to continue using the cloud computing service. RP3. The government is active in setting up the facilities to enable the cloud computing service. RP4. The laws and regulations that exist nowadays are sufficient to protect the use of the cloud computing service. RP5. There is legal protection in the use of the cloud computing service. | [172,252,253] | [145,146,147] | TOE |
| Competitive Pressure (CP) | Reflective | CP1. Our institution thinks that the continuance use of the cloud computing service has an influence on competition with other institutions. CP2. Our institution will lose students to competitors if it does not keep using the cloud computing service. CP3. Our institution is under pressure from competitors to continue using the cloud computing service. CP4. Some of our competitors have been using cloud computing services. | [170,171,197] | [72,145] | TOE |
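
Item CCA3 is worded negatively and marked as reverse coded, so on the 1–7 scale its responses have to be flipped (a response x becomes 8 - x) before the three CCA items can be combined into one reflective construct. The Python sketch below shows that step together with Cronbach's alpha, one common internal-consistency check for reflective constructs. It is a hypothetical illustration, not necessarily the analysis procedure used in this study: the function names `reverse_code` and `cronbach_alpha` and the five sets of responses are invented for the example.

```python
from statistics import variance

# 7-point Likert scale used by the CCA items (1 = Strongly Disagree, 7 = Strongly Agree).
SCALE_MIN, SCALE_MAX = 1, 7


def reverse_code(score, lo=SCALE_MIN, hi=SCALE_MAX):
    """Flip a Likert response onto the reversed scale (1 <-> 7, 2 <-> 6, ...)."""
    if not lo <= score <= hi:
        raise ValueError(f"response {score} outside {lo}-{hi}")
    return lo + hi - score


def cronbach_alpha(items):
    """Cronbach's alpha for a reflective construct (hypothetical helper).

    items: list of item columns, each a list of responses, one per respondent.
    alpha = k / (k - 1) * (1 - sum of item variances / variance of total scores)
    """
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    if any(len(col) != n for col in items):
        raise ValueError("all items need the same number of respondents")
    item_var_sum = sum(variance(col) for col in items)
    # Total score per respondent, summed across the k items.
    totals = [sum(col[i] for col in items) for i in range(n)]
    return k / (k - 1) * (1 - item_var_sum / variance(totals))


# Hypothetical responses from five respondents (invented, not study data).
cca1 = [7, 6, 5, 6, 3]
cca2 = [6, 6, 5, 7, 2]
cca3_raw = [1, 2, 3, 2, 6]  # negatively worded: a high score means "would discontinue"
cca3 = [reverse_code(x) for x in cca3_raw]

alpha = cronbach_alpha([cca1, cca2, cca3])
print(f"CCA3 recoded: {cca3}")
print(f"Cronbach's alpha (CCA1-CCA3): {alpha:.3f}")
```

Because the same sample variance appears in the numerator and denominator of the alpha formula, switching to population variance would not change the result. Formative constructs such as NB, SQ, and IQ are not expected to be internally consistent, so an internal-consistency statistic like alpha is not a meaningful check for them.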

References

- Alexander, B. Social networking in higher education. In The Tower and the Cloud; EDUCAUSE: Louisville, CO, USA, 2008; pp. 197–201.

- Katz, N. The Tower and the Cloud: Higher Education in the Age of Cloud Computing; EDUCAUSE: Louisville, CO, USA, 2008; Volume 9.

- Sultan, N. Cloud computing for education: A new dawn? Int. J. Inf. Manag. 2010, 30, 109–116.

- Son, I.; Lee, D.; Lee, J.-N.; Chang, Y.B. Market perception on cloud computing initiatives in organizations: An extended resource-based view. Inf. Manag. 2014, 51, 653–669.

- Salim, S.A.; Sedera, D.; Sawang, S.; Alarifi, A.H.E.; Atapattu, M. Moving from Evaluation to Trial: How do SMEs Start Adopting Cloud ERP? Australas. J. Inf. Syst. 2015, 19.

- Mell, P.; Grance, T. The NIST Definition of Cloud Computing; U.S. Department of Commerce, National Institute of Standards and Technology: Gaithersburg, MD, USA, 2011.

- González-Martínez, J.A.; Bote-Lorenzo, M.L.; Gómez-Sánchez, E.; Cano-Parra, R. Cloud computing and education: A state-of-the-art survey. Comput. Educ. 2015, 80, 132–151.

- Qasem, Y.A.M.; Abdullah, R.; Jusoh, Y.Y.; Atan, R.; Asadi, S. Cloud Computing Adoption in Higher Education Institutions: A Systematic Review. IEEE Access 2019, 7, 63722–63744.

- Rodríguez Monroy, C.; Almarcha Arias, G.C.; Núñez Guerrero, Y. The new cloud computing paradigm: The way to IT seen as a utility. Lat. Am. Caribb. J. Eng. Educ. 2012, 6, 24–31.

- IDC. IDC Forecasts Worldwide Public Cloud Services Spending. 2019. Available online: https://www.idc.com/getdoc.jsp?containerId=prUS44891519 (accessed on 28 July 2020).

- Hsu, P.-F.; Ray, S.; Li-Hsieh, Y.-Y. Examining cloud computing adoption intention, pricing mechanism, and deployment model. Int. J. Inf. Manag. 2014, 34, 474–488.

- Dubey, A.; Wagle, D. Delivering Software as a Service. The McKinsey Quarterly, 6 May 2007.

- Walther, S.; Sedera, D.; Urbach, N.; Eymann, T.; Otto, B.; Sarker, S. Should We Stay, or Should We Go? Analyzing Continuance of Cloud Enterprise Systems. J. Inf. Technol. Theory Appl. 2018, 19, 4.

- Qasem, Y.A.; Abdullah, R.; Jusoh, Y.Y.; Atan, R. Conceptualizing a model for Continuance Use of Cloud Computing in Higher Education Institutions. In Proceedings of the AMCIS 2020 TREOs, Salt Lake City, UT, USA, 10–14 August 2020; p. 30.

- Furneaux, B.; Wade, M.R. An exploration of organizational level information systems discontinuance intentions. MIS Q. 2011, 35, 573–598.

- Long, K. Unit of Analysis. Encyclopedia of Social Science Research Methods; SAGE Publications, Inc.: Los Angeles, CA, USA, 2004.

- Berman, S.J.; Kesterson-Townes, L.; Marshall, A.; Srivathsa, R. How cloud computing enables process and business model innovation. Strategy Leadersh. 2012, 40, 27–35.

- Stahl, E.; Duijvestijn, L.; Fernandes, A.; Isom, P.; Jewell, D.; Jowett, M.; Stockslager, T. Performance Implications of Cloud Computing; Red Paper: New York, NY, USA, 2012.

- Thorsteinsson, G.; Page, T.; Niculescu, A. Using virtual reality for developing design communication. Stud. Inform. Control 2010, 19, 93–106.

- Pocatilu, P.; Alecu, F.; Vetrici, M. Using cloud computing for E-learning systems. In Proceedings of the 8th WSEAS International Conference on Data networks, Communications, Computers, Baltimore, MD, USA, 7–9 November 2009; pp. 54–59.

- Sasikala, S.; Prema, S. Massive centralized cloud computing (MCCC) exploration in higher education. Adv. Comput. Sci. Technol. 2011, 3, 111.

- García-Peñalvo, F.J.; Johnson, M.; Alves, G.R.; Minović, M.; Conde-González, M.Á. Informal learning recognition through a cloud ecosystem. Future Gener. Comput. Syst. 2014, 32, 282–294.

- Nguyen, T.D.; Nguyen, T.M.; Pham, Q.T.; Misra, S. Acceptance and Use of E-Learning Based on Cloud Computing: The Role of Consumer Innovativeness. In Computational Science and Its Applications—ICCSA 2014, Part V; Murgante, B., Misra, S., Rocha, A., Torre, C., Rocha, J.G., Falcao, M.I., Taniar, D., Apduhan, B.O., Gervasi, O., Eds.; Springer: Cham, Switzerland, 2014; pp. 159–174.

- Pinheiro, P.; Aparicio, M.; Costa, C. Adoption of cloud computing systems. In Proceedings of the International Conference on Information Systems and Design of Communication—ISDOC ’14, Lisbon, Portugal, 16 May 2014; ACM: Lisbon, Portugal, 2014; pp. 127–131.

- Behrend, T.S.; Wiebe, E.N.; London, J.E.; Johnson, E.C. Cloud computing adoption and usage in community colleges. Behav. Inf. Technol. 2011, 30, 231–240.

- Almazroi, A.A.; Shen, H.F.; Teoh, K.K.; Babar, M.A. Cloud for e-Learning: Determinants of its Adoption by University Students in a Developing Country. In Proceedings of the 2016 IEEE 13th International Conference on E-Business Engineering (ICEBE), Macau, China, 4–6 November 2016; pp. 71–78.

- Meske, C.; Stieglitz, S.; Vogl, R.; Rudolph, D.; Oksuz, A. Cloud Storage Services in Higher Education-Results of a Preliminary Study in the Context of the Sync&Share-Project in Germany. In Learning and Collaboration Technologies: Designing and Developing Novel Learning Experiences, Part I; Zaphiris, P., Ioannou, A., Eds.; Springer: Berlin/Heidelberg, Germany, 2014; pp. 161–171.

- Khatib, M.M.E.; Opulencia, M.J.C. The Effects of Cloud Computing (IaaS) on E-Libraries in United Arab Emirates. Procedia Econ. Financ. 2015, 23, 1354–1357.

- Arpaci, I.; Kilicer, K.; Bardakci, S. Effects of security and privacy concerns on educational use of cloud services. Comput. Hum. Behav. 2015, 45, 93–98.

- Park, S.C.; Ryoo, S.Y. An empirical investigation of end-users’ switching toward cloud computing: A two factor theory perspective. Comput. Hum. Behav. 2013, 29, 160–170.

- Riaz, S.; Muhammad, J. An Evaluation of Public Cloud Adoption for Higher Education: A case study from Pakistan. In Proceedings of the 2015 International Symposium on Mathematical Sciences and Computing Research (ISMSC), Ipoh, Malaysia, 19–20 May 2015; pp. 208–213.

- Militaru, G.; Purcărea, A.A.; Negoiţă, O.D.; Niculescu, A. Examining Cloud Computing Adoption Intention in Higher Education: Exploratory Study. In Exploring Services Science; Borangiu, T., Dragoicea, M., Novoa, H., Eds.; 2016; pp. 732–741.

- Wu, W.W.; Lan, L.W.; Lee, Y.T. Factors hindering acceptance of using cloud services in university: A case study. Electron. Libr. 2013, 31, 84–98.

- Gurung, R.K.; Alsadoon, A.; Prasad, P.W.C.; Elchouemi, A. Impacts of Mobile Cloud Learning (MCL) on Blended Flexible Learning (BFL). In Proceedings of the 2016 International Conference on Information and Digital Technologies (IDT), Rzeszow, Poland, 5–7 July 2016; pp. 108–114.

- Bhatiasevi, V.; Naglis, M. Investigating the structural relationship for the determinants of cloud computing adoption in education. Educ. Inf. Technol. 2015, 21, 1197–1223.

- Yeh, C.-H.; Hsu, C.-C. The Learning Effect of Students’ Cognitive Styles in Using Cloud Technology. In Advances in Web-Based Learning—ICWL 2013 Workshops; Chiu, D.K.W., Wang, M., Popescu, E., Li, Q., Lau, R., Shih, T.K., Yang, C.-S., Sampson, D.G., Eds.; Springer: Berlin/Heidelberg, Germany, 2015; pp. 155–163.

- Stantchev, V.; Colomo-Palacios, R.; Soto-Acosta, P.; Misra, S. Learning management systems and cloud file hosting services: A study on students’ acceptance. Comput. Hum. Behav. 2014, 31, 612–619.

- Yuvaraj, M. Perception of cloud computing in developing countries. Libr. Rev. 2016, 65, 33–51.

- Sharma, S.K.; Al-Badi, A.H.; Govindaluri, S.M.; Al-Kharusi, M.H. Predicting motivators of cloud computing adoption: A developing country perspective. Comput. Hum. Behav. 2016, 62, 61–69.

- Kankaew, V.; Wannapiroon, P. System Analysis of Virtual Team in Cloud Computing to Enhance Teamwork Skills of Undergraduate Students. Procedia Soc. Behav. Sci. 2015, 174, 4096–4102.

- Yadegaridehkordi, E.; Iahad, N.A.; Ahmad, N. Task-Technology Fit and User Adoption of Cloud-based Collaborative Learning Technologies. In Proceedings of the 2014 International Conference on Computer and Information Sciences (ICCOINS), Kuala Lumpur, Malaysia, 3–5 June 2014.

- Arpaci, I. Understanding and predicting students’ intention to use mobile cloud storage services. Comput. Hum. Behav. 2016, 58, 150–157.

- Shiau, W.-L.; Chau, P.Y.K. Understanding behavioral intention to use a cloud computing classroom: A multiple model comparison approach. Inf. Manag. 2016, 53, 355–365.

- Tan, X.; Kim, Y. User acceptance of SaaS-based collaboration tools: A case of Google Docs. J. Enterp. Inf. Manag. 2015, 28, 423–442.

- Atchariyachanvanich, K.; Siripujaka, N.; Jaiwong, N. What Makes University Students Use Cloud-based E-Learning?: Case Study of KMITL Students. In Proceedings of the 2014 International Conference on Information Society (I-Society 2014), London, UK, 10–12 November 2014; pp. 112–116.

- Vaquero, L.M. EduCloud: PaaS versus IaaS Cloud Usage for an Advanced Computer Science Course. IEEE Trans. Educ. 2011, 54, 590–598.

- Ashtari, S.; Eydgahi, A. Student Perceptions of Cloud Computing Effectiveness in Higher Education. In Proceedings of the 2015 IEEE 18th International Conference on Computational Science and Engineering (CSE), Porto, Portugal, 21–23 October 2015; pp. 184–191.

- Qasem, Y.A.; Abdullah, R.; Atan, R.; Jusoh, Y.Y. Cloud-Based Education As a Service (CEAAS) System Requirements Specification Model of Higher Education Institutions in Industrial Revolution 4.0. Int. J. Recent Technol. Eng. 2019, 8.

- Huang, Y.-M. The factors that predispose students to continuously use cloud services: Social and technological perspectives. Comput. Educ. 2016, 97, 86–96.

- Tashkandi, A.N.; Al-Jabri, I.M. Cloud computing adoption by higher education institutions in Saudi Arabia: An exploratory study. Clust. Comput. 2015, 18, 1527–1537.

- Tashkandi, A.; Al-Jabri, I. Cloud Computing Adoption by Higher Education Institutions in Saudi Arabia: Analysis Based on TOE. In Proceedings of the 2015 International Conference on Cloud Computing, ICCC, Riyadh, Saudi Arabia, 26–29 April 2015.

- Dahiru, A.A.; Bass, J.M.; Allison, I.K. Cloud computing adoption in sub-Saharan Africa: An analysis using institutions and capabilities. In Proceedings of the International Conference on Information Society, i-Society 2014, London, UK, 10–12 November 2014; pp. 98–103.

- Shakeabubakor, A.A.; Sundararajan, E.; Hamdan, A.R. Cloud Computing Services and Applications to Improve Productivity of University Researchers. Int. J. Inf. Electron. Eng. 2015, 5, 153.

- Md Kassim, S.S.; Salleh, M.; Zainal, A. Cloud Computing: A General User’s Perception and Security Awareness in Malaysian Polytechnic. In Pattern Analysis, Intelligent Security and the Internet of Things; Abraham, A., Muda, A.K., Choo, Y.-H., Eds.; Springer International Publishing: Cham, Switzerland, 2015; pp. 131–140.

- Sabi, H.M.; Uzoka, F.-M.E.; Langmia, K.; Njeh, F.N. Conceptualizing a model for adoption of cloud computing in education. Int. J. Inf. Manag. 2016, 36, 183–191.

- Sabi, H.M.; Uzoka, F.-M.E.; Langmia, K.; Njeh, F.N.; Tsuma, C.K. A cross-country model of contextual factors impacting cloud computing adoption at universities in sub-Saharan Africa. Inf. Syst. Front. 2017, 20, 1381–1404.

- Yuvaraj, M. Determining factors for the adoption of cloud computing in developing countries. Bottom Line 2016, 29, 259–272.

- Surya, G.S.F.; Surendro, K. E-Readiness Framework for Cloud Computing Adoption in Higher Education. In Proceedings of the 2014 International Conference of Advanced Informatics: Concept, Theory and Application (ICAICTA), Bandung, Indonesia, 20–21 August 2014; pp. 278–282.

- Alharthi, A.; Alassafi, M.O.; Walters, R.J.; Wills, G.B. An exploratory study for investigating the critical success factors for cloud migration in the Saudi Arabian higher education context. Telemat. Inform. 2017, 34, 664–678.

- Mokhtar, S.A.; Al-Sharafi, A.; Ali, S.H.S.; Aborujilah, A. Organizational Factors in the Adoption of Cloud Computing in E-learning. In Proceedings of the 3rd International Conference on Advanced Computer Science Applications and Technologies (ACSAT), Amman, Jordan, 29–30 December 2014; pp. 188–191.

- Lal, P. Organizational learning management systems: Time to move learning to the cloud! Dev. Learn. Organ. Int. J. 2015, 29, 13–15.

- Yuvaraj, M. Problems and prospects of implementing cloud computing in university libraries. Libr. Rev. 2015, 64, 567–582.

- Koch, F.; Assunção, M.D.; Cardonha, C.; Netto, M.A.S. Optimising resource costs of cloud computing for education. Future Gener. Comput. Syst. 2016, 55, 473–479.

- Qasem, Y.A.; Abdullah, R.; Atan, R.; Jusoh, Y.Y. Towards Developing A Cloud-Based Education As A Service (CEAAS) Model For Cloud Computing Adoption in Higher Education Institutions. Complexity 2018, 6, 7.

- Qasem, Y.A.M.; Abdullah, R.; Yah, Y.; Atan, R.; Al-Sharafi, M.A.; Al-Emran, M. Towards the Development of a Comprehensive Theoretical Model for Examining the Cloud Computing Adoption at the Organizational Level. In Recent Advances in Intelligent Systems and Smart Applications; Al-Emran, M., Shaalan, K., Hassanien, A.E., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 63–74.

- Jia, Q.; Guo, Y.; Barnes, S.J. Enterprise 2.0 post-adoption: Extending the information system continuance model based on the technology-Organization-environment framework. Comput. Hum. Behav. 2017, 67, 95–105.

- Tripathi, S. Understanding the determinants affecting the continuance intention to use cloud computing. J. Int. Technol. Inf. Manag. 2017, 26, 124–152.

- Obal, M. What drives post-adoption usage? Investigating the negative and positive antecedents of disruptive technology continuous adoption intentions. Ind. Mark. Manag. 2017, 63, 42–52.

- Ratten, V. Continuance use intention of cloud computing: Innovativeness and creativity perspectives. J. Bus. Res. 2016, 69, 1737–1740.

- Flack, C.K. IS Success Model for Evaluating Cloud Computing for Small Business Benefit: A Quantitative Study. Ph.D. Thesis, Kennesaw State University, Kennesaw, GA, USA, 2016.

- Walther, S.; Sarker, S.; Urbach, N.; Sedera, D.; Eymann, T.; Otto, B. Exploring organizational level continuance of cloud-based enterprise systems. In Proceedings of the ECIS 2015 Completed Research Papers, Münster, Germany, 26–29 May 2015.

- Ghobakhloo, M.; Tang, S.H. Information system success among manufacturing SMEs: Case of developing countries. Inf. Technol. Dev. 2015, 21, 573–600.

- Schlagwein, D.; Thorogood, A. Married for life? A cloud computing client-provider relationship continuance model. In Proceedings of the European Conference on Information Systems (ECIS) 2014, Tel Aviv, Israel, 9–11 June 2014.

- Hadji, B.; Degoulet, P. Information system end-user satisfaction and continuance intention: A unified modeling approach. J. Biomed. Inf. 2016, 61, 185–193.

- Esteves, J.; Bohórquez, V.W. An Updated ERP Systems Annotated Bibliography: 2001–2005; Instituto de Empresa Business School Working Paper No. WP; Instituto de Empresa Business School: Madrid, Spain, 2007; pp. 4–7.

- Gable, G.G.; Sedera, D.; Chan, T. Re-conceptualizing information system success: The IS-impact measurement model. J. Assoc. Inf. Syst. 2008, 9, 18.

- Sedera, D.; Gable, G.G. Knowledge management competence for enterprise system success. J. Strateg. Inf. Syst. 2010, 19, 296–306.

- Walther, S.; Plank, A.; Eymann, T.; Singh, N.; Phadke, G. Success factors and value propositions of software as a service providers—A literature review and classification. In Proceedings of the 2012 AMCIS: 18th Americas Conference on Information Systems, Seattle, WA, USA, 9–12 August 2012.

- Ashtari, S.; Eydgahi, A. Student perceptions of cloud applications effectiveness in higher education. J. Comput. Sci. 2017, 23, 173–180.

- Ding, Y. Looking forward: The role of hope in information system continuance. Comput. Hum. Behav. 2019, 91, 127–137.

- Rogers, E. Diffusion of Innovation; Macmillan Press Ltd.: London, UK, 1962.

- Ettlie, J.E. Adequacy of stage models for decisions on adoption of innovation. Psychol. Rep. 1980, 46, 991–995.

- Fichman, R.G.; Kemerer, C.F. The assimilation of software process innovations: An organizational learning perspective. Manag. Sci. 1997, 43, 1345–1363.

- Salim, S.A.; Sedera, D.; Sawang, S.; Alarifi, A. Technology adoption as a multi-stage process. In Proceedings of the 25th Australasian Conference on Information Systems (ACIS), Auckland, New Zealand, 8–10 December 2014.

- Fichman, R.G.; Kemerer, C.F. Adoption of software engineering process innovations: The case of object orientation. Sloan Manag. Rev. 1993, 34, 7–23.

- Choudhury, V.; Karahanna, E. The relative advantage of electronic channels: A multidimensional view. MIS Q. 2008, 32, 179.

- Karahanna, E.; Straub, D.W.; Chervany, N.L. Information technology adoption across time: A cross-sectional comparison of pre-adoption and post-adoption beliefs. MIS Q. 1999, 23, 183.

- Pavlou, P.A.; Fygenson, M. Understanding and predicting electronic commerce adoption: An extension of the theory of planned behavior. MIS Q. 2006, 30, 115.

- Shoham, A. Selecting and evaluating trade shows. Ind. Mark. Manag. 1992, 21, 335–341.

- Mintzberg, H.; Raisinghani, D.; Theoret, A. The Structure of “Unstructured” Decision Processes. Adm. Sci. Q. 1976, 21, 246–275.

- Pierce, J.L.; Delbecq, A.L. Organization structure, individual attitudes and innovation. Acad. Manag. Rev. 1977, 2, 27–37.

- Zmud, R.W. Diffusion of modern software practices: Influence of centralization and formalization. Manag. Sci. 1982, 28, 1421–1431.

- Aguirre-Urreta, M.I.; Marakas, G.M. Exploring choice as an antecedent to behavior: Incorporating alternatives into the technology acceptance process. J. Organ. End User Comput. 2012, 24, 82–107.

- Schwarz, A.; Chin, W.W.; Hirschheim, R.; Schwarz, C. Toward a process-based view of information technology acceptance. J. Inf. Technol. 2014, 29, 73–96.

- Maier, C.; Laumer, S.; Weinert, C.; Weitzel, T. The effects of technostress and switching stress on discontinued use of social networking services: A study of Facebook use. Inf. Syst. J. 2015, 25, 275–308.

- Damanpour, F.; Schneider, M. Phases of the adoption of innovation in organizations: Effects of environment, organization and top managers. Br. J. Manag. 2006, 17, 215–236.

- Jeyaraj, A.; Rottman, J.W.; Lacity, M.C. A review of the predictors, linkages, and biases in IT innovation adoption research. J. Inf. Technol. 2006, 21, 1–23.

- Davis, F.D. Perceived usefulness, perceived ease of use, and user acceptance of information technology. MIS Q. 1989, 13, 319.

- Venkatesh, V.; Morris, M.G.; Davis, G.B.; Davis, F.D. User acceptance of information technology: Toward a unified view. MIS Q. 2003, 27, 425–478.

- Oliver, R.L. A cognitive model of the antecedents and consequences of satisfaction decisions. J. Mark. Res. 1980, 17, 460–469.

- Bhattacherjee, A. Understanding information systems continuance: An expectation-confirmation model. MIS Q. 2001, 25, 351.

- Tornatzky, L.G.; Fleischer, M.; Chakrabarti, A.K. Processes of Technological Innovation; Lexington Books: Lanham, MD, USA, 1990.

- Rogers, E.M. Diffusion of Innovations; The Free Press: New York, NY, USA, 1995; p. 12.

- Teo, H.-H.; Wei, K.K.; Benbasat, I. Predicting intention to adopt interorganizational linkages: An institutional perspective. MIS Q. 2003, 27, 19.

- DeLone, W.H.; McLean, E.R. Information systems success: The quest for the dependent variable. Inf. Syst. Res. 1992, 3, 60–95.

- Eden, R.; Sedera, D.; Tan, F. Sustaining the Momentum: Archival Analysis of Enterprise Resource Planning Systems (2006–2012). Commun. Assoc. Inf. Syst. 2014, 35, 3.

- Chou, S.-W.; Chen, P.-Y. The influence of individual differences on continuance intentions of enterprise resource planning (ERP). Int. J. Hum. Comput. Stud. 2009, 67, 484–496.

- Lin, W.-S. Perceived fit and satisfaction on web learning performance: IS continuance intention and task-technology fit perspectives. Int. J. Hum. Comput. Stud. 2012, 70, 498–507.

- Karahanna, E.; Straub, D. The psychological origins of perceived usefulness and ease-of-use. Inf. Manag. 1999, 35, 237–250.

- Roca, J.C.; Chiu, C.-M.; Martínez, F.J. Understanding e-learning continuance intention: An extension of the Technology Acceptance Model. Int. J. Hum. Comput. Stud. 2006, 64, 683–696.

- Dai, H.M.; Teo, T.; Rappa, N.A.; Huang, F. Explaining Chinese university students’ continuance learning intention in the MOOC setting: A modified expectation confirmation model perspective. Comput. Educ. 2020, 150, 103850.

- Ouyang, Y.; Tang, C.; Rong, W.; Zhang, L.; Yin, C.; Xiong, Z. Task-technology fit aware expectation-confirmation model towards understanding of MOOCs continued usage intention. In Proceedings of the 50th Hawaii International Conference on System Sciences, Hilton Waikoloa Village, HI, USA, 4–7 January 2017.

- Joo, Y.J.; So, H.-J.; Kim, N.H. Examination of relationships among students’ self-determination, technology acceptance, satisfaction, and continuance intention to use K-MOOCs. Comput. Educ. 2018, 122, 260–272.

- Thong, J.Y.; Hong, S.-J.; Tam, K.Y. The effects of post-adoption beliefs on the expectation-confirmation model for information technology continuance. Int. J. Hum. Comput. Stud. 2006, 64, 799–810.

- Ajzen, I. The theory of planned behavior. Organ. Behav. Hum. Decis. Process. 1991, 50, 179–211.

- Wixom, B.H.; Todd, P.A. A theoretical integration of user satisfaction and technology acceptance. Inf. Syst. Res. 2005, 16, 85–102.

- Lokuge, S.; Sedera, D. Deriving information systems innovation execution mechanisms. In Proceedings of the 25th Australasian Conference on Information Systems (ACIS), Auckland, New Zealand, 8–10 December 2014.

- Lokuge, S.; Sedera, D. Enterprise systems lifecycle-wide innovation readiness. In Proceedings of the PACIS 2014 Proceedings, Chengdu, China, 24–28 June 2014; pp. 1–14.

- Melville, N.; Kraemer, K.; Gurbaxani, V. Information technology and organizational performance: An integrative model of IT business value. MIS Q. 2004, 28, 283–322.

- Delone, W.H.; McLean, E.R. The DeLone and McLean model of information systems success: A ten-year update. J. Manag. Inf. Syst. 2003, 19, 9–30. [Google Scholar]

- Urbach, N.; Smolnik, S.; Riempp, G. The state of research on information systems success. Bus. Inf. Syst. Eng. 2009, 1, 315–325. [Google Scholar] [CrossRef] [Green Version]

- Wang, Y.S. Assessing e-commerce systems success: A respecification and validation of the DeLone and McLean model of IS success. Inf. Syst. J. 2008, 18, 529–557. [Google Scholar] [CrossRef]

- Urbach, N.; Smolnik, S.; Riempp, G. An empirical investigation of employee portal success. J. Strateg. Inf. Syst. 2010, 19, 184–206. [Google Scholar] [CrossRef]

- Barki, H.; Huff, S.L. Change, attitude to change, and decision support system success. Inf. Manag. 1985, 9, 261–268. [Google Scholar] [CrossRef]

- Gelderman, M. The relation between user satisfaction, usage of information systems and performance. Inf. Manag. 1998, 34, 11–18. [Google Scholar] [CrossRef]

- Seddon, P.B. A respecification and extension of the DeLone and McLean model of IS success. Inf. Syst. Res. 1997, 8, 240–253. [Google Scholar] [CrossRef]

- Yuthas, K.; Young, S.T. Material matters: Assessing the effectiveness of materials management IS. Inf. Manag. 1998, 33, 115–124. [Google Scholar] [CrossRef]

- Burton-Jones, A.; Gallivan, M.J. Toward a deeper understanding of system usage in organizations: A multilevel perspective. MIS Q. 2007, 31, 657.

- Sedera, D.; Gable, G.; Chan, T. ERP success: Does organisation size matter? In Proceedings of PACIS 2003, Adelaide, Australia, 10–13 July 2003; p. 74.

- Sedera, D.; Gable, G.; Chan, T. Knowledge management for ERP success. In Proceedings of PACIS 2003, Adelaide, Australia, 10–13 July 2003; p. 97.

- Abolfazli, S.; Sanaei, Z.; Tabassi, A.; Rosen, S.; Gani, A.; Khan, S.U. Cloud Adoption in Malaysia: Trends, Opportunities, and Challenges. IEEE Cloud Comput. 2015, 2, 60–68.

- Arkes, H.R.; Blumer, C. The psychology of sunk cost. Organ. Behav. Hum. Decis. Process. 1985, 35, 124–140.

- Ahtiala, P. The optimal pricing of computer software and other products with high switching costs. Int. Rev. Econ. Financ. 2006, 15, 202–211.

- Benlian, A.; Vetter, J.; Hess, T. The role of sunk cost in consecutive IT outsourcing decisions. Z. Fur Betr. 2012, 82, 181.

- Armbrust, M.; Fox, A.; Griffith, R.; Joseph, A.D.; Katz, R.; Konwinski, A.; Lee, G.; Patterson, D.; Rabkin, A.; Stoica, I. A view of cloud computing. Commun. ACM 2010, 53, 50–58.

- Wei, Y.; Blake, M.B. Service-oriented computing and cloud computing: Challenges and opportunities. IEEE Internet Comput. 2010, 14, 72–75.

- Bughin, J.; Chui, M.; Manyika, J. Clouds, big data, and smart assets: Ten tech-enabled business trends to watch. McKinsey Q. 2010, 56, 75–86.

- Lin, H.-F. Understanding the determinants of electronic supply chain management system adoption: Using the Technology–Organization–Environment framework. Technol. Forecast. Soc. Chang. 2014, 86, 80–92.

- Oliveira, T.; Martins, M.F. Literature review of information technology adoption models at firm level. Electron. J. Inf. Syst. Eval. 2011, 14, 110–121.

- Chau, P.Y.; Tam, K.Y. Factors affecting the adoption of open systems: An exploratory study. MIS Q. 1997, 21, 1–24.

- Galliers, R.D. Organizational Dynamics of Technology-Based Innovation; Springer: Boston, MA, USA, 2007; pp. 15–18.

- Yadegaridehkordi, E.; Iahad, N.A.; Ahmad, N. Task-Technology Fit Assessment of Cloud-Based Collaborative Learning Technologies: Remote Work and Collaboration: Breakthroughs in Research and Practice. Int. J. Inf. Systems Serv. Sect. 2017, 371–388.

- Gupta, P.; Seetharaman, A.; Raj, J.R. The usage and adoption of cloud computing by small and medium businesses. Int. J. Inf. Manag. 2013, 33, 861–874.

- Chong, A.Y.-L.; Lin, B.; Ooi, K.-B.; Raman, M. Factors affecting the adoption level of c-commerce: An empirical study. J. Comput. Inf. Syst. 2009, 50, 13–22.

- Oliveira, T.; Thomas, M.; Espadanal, M. Assessing the determinants of cloud computing adoption: An analysis of the manufacturing and services sectors. Inf. Manag. 2014, 51, 497–510.

- Senyo, P.K.; Effah, J.; Addae, E. Preliminary insight into cloud computing adoption in a developing country. J. Enterp. Inf. Manag. 2016, 29, 505–524.

- Klug, W.; Bai, X. The determinants of cloud computing adoption by colleges and universities. Int. J. Bus. Res. Inf. Technol. 2015, 2, 14–30.

- Cornu, B. Digital Natives: How Do They Learn? How to Teach Them; UNESCO Institute for Information Technology in Education: Moscow, Russia, 2011; Volume 52, pp. 2–11.

- Oblinger, D.; Oblinger, J.L.; Lippincott, J.K. Educating the Net Generation; Educause: Boulder, CO, USA, 2005.

- Wymer, S.A.; Regan, E.A. Factors influencing e-commerce adoption and use by small and medium businesses. Electron. Mark. 2005, 15, 438–453.

- Qasem, Y.A.; Abdullah, R.; Atan, R.; Jusoh, Y.Y. Mapping and Analyzing Process of Cloud-based Education as a Service (CEaaS) Model for Cloud Computing Adoption in Higher Education Institutions. In Proceedings of the 2018 Fourth International Conference on Information Retrieval and Knowledge Management (CAMP), Kota Kinabalu, Malaysia, 26–28 March 2018; pp. 1–8.

- Walther, S.; Sedera, D.; Sarker, S.; Eymann, T. Evaluating Operational Cloud Enterprise System Success: An Organizational Perspective. In Proceedings of ECIS 2013, Utrecht, The Netherlands, 6–8 June 2013; p. 16.

- Wang, M.W.; Lee, O.-K.; Lim, K.H. Knowledge management systems diffusion in Chinese enterprises: A multi-stage approach with the technology-organization-environment framework. In Proceedings of PACIS 2007, Auckland, New Zealand, 4–6 July 2007; p. 70.

- Liao, C.; Palvia, P.; Chen, J.-L. Information technology adoption behavior life cycle: Toward a Technology Continuance Theory (TCT). Int. J. Inf. Manag. 2009, 29, 309–320.

- Li, Y.; Crossler, R.E.; Compeau, D. Regulatory Focus in the Context of Wearable Continuance. In Proceedings of AMCIS 2019, Cancún, México, 15–17 August 2019.

- Rousseau, D.M. Issues of level in organizational research: Multi-level and cross-level perspectives. Res. Organ. Behav. 1985, 7, 1–37.

- Walther, S. An Investigation of Organizational Level Continuance of Cloud-Based Enterprise Systems. Ph.D. Thesis, University of Bayreuth, Bayreuth, Germany, 2014.

- Petter, S.; DeLone, W.; McLean, E. Measuring information systems success: Models, dimensions, measures, and interrelationships. Eur. J. Inf. Syst. 2008, 17, 236–263.

- Robey, D.; Zeller, R.L. Factors affecting the success and failure of an information system for product quality. Interfaces 1978, 8, 70–75.

- Aldholay, A.; Isaac, O.; Abdullah, Z.; Abdulsalam, R.; Al-Shibami, A.H. An extension of DeLone and McLean IS success model with self-efficacy: Online learning usage in Yemen. Int. J. Inf. Learn. Technol. 2018, 35, 285–304.

- Xu, J.D.; Benbasat, I.; Cenfetelli, R.T. Integrating service quality with system and information quality: An empirical test in the e-service context. MIS Q. 2013, 37, 777–794.

- Spears, J.L.; Barki, H. User participation in information systems security risk management. MIS Q. 2010, 34, 503.

- Lee, S.; Shin, B.; Lee, H.G. Understanding post-adoption usage of mobile data services: The role of supplier-side variables. J. Assoc. Inf. Syst. 2009, 10, 860–888.

- Alshare, K.A.; Freeze, R.D.; Lane, P.L.; Wen, H.J. The impacts of system and human factors on online learning systems use and learner satisfaction. Decis. Sci. J. Innov. Educ. 2011, 9, 437–461.

- Benlian, A.; Koufaris, M.; Hess, T. Service quality in software-as-a-service: Developing the SaaS-Qual measure and examining its role in usage continuance. J. Manag. Inf. Syst. 2011, 28, 85–126.

- Oblinger, D. Boomers, Gen-Xers, and Millennials. EDUCAUSE Rev. 2003, 500, 37–47.

- Monaco, M.; Martin, M. The millennial student: A new generation of learners. Athl. Train. Educ. J. 2007, 2, 42–46.

- White, B.J.; Brown, J.A.E.; Deale, C.S.; Hardin, A.T. Collaboration using cloud computing and traditional systems. Issues Inf. Syst. 2009, 10, 27–32.

- Nkhoma, M.Z.; Dang, D.P.; De Souza-Daw, A. Contributing factors of cloud computing adoption: A technology-organisation-environment framework approach. In Proceedings of the European Conference on Information Management & Evaluation, Melbourne, Australia, 2–4 December 2013.

- Zhu, K.; Dong, S.; Xu, S.X.; Kraemer, K.L. Innovation diffusion in global contexts: Determinants of post-adoption digital transformation of European companies. Eur. J. Inf. Syst. 2006, 15, 601–616.

- Zhu, K.; Kraemer, K.L.; Xu, S. The process of innovation assimilation by firms in different countries: A technology diffusion perspective on e-business. Manag. Sci. 2006, 52, 1557–1576.

- Shah Alam, S.; Ali, M.Y.; Jaini, M.M.F. An empirical study of factors affecting electronic commerce adoption among SMEs in Malaysia. J. Bus. Econ. Manag. 2011, 12, 375–399.

- Ifinedo, P. Internet/e-business technologies acceptance in Canada’s SMEs: An exploratory investigation. Internet Res. 2011, 21, 255–281.

- Creswell, J.W.; Creswell, J.D. Research Design: Qualitative, Quantitative, and Mixed Methods Approaches; Sage Publications: Los Angeles, CA, USA, 2017.

- Moore, G.C.; Benbasat, I. Development of an instrument to measure the perceptions of adopting an information technology innovation. Inf. Syst. Res. 1991, 2, 192–222.

- Wu, K.; Vassileva, J.; Zhao, Y. Understanding users’ intention to switch personal cloud storage services: Evidence from the Chinese market. Comput. Hum. Behav. 2017, 68, 300–314.

- Kelley, D.L. Measurement Made Accessible: A Research Approach Using Qualitative, Quantitative and Quality Improvement Methods; Sage Publications: Los Angeles, CA, USA, 1999.

- McKenzie, J.F.; Wood, M.L.; Kotecki, J.E.; Clark, J.K.; Brey, R.A. Establishing content validity: Using qualitative and quantitative steps. Am. J. Health Behav. 1999, 23, 311–318.

- Zikmund, W.G.; Babin, B.J.; Carr, J.C.; Griffin, M. Business Research Methods, 9th ed.; South-Western Cengage Learning: Nelson, BC, Canada, 2013.

- Sekaran, U.; Bougie, R. Research Methods for Business: A Skill Building Approach; John Wiley & Sons: New York, NY, USA, 2016.

- Mathieson, K.; Peacock, E.; Chin, W.W. Extending the technology acceptance model. ACM SIGMIS Database Database Adv. Inf. Syst. 2001, 32, 86.

- Diamantopoulos, A.; Winklhofer, H.M. Index construction with formative indicators: An alternative to scale development. J. Mark. Res. 2001, 38, 269–277.

- MacKenzie, S.B.; Podsakoff, P.M.; Podsakoff, N.P. Construct measurement and validation procedures in MIS and behavioral research: Integrating new and existing techniques. MIS Q. 2011, 35, 293–334.

- Petter, S.; Straub, D.; Rai, A. Specifying formative constructs in information systems research. MIS Q. 2007, 31, 623.

- Haladyna, T.M. Developing and Validating Multiple-Choice Test Items; Routledge: London, UK, 2004.

- DeVon, H.A.; Block, M.E.; Moyle-Wright, P.; Ernst, D.M.; Hayden, S.J.; Lazzara, D.J.; Savoy, S.M.; Kostas-Polston, E. A psychometric toolbox for testing validity and reliability. J. Nurs. Sch. 2007, 39, 155–164.

- Webster, J.; Watson, R.T. Analyzing the past to prepare for the future: Writing a literature review. MIS Q. 2002, 26, 13–23.

- MacKenzie, S.B.; Podsakoff, P.M.; Jarvis, C.B. The Problem of Measurement Model Misspecification in Behavioral and Organizational Research and Some Recommended Solutions. J. Appl. Psychol. 2005, 90, 710–730.

- Briggs, R.O.; Reinig, B.A.; Vreede, G.-J. The Yield Shift Theory of Satisfaction and Its Application to the IS/IT Domain. J. Assoc. Inf. Syst. 2008, 9, 267–293.

- Rushinek, A.; Rushinek, S.F. What makes users happy? Commun. ACM 1986, 29, 594–598.

- Oliver, R.L. Measurement and evaluation of satisfaction processes in retail settings. J. Retail. 1981, 57, 24–48.

- Swanson, E.B.; Dans, E. System life expectancy and the maintenance effort: Exploring their equilibration. MIS Q. 2000, 24, 277.

- Gill, T.G. Early expert systems: Where are they now? MIS Q. 1995, 19, 51.

- Keil, M.; Mann, J.; Rai, A. Why software projects escalate: An empirical analysis and test of four theoretical models. MIS Q. 2000, 24, 631.

- Campion, M.A.; Medsker, G.J.; Higgs, A.C. Relations between work group characteristics and effectiveness: Implications for designing effective work groups. Pers. Psychol. 1993, 46, 823–847.

- Baas, P. Task-Technology Fit in the Workplace: Affecting Employee Satisfaction and Productivity; Erasmus Universiteit: Rotterdam, The Netherlands, 2010.

- Doolin, B.; Troshani, I. Organizational Adoption of XBRL. Electron. Mark. 2007, 17, 199–209.

- Segars, A.H.; Grover, V. Strategic Information Systems Planning Success: An Investigation of the Construct and Its Measurement. MIS Q. 1998, 22, 139.

- Sharma, R.; Yetton, P.; Crawford, J. Estimating the effect of common method variance: The method-method pair technique with an illustration from TAM research. MIS Q. 2009, 33, 473–490.

- Gorla, N.; Somers, T.M.; Wong, B. Organizational impact of system quality, information quality, and service quality. J. Strateg. Inf. Syst. 2010, 19, 207–228.

- Malhotra, N.K. Questionnaire design and scale development. In The Handbook of Marketing Research: Uses, Misuses, and Future Advances; Sage: Thousand Oaks, CA, USA, 2006; pp. 83–94.

- Hertzog, M.A. Considerations in determining sample size for pilot studies. Res. Nurs. Health 2008, 31, 180–191.

- Saunders, M.N. Research Methods for Business Students, 5th ed.; Pearson Education India: Bengaluru, India, 2011.

- Sekaran, U.; Bougie, R. Research Methods for Business, A Skill Building Approach; John Wiley & Sons Inc.: New York, NY, USA, 2003.

- Tellis, W. Introduction to case study. Qual. Rep. 1997, 3, 2.

- Whitehead, A.L.; Julious, S.A.; Cooper, C.L.; Campbell, M.J. Estimating the sample size for a pilot randomised trial to minimise the overall trial sample size for the external pilot and main trial for a continuous outcome variable. Stat. Methods Med. Res. 2016, 25, 1057–1073.

- Hair Jr, J.F.; Hult, G.T.M.; Ringle, C.; Sarstedt, M. A Primer on Partial Least Squares Structural Equation Modeling (PLS-SEM); Sage Publications: Thousand Oaks, CA, USA, 2016.

- Chin, W.W.; Marcolin, B.L.; Newsted, P.R. A partial least squares latent variable modeling approach for measuring interaction effects: Results from a Monte Carlo simulation study and an electronic-mail emotion/adoption study. Inf. Syst. Res. 2003, 14, 189–217.

- Hulland, J. Use of partial least squares (PLS) in strategic management research: A review of four recent studies. Strateg. Manag. J. 1999, 20, 195–204.

- Gefen, D.; Rigdon, E.E.; Straub, D. Editor’s comments: An update and extension to SEM guidelines for administrative and social science research. MIS Q. 2011, 35, 3–14.

- Chin, W.W. How to write up and report PLS analyses. In Handbook of Partial Least Squares; Springer: Berlin/Heidelberg, Germany, 2010; pp. 655–690.

- Ainuddin, R.A.; Beamish, P.W.; Hulland, J.S.; Rouse, M.J. Resource attributes and firm performance in international joint ventures. J. World Bus. 2007, 42, 47–60.

- Henseler, J.; Ringle, C.M.; Sinkovics, R.R. The use of partial least squares path modeling in international marketing. In New Challenges to International Marketing; Emerald Group Publishing Limited: Bingley, UK, 2009; pp. 277–319.

- Urbach, N.; Ahlemann, F. Structural equation modeling in information systems research using partial least squares. J. Inf. Technol. Theory Appl. 2010, 11, 5–40.

- Sommerville, I. Software Engineering, 9th ed.; Pearson Education Limited: Harlow, UK, 2011; p. 18. ISBN 0137035152.

- Venkatesh, V.; Bala, H. Technology acceptance model 3 and a research agenda on interventions. Decis. Sci. 2008, 39, 273–315.

- Venkatesh, V.; Davis, F.D. A theoretical extension of the technology acceptance model: Four longitudinal field studies. Manag. Sci. 2000, 46, 186–204.

- Taylor, S.; Todd, P.A. Understanding information technology usage: A test of competing models. Inf. Syst. Res. 1995, 6, 144–176.

- Faulkner, L. Beyond the five-user assumption: Benefits of increased sample sizes in usability testing. Behav. Res. Methods Instrum. Comput. 2003, 35, 379–383.

- Turner, C.W.; Lewis, J.R.; Nielsen, J. Determining usability test sample size. Int. Encycl. Ergon. Hum. Factors 2006, 3, 3084–3088.

- Zaman, H.; Robinson, P.; Petrou, M.; Olivier, P.; Shih, T.; Velastin, S.; Nystrom, I. Visual Informatics: Sustaining Research and Innovations; LNCS, Springer: Selangor, Malaysia, 2011.

- Hadi, A.; Daud, W.M.F.W.; Ibrahim, N.H. The development of history educational game as a revision tool for Malaysia school education. In Proceedings of the International Visual Informatics Conference, Selangor, Malaysia, 9–11 November 2011; Springer: Berlin/Heidelberg, Germany, 2011.

- Marian, A.M.; Haziemeh, F.A. On-Line Mobile Staff Directory Service: Implementation for the Irbid University College (IUC). Ubiquitous Comput. Commun. J. 2011, 6, 25–33.

- Brinkman, W.-P.; Haakma, R.; Bouwhuis, D. The theoretical foundation and validity of a component-based usability questionnaire. Behav. Inf. Technol. 2009, 28, 121–137.

- Mikroyannidis, A.; Connolly, T. Case Study 3: Exploring open educational resources for informal learning. In Responsive Open Learning Environments; Springer: Cham, Switzerland, 2015; pp. 135–158.

- Shanmugam, M.; Yah Jusoh, Y.; Jabar, M.A. Measuring Continuance Participation in Online Communities. J. Theor. Appl. Inf. Technol. 2017, 95, 3513–3522.

- Straub, D.; Boudreau, M.C.; Gefen, D. Validation guidelines for IS positivist research. Commun. Assoc. Inf. Syst. 2004, 13, 24.

- Lynn, M.R. Determination and quantification of content validity. Nurs. Res. 1986, 35, 382–385.

- Dobratz, M.C. The life closure scale: Additional psychometric testing of a tool to measure psychological adaptation in death and dying. Res. Nurs. Health 2004, 27, 52–62.

- Davis, L.L. Instrument review: Getting the most from a panel of experts. Appl. Nurs. Res. 1992, 5, 194–197.

- Polit, D.F.; Beck, C.T. The content validity index: Are you sure you know what’s being reported? Critique and recommendations. Res. Nurs. Health 2006, 29, 489–497.

- Qasem, Y.A.M.; Asadi, S.; Abdullah, R.; Yah, Y.; Atan, R.; Al-Sharafi, M.A.; Yassin, A.A. A Multi-Analytical Approach to Predict the Determinants of Cloud Computing Adoption in Higher Education Institutions. Appl. Sci. 2020, 10.

- Coolican, H. Research Methods and Statistics in Psychology; Psychology Press: New York, NY, USA, 2017.

- Briggs, S.R.; Cheek, J.M. The role of factor analysis in the development and evaluation of personality scales. J. Personal. 1986, 54, 106–148.

- Tabachnick, B.G.; Fidell, L.S. Principal components and factor analysis. Using Multivar Stat. 2001, 4, 582–633.

- Gliem, J.A.; Gliem, R.R. Calculating, interpreting, and reporting Cronbach’s alpha reliability coefficient for Likert-type scales. In Proceedings of the 2003 Midwest Research-to-Practice Conference in Adult, Continuing, and Community Education, Columbus, OH, USA, 8–10 October 2003.

- Mallery, P.; George, D. SPSS for Windows Step by Step: A Simple Guide and Reference; Allyn & Bacon: Boston, MA, USA, 2003.

- Nunnally, J.C. Psychometric Theory 3E; Tata McGraw-Hill Education: New York, NY, USA, 1994.

- Bagozzi, R.P.; Yi, Y. On the evaluation of structural equation models. J. Acad. Mark. Sci. 1988, 16, 74–94.

- Fornell, C.; Larcker, D.F. Evaluating structural equation models with unobservable variables and measurement error. J. Mark. Res. 1981, 18, 39–50.

- Gefen, D.; Straub, D.; Boudreau, M.-C. Structural Equation Modeling and Regression: Guidelines for Research Practice. Commun. Assoc. Inf. Syst. 2000, 4, 7.

- Chin, W.W.; Marcoulides, G. The partial least squares approach to structural equation modeling. Mod. Methods Bus. Res. 1998, 295, 295–336.

- Diamantopoulos, A.; Siguaw, J.A. Formative versus reflective indicators in organizational measure development: A comparison and empirical illustration. Br. J. Manag. 2006, 17, 263–282.

- Hair, J.F.; Ringle, C.M.; Sarstedt, M. Partial least squares structural equation modeling: Rigorous applications, better results and higher acceptance. Long Range Plan. 2013, 46, 1–12.

- Cenfetelli, R.T.; Bassellier, G. Interpretation of formative measurement in information systems research. MIS Q. 2009, 33, 689–707.

- Yoo, Y.; Henfridsson, O.; Lyytinen, K. Research commentary: The new organizing logic of digital innovation: An agenda for information systems research. Inf. Syst. Res. 2010, 21, 724–735.

- Nylén, D.; Holmström, J. Digital innovation strategy: A framework for diagnosing and improving digital product and service innovation. Bus. Horiz. 2015, 58, 57–67.

- Maksimovic, M. Green Internet of Things (G-IoT) at engineering education institution: The classroom of tomorrow. Green Internet Things 2017, 16, 270–273.

- Fortino, G.; Rovella, A.; Russo, W.; Savaglio, C. Towards cyberphysical digital libraries: Integrating IoT smart objects into digital libraries. In Management of Cyber Physical Objects in the Future Internet of Things; Springer: Berlin/Heidelberg, Germany, 2016; pp. 135–156.

- Picciano, A.G. The evolution of big data and learning analytics in American higher education. J. Asynchronous Learn. Netw. 2012, 16, 9–20.

- Ifinedo, P. An empirical analysis of factors influencing Internet/e-business technologies adoption by SMEs in Canada. Int. J. Inf. Technol. Decis. Mak. 2011, 10, 731–766.

- Talib, A.M.; Atan, R.; Abdullah, R.; Murad, M.A.A. Security framework of cloud data storage based on multi agent system architecture: A pilot study. In Proceedings of the 2012 International Conference on Information Retrieval and Knowledge Management, CAMP’12, Kuala Lumpur, Malaysia, 13–15 March 2012.

- Adrian, C.; Abdullah, R.; Atan, R.; Jusoh, Y.Y. Factors influencing to the implementation success of big data analytics: A systematic literature review. In Proceedings of the International Conference on Research and Innovation in Information Systems, ICRIIS, Langkawi, Malaysia, 16–17 July 2017.

| | Level of Analysis | | Adoption Phase | | Theoretical Perspective | | | | | Type | |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Study | IND | ORG | PRE | POST | ISC | ISS | ISD | TOE | OTH | EMP | THEO |
| [14] | √ | √ | √ | √ | √ | ||||||
| [72] | √ | √ | √ | √ | √ | ||||||
| [75] | √ | √ | √ | √ | |||||||
| [76] * | √ | √ | √ | √ | √ | √ | |||||
| [71] ** | √ | √ | √ | √ | √ | ||||||
| [77] | √ | √ | √ | √ | |||||||
| [45] * | √ | √ | √ | √ | |||||||
| [78] | √ | √ | √ | √ | √ | ||||||
| [79] | √ | √ | √ | √ | √ | ||||||
| [70] | √ | √ | √ | √ | |||||||
| [80] | √ | √ | √ | √ | √ | ||||||
| [49] | √ | √ | √ | √ | |||||||
| [14] | √ | √ | √ | √ | √ | ||||||
| SUM | 4 | 10 | 2 | 13 | 5 | 6 | 3 | 2 | 3 | 12 | 1 |
| This Research | √ | √ | √ | √ | √ | ||||||

| Life Cycle Phases | Adoption | Usage | Termination |
|---|---|---|---|
| User/organization transformation | Intent to adopt | Continuance usage intention | Discontinuance usage intention |
| End-user state | No user | User | Ex-user |
| Individual-level theories | TAM [98] and UTAUT [99] | ECT [100], which has taken shape in the ISC model [101] | |
| Organizational-level theories | TOE framework [102], DOI [103], and Social Contagion [104] | ISS model [105] | ISD model [16] |

| Theory/Model | Technology/Dependent Variable | Source | Constructs/Independent Variables | | | | | | | | | |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | | | Satisfaction | Confirmation | Technology | | | | Organization | | Environment | |
| | | | | | Net Benefits | Technical Integration | System Quality | Information Quality | System Investment | Collaboration | Regulatory Policy | Competitive Pressure |
| ISD | Organizational-level information system discontinuance intentions | [16] | √ | √ | √ | |||||||
| ISC | Information system continuance | [101] | √ | √ | ||||||||
| ISS | Information system success | [105,120] | √ | √ | √ | |||||||
| ECM & TOE | Enterprise 2.0 post-adoption | [72] | √ | √ | √ | |||||||
| TAM | Continuance intention to use CC | [75] | ||||||||||
| ISC & OTH | Disruptive technology continuous adoption intentions | [76] | √ | √ | ||||||||
| ISS | CC evaluation | [77] | √ | √ | √ | |||||||
| ISS & ISD | Cloud-based enterprise systems | [78] | √ | √ | √ | √ | √ | |||||
| ISC | SaaS-based collaboration tools | [45] | √ | √ | ||||||||
| ISC | CC client-provider relationship | [70] | √ | √ | ||||||||
| ISC | Operational cloud enterprise system | [152] | √ | √ | √ | |||||||
| OTH | Usage and adoption of CC by SMEs | [143] | √ | |||||||||
| TOE | Knowledge management systems diffusion | [153] | √ | |||||||||
| TCT | Information technology adoption behavior life cycle | [154] | √ | √ | ||||||||
| ISC | Wearable continuance | [155] | √ | √ | √ | |||||||

| Constructs | Definition | Literature Sources | Previous Studies |
|---|---|---|---|
| Net Benefits (Formative) | Extent to which an information system benefits individuals, groups, or organizations. | [105,116,120] | [14,77,78,152] |
| System Quality (Formative) | Desirable features of a system (e.g., reliability, timeliness, or ease of use). | [105,116,120] | [14,77,78,152] |
| Information Quality (Formative) | Desirable features of a system’s output (e.g., format, relevance, or completeness). | [105,116,120] | [14,77,78,152] |
| Confirmation (Reflective) | Extent to which a user in an HEI perceives the outcomes as consistent with (or exceeding) their expectations or desires, rather than inconsistent with or below them. | [101,189,190] | [45,72] |
| Satisfaction (Reflective) | Psychological state that results when the emotion linked to disconfirmed expectations is paired with the user’s previous attitudes towards the consumption experience. | [101,191] | [45,72,76] |
| Technical Integration (Reflective) | Extent to which an information system depends on intricate connections with different technological elements. | [16,192] | [14,78] |
| System Investment (Reflective) | Resources, both financial and otherwise, that the institution has applied to acquire, implement, and use an information system. | [16,193,194] | [14,78] |
| Collaboration (Reflective) | Extent to which CC applications support cooperation and collaboration among stakeholders. | [195,196] | [42,142,143,144] |
| Regulatory Policy (Reflective) | Extent to which government policy supports, pressures, or protects the continued use of CC applications. | [147,169,170] | [145,146] |
| Competitive Pressure (Reflective) | Pressure perceived by institutional leadership that industry rivals may have won a significant competitive advantage using CC applications. | [170,171,197] | [72,145] |
| Continuance Intention (Reflective) | Extent to which organizational decision makers are likely to continue using an information system. | [16,101] | [45,72,76] |

| Construct | No. of Items | Min. Inter-Item Correlation | Max. Inter-Item Correlation | Cronbach’s Alpha |
|---|---|---|---|---|
| CC Continuance Use | 3 | 0.656 | 0.828 | 0.907 |
| Satisfaction | 4 | 0.684 | 0.81 | 0.916 |
| Confirmation | 5 | 0.124 | 0.795 | 0.78 |
| Net Benefits | 13 | - | - | 0.916 |
| Technical Integration | 3 | 0.711 | 0.788 | 0.891 |
| System Quality | 13 | - | - | 0.927 |
| Information Quality | 7 | - | - | 0.928 |
| System Investment | 3 | 0.67 | 0.731 | 0.836 |
| Collaboration | 5 | 0.504 | 0.742 | 0.899 |
| Regulatory Policy | 5 | 0.398 | 0.821 | 0.894 |
| Competitive Pressure | 4 | 0.577 | 0.772 | 0.86 |
| All Items | 65 | 0.862 | 0.913 |

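Cronbach’s alpha in the table above is conventionally computed as α = k/(k − 1) · (1 − Σσᵢ²/σ²_total) for a k-item scale, where σᵢ² is the variance of item i and σ²_total is the variance of respondents’ summed scores. The sketch below is a minimal illustration, not the authors’ procedure. The response data are hypothetical because the survey responses are not reproduced here, and the helper names `cronbach_alpha` and `reverse_code` are illustrative. It also assumes that reverse-worded items, such as CCA3 in Appendix A, are recoded on the 1–7 scale (x → 8 − x) before alpha is computed. Sample variances (n − 1 denominator) are used, although the n versus n − 1 choice cancels out in the ratio. With the hypothetical data shown, the script prints `alpha = 0.951` (exactly 39/41).

```python
from statistics import variance


def reverse_code(scores: list[int], scale_max: int = 7) -> list[int]:
    """Flip a reverse-worded item on a 1..scale_max Likert scale (e.g., 2 -> 6 on 1-7)."""
    return [scale_max + 1 - s for s in scores]


def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha; items[i][r] is respondent r's score on item i."""
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    if any(len(item) != n for item in items):
        raise ValueError("every item needs one score per respondent")
    # Sum of the individual item variances (sample variance, n - 1 denominator).
    item_var_sum = sum(variance(item) for item in items)
    # Variance of each respondent's total score across all k items.
    total_var = variance([sum(scores) for scores in zip(*items)])
    return k / (k - 1) * (1 - item_var_sum / total_var)


if __name__ == "__main__":
    # Hypothetical 1-7 responses from four respondents to a three-item scale;
    # the third item is reverse-worded, so it is recoded before alpha is computed.
    item1 = [1, 2, 3, 4]
    item2 = [2, 3, 4, 5]
    item3_raw = [7, 5, 6, 4]
    items = [item1, item2, reverse_code(item3_raw)]
    print(f"alpha = {cronbach_alpha(items):.3f}")
```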
| Cloud Computing Continuance Use (Reflective) | Loadings | AVE | CR |
|---|---|---|---|
| CCCU1 | 0.896 | 0.845 | 0.942 |
| CCCU2 | 0.961 | | |
| CCCU3 | 0.898 | | |
| Confirmation (Reflective) | Loadings | AVE | CR |
| CON1 | 0.505 | 0.544 | 0.852 |
| CON2 | 0.622 | | |
| CON3 | 0.876 | | |
| CON4 | 0.786 | | |
| CON5 | 0.832 | | |
| Satisfaction (Reflective) | Loadings | AVE | CR |
| SAT1 | 0.872 | 0.798 | 0.941 |
| SAT2 | 0.887 | | |
| SAT3 | 0.917 | | |
| SAT4 | 0.897 | | |
| Technical Integration (Reflective) | Loadings | AVE | CR |
| TE1 | 0.923 | 0.824 | 0.934 |
| TE2 | 0.876 | | |
| TE3 | 0.924 | | |
| System Investment (Reflective) | Loadings | AVE | CR |
| SI1 | 0.91 | 0.799 | 0.923 |
| SI2 | 0.871 | | |
| SI3 | 0.901 | | |
| Collaboration (Reflective) | Loadings | AVE | CR |
| COL1 | 0.88 | 0.716 | 0.926 |
| COL2 | 0.775 | | |
| COL3 | 0.835 | | |
| COL4 | 0.867 | | |
| COL5 | 0.87 | | |
| Regulatory Policy (Reflective) | Loadings | AVE | CR |
| RP1 | 0.809 | 0.697 | 0.92 |
| RP2 | 0.84 | | |
| RP3 | 0.859 | | |
| RP4 | 0.853 | | |
| RP5 | 0.812 | | |
| Competitive Pressure (Reflective) | Loadings | AVE | CR |
| CP1 | 0.776 | 0.748 | 0.922 |
| CP2 | 0.904 | | |
| CP3 | 0.878 | | |
| CP4 | 0.895 | | |

AVE = average variance extracted; CR = composite reliability. Both are reported once per construct, in the row of its first item.
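The AVE and CR columns follow from the loadings alone if one assumes standardized loadings and uncorrelated measurement errors: AVE = Σλᵢ²/n and CR = (Σλᵢ)² / ((Σλᵢ)² + Σ(1 − λᵢ²)). The Python sketch below, whose function names are illustrative, recomputes both from the loadings printed above. Because those loadings are rounded to three decimals, a recomputed value can differ from the table by about 0.001; for example, the CCCU loadings give an AVE of 0.844 against the reported 0.845, while CR matches at 0.942.

```python
from math import sqrt


def ave(loadings):
    """Average variance extracted: mean of the squared standardized loadings."""
    return sum(lam ** 2 for lam in loadings) / len(loadings)


def composite_reliability(loadings):
    """Composite reliability from standardized loadings, taking each
    indicator's error variance as 1 - loading**2 (errors assumed uncorrelated)."""
    total = sum(loadings)
    error = sum(1 - lam ** 2 for lam in loadings)
    return total ** 2 / (total ** 2 + error)


# Outer loadings as printed in the reflective measurement table
LOADINGS = {
    "CCCU": [0.896, 0.961, 0.898],
    "CON": [0.505, 0.622, 0.876, 0.786, 0.832],
    "SAT": [0.872, 0.887, 0.917, 0.897],
    "TE": [0.923, 0.876, 0.924],
    "SI": [0.91, 0.871, 0.901],
    "COL": [0.88, 0.775, 0.835, 0.867, 0.87],
    "RP": [0.809, 0.84, 0.859, 0.853, 0.812],
    "CP": [0.776, 0.904, 0.878, 0.895],
}

for name, lams in LOADINGS.items():
    a = ave(lams)
    # sqrt(AVE) is the value that appears on the diagonal of a Fornell-Larcker matrix
    print(f"{name}: AVE={a:.3f}  CR={composite_reliability(lams):.3f}  sqrt(AVE)={sqrt(a):.3f}")
```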

| Latent Construct | CCCU | COL | CP | Conf | IQ | NB | RP | SI | SQ | SAT | TE |
|---|---|---|---|---|---|---|---|---|---|---|---|
| CC Continuance Use | 0.919 | ||||||||||
| Collaboration | 0.85 | 0.846 | |||||||||
| Competitive Pressure | −0.524 | −0.541 | 0.865 | ||||||||
| Confirmation | −0.201 | −0.277 | 0.397 | 0.737 | |||||||
| Information Quality | −0.615 | −0.579 | 0.381 | 0.328 | formative | ||||||
| Net Benefits | 0.719 | 0.736 | −0.592 | −0.617 | −0.733 | formative | |||||
| Regulatory Policy | 0.409 | 0.472 | −0.821 | −0.306 | −0.378 | 0.513 | 0.835 | ||||
| System Investment | 0.903 | 0.807 | −0.458 | −0.251 | −0.578 | 0.695 | 0.383 | 0.894 | |||
| System Quality | 0.838 | 0.789 | −0.593 | −0.481 | −0.782 | 0.877 | 0.438 | 0.776 | formative | ||
| Satisfaction | 0.473 | 0.54 | −0.468 | −0.58 | −0.812 | 0.794 | 0.462 | 0.481 | 0.805 | 0.894 | |
| Technical Integration | 0.894 | 0.739 | −0.479 | −0.256 | −0.696 | 0.647 | 0.396 | 0.809 | 0.794 | 0.504 | 0.908 |

Diagonal values are the square roots of each reflective construct's AVE; off-diagonal values are correlations between constructs. No AVE is reported for the formative constructs (IQ, NB, SQ), so their diagonal cells are marked "formative".
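The diagonal of the matrix above matches the square root of each reflective construct's AVE (for CCCU, √0.845 ≈ 0.919), which is the layout used for the Fornell-Larcker criterion: discriminant validity is supported when every diagonal value exceeds that construct's correlations with all other constructs. The hypothetical helper below checks this rule for a dictionary of AVE values and a dictionary of pairwise correlations. Both input formats are assumptions made for this sketch, and formative constructs are left out because no AVE is reported for them.

```python
from math import sqrt


def fornell_larcker_violations(ave, corr):
    """List construct pairs that fail the Fornell-Larcker criterion.

    ave:  {construct: AVE} for reflective constructs only
    corr: {(a, b): latent-variable correlation}
    A pair fails when the absolute correlation is not strictly smaller than
    the square root of the AVE of either construct in the pair.
    """
    failures = []
    for (a, b), r in corr.items():
        if abs(r) >= min(sqrt(ave[a]), sqrt(ave[b])):
            failures.append((a, b, r))
    return failures


# A subset of the reported values: AVE from the reflective table,
# correlations from the lower triangle of the matrix
ave_values = {"CCCU": 0.845, "CP": 0.748, "Conf": 0.544}
corr = {("CCCU", "CP"): -0.524, ("CCCU", "Conf"): -0.201, ("CP", "Conf"): 0.397}
print(fornell_larcker_violations(ave_values, corr))  # []
```

Comparing absolute correlations means strongly negative relationships, which appear several times in the matrix, are held to the same standard as positive ones.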

Redundancy analysis and assessment of multicollinearity, significance, and contribution of formative indicators

| Net Benefits (formative) | VIF | t-values | Weights | Loadings |
|---|---|---|---|---|
| NB1 | 3.615 | 0.604 | 0.125 | 0.633 |
| NB2 | 3.276 | 1.32 | 0.313 | 0.802 |
| NB3 | 6.695 | 1.653 | −0.489 | 0.648 |
| NB4 | 3.098 | 1.561 | 0.346 | 0.71 |
| NB5 | 2.202 | 1.618 | 0.23 | 0.535 |
| NB6 | 1.942 | 0.801 | 0.134 | 0.691 |
| NB7 | 5.617 | 1.785 | 0.608 | 0.876 |
| NB8 | 3.896 | 0.632 | 0.146 | 0.609 |
| NB9 | 3.236 | 0.988 | 0.214 | 0.641 |
| NB10 | 1.51 | 0.932 | 0.158 | 0.45 |
| NB11 | 6.653 | 1.149 | −0.391 | 0.721 |
| NB12 | 2.053 | 0.231 | −0.039 | 0.594 |
| Net Benefits (reflective) | F2 | | | |
| NB13 (redundancy analysis) | 0.763 | | | |
| System Quality (formative) | VIF | t-values | Weights | Loadings |
| SQ1 | 4.197 | 0.115 | −0.019 | 0.75 |
| SQ2 | 3.715 | 0.854 | 0.141 | 0.626 |
| SQ3 | 2.57 | 1.397 | 0.165 | 0.794 |
| SQ4 | 2.182 | 1.222 | 0.134 | 0.72 |
| SQ5 | 5.351 | 1.203 | 0.204 | 0.867 |
| SQ6 | 5.582 | 0.199 | −0.035 | 0.726 |
| SQ7 | 2.615 | 2.399 | 0.262 | 0.771 |
| SQ8 | 1.3 | 0.711 | −0.065 | 0.184 |
| SQ9 | 4.435 | 1.392 | 0.239 | 0.768 |
| SQ10 | 1.92 | 0.046 | 0.005 | 0.707 |
| SQ11 | 3.749 | 1.26 | 0.156 | 0.659 |
| SQ12 | 2.434 | 0.692 | 0.075 | 0.82 |
| System Quality (reflective) | F2 | | | |
| SQ13 (redundancy analysis) | 0.784 | | | |
| Information Quality (formative) | VIF | t-values | Weights | Loadings |
| IQ1 | 3.122 | 0.366 | −0.079 | 0.664 |
| IQ2 | 3.232 | 0.995 | 0.228 | 0.874 |
| IQ3 | 2.84 | 1.569 | 0.348 | 0.839 |
| IQ4 | 3.78 | 1.787 | 0.436 | 0.874 |
| IQ5 | 4.753 | 0.838 | 0.219 | 0.831 |
| IQ6 | 2.928 | 0.017 | −0.004 | 0.727 |
| Information Quality (reflective) | F2 | | | |
| IQ7 (redundancy analysis) | 0.884 | | | |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Qasem, Y.A.M.; Abdullah, R.; Yaha, Y.; Atana, R. Continuance Use of Cloud Computing in Higher Education Institutions: A Conceptual Model. Appl. Sci. 2020, 10, 6628. https://doi.org/10.3390/app10196628


