Proposal of an Automated Mission Manager for Cooperative Autonomous Underwater Vehicles

Abstract

Featured Application


1. Introduction

- Adaptation is mostly delegated to the vehicles. Little consideration has been given to distributed adaptation that includes a global mission manager.

- Vehicles’ capabilities are typically tightly coupled to the architectures, specifically with regard to mission management at the vehicle level. Little consideration has been given to the use of vehicles with heterogeneous mission-management capabilities.

- In underwater interventions, the mission plan is typically loaded into the vehicles before they are launched. Consequently, little consideration has also been given to transferring the mission plan to the vehicles online.

- Using a hierarchical control pattern for decentralized control, which is novel in mission management architectures for cooperative autonomous underwater vehicles.

- Designing a virtualization approach related to the mission planning capabilities of the vehicles.

- Using an online dispatching method for transferring the mission plan to the AUVs.

2. Related Works

- A distributed self-adaptive system in which self-adaptive controls are deployed on both the managed and the managing subsystems [23], whereas the usual solution is to use self-adaptive mechanisms only at the vehicle level.

- A virtualization of the vehicle capabilities.

- An online mission plan dispatching mechanism running from a central mission manager.

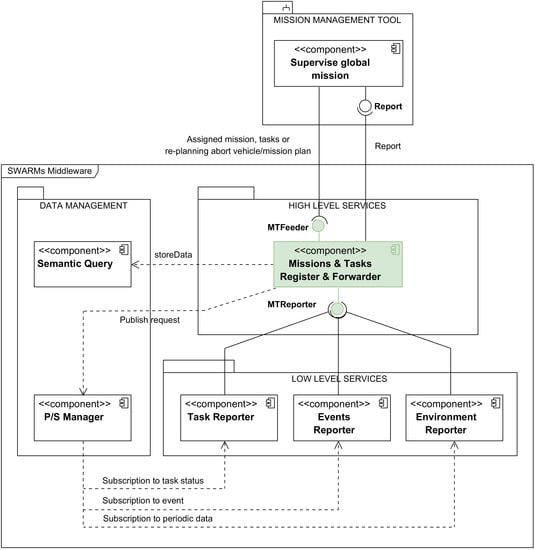

3. Overview of the SWARMs Architecture

3.1. The SWARMs Architecture

- A Mission Management Tool (MMT), used by a human operator to supervise the global mission, track situational changes, and generate the mission plan.

- A Context Awareness Framework (CAF) application, used to provide a human operator with a visual interface to the context awareness information.

- Centralize the communications.

- Serve as a mission executive, providing execution and control mechanisms.

- Define a common information model.

- Use semantic management of the data.

- Provide a registry of all available vehicles and services.

- Track and report the progress status of a mission.

- Define mechanisms and functionalities for understanding the surrounding environment of the vehicles participating in a mission.

- Define mechanisms for data validation.

- The Control Data Terminal (CDT), which provides an interface between the middleware components at the CCS and the acoustic communication system used to communicate with the vehicles.

- The Vehicle Data Terminal (VDT), which provides an interface between the communication system and the vehicle.

3.1.1. The Semantic Query Component and the Data Repositories

- A semantic ontology has been created for the SWARMs project, as described in [42]. This ontology defines a common information model that provides an unambiguous representation of a swarm of maritime vehicles and missions. It also stores information obtained from different domains, including mission and planning, and environment recognition and sensing, among others. Besides the information model, the ontology stores the most recently updated values, since these are used to infer events automatically by means of semantic reasoners [43,44,45].

- In order to perform requests on the SWARMs ontology, Apache Jena Fuseki has been used, since it provides server capabilities for resource storage together with support for the SPARQL Protocol and RDF Query Language (SPARQL) queries needed to retrieve data from the SWARMs ontology. The Fuseki server, which issues SPARQL requests against the SWARMs ontology, and the ontology itself are jointly referred to in this manuscript as the semantic repository. There are several reasons for choosing Fuseki to interact with the ontology. First, it implements the SPARQL 1.1 Protocol for queries and updates, which makes accessing the ontology easier. Second, its web-based front end makes data management and monitoring of the ontology status easier. Lastly, specific resources, such as memory, can be allocated to the server, which makes it more flexible to run depending on the capabilities of the hardware that hosts it. It must be noted that the MTRR, which has been conceived to send and store information used for mission planning, execution and data retrieval, is agnostic to the ontology used for this purpose: it has been designed, implemented and tested as software capable of sending information to an ontology regardless of which one is used. Development work on ontologies, and on ontologies for underwater vehicles in particular, is described in [42].

- A historical data repository that keeps a record of mission progress and can be used by decision-making algorithms to compute recommended choices based on information from past situations. Since the volume of historical data was expected to be large, a relational database was chosen for this repository instead of the ontology.
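For the semantic repository described in the second item above, the following Python sketch shows how a client might issue requests over the SPARQL 1.1 Protocol, which Fuseki implements. It is an illustration under assumptions, not the MTRR code: the endpoint URL, the dataset name `swarms` and the `ex:` vocabulary are hypothetical placeholders (not the SWARMs ontology), and the exact `/query` and `/update` service paths depend on how the Fuseki dataset is configured.

```python
import json
from urllib.parse import urlencode
from urllib.request import Request, urlopen

# Hypothetical Fuseki dataset URL; the dataset name "swarms" is an assumption.
FUSEKI_DATASET = "http://localhost:3030/swarms"
FORM_TYPE = "application/x-www-form-urlencoded"


def build_query_request(sparql: str, dataset: str = FUSEKI_DATASET) -> Request:
    """SPARQL 1.1 Protocol query: POST with a form-encoded 'query' parameter."""
    body = urlencode({"query": sparql}).encode("utf-8")
    return Request(
        f"{dataset}/query",
        data=body,
        headers={"Content-Type": FORM_TYPE,
                 "Accept": "application/sparql-results+json"},
        method="POST",
    )


def build_update_request(sparql_update: str, dataset: str = FUSEKI_DATASET) -> Request:
    """SPARQL 1.1 Protocol update: POST with a form-encoded 'update' parameter."""
    body = urlencode({"update": sparql_update}).encode("utf-8")
    return Request(f"{dataset}/update", data=body,
                   headers={"Content-Type": FORM_TYPE}, method="POST")


def run_select(sparql: str, dataset: str = FUSEKI_DATASET, timeout: float = 5.0):
    """Send a SELECT query and flatten the JSON result bindings into plain dicts."""
    with urlopen(build_query_request(sparql, dataset), timeout=timeout) as resp:
        results = json.load(resp)
    return [{var: cell["value"] for var, cell in row.items()}
            for row in results["results"]["bindings"]]


# Hypothetical vocabulary, for illustration only.
EXAMPLE_QUERY = """
PREFIX ex: <http://example.org/swarms#>
SELECT ?vehicle ?depth WHERE { ?vehicle ex:hasDepth ?depth }
"""
```

Against a running Fuseki instance, `run_select(EXAMPLE_QUERY)` would return a list of `{"vehicle": ..., "depth": ...}` dictionaries. The request builders are kept separate from the network call so that the requests can be inspected without a server.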

3.1.2. The Publish/Subscription Manager Component

3.1.3. The Reporters

- The Task Reporter receives the data regarding the status of the tasks being executed in a mission carried out by a vehicle, generates the corresponding report, and dispatches the report to the MTRR for further processing.

- The Environment Reporter receives the environmental data that the vehicles publish periodically to the DDS Manager, generates the corresponding report, and dispatches the report to the MTRR for further processing.

- The Event Reporter receives the events published by the vehicles to the DDS Manager, generates the corresponding report, and dispatches the report to the MTRR for further processing.
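The three reporters share the same receive, generate and dispatch structure. The Python sketch below illustrates that structure under stated assumptions: the `Report` fields, the topic names and the `mtrr_handler` callback are hypothetical, and the real reporters receive their input from the DDS Manager rather than through a direct method call.

```python
import time
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List


@dataclass
class Report:
    kind: str                 # "task", "environment" or "event"
    vehicle: str              # vehicle codename
    payload: Dict[str, Any]   # data received from the vehicle
    created_at: float = field(default_factory=time.time)


class Reporter:
    """Receives published data, wraps it in a Report and dispatches it to the MTRR."""

    def __init__(self, kind: str, mtrr_handler: Callable[[Report], None]):
        self.kind = kind
        self.mtrr_handler = mtrr_handler

    def on_data(self, vehicle: str, payload: Dict[str, Any]) -> Report:
        report = Report(self.kind, vehicle, dict(payload))  # generate the report
        self.mtrr_handler(report)                           # dispatch for processing
        return report


# Usage: route hypothetical topic names to the matching reporter.
received: List[Report] = []
reporters = {topic: Reporter(kind, received.append)
             for topic, kind in [("TaskStatus", "task"),
                                 ("StateVector", "environment"),
                                 ("Event", "event")]}
reporters["StateVector"].on_data("PORTO2", {"depth": 12.0})
```

Keeping one reporter per data type, all sharing the same dispatch callback, mirrors the division of labour described above while leaving the MTRR as the single place where reports are processed.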

3.2. The Mission Plan

4. Description of the Missions and Tasks Register and Reporter (MTRR)

- Mission information retrieval. The parameters set at the MMT in the mission plan, which establish what kind of plan will be executed and which maritime vehicles will be involved, are transferred to the MTRR. These parameters include the coordinates of the area to be inspected or surveyed and the stages of the plan to be executed (e.g., going to a specific point, or having an autonomous vehicle execute a particular trajectory). In the opposite direction, data about the mission progress are transferred back to the MMT.

- Mission status collection. The MTRR provides the upper layers of the system with information about which stages of the mission have been completed. Depending on the actions to be carried out by the vehicles, high-level tasks such as “SURVEY” (used by an AUV to maneuver in a lawnmower pattern), “TRANSIT” (used to go to a coordinate) or “HOVER” (used by Autonomous Surface Vehicles to navigate the surface of an area to be surveyed in a circular pattern) need to be sent. Missions define a sequential order for the tasks to be executed, so each task is sent to the vehicles through the MTRR, which reports when it has been completed. The MTRR is also in charge of sending the “ABORT” signal to the vehicles when the mission has to be terminated abruptly for any major reason.

- Information storage invocation. Data about how vehicles have progressed in a specific mission are retrieved via state vectors that contain, among other pieces of information, their location, speed, and depth or altitude above the seabed, according to a data format specifically developed for the project [51]. The MTRR invokes the operations of the other middleware components responsible for storing the gathered data. In this way, the MTRR makes the information available to all other applications that require the data to perform their actions, such as the context awareness component (used to assess the level of danger in certain situations) or a plume simulator (which simulates salinity concentration during a pollution leak event).
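The storage-invocation step in the last item can be illustrated as a small fan-out: each received state vector is handed to every registered storage operation, so that other applications can read it later. This Python sketch is a simplification under assumptions: `StateVector` carries only the fields named above (not the full format of [51]), the units in the comments are assumed, and `StorageInvoker` and the backend names in the usage note are hypothetical.

```python
from dataclasses import asdict, dataclass
from typing import Callable, Dict, List


@dataclass(frozen=True)
class StateVector:
    vehicle: str
    latitude: float
    longitude: float
    speed: float      # assumed m/s
    depth: float      # assumed metres below the surface
    altitude: float   # assumed metres above the seabed


class StorageInvoker:
    """Forwards every received state vector to all registered storage operations."""

    def __init__(self) -> None:
        self._backends: Dict[str, Callable[[dict], None]] = {}

    def register(self, name: str, store: Callable[[dict], None]) -> None:
        self._backends[name] = store

    def on_state_vector(self, sv: StateVector) -> List[str]:
        """Store the state vector everywhere; return the names of failed backends."""
        record = asdict(sv)
        failed = []
        for name, store in self._backends.items():
            try:
                store(record)
            except Exception:  # one failing backend must not block the others
                failed.append(name)
        return failed
```

Registering one callback that writes to the semantic repository and another that writes to the historical database would mirror the two stores described in Section 3.1.1. Isolating failures per backend is a design choice of this sketch, not a documented property of the MTRR.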

4.1. Autonomous Underwater Vehicle (AUV) Virtualization at the MTRR

4.2. Activities Performed by the MTRR

- Starting a mission.

- Receiving and recording mission status reports.

- Receiving and recording environmental reports.

- Receiving and recording event reports.

- Aborting a mission.

4.2.1. Starting a Mission

- Validation of the mission plan.

- Storage of the reference coordinates in the semantic repository (that is, the ontology accessed via the Fuseki server).

- Storage of the mission plan in the semantic repository.

- Parsing the vehicles assigned to the mission plan.

- Storage of the assigned vehicles in the semantic repository.

- Subscription to vehicle events.

- Parsing the actions assigned to each vehicle in the mission plan.

- Publication of the first assigned action for each vehicle in the SWARMs DDS partition.

- Sending the full plan to each vehicle.

- Sending just the first action in the plan to each vehicle. Subsequent actions are sent while processing the task status reports (Section 4.2.2) whenever the vehicle requests them.
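The startMission sequence above can be read as an ordered pipeline of sub-activities. The Python sketch below runs such a pipeline and records the time spent in each step with `time.perf_counter`, which is the kind of per-sub-activity measurement used in the validation. The step functions are placeholders, and the `AssignmentMode` names are hypothetical labels for the two dispatching options just listed.

```python
import time
from enum import Enum
from typing import Callable, Dict, List, Tuple


class AssignmentMode(Enum):
    FULL_PLAN = "full_plan"              # send the whole plan to each vehicle
    FIRST_ACTION_ONLY = "first_action"   # send the first action; the rest on request


def start_mission(plan: dict,
                  steps: List[Tuple[str, Callable[[dict], None]]]) -> Dict[str, float]:
    """Run the startMission sub-activities in order, returning elapsed ms per step."""
    timings: Dict[str, float] = {}
    for name, step in steps:
        t0 = time.perf_counter()
        step(plan)                       # a step raises an exception to stop start-up
        timings[name] = (time.perf_counter() - t0) * 1000.0
    return timings


def initial_actions(plan: dict, mode: AssignmentMode) -> Dict[str, list]:
    """Actions dispatched to each vehicle when the mission starts."""
    actions = plan["actions"]            # {vehicle codename: [action, ...]}
    if mode is AssignmentMode.FULL_PLAN:
        return {v: list(a) for v, a in actions.items()}
    return {v: a[:1] for v, a in actions.items()}


def validate(plan: dict) -> None:
    if not plan.get("actions"):
        raise ValueError("mission plan has no actions")


STEPS = [
    ("validate plan", validate),
    ("store reference coordinates", lambda p: None),   # placeholder
    ("store mission plan", lambda p: None),            # placeholder
    ("parse and store vehicles", lambda p: None),      # placeholder
    ("subscribe to vehicle events", lambda p: None),   # placeholder
    ("parse actions", lambda p: None),                 # placeholder
]
```

Returning a name-to-milliseconds map per run makes it straightforward to average each sub-activity over many missions and spot the dominant steps.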

4.2.2. Receiving and Recording Mission Status Reports

- Extracting the status report and storing it in the ontology using the Semantic Query component.

- Notifying the MMT of any important status update, such as the vehicle being unable to fulfill the mission.

- If the assignment mode was set to wait for task completion before requesting the next task in the mission plan, assigning each task after the first one to the vehicle when a mission status report with a status of COMPLETED is received.

4.2.3. Receiving and Recording Environmental Reports

4.2.4. Receiving and Recording Event Reports

4.2.5. Aborting a Mission

5. Validation

5.1. Validation Goals

- Verification of the effectiveness of the procedures for starting a mission, processing a task status report and dispatching the next task whenever there is any pending assignment, and processing the received environmental reports. The MTRR will be considered effective if it produces the expected results in terms of interoperability, cooperation and coordination.

- Characterization of the startMission activity of the MTRR by measuring the times required for each of its internal sub-activities, and identifying possible bottlenecks and correlations.

- Characterization of the reportTask activity of the MTRR by measuring the times required for each of its internal sub-activities, and identifying possible bottlenecks.

- Characterization of the reportEnvironment activity of the MTRR by measuring the times required for each of its internal sub-activities, identifying possible bottlenecks and comparing the efficiency of storing the state vector in the semantic and the relational databases.
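To make the relational side of the last comparison concrete, the sketch below stores state vectors in a relational table using Python's built-in `sqlite3` module. SQLite is a stand-in chosen only to keep the example self-contained; the database engine of the historical repository is not specified here, and the `state_vector` schema is hypothetical, built around the state-vector fields listed in Section 4.

```python
import sqlite3

# Hypothetical schema; column units are assumptions.
SCHEMA = """
CREATE TABLE IF NOT EXISTS state_vector (
    mission_id  INTEGER NOT NULL,
    vehicle     TEXT    NOT NULL,   -- vehicle codename, e.g. 'NTNU'
    ts          REAL    NOT NULL,   -- timestamp in seconds
    latitude    REAL,
    longitude   REAL,
    depth       REAL,               -- metres
    altitude    REAL,               -- metres above the seabed
    speed       REAL                -- m/s
);
CREATE INDEX IF NOT EXISTS idx_sv_mission ON state_vector (mission_id, vehicle, ts);
"""


def open_history(path: str = ":memory:") -> sqlite3.Connection:
    conn = sqlite3.connect(path)
    conn.executescript(SCHEMA)
    return conn


def record_state(conn, mission_id, vehicle, ts, lat, lon, depth, altitude, speed):
    # Parameterized insert, one row per received state vector.
    with conn:  # commits on success, rolls back on error
        conn.execute(
            "INSERT INTO state_vector VALUES (?, ?, ?, ?, ?, ?, ?, ?)",
            (mission_id, vehicle, ts, lat, lon, depth, altitude, speed),
        )


def track(conn, mission_id, vehicle):
    """Return the recorded (ts, latitude, longitude, depth) rows in time order."""
    cur = conn.execute(
        "SELECT ts, latitude, longitude, depth FROM state_vector "
        "WHERE mission_id = ? AND vehicle = ? ORDER BY ts",
        (mission_id, vehicle),
    )
    return cur.fetchall()
```

Timing `record_state` against an equivalent SPARQL update on the semantic repository is one way such an efficiency comparison could be set up.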

5.2. Validation Scenario

5.3. Technical Details

5.3.1. Equipment Used for Running the MTRR

5.3.2. Other Equipment and Materials Used for the Tests Involving the MTRR

5.4. Tests Details

6. Results

- Starting a mission.

- Reporting the status of a vehicle.

- Reporting the state vector from a vehicle.

6.1. Starting a Mission

6.2. Processing Task Status Reports

6.3. Processing Environmental Reports

6.4. Summary of Validation Results

7. Conclusions and Future Works

Author Contributions

Funding

Conflicts of Interest

References

- Patrón, P.; Petillot, Y.R. The underwater environment: A challenge for planning. In Proceedings of the 27th Workshop of the UK PLANNING AND SCHEDULING Special Interest Group, PlanSIG, Edinburgh, UK, 11–12 December 2008. [Google Scholar]

- Bellingham, J.G. Platforms: Autonomous Underwater Vehicles. In Encyclopedia of Ocean Sciences; Elsevier: Amsterdam, The Netherlands, 2009; pp. 473–484. [Google Scholar]

- Thompson, F.; Guihen, D. Review of mission planning for autonomous marine vehicle fleets. J. Field Robot. 2019, 36, 333–354. [Google Scholar] [CrossRef]

- Kothari, M.; Pinto, J.; Prabhu, V.S.; Ribeiro, P.; de Sousa, J.B.; Sujit, P.B. Robust Mission Planning for Underwater Applications: Issues and Challenges. IFAC Proc. Vol. 2012, 45, 223–229. [Google Scholar] [CrossRef]

- Fernández Perdomo, E.; Cabrera Gámez, J.; Domínguez Brito, A.C.; Hernández Sosa, D. Mission specification in underwater robotics. J. Phys. Agents 2010, 4, 25–33. [Google Scholar]

- DNV GL. RULES FOR CLASSIFICATION: Underwater Technology; Part 5 Types of UWT systems, Chapter 8 Autonomous underwater vehicles; DNV GL: Oslo, Norway, 2015. [Google Scholar]

- Alterman, R. Adaptive planning. Cogn. Sci. 1988, 12, 393–421. [Google Scholar] [CrossRef]

- Woodrow, I.; Purry, C.; Mawby, A.; Goodwin, J. Autonomous AUV Mission Planning and Replanning–Towards True Autonomy. In Proceedings of the 14th International Symposium on Unmanned Untethered Submersible Technology, Durham, UK, 21–24 August 2005; pp. 1–10. [Google Scholar]

- Van Der Krogt, R.; De Weerdt, M. Plan Repair as an Extension of Planning. In Proceedings of the International Conference on Automated Planning and Scheduling (ICAPS), Monterey, CA, USA, 5–10 June 2005; Biundo, S., Myers, K., Rajan, K., Eds.; The AAAI Press: Monterey, CA, USA, 2005; pp. 161–170. [Google Scholar]

- Fox, M.; Gerevini, A.; Long, D.; Serina, I. Plan stability: Replanning versus plan repair. In Proceedings of the International Conference on AI Planning and Scheduling (ICAPS), Cumbria, UK, 6–10 June 2006; Long, D., Smith, S.F., Borrajo, D., McCluskey, L., Eds.; The AAAI Press: Cumbria, UK, 2006; pp. 212–221. [Google Scholar]

- Van Der Krogt, R. Plan Repair in Single-Agent and Multi-Agent Systems; Technical University of Delft: Delft, The Netherlands, 2005. [Google Scholar]

- Brito, M.P.; Bose, N.; Lewis, R.; Alexander, P.; Griffiths, G.; Ferguson, J. The Role of adaptive mission planning and control in persistent autonomous underwater vehicles presence. In Proceedings of the IEEE/OES Autonomous Underwater Vehicles (AUV), Southampton, UK, 24–27 September 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 1–9. [Google Scholar]

- Patrón, P.; Lane, D.M. Adaptive mission planning: The embedded OODA loop. In Proceedings of the 3rd SEAS DTC Technical Conference, Edinburgh, UK, 24–25 June 2008. [Google Scholar]

- Insaurralde, C.C.; Cartwright, J.J.; Petillot, Y.R. Cognitive Control Architecture for autonomous marine vehicles. In Proceedings of the IEEE International Systems Conference SysCon 2012, Vancouver, BC, Canada, 19–22 March 2012; IEEE: Piscataway, NJ, USA, 2012; pp. 1–8. [Google Scholar]

- Endsley, M.R. Designing for Situation Awareness, 2nd ed.; CRC Press: Boca Raton, FL, USA, 2016; ISBN 9780429146732. [Google Scholar]

- MBARI—Autonomy—TREX. Available online: https://web.archive.org/web/20140903170721/https://www.mbari.org/autonomy/TREX/index.htm (accessed on 27 November 2019).

- McGann, C.; Py, F.; Rajan, K.; Thomas, H.; Henthorn, R.; McEwen, R. A deliberative architecture for AUV control. In Proceedings of the IEEE International Conference on Robotics and Automation, Pasadena, CA, USA, 19–23 May 2008; IEEE: Piscataway, NJ, USA, 2008; pp. 1049–1054. [Google Scholar]

- Huebscher, M.C.; McCann, J.A. A survey of autonomic computing—Degrees, models, and applications. ACM Comput. Surv. 2008, 40, 1–28. [Google Scholar] [CrossRef] [Green Version]

- IBM. An architectural blueprint for autonomic computing. IBM 2005, 31, 1–6. Available online: https://www-03.ibm.com/autonomic/pdfs/AC%20Blueprint%20White%20Paper%20V7.pdf (accessed on 20 January 2020).

- Poole, D.L.; Mackworth, A.K. Artificial Intelligence: Foundations of Computational Agents, 2nd ed.; Cambridge University Press: Cambridge, UK, 2017; ISBN 9781107195394. [Google Scholar]

- Rodríguez-Molina, J.; Bilbao, S.; Martínez, B.; Frasheri, M.; Cürüklü, B. An Optimized, Data Distribution Service-Based Solution for Reliable Data Exchange Among Autonomous Underwater Vehicles. Sensors 2017, 17, 1802. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- SWARMS. Available online: http://swarms.eu/overview.html (accessed on 16 December 2019).

- Weyns, D.; Schmerl, B.; Grassi, V.; Malek, S.; Mirandola, R.; Prehofer, C.; Wuttke, J.; Andersson, J.; Giese, H.; Göschka, K.M. On Patterns for Decentralized Control in Self-Adaptive Systems. In Software Engineering for Self-Adaptive Systems II; Springer: Berlin, Germany, 2013; pp. 76–107. [Google Scholar]

- Rødseth, Ø.J. Object-oriented software system for AUV control. Eng. Appl. Artif. Intell. 1991, 4, 269–277. [Google Scholar] [CrossRef]

- Teck, T.Y.; Chitre, M.A.; Vadakkepat, P.; Shahabudeen, S. Design and Development of Command and Control System for Autonomous Underwater Vehicles. 2008. Available online: http://arl.nus.edu.sg/twiki6/pub/ARL/BibEntries/Tan2009a.pdf (accessed on 20 January 2020).

- Teck, T.Y. Design and Development of Command and Control System for Autonomous Underwater Vehicles. Master’s Thesis, National University of Singapore, Singapore, October 2008. [Google Scholar]

- Madsen, H.Ø. Mission Management System for an Autonomous Underwater Vehicle. IFAC Proc. Vol. 1997, 30, 59–63. [Google Scholar] [CrossRef]

- Madsen, H.Ø.; Bjerrum, A.; Krogh, B.; Aps, M. MARTIN—An AUV for Offshore Surveys. In Proceedings of the Oceanology International 96, Brighton, UK, 1 March 1996; pp. 5–8. [Google Scholar]

- McGann, C.; Py, F.; Rajan, K.; Ryan, J.; Henthorn, R. Adaptive control for autonomous underwater vehicles. Proc. Natl. Conf. Artif. Intell. 2008, 3, 1319–1324. [Google Scholar]

- Executive T-Rex for ROS. Available online: http://wiki.ros.org/executive_trex (accessed on 27 November 2019).

- Ropero, F.; Muñoz, P.; R.-Moreno, M.D. A Versatile Executive Based on T-REX for Any Robotic Domain. In Proceedings of the International Conference on Innovative Techniques and Applications of Artificial Intelligence, Cambridge, UK, 11–13 December 2018; pp. 79–91. [Google Scholar]

- RAUVI: Reconfigurable AUV for Intervention. Available online: http://www.irs.uji.es/rauvi/news.html (accessed on 27 November 2019).

- Palomeras, N.; Garcia, J.C.; Prats, M.; Fernandez, J.J.; Sanz, P.J.; Ridao, P. A distributed architecture for enabling autonomous underwater Intervention Missions. In Proceedings of the IEEE International Systems Conference, San Diego, CA, USA, 5–8 April 2010; IEEE: Piscataway, NJ, USA, 2010; pp. 159–164. [Google Scholar]

- García, J.C.; Javier Fernández, J.; Sanz, R.M.P.J.; Prats, M. Towards specification, planning and sensor-based control of autonomous underwater intervention. IFAC Proc. Vol. 2011, 44, 10361–10366. [Google Scholar] [CrossRef]

- Palomeras, N.; Ridao, P.; Carreras, M.; Silvestre, C. Using petri nets to specify and execute missions for autonomous underwater vehicles. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems, St. Louis, MO, USA, 11–15 October 2009; IEEE: Piscataway, NJ, USA, 2009; pp. 4439–4444. [Google Scholar]

- Trident FP7 European Project. Available online: http://www.irs.uji.es/trident/aboutproject.html (accessed on 28 November 2019).

- Atyabi, A.; MahmoudZadeh, S.; Nefti-Meziani, S. Current advancements on autonomous mission planning and management systems: An AUV and UAV perspective. Annu. Rev. Control 2018, 46, 196–215. [Google Scholar] [CrossRef]

- MahmoudZadeh, S.; Powers, D.M.W.; Bairam Zadeh, R. State-of-the-Art in UVs’ Autonomous Mission Planning and Task Managing Approach. In Autonomy and Unmanned Vehicles; Springer: Singapore, 2019; pp. 17–30. [Google Scholar]

- Pacini, F.; Paoli, G.; Kebkal, O.; Kebkal, V.; Kebkal, K.; Bastot, J.; Monteiro, C.; Sucasas, V.; Schipperijn, B. Integrated comunication network for underwater applications: The SWARMs approach. In Proceedings of the Fourth Underwater Communications and Networking Conference (UComms), Lerici, Italy, 28–30 August 2018; IEEE: Piscataway, NJ, USA, 2018; pp. 1–5. [Google Scholar]

- Pacini, F.; Paoli, G.; Cayón, I.; Rivera, T.; Sarmiento, B.; Kebkal, K.; Kebkal, O.; Kebkal, V.; Geelhoed, J.; Schipperijn, B.; et al. The SWARMs Approach to Integration of Underwater and Overwater Communication Sub-Networks and Integration of Heterogeneous Underwater Communication Systems. In Proceedings of the ASME 2018 37th International Conference on Ocean, Offshore and Arctic Engineering, Madrid, Spain, 17–22 June 2018; American Society of Mechanical Engineers: New York, NY, USA, 2018; Volume 7. [Google Scholar]

- About the Data Distribution Service Specification Version 1.4. Available online: https://www.omg.org/spec/DDS/ (accessed on 16 December 2019).

- Li, X.; Bilbao, S.; Martín-Wanton, T.; Bastos, J.; Rodriguez, J. SWARMs Ontology: A Common Information Model for the Cooperation of Underwater Robots. Sensors 2017, 17, 569. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Li, X.; Martínez, J.; Rubio, G.; Gómez, D. Context Reasoning in Underwater Robots Using MEBN. In Proceedings of the Third International Conference on Cloud and Robotics ICCR 2016, Saint Quentin, France, 22–23 November 2016; pp. 22–23. [Google Scholar]

- Li, X.; Martínez, J.-F.; Rubio, G. Towards a Hybrid Approach to Context Reasoning for Underwater Robots. Appl. Sci. 2017, 7, 183. [Google Scholar] [CrossRef] [Green Version]

- Zhai, Z.; Martínez Ortega, J.-F.; Lucas Martínez, N.; Castillejo, P. A Rule-Based Reasoner for Underwater Robots Using OWL and SWRL. Sensors 2018, 18, 3481. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Miloradovic, B.; Curuklu, B.; Ekstrom, M. A genetic planner for mission planning of cooperative agents in an underwater environment. In Proceedings of the IEEE Symposium Series on Computational Intelligence (SSCI), Athens, Greece, 6–9 December 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1–8. [Google Scholar]

- Miloradović, B.; Çürüklü, B.; Ekström, M. A Genetic Mission Planner for Solving Temporal Multi-agent Problems with Concurrent Tasks. In Proceedings of the International Conference on Swarm Intelligence, Fukuoka, Japan, 27 July–1 August 2017; pp. 481–493. [Google Scholar]

- Landa-Torres, I.; Manjarres, D.; Bilbao, S.; Del Ser, J. Underwater Robot Task Planning Using Multi-Objective Meta-Heuristics. Sensors 2017, 17, 762. [Google Scholar] [CrossRef] [PubMed]

- Apache Thrift—Home. Available online: https://thrift.apache.org/ (accessed on 16 December 2019).

- Slee, M.; Agarwal, A.; Kwiatkowski, M. Thrift: Scalable Cross-Language Services Implementation. Facebook White Paper 2007, 5, 1–8. [Google Scholar]

- Rodríguez-Molina, J.; Martínez, B.; Bilbao, S.; Martín-Wanton, T. Maritime Data Transfer Protocol (MDTP): A Proposal for a Data Transmission Protocol in Resource-Constrained Underwater Environments Involving Cyber-Physical Systems. Sensors 2017, 17, 1330. [Google Scholar] [CrossRef] [PubMed] [Green Version]

- Bauer, M.; Boussard, M.; Bui, N.; Francois, C.; Jardak, C.; De Loof, J.; Magerkurth, C.; Meissner, S.; Nettsträter, A.; Olivereau, A.; et al. Internet of Things—Architecture IoT-A, Deliverable D1.5—Final Architectural Reference Model for the IoT v3.0; Technical Report FP7-257521; IoT-A project; 2013; Available online: https://www.researchgate.net/publication/272814818_Internet_of_Things_-_Architecture_IoT-A_Deliverable_D15_-_Final_architecture_reference_model_for_the_IoT_v30 (accessed on 20 January 2020).

- GitHub—Uuvsimulator/Uuv_Plume_Simulator: ROS Nodes to Generate a Turbulent Plume in an Underwater Environment. Available online: https://github.com/uuvsimulator/uuv_plume_simulator (accessed on 11 December 2019).

- Manhaes, M.M.M.; Scherer, S.A.; Voss, M.; Douat, L.R.; Rauschenbach, T. UUV Simulator: A Gazebo-based package for underwater intervention and multi-robot simulation. In Proceedings of the OCEANS 2016 MTS/IEEE Monterey, Monterey, CA, USA, 19–23 September 2016; IEEE: Piscataway, NJ, USA, 2016; pp. 1–8. [Google Scholar]

- GitHub—Uuvsimulator/Uuv_Simulator: Gazebo/ROS Packages for Underwater Robotics Simulation. Available online: https://github.com/uuvsimulator/uuv_simulator (accessed on 11 December 2019).

| Component Name | Location | Component Functionality | Interaction with the MTRR |
|---|---|---|---|
| Supervise Global Mission | Mission Management Tool | Used to show data and create missions | Sends the mission content to the MTRR and receives data back from it about completed stages and state vector data collected from the autonomous vehicles |
| Semantic Query | Data Management | Used to access the storage means of the middleware (ontology, database) | Invoked by the MTRR to transfer the information gathered from the maritime vehicles to the database or the ontology when required |
| Publish/Subscribe (P/S) Manager | Data Management | Used to set the communication paradigm between the middleware and the maritime autonomous vehicles | The MTRR invokes some of its functionalities to subscribe to the topic used for communications |
| Task Reporter | Low-Level Services | Used to transfer collected information to the P/S Manager | Invoked by the MTRR to send information to the P/S Manager |
| Events Reporter | Low-Level Services | Used to highlight unusual events during the mission | Invokes the MTRR to collect information |
| Environment Reporter | Low-Level Services | Used to report information collected about the surroundings of the vehicle | Invokes the MTRR to collect information |

| Vehicle Name | Type | Codename |
|---|---|---|
| Noptilus-1 | AUV | PORTO1 |
| Noptilus-2 | AUV | PORTO2 |
| Fridtjof | AUV | NTNU |
| Telemetron | USV | MAROB |

| Mission | Start Time | High Level Actions | Low Level Actions | Total Actions | Assigned Vehicles (Codename) | Type of Mission |
|---|---|---|---|---|---|---|
| 1 | 10:01:27 | 9 | 14 | 23 | NTNU, PORTO2, MAROB | Real |
| 2 | 10:17:56 | 9 | 0 | 9 | NTNU, PORTO2, MAROB | Real |
| 3 | 11:57:17 | 9 | 14 | 23 | NTNU, PORTO2, MAROB | Simulated |
| 4 | 12:57:58 | 9 | 34 | 43 | NTNU, PORTO2, MAROB | Real |
| 5 | 13:25:55 | 9 | 34 | 43 | NTNU, PORTO2, MAROB | Real |
| 6 | 15:31:05 | 9 | 34 | 43 | NTNU, PORTO2, MAROB | Simulated |
| 7 | 17:10:09 | 12 | 50 | 62 | NTNU, PORTO1, PORTO2, MAROB | Real |
| 8 | 17:58:57 | 3 | 16 | 19 | MAROB | Real |
| 9 | 10:29:28 | 12 | 0 | 12 | NTNU, PORTO1, PORTO2, MAROB | Real |
| 10 | 11:01:59 | 12 | 0 | 12 | NTNU, PORTO1, PORTO2, MAROB | Real |
| 11 | 11:13:54 | 12 | 0 | 12 | NTNU, PORTO1, PORTO2, MAROB | Real |
| 12 | 11:21:25 | 12 | 0 | 12 | NTNU, PORTO1, PORTO2, MAROB | Real |
| 13 | 12:30:29 | 12 | 0 | 12 | NTNU, PORTO1, PORTO2, MAROB | Real |
| 14 | 12:41:11 | 9 | 0 | 9 | NTNU, PORTO1, PORTO2 | Real |
| 15 | 12:55:57 | 9 | 0 | 9 | NTNU, PORTO1, PORTO2 | Real |
| 16 | 13:17:36 | 9 | 0 | 9 | NTNU, PORTO1, PORTO2 | Simulated |
| 17 | 13:39:19 | 12 | 0 | 12 | NTNU, PORTO1, PORTO2, MAROB | Real |
| 18 | 14:12:16 | 12 | 0 | 12 | NTNU, PORTO1, PORTO2, MAROB | Real |
| 19 | 15:19:59 | 9 | 48 | 57 | NTNU, PORTO1, PORTO2 | Real |
| 20 | 15:37:19 | 3 | 16 | 19 | MAROB | Real |
| 21 | 15:40:54 | 3 | 16 | 19 | MAROB | Real |
| 22 | 16:38:04 | 12 | 50 | 62 | NTNU, PORTO1, PORTO2, MAROB | Real |
| 23 | 17:38:34 | 12 | 50 | 62 | NTNU, PORTO1, PORTO2, MAROB | Simulated |
| 24 | 19:33:52 | 3 | 10 | 13 | MAROB | Real |
| 25 | 08:26:21 | 3 | 12 | 15 | MAROB | Real |
| 26 | 09:01:21 | 12 | 50 | 62 | NTNU, PORTO1, PORTO2, MAROB | Real |
| 27 | 09:47:54 | 3 | 16 | 19 | MAROB | Real |
| 28 | 10:00:23 | 3 | 0 | 3 | MAROB | Real |
| 29 | 10:47:27 | 12 | 50 | 62 | NTNU, PORTO1, PORTO2, MAROB | Real |
| 30 | 12:05:20 | 12 | 50 | 62 | NTNU, PORTO1, PORTO2, MAROB | Real |
| 31 | 12:25:24 | 3 | 6 | 9 | MAROB | Real |
| 32 | 12:37:14 | 3 | 10 | 13 | MAROB | Real |
| 33 | 13:20:55 | 3 | 10 | 13 | MAROB | Real |
| 34 | 13:41:14 | 3 | 16 | 19 | MAROB | Real |
| 35 | 14:44:52 | 3 | 16 | 19 | MAROB | Real |
| 36 | 15:11:33 | 12 | 50 | 62 | NTNU, PORTO1, PORTO2, MAROB | Real |
| 37 | 10:36:45 | 3 | 8 | 11 | MAROB | Real |
| 38 | 10:59:09 | 12 | 0 | 12 | NTNU, PORTO1, PORTO2, MAROB | Real |
| 39 | 11:15:00 | 12 | 0 | 12 | NTNU, PORTO1, PORTO2, MAROB | Real |
| 40 | 11:36:15 | 3 | 8 | 11 | MAROB | Real |
| 41 | 11:38:50 | 3 | 8 | 11 | MAROB | Real |
| 42 | 11:55:27 | 12 | 0 | 12 | NTNU, PORTO1, PORTO2, MAROB | Real |
| 43 | 12:11:50 | 12 | 0 | 12 | NTNU, PORTO1, PORTO2, MAROB | Real |
| 44 | 14:17:02 | 3 | 8 | 11 | MAROB | Real |
| 45 | 14:36:24 | 12 | 0 | 12 | NTNU, PORTO1, PORTO2, MAROB | Real |

| Mission | | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| 1 | 91 | 17 | 140 | 13 | 0 | 3 | 7 | 6 |
| 2 | 32 | 5 | 60 | 12 | 9 | 2 | 25 | 9 |
| 3 | 73 | 14 | 121 | 9 | 1 | 4 | 10 | 9 |
| 4 | 111 | 9 | 210 | 11 | 1 | 3 | 10 | 4 |
| 5 | 170 | 12 | 239 | 17 | 1 | 6 | 7 | 12 |
| 6 | 182 | 10 | 212 | 12 | 1 | 2 | 7 | 5 |
| 7 | 170 | 8 | 361 | 14 | 1 | 2 | 8 | 5 |
| 8 | 129 | 10 | 141 | 14 | 1 | 1 | 2 | 11 |
| 9 | 38 | 7 | 74 | 16 | 1 | 5 | 12 | 4 |
| 10 | 48 | 7 | 70 | 25 | 1 | 2 | 9 | 6 |
| 11 | 38 | 6 | 62 | 17 | 4 | 5 | 8 | 13 |
| 12 | 59 | 8 | 70 | 18 | 2 | 11 | 23 | 7 |
| 13 | 56 | 7 | 61 | 15 | 1 | 4 | 19 | 8 |
| 14 | 37 | 5 | 37 | 14 | 0 | 2 | 8 | 10 |
| 15 | 42 | 6 | 41 | 15 | 1 | 2 | 6 | 8 |
| 16 | 35 | 11 | 102 | 28 | 1 | 10 | 15 | 5 |
| 17 | 28 | 7 | 57 | 14 | 0 | 5 | 10 | 5 |
| 18 | 52 | 7 | 67 | 16 | 0 | 3 | 12 | 5 |
| 19 | 162 | 10 | 265 | 9 | 2 | 3 | 6 | 5 |
| 20 | 135 | 21 | 107 | 5 | 1 | 1 | 4 | 35 |
| 21 | 90 | 9 | 122 | 5 | 0 | 2 | 2 | 32 |
| 22 | 200 | 6 | 248 | 12 | 1 | 4 | 12 | 19 |
| 23 | 191 | 10 | 317 | 12 | 0 | 3 | 7 | 8 |
| 24 | 62 | 6 | 65 | 6 | 0 | 1 | 3 | 7 |
| 25 | 53 | 16 | 81 | 4 | 0 | 1 | 2 | 12 |
| 26 | 204 | 6 | 293 | 22 | 2 | 16 | 32 | 13 |
| 27 | 94 | 8 | 147 | 5 | 1 | 1 | 5 | 17 |
| 28 | 17 | 6 | 16 | 10 | 1 | 1 | 4 | 26 |
| 29 | 162 | 10 | 268 | 15 | 1 | 4 | 10 | 4 |
| 30 | 241 | 31 | 265 | 13 | 1 | 2 | 6 | 8 |
| 31 | 34 | 6 | 51 | 9 | 0 | 0 | 4 | 5 |
| 32 | 51 | 8 | 73 | 4 | 0 | 1 | 2 | 6 |
| 33 | 113 | 11 | 199 | 7 | 0 | 1 | 6 | 9 |
| 34 | 94 | 10 | 125 | 4 | 0 | 2 | 3 | 10 |
| 35 | 217 | 33 | 144 | 4 | 1 | 1 | 2 | 18 |
| 36 | 164 | 6 | 286 | 16 | 2 | 5 | 12 | 5 |
| 37 | 138 | 30 | 73 | 4 | 1 | 1 | 5 | 30 |
| 38 | 41 | 6 | 50 | 16 | 2 | 4 | 13 | 7 |
| 39 | 43 | 10 | 104 | 19 | 1 | 6 | 8 | 23 |
| 40 | 67 | 5 | 80 | 5 | 0 | 1 | 3 | 17 |
| 41 | 87 | 10 | 338 | 5 | 0 | 1 | 3 | 7 |
| 42 | 65 | 13 | 83 | 19 | 0 | 4 | 16 | 4 |
| 43 | 74 | 11 | 87 | 38 | 1 | 6 | 10 | 9 |
| 44 | 61 | 10 | 81 | 11 | 0 | 1 | 3 | 7 |
| 45 | 48 | 7 | 59 | 16 | 1 | 7 | 18 | 10 |

| | | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| 1 | 170 | 4 | 1190 | 226 | 44 | 20 | 18 | 1498 |
| 2 | 215 | 5 | 1123 | 228 | 49 | 43 | 27 | 1470 |
| 3 | 199 | 6 | 934 | 230 | 56 | 39 | 16 | 1275 |
| 4 | 149 | 3 | 677 | 158 | 40 | 19 | 14 | 908 |
| 5 | 215 | 5 | 1077 | 263 | 44 | 25 | 19 | 1428 |
| 6 | 229 | 6 | 1069 | 274 | 68 | 38 | 16 | 1465 |
| 7 | 301 | 7 | 1351 | 331 | 58 | 39 | 30 | 1809 |
| 8 | 71 | 2 | 482 | 106 | 30 | 14 | 5 | 637 |
| 9 | 43 | 1 | 211 | 47 | 12 | 5 | 5 | 280 |
| 10 | 285 | 7 | 1322 | 299 | 54 | 42 | 24 | 1741 |
| 11 | 157 | 3 | 705 | 287 | 39 | 21 | 9 | 1061 |
| 12 | 299 | 7 | 1413 | 338 | 58 | 30 | 27 | 1866 |
| 13 | 119 | 3 | 566 | 140 | 19 | 18 | 15 | 758 |
| 14 | 169 | 4 | 658 | 173 | 43 | 29 | 15 | 918 |
| 15 | 217 | 5 | 956 | 257 | 58 | 39 | 17 | 1327 |
| 16 | 253 | 6 | 1151 | 263 | 66 | 31 | 18 | 1529 |
| 17 | 243 | 6 | 1116 | 227 | 51 | 63 | 19 | 1476 |
| 18 | 261 | 7 | 1369 | 330 | 78 | 45 | 20 | 1842 |
| 19 | 161 | 3 | 562 | 119 | 39 | 30 | 8 | 758 |
| 20 | 39 | 1 | 290 | 56 | 11 | 12 | 1 | 370 |
| 21 | 39 | 1 | 310 | 82 | 9 | 5 | 9 | 415 |
| 22 | 127 | 3 | 590 | 127 | 32 | 21 | 15 | 779 |
| 23 | 394 | 8 | 1544 | 387 | 81 | 54 | 28 | 2094 |
| 24 | 71 | 2 | 503 | 79 | 29 | 10 | 11 | 632 |
| 25 | 71 | 2 | 435 | 92 | 16 | 16 | 8 | 567 |
| 26 | 301 | 7 | 1200 | 329 | 75 | 57 | 22 | 1683 |
| 27 | – | – | – | – | – | – | – | – |
| 28 | 71 | 2 | 413 | 113 | 22 | 11 | 9 | 568 |
| 29 | 233 | 5 | 977 | 268 | 57 | 35 | 32 | 1369 |
| 30 | 201 | 4 | 979 | 203 | 63 | 26 | 14 | 1285 |
| 31 | 63 | 2 | 396 | 98 | 31 | 7 | 4 | 536 |
| 32 | 41 | 1 | 262 | 61 | 31 | 5 | 7 | 366 |
| 33 | 71 | 2 | 474 | 92 | 28 | 17 | 12 | 623 |
| 34 | 71 | 2 | 424 | 98 | 21 | 19 | 6 | 568 |
| 35 | 41 | 1 | 285 | 49 | 15 | 7 | 2 | 358 |
| 36 | 237 | 6 | 1080 | 281 | 50 | 25 | 17 | 1453 |
| 37 | 71 | 2 | 576 | 93 | 36 | 13 | 6 | 724 |
| 38 | 301 | 7 | 1250 | 259 | 44 | 44 | 20 | 1617 |
| 39 | 301 | 7 | 1226 | 309 | 58 | 32 | 23 | 1648 |
| 40 | 41 | 1 | 288 | 59 | 16 | 6 | 0 | 369 |
| 41 | 65 | 2 | 456 | 99 | 33 | 17 | 11 | 616 |
| 42 | 227 | 5 | 1231 | 265 | 43 | 40 | 28 | 1607 |
| 43 | 259 | 6 | 1195 | 263 | 56 | 37 | 14 | 1565 |
| 44 | 71 | 2 | 495 | 163 | 28 | 15 | 13 | 714 |
| 45 | 267 | 7 | 1133 | 287 | 65 | 32 | 13 | 1530 |

| Activity | Average Time (ms) | Contribution (%) |
|---|---|---|
| Getting vehicle info | 5.26 | 39.39 |
| Storing task status in database | 1.21 | 9.09 |
| Sending task status to MMT | 0.28 | 2.13 |
| Assigning next action | 6.50 | 48.69 |
| Other activities | 0.09 | 0.70 |
| TOTAL AVERAGE TIME | 13.35 | 100.00 |
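The Contribution (%) column is consistent with each activity's average time divided by the total average time. As a minimal sketch, the Python below recomputes those shares from the printed two-decimal averages and checks them against the reported percentages. The helper names and the tolerances (0.03 ms for the total, 0.1 percentage points for each share, chosen to absorb the rounding of the displayed values) are assumptions of this example, not part of the described mission manager.

```python
# Average times (ms) and reported contributions (%) copied from the table above.
ACTIVITIES = {
    "Getting vehicle info": (5.26, 39.39),
    "Storing task status in database": (1.21, 9.09),
    "Sending task status to MMT": (0.28, 2.13),
    "Assigning next action": (6.50, 48.69),
    "Other activities": (0.09, 0.70),
}
REPORTED_TOTAL_MS = 13.35


def contributions(averages_ms):
    """Return each activity's share (%) of the summed average times."""
    total = sum(averages_ms.values())
    return {name: 100.0 * t / total for name, t in averages_ms.items()}


def check_table(activities, reported_total, tol_ms=0.03, tol_pct=0.1):
    """Check that the table is self-consistent up to two-decimal rounding.

    Raises ValueError if the averages do not add up to the reported total.
    Returns (activity, computed_pct, reported_pct) tuples for shares that
    disagree by more than tol_pct; an empty list means no mismatch.
    """
    averages = {name: avg for name, (avg, _) in activities.items()}
    if abs(sum(averages.values()) - reported_total) > tol_ms:
        raise ValueError("activity averages do not add up to the reported total")
    computed = contributions(averages)
    return [
        (name, round(computed[name], 2), pct)
        for name, (_, pct) in activities.items()
        if abs(computed[name] - pct) > tol_pct
    ]


if __name__ == "__main__":
    shares = contributions({n: avg for n, (avg, _) in ACTIVITIES.items()})
    for name, pct in shares.items():
        print(f"{name}: {pct:.2f} %")
    print("mismatches:", check_table(ACTIVITIES, REPORTED_TOTAL_MS))
```

Running the module prints the recomputed shares and an empty mismatch list; the small residual differences (a few hundredths of a percentage point) come from the averages being shown with only two decimals.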

| 1 | 156 | 0 | 1694 | 10,962 | – | 86 | 12,742 |
| 2 | 87 | 0 | 884 | 5320 | – | 56 | 6260 |
| 3 | 128 | 0 | 1330 | 7037 | – | 72 | 8439 |
| 4 | 334 | 14 | 3072 | 17,470 | 398 | 171 | 21,111 |
| 5 | 528 | 31 | 5202 | 26,807 | 878 | 274 | 33,161 |
| 6 | 305 | 26 | 3013 | 15,942 | 751 | 182 | 19,888 |
| 7 | 758 | 139 | 7063 | 37,239 | 4880 | 417 | 49,599 |
| 8 | 130 | 0 | 1173 | 6620 | – | 96 | 7889 |
| 9 | 1608 | 0 | 14,868 | 77,662 | – | 779 | 93,309 |
| 10 | 278 | 0 | 2832 | 13,490 | – | 119 | 16,441 |
| 11 | 48 | 0 | 390 | 2551 | – | 31 | 2972 |
| 12 | 303 | 0 | 2782 | 14,401 | – | 148 | 17,331 |
| 13 | 124 | 0 | 1177 | 6196 | – | 75 | 7448 |
| 14 | 92 | 0 | 776 | 4296 | – | 41 | 5113 |
| 15 | 493 | 0 | 4668 | 23,073 | – | 268 | 28,009 |
| 16 | 181 | 0 | 1859 | 11,483 | – | 69 | 13,411 |
| 17 | 276 | 0 | 2307 | 15,626 | – | 128 | 18,061 |
| 18 | 141 | 0 | 1308 | 8423 | – | 68 | 9799 |
| 19 | 279 | 48 | 2484 | 14,219 | 1354 | 172 | 18,219 |
| 20 | 13 | 0 | 164 | 867 | – | 4 | 1035 |
| 21 | 46 | 0 | 485 | 2372 | – | 30 | 2887 |
| 22 | 289 | 19 | 2557 | 14,106 | 517 | 166 | 17,346 |
| 23 | 851 | 137 | 7259 | 41,090 | 4554 | 504 | 53,405 |
| 24 | 94 | 0 | 873 | 4923 | – | 38 | 5834 |
| 25 | 216 | 0 | 1808 | 11,413 | – | 79 | 13,300 |
| 26 | 490 | 69 | 4504 | 23,836 | 1989 | 271 | 30,600 |
| 27 | – | – | – | – | – | – | – |
| 28 | 180 | 0 | 1790 | 8297 | – | 104 | 10,191 |
| 29 | 321 | 59 | 3173 | 15,605 | 1786 | 208 | 20,772 |
| 30 | 176 | 18 | 1619 | 10,429 | 499 | 86 | 12,633 |
| 31 | 54 | 0 | 524 | 2824 | – | 23 | 3371 |
| 32 | 14 | 0 | 137 | 810 | – | 9 | 956 |
| 33 | 110 | 0 | 1040 | 5278 | – | 64 | 6382 |
| 34 | 155 | 0 | 1513 | 7225 | – | 59 | 8797 |
| 35 | 127 | 0 | 1192 | 6132 | – | 66 | 7390 |
| 36 | 443 | 43 | 4102 | 20,727 | 1194 | 211 | 26,234 |
| 37 | 53 | 0 | 471 | 3663 | – | 36 | 4170 |
| 38 | 336 | 22 | 3359 | 17,326 | 617 | 189 | 21,491 |
| 39 | 228 | 8 | 2242 | 10,762 | 219 | 107 | 13,330 |
| 40 | 7 | 0 | 75 | 438 | – | 5 | 518 |
| 41 | 45 | 0 | 404 | 2828 | – | 21 | 3253 |
| 42 | 96 | 0 | 961 | 4807 | – | 57 | 5825 |
| 43 | 160 | 5 | 1473 | 9557 | 129 | 57 | 11,216 |
| 44 | 56 | 0 | 575 | 2955 | – | 21 | 3551 |
| 45 | 163 | 2 | 1563 | 8174 | 50 | 99 | 9886 |

| Activity | Average Time (ms) | Contribution (%) |
|---|---|---|
| Storing vector in the database | 9.36 | 10.24 |
| Storing vector in the ontology | 50.61 | 55.34 |
| Storing salinity in the ontology | 30.96 | 33.85 |
| Other activities | 0.52 | 0.57 |
| TOTAL AVERAGE TIME | 91.45 | 100.00 |
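The per-activity breakdowns above report, for each processing step, an average time and its share of the total. One way such figures could be gathered is sketched below in Python: a hypothetical `ActivityProfiler` that times each step with `time.perf_counter` and then derives averages and shares. The class, its method names, and the activity labels used with it are illustrative assumptions, not the instrumentation used in the SWARMs mission manager.

```python
import time
from collections import defaultdict
from contextlib import contextmanager


class ActivityProfiler:
    """Hypothetical helper that accumulates durations per named activity."""

    def __init__(self):
        # activity name -> list of measured durations, in milliseconds
        self._samples = defaultdict(list)

    def record(self, activity, duration_ms):
        """Store one externally measured duration, in milliseconds."""
        self._samples[activity].append(duration_ms)

    @contextmanager
    def measure(self, activity):
        """Time the enclosed block with a monotonic clock and record it."""
        start = time.perf_counter()
        try:
            yield
        finally:
            self.record(activity, (time.perf_counter() - start) * 1000.0)

    def summary(self):
        """Return {activity: (average_ms, contribution_pct)}.

        Each average is taken over the calls in which that activity ran.
        Shares are relative to the sum of the per-activity averages, which
        mirrors the tables above, where TOTAL AVERAGE TIME equals (up to
        rounding) the sum of the activity rows.
        """
        averages = {name: sum(v) / len(v) for name, v in self._samples.items()}
        total = sum(averages.values())
        return {
            name: (avg, 100.0 * avg / total if total else 0.0)
            for name, avg in averages.items()
        }
```

In use, each processing step would be wrapped as `with profiler.measure("Storing vector in the ontology"): ...`. Because averages are computed per activity, an occasional step (such as storing salinity) is averaged only over its own executions rather than over every processed message.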

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Lucas Martínez, N.; Martínez-Ortega, J.-F.; Rodríguez-Molina, J.; Zhai, Z. Proposal of an Automated Mission Manager for Cooperative Autonomous Underwater Vehicles. Appl. Sci. 2020, 10, 855. https://doi.org/10.3390/app10030855
