Sensory Panel Performance Evaluation—Comprehensive Review of Practical Approaches

Abstract

Featured Application

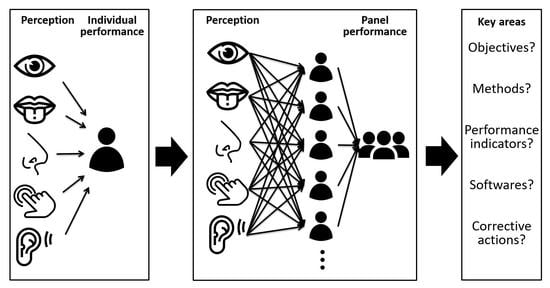

1. Introduction

2. Materials and Methods

3. Panelist Selection, Monitoring and Product-Specific Tests

4. Methods, Models and Frameworks in Sensory Panel Evaluation

4.1. Classification of Panel Performance Tools according to Sensory Method Types

4.2. Indicators and Effects of Individual Assessor and Panel Performance

- Discrimination of an assessor/panel: the ability of the assessor/panel to exhibit significant differences among products (assessor: one-way ANOVA with product as the fixed factor; panel: two-way ANOVA with product and assessor as fixed factors, plus their interaction).

- Agreement of an assessor/panel: the ability of different panels or assessors to exhibit the same product differences when scoring a given attribute for the same set of products (assessor: distance from and correlation with the panel median; panel: significant differences among assessors).

- Repeatability of an assessor: the degree of homogeneity between replicated assessments of the same product. Repeatability of a panel: the agreement in assessments of the same set of products under similar test conditions by the same assessors at different time points (assessor: one-way ANOVA with product as the fixed factor; panel: two-way ANOVA with product and assessor as fixed factors, plus their interaction).

- Reproducibility of the panel: the agreement in assessments of the same set of products under similar test conditions by different assessors (panel) at different time points (between-session effect in a three-way ANOVA).
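
The assessor-level indicators listed above can be illustrated with a minimal sketch. It assumes a hypothetical data layout (`scores[assessor][product]` holding replicate scores for one attribute) and invented numbers. Discrimination is summarized by the F-ratio of a one-way ANOVA with product as the fixed factor, repeatability by the root of the residual mean square (RMSE), and agreement by the Pearson correlation of an assessor's product means with the panel median. Only the Python standard library is used, so no p-value is computed; judging significance would require an F distribution from a statistics package.

```python
from math import sqrt
from statistics import mean, median

# Hypothetical scores for ONE attribute: scores[assessor][product] -> replicates.
scores = {
    "A1": {"P1": [2.0, 2.5], "P2": [5.0, 5.5], "P3": [8.0, 7.5]},
    "A2": {"P1": [3.0, 2.0], "P2": [6.0, 5.0], "P3": [7.0, 8.0]},
    "A3": {"P1": [5.0, 4.0], "P2": [4.0, 6.0], "P3": [5.0, 5.0]},
}


def one_way_anova(by_product):
    """Return (F, MSE) of a one-way ANOVA with product as the only factor."""
    groups = list(by_product.values())
    values = [v for g in groups for v in g]
    grand = mean(values)
    ss_between = sum(len(g) * (mean(g) - grand) ** 2 for g in groups)
    ss_within = sum((v - mean(g)) ** 2 for g in groups for v in g)
    ms_between = ss_between / (len(groups) - 1)
    mse = ss_within / (len(values) - len(groups))
    f_ratio = ms_between / mse if mse > 0 else float("inf")
    return f_ratio, mse


def pearson(x, y):
    """Pearson correlation of two equally long sequences."""
    mx, my = mean(x), mean(y)
    num = sum((a - mx) * (b - my) for a, b in zip(x, y))
    den = sqrt(sum((a - mx) ** 2 for a in x) * sum((b - my) ** 2 for b in y))
    return num / den if den > 0 else float("nan")


products = sorted(next(iter(scores.values())))
product_means = {a: [mean(scores[a][p]) for p in products] for a in scores}
# Panel median of the assessors' product means, one value per product.
panel_median = [
    median(product_means[a][i] for a in scores) for i in range(len(products))
]

report = {}
for assessor, by_product in scores.items():
    f_ratio, mse = one_way_anova(by_product)
    report[assessor] = {
        "F_product": f_ratio,          # discrimination (larger = better)
        "RMSE": sqrt(mse),             # repeatability (smaller = better)
        "r_to_median": pearson(product_means[assessor], panel_median),  # agreement
    }

for assessor, row in report.items():
    print(assessor, {k: round(v, 3) for k, v in row.items()})
```

In this invented data set, assessor A3 separates the products far less clearly than A1 and replicates less consistently, which shows up as a small F-ratio and a larger RMSE.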

4.3. Structure and Models of Sensory Data

5. Software

- Panelist recruitment, training, test design, scoresheet editing, test implementation, statistical analysis and reporting. Such programs include the following: Compusense (Compusense 20), Fizz, RedJade, EyeQuestion (V12021), SIMS (SIMS Sensory Software Cloud).

- Specialized statistical analysis and visualization of test data. Typical examples are Senstools, XLSTAT (2021.5) and SensoMineR (V3.1-5-1).

- Measuring panel performance or test performance parameters: PanelCheck (V1.4.2), V-Power, SenseCheck.

- Compusense Cloud (Compusense, 679 Southgate Drive, Guelph, ON N1G 4S2, Canada) https://compusense.com/en/ accessed on 14 December 2021

- Fizz (Biosystemes, 9, rue des Mardors, 21560 Couternon, France) http://www.biosystemes.com/fizz.php accessed on 14 December 2021

- Red Jade Sensory (Tragon Corporation, 350 Bridge Parkway, Redwood Shores, CA 94065, USA) http://www.redjade.net/ accessed on 14 December 2021

- EyeQuestion (Nieuwe Aamsestraat 90D Elst (Gld), PO Box 206 NL-6660 AE The Netherlands) https://eyequestion.nl/ accessed on 14 December 2021

- TimeSens (l’Institut National de Recherche Agronomique (INRA), 35 rue Parmentier, 21000 Dijon, France) http://www.timesens.com/contact.aspx accessed on 14 December 2021

- SIMS (Sensory Computer Systems: 144 Summit Avenue, Berkeley Heights, NJ, USA) http://www.sims2000.com/ accessed on 14 December 2021

- Smart Sensory Box (Via Rockefeller 54, 07100 Sassari, Italy) https://www.smartsensorybox.com accessed on 14 December 2021

- XLSTAT (Addinsoft Corporation, 40, rue Damrémont, 75018 Paris, France) https://www.xlstat.com/en/ accessed on 14 December 2021

- Senstools (OP&P Product Research BV, Burgemeester Reigerstraat 89, 3581 KP Utrecht, The Netherlands) http://www.senstools.com/ accessed on 14 December 2021

- SensoMineR (The R Foundation for Statistical Computing, Institute for Statistics and Mathematics, Wirtschaftsuniversität Wien, Augasse 2-6, 1090 Vienna, Austria) http://sensominer.free.fr/ accessed on 14 December 2021

- SensoMaker (Federal University of Lavras, CP 3037, 37200-000 Lavras-MG, Brazil) http://ufla.br/sensomaker/ accessed on 14 December 2021

- Chemoface (Federal University of Lavras, CP 3037, 37200-000 Lavras-MG, Brazil) http://ufla.br/chemoface/ accessed on 14 December 2021

- PanelCheck (Nofima, Breivika, PO Box 6122, NO-9291 Tromsø, Norway, Danish Technical University (DTU), Informatics and Mathematical Modelling, Lyngby, Denmark) http://www.panelcheck.com/ accessed on 14 December 2021

- SenseCheck (AROXA™, Cara Technology Limited, Bluebird House, Mole Business Park, Station Road, Leatherhead, Surrey KT22 7BA, UK) https://www.aroxa.com/about-sensory-software accessed on 14 December 2021

- V-Power (OP&P Product Research BV, Burgemeester Reigerstraat 89, 3581 KP Utrecht, The Netherlands) http://www.senstools.com/v-power.html accessed on 14 December 2021

- Design Express (Qi Statistics Ltd., Ruscombe Lane, RG10 9JN Reading, UK) https://www.qistatistics.co.uk/product/design-express/ accessed on 14 December 2021

- SenPAQ (Qi Statistics Ltd., Ruscombe Lane, RG10 9JN Reading, UK) https://www.qistatistics.co.uk/product/senpaq/ accessed on 14 December 2021

- ConsumerCheck (Nofima, Norway, Danish Technical University (DTU), Denmark, University of Macerata, Italy, Stellenbosch University, South Africa, CSIRO, Australia) https://consumercheck.co/ accessed on 14 December 2021

- OptiPAQ (Qi Statistics Ltd., Ruscombe Lane, RG10 9JN Reading, UK) https://www.qistatistics.co.uk/product/optipaq/ accessed on 14 December 2021

- MaxDiff (Best-Worst) Scaling Apps (Qi Statistics Ltd., Ruscombe Lane, RG10 9JN Reading, UK) https://www.qistatistics.co.uk/product/maxdiff-best-worst-scaling-apps/ accessed on 14 December 2021

- QualiSense (CAMO Software Inc, One Woodbridge Center Suite 319 Woodbridge, NJ 07095, USA) http://www.solutions4u-asia.com/pdt/cm/CM_Unscrambler-Quali%20Sense.html accessed on 14 December 2021

- RapidCheck (Nofima, Breivika, PO Box 6122, NO-9291 Tromsø, Norway) http://nofima.no/en/forskning/naringsnytte/learn-to-taste-yourself/ accessed on 14 December 2021

- SensoMineR https://cran.r-project.org/web/packages/SensoMineR/SensoMineR.pdf accessed on 14 December 2021

- FactoMineR https://cran.r-project.org/web/packages/FactoMineR/FactoMineR.pdf accessed on 14 December 2021

- sensR https://cran.r-project.org/web/packages/sensR/sensR.pdf accessed on 14 December 2021

- lmerTest https://cran.r-project.org/web/packages/lmerTest/lmerTest.pdf accessed on 14 December 2021

- SensMixed https://cran.r-project.org/web/packages/SensMixed/SensMixed.pdf accessed on 14 December 2021

- mumm https://cran.r-project.org/web/packages/mumm/mumm.pdf accessed on 14 December 2021

- Examination of discriminatory abilities (using samples with a certain degree of difference);

- Testing of aroma identification abilities (using samples to which special aromas with known concentration and purity have been added);

- Scale usage analysis (using series of samples covering a wide range of intensities in a single flavor);

- Statistical analysis of repeated evaluations of samples (by analyzing routine test tasks evaluated by assessors).
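
As an illustration of the first check in the list above, the sketch below evaluates an assessor's discriminatory ability from a series of triangle tests. The choice of the triangle test is an assumption made here for illustration, not a procedure prescribed by the source; the function name `triangle_p_value` and the example counts are hypothetical. Under pure guessing, the probability of picking the odd sample in a triangle test is 1/3, so the number of correct answers can be compared with a binomial distribution.

```python
from math import comb


def triangle_p_value(n_trials, n_correct, p_guess=1 / 3):
    """One-sided exact binomial p-value: P(X >= n_correct) under guessing."""
    if not 0 <= n_correct <= n_trials:
        raise ValueError("n_correct must lie between 0 and n_trials")
    return sum(
        comb(n_trials, k) * p_guess**k * (1 - p_guess) ** (n_trials - k)
        for k in range(n_correct, n_trials + 1)
    )


# Hypothetical screening result: 8 correct identifications in 12 triangle tests.
p = triangle_p_value(12, 8)
print(f"p = {p:.4f}")
```

A small p-value indicates that the assessor identified the odd sample more often than guessing alone would explain; the threshold to apply is a decision for the panel leader.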

- Matlab (MathWorks Inc., 3 Apple Hill Drive Natick, MA 01760-2098, USA) https://www.mathworks.com/products/matlab.html accessed on 14 December 2021

- SPSS Statistics (IBM Corporation Software Group, Route 100 Somers, NY 10589, USA) https://www.ibm.com/se-en/products/spss-statistics accessed on 14 December 2021

- Statistica (StatSoft, Inc. 2300 East 14th Street Tulsa, OK 74104, USA) http://www.statsoft.com/Products/STATISTICA-Features accessed on 14 December 2021

- SAS (SAS Institute Inc., 100 SAS Campus Drive, Cary, NC 27513-2414, USA) https://www.sas.com/en_us/home.html accessed on 14 December 2021

- Unscrambler (CAMO Software Inc., One Woodbridge Center Suite 319 Woodbridge, NJ 07095, USA) https://www.camo.com/unscrambler/ accessed on 14 December 2021

- R-project (The R Foundation for Statistical Computing, Institute for Statistics and Mathematics, Wirtschaftsuniversität Wien, Augasse 2-6, 1090 Vienna, Austria) https://www.r-project.org/ accessed on 14 December 2021

- Palisade (Palisade Corporation, 798 Cascadilla Street, Ithaca, NY 14850, USA) https://www.palisade-br.com/stattools/testimonials.asp accessed on 14 December 2021

- SYSTAT (Systat Software, Inc. 501 Canal Blvd, Suite E, Point Richmond, CA 94804-2028, USA) https://systatsoftware.com/ accessed on 14 December 2021

6. Conclusions and Outlook

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Stone, H. Example Food: What Are Its Sensory Properties and Why Is That Important? NPJ Sci. Food 2018, 2, 11.

2. Lawless, H.T.; Heymann, H. Sensory Evaluation of Food: Principles and Practices. In Food Science Text Series, 2nd ed.; Springer: New York, NY, USA, 2010; ISBN 978-1-4419-6487-8.

3. ISO 8586. Sensory Analysis—General Guidelines for the Selection, Training and Monitoring of Selected Assessors and Expert Sensory Assessors; ISO: Geneva, Switzerland, 2012.

4. Dinnella, C.; Pierguidi, L.; Spinelli, S.; Borgogno, M.; Gallina Toschi, T.; Predieri, S.; Lavezzi, G.; Trapani, F.; Tura, M.; Magli, M.; et al. Remote Testing: Sensory Test during COVID-19 Pandemic and Beyond. Food Qual. Prefer. 2022, 96, 104437.

5. Moussour, M.; Lavarde, M.; Pensé-Lhéritier, A.-M.; Bouton, F. Sensory Analysis of Cosmetic Powders: Personal Care Ingredients and Emulsions. Int. J. Cosmet. Sci. 2017, 39, 83–89.

6. Rogers, L. Sensory Panel Management: A Practical Handbook for Recruitment, Training and Performance; Woodhead Publishing: Shaston, UK, 2017; ISBN 978-0-08-101115-7.

7. ISO 5492. Sensory Analysis—Vocabulary; ISO: Geneva, Switzerland, 2008.

8. Meilgaard, M.C.; Carr, B.T.; Civille, G.V. Sensory Evaluation Techniques, 4th ed.; CRC Press: Boca Raton, FL, USA, 2006; ISBN 978-0-8493-3839-7.

9. Raithatha, C.; Rogers, L. Panel Quality Management: Performance, Monitoring and Proficiency. In Descriptive Analysis in Sensory Evaluation; John Wiley & Sons: Hoboken, NJ, USA, 2018; pp. 113–164. ISBN 978-0-470-67139-9.

10. Bourne, M. Food Texture and Viscosity, 2nd ed.; Academic Press: San Diego, CA, USA, 2002.

11. ISO 11036. Sensory Analysis—Methodology—Texture Profile; ISO: Geneva, Switzerland, 2020.

12. Aprea, E.; Charles, M.; Endrizzi, I.; Corollaro, M.L.; Betta, E.; Biasioli, F.; Gasperi, F. Sweet Taste in Apple: The Role of Sorbitol, Individual Sugars, Organic Acids and Volatile Compounds. Sci. Rep. 2017, 7, 44950.

13. Næs, T.; Brockhoff, P.B.; Tomic, O. Statistics for Sensory and Consumer Science, 1st ed.; John Wiley & Sons, Ltd.: Hoboken, NJ, USA, 2010.

14. Romano, R.; Vestergaard, J.S.; Kompany-Zareh, M.; Bredie, W.L.P. Monitoring Panel Performance Within and between Sensory Experiments by Multi-Way Analysis. In Classification and Multivariate Analysis for Complex Data Structures; Fichet, B., Piccolo, D., Verde, R., Vichi, M., Eds.; Springer: Berlin/Heidelberg, Germany, 2011; pp. 335–342.

15. Bleibaum, R.N.; Kern, M.J.; Thomas, H. 24—Contextual Product Testing for Small to Medium Sized Enterprises (SMEs). In Context; Meiselman, H.L., Ed.; Woodhead Publishing: Shaston, UK, 2019; pp. 501–520. ISBN 978-0-12-814495-4.

16. Djekic, I.; Lorenzo, J.M.; Munekata, P.E.S.; Gagaoua, M.; Tomasevic, I. Review on Characteristics of Trained Sensory Panels in Food Science. J. Texture Stud. 2021, 52, 501–509.

17. Halagarda, M.; Suwała, G. Sensory Optimisation in New Food Product Development: A Case Study of Polish Apple Juice. Ital. J. Food Sci. 2018, 30, 317–335.

18. Giacalone, D. Chapter 4—Sensory and Consumer Approaches for Targeted Product Development in the Agro-Food Sector. In Case Studies in the Traditional Food Sector; Cavicchi, A., Santini, C., Eds.; Woodhead Publishing Series in Food Science, Technology and Nutrition; Woodhead Publishing: Shaston, UK, 2018; pp. 91–128. ISBN 978-0-08-101007-5.

19. King, B.M.; Arents, P.; Moreau, N. Cost/Efficiency Evaluation of Descriptive Analysis Panels—I. Panel Size. Food Qual. Prefer. 1995, 6, 245–261.

20. Zamora, M.C.; Guirao, M. Performance Comparison Between Trained Assessors and Wine Experts Using Specific Sensory Attributes. J. Sens. Stud. 2004, 19, 530–545.

21. Pinto, F.S.T.; Fogliatto, F.S.; Qannari, E.M. A Method for Panelists’ Consistency Assessment in Sensory Evaluations Based on Cronbach’s Alpha Coefficient. Food Qual. Prefer. 2014, 32, 41–47.

22. Monteleone, E.; Langstaff, S. Olive Oil Sensory Science; John Wiley & Sons: Hoboken, NJ, USA, 2013; ISBN 978-1-118-33250-4.

23. Rébufa, C.; Pinatel, C.; Artaud, J.; Girard, F. A Comparative Study of the Main International Extra Virgin Olive Oil Competitions: Their Impact on Producers and Consumers. Trends Food Sci. Technol. 2021, 107, 445–454.

24. Borràs, E.; Ferré, J.; Boqué, R.; Mestres, M.; Aceña, L.; Calvo, A.; Busto, O. Prediction of Olive Oil Sensory Descriptors Using Instrumental Data Fusion and Partial Least Squares (PLS) Regression. Talanta 2016, 155, 116–123.

25. Delgado, C.; Guinard, J.-X. How Do Consumer Hedonic Ratings for Extra Virgin Olive Oil Relate to Quality Ratings by Experts and Descriptive Analysis Ratings? Food Qual. Prefer. 2011, 22, 213–225.

26. Pearson, W.; Schmidtke, L.; Francis, I.L.; Blackman, J.W. An Investigation of the Pivot© Profile Sensory Analysis Method Using Wine Experts: Comparison with Descriptive Analysis and Results from Two Expert Panels. Food Qual. Prefer. 2020, 83, 103858.

27. da Costa, A.C.V.; Sousa, J.M.B.; da Silva, M.A.A.P.; Garruti, D.; dos Santos, G.; Madruga, M.S. Sensory and Volatile Profiles of Monofloral Honeys Produced by Native Stingless Bees of the Brazilian Semiarid Region. Food Res. Int. 2018, 105, 110–120.

28. Piana, M.L.; Oddo, L.P.; Bentabol, A.; Bruneau, E.; Bogdanov, S.; Declerck, C.G. Sensory Analysis Applied to Honey: State of the Art. Apidologie 2004, 35, S26–S37.

29. Pérez-Elortondo, F.J.; Symoneaux, R.; Etaio, I.; Coulon-Leroy, C.; Maître, I.; Zannoni, M. Current Status and Perspectives of the Official Sensory Control Methods in Protected Designation of Origin Food Products and Wines. Food Control 2018, 88, 159–168.

30. ISO 20613. Sensory Analysis—General Guidance for the Application of Sensory Analysis in Quality Control; ISO: Geneva, Switzerland, 2019.

31. Smith, A.M.; McSweeney, M.B. Partial Projective Mapping and Ultra-Flash Profile with and without Red Light: A Case Study with White Wine. J. Sens. Stud. 2019, 34, e12528.

32. Csambalik, L.; Divéky-Ertsey, A.; Pap, Z.; Orbán, C.; Stégerné Máté, M.; Gere, A.; Stefanovits-Bányai, É.; Sipos, L. Coherences of Instrumental and Sensory Characteristics: Case Study on Cherry Tomatoes. J. Food Sci. 2014, 79, C2192–C2202.

33. Sipos, L.; Ficzek, G.; Kókai, Z.; Tóth, M. New Multiresistant Apple Cultivars—Complex Assessment of Sensory and Some Instrumental Attributes. Acta Aliment. 2013, 42, 264–274.

34. Bavay, C.; Symoneaux, R.; Maître, I.; Kuznetsova, A.; Brockhoff, P.B.; Mehinagic, E. Importance of Fruit Variability in the Assessment of Apple Quality by Sensory Evaluation. Postharvest Biol. Technol. 2013, 77, 67–74.

35. Corollaro, M.L.; Aprea, E.; Endrizzi, I.; Betta, E.; Demattè, M.L.; Charles, M.; Bergamaschi, M.; Costa, F.; Biasioli, F.; Corelli Grappadelli, L.; et al. A Combined Sensory-Instrumental Tool for Apple Quality Evaluation. Postharvest Biol. Technol. 2014, 96, 135–144.

36. Bernhardt, B.; Sipos, L.; Kókai, Z.; Gere, A.; Szabó, K.; Bernáth, J.; Sárosi, S. Comparison of Different Ocimum Basilicum L. Gene Bank Accessions Analyzed by GC–MS and Sensory Profile. Ind. Crop. Prod. 2015, 67, 498–508.

37. Sipos, L.; Végh, R.; Bodor, Z.; Zaukuu, J.-L.Z.; Hitka, G.; Bázár, G.; Kovacs, Z. Classification of Bee Pollen and Prediction of Sensory and Colorimetric Attributes—A Sensometric Fusion Approach by e-Nose, e-Tongue and NIR. Sensors 2020, 20, 6768.

38. Chun, S.; Chambers, E.; Han, I. Development of a Sensory Flavor Lexicon for Mushrooms and Subsequent Characterization of Fresh and Dried Mushrooms. Foods 2020, 9, 980.

39. Chambers, D.H.; Allison, A.-M.A.; Chambers, E., IV. Training Effects on Performance of Descriptive Panelists. J. Sens. Stud. 2004, 19, 486–499.

40. Di Donfrancesco, B.; Gutierrez Guzman, N.; Chambers, E., IV. Comparison of Results from Cupping and Descriptive Sensory Analysis of Colombian Brewed Coffee. J. Sens. Stud. 2014, 29, 301–311.

41. Varela, P.; Ares, G. Sensory Profiling, the Blurred Line between Sensory and Consumer Science. A Review of Novel Methods for Product Characterization. Food Res. Int. 2012, 48, 893–908.

42. O’Mahony, M. Who Told You the Triangle Test Was Simple? Food Qual. Prefer. 1995, 6, 227–238.

43. ISO 16820. Sensory Analysis—Methodology—Sequential Analysis; ISO: Geneva, Switzerland, 2019.

44. ISO 11132. Sensory Analysis—Methodology—Guidelines for Monitoring the Performance of a Quantitative Sensory Panel; ISO: Geneva, Switzerland, 2012.

45. ISO 13299. Sensory Analysis—Methodology—General Guidance for Establishing a Sensory Profile; ISO: Geneva, Switzerland, 2016.

46. Kilcast, D. Sensory Analysis for Food and Beverage Quality Control: A Practical Guide; Elsevier: Amsterdam, The Netherlands, 2010; ISBN 978-1-84569-951-2.

47. Brockhoff, P.B. Statistical Testing of Individual Differences in Sensory Profiling. Food Qual. Prefer. 2003, 14, 425–434.

48. Brockhoff, P.B.; Schlich, P.; Skovgaard, I. Taking Individual Scaling Differences into Account by Analyzing Profile Data with the Mixed Assessor Model. Food Qual. Prefer. 2015, 39, 156–166.

49. Derndorfer, E.; Baierl, A.; Nimmervoll, E.; Sinkovits, E. A Panel Performance Procedure Implemented in R. J. Sens. Stud. 2005, 20, 217–227.

50. Kermit, M.; Lengard, V. Assessing the Performance of a Sensory Panel–Panellist Monitoring and Tracking. J. Chemom. 2005, 19, 154–161.

51. Sipos, L.; Ladányi, M.; Gere, A.; Kókai, Z.; Kovács, S. Panel Performance Monitoring by Poincaré Plot: A Case Study on Flavoured Bottled Waters. Food Res. Int. 2017, 99, 198–205.

52. Pineau, N.; Moser, M.; Rawyler, F.; Lepage, M.; Antille, N.; Rytz, A. Design of Experiment with Sensory Data: A Pragmatic Data Analysis Approach. J. Sens. Stud. 2019, 34, e12489.

53. ISO 29842. Sensory Analysis—Methodology—Balanced Incomplete Block Designs; ISO: Geneva, Switzerland, 2011.

54. Peltier, C.; Visalli, M.; Schlich, P. Multiplicative Decomposition of the Scaling Effect in the Mixed Assessor Model into a Descriptor-Specific and an Overall Coefficients. Food Qual. Prefer. 2016, 48, 268–273.

55. Peltier, C.; Brockhoff, P.B.; Visalli, M.; Schlich, P. The MAM-CAP Table: A New Tool for Monitoring Panel Performances. Food Qual. Prefer. 2014, 32, 24–27.

56. Lepage, M.; Neville, T.; Rytz, A.; Schlich, P.; Martin, N.; Pineau, N. Panel Performance for Temporal Dominance of Sensations. Food Qual. Prefer. 2014, 38, 24–29.

57. Tomic, O.; Nilsen, A.; Martens, M.; Næs, T. Visualization of Sensory Profiling Data for Performance Monitoring. LWT-Food Sci. Technol. 2007, 40, 262–269.

58. Tomic, O.; Luciano, G.; Nilsen, A.; Hyldig, G.; Lorensen, K.; Næs, T. Analysing Sensory Panel Performance in a Proficiency Test Using the PanelCheck Software. Eur. Food Res. Technol. 2009, 230, 497.

59. Rossi, F. Assessing Sensory Panelist Performance Using Repeatability and Reproducibility Measures. Food Qual. Prefer. 2001, 12, 467–479.

60. Le, S.; Husson, F. SensoMineR: A Package for Sensory Data Analysis. J. Sens. Stud. 2008, 23, 14–25.

61. Le, S.; Worch, T. Analyzing Sensory Data with R; CRC Press: Boca Raton, FL, USA, 2018; ISBN 978-1-315-36274-8.

62. Latreille, J.; Mauger, E.; Ambroisine, L.; Tenenhaus, M.; Vincent, M.; Navarro, S.; Guinot, C. Measurement of the Reliability of Sensory Panel Performances. Food Qual. Prefer. 2006, 17, 369–375.

63. Moussaoui, K.A.; Varela, P. Exploring Consumer Product Profiling Techniques and Their Linkage to a Quantitative Descriptive Analysis. Food Qual. Prefer. 2010, 21, 1088–1099.

64. López-López, A.; Sánchez-Gómez, A.H.; Montaño, A.; Cortés-Delgado, A.; Garrido-Fernández, A. Panel and Panelist Performance in the Sensory Evaluation of Black Ripe Olives from Spanish Manzanilla and Hojiblanca Cultivars. Foods 2019, 8, 562.

65. Elía, M. A Procedure for Sensory Evaluation of Bread: Protocol Developed by a Trained Panel. J. Sens. Stud. 2011, 26, 269–277.

66. Castura, J.C.; Findlay, C.J.; Lesschaeve, I. Monitoring Calibration of Descriptive Sensory Panels Using Distance from Target Measurements. Food Qual. Prefer. 2005, 16, 682–690.

67. Bi, J. Agreement and Reliability Assessments for Performance of Sensory Descriptive Panel. J. Sens. Stud. 2003, 18, 61–76.

68. Bi, J.; Kuesten, C. Intraclass Correlation Coefficient (ICC): A Framework for Monitoring and Assessing Performance of Trained Sensory Panels and Panelists. J. Sens. Stud. 2012, 27, 352–364.

69. Kuesten, C.; Bi, J. Application of a Panel Performance Reliability versus Product Effect Size (PR-ES) Framework: A Protein Powder Case Study. Food Qual. Prefer. 2021, 89, 104152.

70. Kollár-Hunek, K.; Heszberger, J.; Kókai, Z.; Láng-Lázi, M.; Papp, E. Testing Panel Consistency with GCAP Method in Food Profile Analysis. J. Chemom. 2008, 22, 218–226.

71. Sipos, L.; Kovács, Z.; Szöllősi, D.; Kókai, Z.; Dalmadi, I.; Fekete, A. Comparison of Novel Sensory Panel Performance Evaluation Techniques with E-Nose Analysis Integration. J. Chemom. 2011, 25, 275–286.

72. Peltier, C.; Visalli, M.; Schlich, P. Canonical Variate Analysis of Sensory Profiling Data. J. Sens. Stud. 2015, 30, 316–328.

73. Wilderjans, T.F.; Cariou, V. CLV3W: A Clustering around Latent Variables Approach to Detect Panel Disagreement in Three-Way Conventional Sensory Profiling Data. Food Qual. Prefer. 2016, 47, 45–53.

74. Kollár-Hunek, K.; Héberger, K. Method and Model Comparison by Sum of Ranking Differences in Cases of Repeated Observations (Ties). Chemom. Intell. Lab. Syst. 2013, 127, 139–146.

75. Amelio, M. Olive Oil Sensory Evaluation: An Alternative to the Robust Coefficient of Variation (CVr %) for Measuring Panel Group Performance in Official Tasting Sessions. Trends Food Sci. Technol. 2019, 88, 567–570.

76. Findlay, C.J.; Castura, J.C.; Schlich, P.; Lesschaeve, I. Use of Feedback Calibration to Reduce the Training Time for Wine Panels. Food Qual. Prefer. 2006, 17, 266–276.

77. Schlich, P. Grapes: A Method and a SAS® Program for Graphical Representations of Assessor Performances. J. Sens. Stud. 1994, 9, 157–169.

78. Kazi-Aoual, F.; Hitier, S.; Sabatier, R.; Lebreton, J.-D. Refined Approximations to Permutation Tests for Multivariate Inference. Comput. Stat. Data Anal. 1995, 20, 643–656.

79. Schlich, P. Defining and Validating Assessor Compromises about Product Distances and Attribute Correlations. Data Handl. Sci. Technol. 1996, 16, 259–306.

80. Pineau, N.; Chabanet, C.; Schlich, P. Modeling the Evolution of the Performance of a Sensory Panel: A Mixed-Model and Control Chart Approach. J. Sens. Stud. 2007, 22, 212–241.

81. Amorim, I.S.; Ferreira, E.B.; Lima, R.R.; Pereira, R.G.F.A. Monte Carlo Based Test for Inferring about the Unidimensionality of a Brazilian Coffee Sensory Panel. Food Qual. Prefer. 2010, 21, 319–323.

82. Bro, R.; Qannari, E.M.; Kiers, H.A.L.; Næs, T.; Frøst, M.B. Multi-Way Models for Sensory Profiling Data. J. Chemom. 2008, 22, 36–45.

83. Aguirre, V.M.; Huerta-Pacheco, N.S.; Lopez, M.T. CONS an R Based Graphical Interface to Perform Consonance Analysis. Food Qual. Prefer. 2017, 62, 183–189.

84. Elgaard, L.; Mielby, L.A.; Hopfer, H.; Byrne, D.V. A Comparison of Two Sensory Panels Trained with Different Feedback Calibration Range Specifications via Sensory Description of Five Beers. Foods 2019, 8, 534.

85. Talsma, P. Assessing Sensory Panel Performance Using Generalizability Theory. Food Qual. Prefer. 2016, 47, 3–9.

86. Tomic, O.; Forde, C.; Delahunty, C.; Næs, T. Performance Indices in Descriptive Sensory Analysis—A Complimentary Screening Tool for Assessor and Panel Performance. Food Qual. Prefer. 2013, 28, 122–133.

87. ISO EA-4/09. Accreditation for Sensory Testing Laboratories; ISO: Geneva, Switzerland, 2017.

88. ISO/IEC 17043. Conformity Assessment—General Requirements for Proficiency Testing; ISO: Geneva, Switzerland, 2010.

89. ISO/IEC 17025. Testing and Calibration Laboratories; ISO: Geneva, Switzerland, 2017.

90. Hyldig, G. 3-Proficiency Testing of Sensory Panels. In Sensory Analysis for Food and Beverage Quality Control; Kilcast, D., Ed.; Woodhead Publishing Series in Food Science, Technology and Nutrition; Woodhead Publishing: Shaston, UK, 2010; pp. 37–48. ISBN 978-1-84569-476-0.

91. Pagès, J.; Husson, F. Inter-Laboratory Comparison of Sensory Profiles: Methodology and Results. Food Qual. Prefer. 2001, 12, 297–309.

92. McEwan, J.A.; Hunter, E.A.; van Gemert, L.J.; Lea, P. Proficiency Testing for Sensory Profile Panels: Measuring Panel Performance. Food Qual. Prefer. 2002, 13, 181–190.

93. McEwan, J.A.; Heiniö, R.-L.; Hunter, E.A.; Lea, P. Proficiency Testing for Sensory Ranking Panels: Measuring Panel Performance. Food Qual. Prefer. 2003, 14, 247–256.

94. Sauvageot, F.; Herbreteau, V.; Berger, M.; Dacremont, C. A Comparison between Nine Laboratories Performing Triangle Tests. Food Qual. Prefer. 2012, 24, 1–7.

95. Gagaoua, M.; Micol, D.; Picard, B.; Terlouw, C.E.M.; Moloney, A.P.; Juin, H.; Meteau, K.; Scollan, N.; Richardson, I.; Hocquette, J.-F. Inter-Laboratory Assessment by Trained Panelists from France and the United Kingdom of Beef Cooked at Two Different End-Point Temperatures. Meat Sci. 2016, 122, 90–96.

96. Barbieri, S.; Brkić Bubola, K.; Bendini, A.; Bučar-Miklavčič, M.; Lacoste, F.; Tibet, U.; Winkelmann, O.; García-González, D.L.; Gallina Toschi, T. Alignment and Proficiency of Virgin Olive Oil Sensory Panels: The OLEUM Approach. Foods 2020, 9, 355.

97. Meullenet, J.-F.; Xiong, R.; Findlay, C.J. Multivariate and Probabilistic Analyses of Sensory Science Problems; John Wiley & Sons: Hoboken, NJ, USA, 2008; ISBN 978-0-470-27631-0.

| Panelist or Panel | Panel Performance Parameter | Statistical Method/Plot | Software | References |
|---|---|---|---|---|
| Panelist and panel | Discrimination | ANOVA<sup>1</sup> (Fisher’s F) | PanelCheck * | [57,58] |
| | Agreement | CPCA<sup>2</sup> (Tucker-1), Kendall’s τ (Eggshell) | | |
| | Consensus | PCA<sup>3</sup> (Manhattan) | | |
| | Repeatability | MSE<sup>4</sup> | | |
| | Repeatability and Discrimination | p * MSE | | |
| Panelist and panel | Discrimination | ANOVA<sup>1</sup> | MAM-CAP * | [55,56] |
| | Agreement | ANOVA<sup>1</sup> | | |
| | Repeatability | RMSE<sup>5</sup> | TimeSens | |
| | Scaling | ANOVA<sup>1</sup> | | |
| Panelist and panel | Repeatability | h<sup>6</sup>, k<sup>7</sup> | S-Plus 2000 | [59] |
| | Reproducibility | | | |
| Panelist and panel | Discrimination | ANOVA<sup>1</sup> | SensoMineR * and FactoMineR * | [60,61] |
| | Agreement | | | |
| | Repeatability | | | |
| Panel | Reproducibility | MFA<sup>8</sup> | FactoMineR * | [60,61] |
| Panelist and panel | Discrimination | ANOVA<sup>1</sup> | SAS macro | [62] |
| | Agreement | | | |
| | Repeatability | | | |
| Panelist | Discrimination | ANOVA<sup>1</sup> | SenPAQ | [63] |
| | Agreement | ANOVA<sup>1</sup> | XLSTAT | [64] |
| | Repeatability | RMSE<sup>5</sup> | Fizz | [65] |
| Panelist | Response accuracy | frequency counts | Compusense | [66] |
| | | DT<sup>9</sup>, ADT<sup>10</sup> | | |
| Panelist and panel | Accuracy | ICC<sup>11</sup>, Cronbach’s α | R-code * | [67] |
| | Validity | | | [68] |
| | Reliability | | | [69] |
| Panelist and panel | Agreement | CSM<sup>12</sup> | R-code * | [51] |
| | (Consensus) | Poincaré plot | | |
| Panelist and panel | Consistency | GCAP<sup>13</sup> | Excel macro | [70] |
| | Reliability | | | [71] |
| Panel | Discrimination | CVA<sup>14</sup> | CVApack * | [72] |
| Panelist | Agreement | ANOVA<sup>1</sup> | SAS macro PANMODEL | [47] |
| | Scaling | | | |
| | Sensitivity | | | |
| Panel | Agreement | CLV3W<sup>15</sup> | ClustVarLV * | [73] |
| Panelist and panel | Agreement | SRD<sup>16</sup> | Excel macro | [74] |
| Panel | Reproducibility | CVr%<sup>17</sup> | Excel macro | [75] |
| Panelist and panel | Discrimination | PCA<sup>3</sup> | R-project * | [49,61] |
| | Agreement | | | |
| | Repeatability | | | |
| Panelist and panel | Discrimination | ANOVA<sup>1</sup> | Compusense | [76] |
| Panelist and panel | Discrimination | ANOVA<sup>1</sup> | SAS macro GRAPES | [77] |
| | Agreement | | | |
| | Repeatability | | | |
| Panel | Agreement | RV<sup>18</sup> | Compusense | [78,79] |
| | Repeatability | NRV<sup>19</sup> | | |
| Panelist and panel | Repeatability | RMSE<sup>5</sup> control chart | SAS | [80] |
| Panel | Agreement | MC simulation with PCA<sup>3</sup> | R-project * | [81] |
| Panelist and panel | Agreement | PARAFAC<sup>20</sup> | MATLAB N-way Toolbox | [14,82] |
| | Sensitivity | | | |
| | Consistency | | | |
| Panel | Predictive ability | N-PLS<sup>21</sup> | Unscrambler | [14] |
| Panelist | Agreement | PCA | R-project * | [83] |
| | | PANCA<sup>22</sup> | | |
| Panelist and panel | Agreement | GPA<sup>23</sup> | Compusense | [76,84] |
| | Discrimination | GPA<sup>23</sup> | | |
| | Scale usage | FCM<sup>24</sup> | | |
| | Repeatability | MSE<sup>4</sup> | | |
| | Discrimination | ANOVA<sup>1</sup> | | |
| Panelist | Agreement (Consonance) | VAF<sup>25</sup> | CONS | [83] |
| | | C<sup>26</sup> | | |
| Panelist and panel | Discrimination | G<sup>27</sup>, Φ<sup>28</sup> | Excel macro | [85] |
| | Agreement | | | |
| | Repeatability | | | |
| Panelist and panel | Discrimination | ANOVA<sup>1</sup> | PanelCheck 2010 * | [86] |
| | Agreement | RV<sup>16</sup>, RV2<sup>29</sup> | | |
| | Repeatability | RV<sup>16</sup>, RV2<sup>29</sup> | | |
| Panelist and panel | Agreement (Consonance) | Cronbach’s α | SPSS | [21] |
| | Repeatability | | | |
| | Reproducibility | | | |
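
One of the statistics named in the table, Cronbach’s α, can be sketched in a few lines. The sketch assumes the common formulation in which each assessor plays the role of an "item" and each product the role of a "case", using the textbook formula α = k/(k−1) · (1 − Σ s²ᵢ / s²ₜ), where k is the number of assessors, s²ᵢ the variance of assessor i's scores across products and s²ₜ the variance of the per-product score totals. The function name, data layout and numbers are hypothetical, and the cited works may differ in detail.

```python
from statistics import variance


def cronbach_alpha(ratings):
    """Cronbach's alpha for ratings[i][j]: score of assessor i for product j.

    Assessors are treated as items and products as cases (an assumption of
    this sketch). Returns NaN when the product totals do not vary at all.
    """
    k = len(ratings)
    if k < 2:
        raise ValueError("at least two assessors are required")
    item_variances = [variance(row) for row in ratings]   # per assessor
    totals = [sum(column) for column in zip(*ratings)]    # per product
    total_variance = variance(totals)
    if total_variance == 0:
        return float("nan")
    return k / (k - 1) * (1 - sum(item_variances) / total_variance)


# Hypothetical mean scores of three assessors for three products.
panel = [
    [2.0, 5.0, 8.0],
    [3.0, 6.0, 7.0],
    [5.0, 4.0, 5.0],
]
print(round(cronbach_alpha(panel), 3))
```

Values close to 1 indicate that the assessors rank and scale the products consistently; an assessor whose removal raises α noticeably may deserve a closer look.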

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Sipos, L.; Nyitrai, Á.; Hitka, G.; Friedrich, L.F.; Kókai, Z. Sensory Panel Performance Evaluation—Comprehensive Review of Practical Approaches. Appl. Sci. 2021, 11, 11977. https://doi.org/10.3390/app112411977
