An Upper Extremity Rehabilitation System Using Efficient Vision-Based Action Identification Techniques

Abstract

1. Introduction

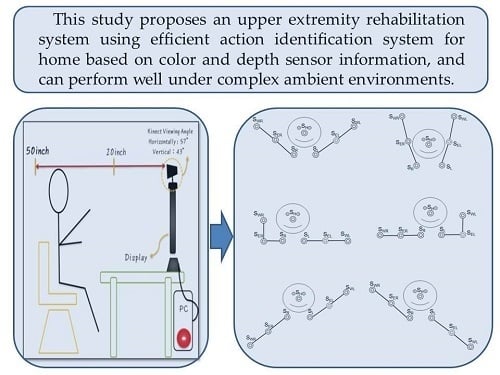

2. The Proposed Action Identification System for Home Upper Extremity Rehabilitation

2.1. Experimental Environment

2.2. The Proposed Methods

2.2.1. Depth/RGB Image Sensor

2.2.2. Skeletonizing

2.2.3. Skin Detection

2.2.4. Skeleton Point Establishment

Head Skeleton Point Determination

Shoulder Skeleton Point Determination

Elbow and Wrist Skeleton Point Determination

The Overall Skeleton Points Correction Process

2.2.5. Action Classifier

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

| Position No. | Position of Skeleton Points | Demo of Movement |
|---|---|---|
| position 0 (initial position) |  |  |
| position 1 |  |  |
| position 2 |  |  |
| position 3 |  |  |
| position 4 |  |  |
| position 5 |  |  |
| position 6 |  |  |

| Hardware | Software |
|---|---|
| CPU: Intel Core(TM)2 Quad 2.33 GHz 2.34 GHz | OS: Windows 7 |
| RAM: 4 GB | Platform: Qt 2.4.1 |
| Depth camera: Kinect | Library: Qt 4.7.4, OpenCV 2.4.3, OpenNI 1.5.2.23 (only for RGB image and depth information) |
| RGB camera: Logitech C920 | / |

| Resolution (pixels) | Release (ms) | Release (fps) |
|---|---|---|
| 640 × 480 | 27 | 37 |
| 320 × 240 | 8 | 125 |

| Test Sequence | No. of Frames | Skin Color Detection Speed, Release Model (ms) | Skin Color Detection Speed, Release Model (fps) | System Execution Speed, Release Model (ms) | System Execution Speed, Release Model (fps) |
|---|---|---|---|---|---|
| Test Sample 1 | 618 | 0.27 | 3704 | 8.46 | 118 |
| Test Sample 2 | 555 | 0.25 | 4000 | 8.29 | 121 |
| Test Sample 3 | 554 | 0.26 | 3846 | 8.04 | 124 |
| Test Sample 4 | 509 | 0.26 | 3846 | 8.14 | 123 |
| Test Sample 5 | 541 | 0.25 | 4000 | 8.09 | 124 |
| Test Sample 6 | 547 | 0.27 | 3704 | 8.20 | 122 |
| Test Sample 7 | 539 | 0.25 | 4000 | 8.02 | 125 |
| Test Sample 8 | 640 | 0.26 | 3846 | 7.97 | 125 |
| Test Sample 9 | 612 | 0.26 | 3846 | 8.08 | 124 |
| Test Sample 10 | 540 | 0.26 | 3846 | 7.93 | 126 |
| Test Sample 11 | 629 | 0.25 | 4000 | 7.86 | 127 |
| Test Sample 12 | 534 | 0.27 | 3704 | 7.87 | 127 |
| Test Sample 13 | 517 | 0.28 | 3571 | 8.58 | 117 |
| Test Sample 14 | 517 | 0.25 | 4000 | 7.65 | 131 |
| Test Sample 15 | 604 | 0.25 | 4000 | 7.94 | 126 |
| Test Sample 16 | 528 | 0.25 | 4000 | 8.07 | 124 |
| Test Sample 17 | 535 | 0.25 | 4000 | 7.67 | 130 |
| Test Sample 18 | 553 | 0.27 | 3704 | 7.90 | 127 |
| Test Sample 19 | 524 | 0.24 | 4167 | 7.75 | 129 |
| Test Sample 20 | 538 | 0.25 | 4000 | 7.90 | 127 |
| average | 557 | 0.26 | 3889 | 8.02 | 125 |
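
The fps columns in the table above are consistent with fps = 1000 / ms, rounded to the nearest integer. The following minimal sketch checks that reading against the tabulated skin color detection times. It is an illustration, not the authors' code; the function name `ms_to_fps` and the list `skin_ms` are hypothetical and introduced only for this check.

```python
from statistics import mean


def ms_to_fps(ms_per_frame: float) -> int:
    """Convert a per-frame processing time (ms) to frames per second."""
    if ms_per_frame <= 0:
        raise ValueError("ms_per_frame must be positive")
    return round(1000.0 / ms_per_frame)


# Skin color detection times (ms) for Test Samples 1 to 20, copied from the table.
skin_ms = [0.27, 0.25, 0.26, 0.26, 0.25, 0.27, 0.25, 0.26, 0.26, 0.26,
           0.25, 0.27, 0.28, 0.25, 0.25, 0.25, 0.25, 0.27, 0.24, 0.25]

skin_fps = [ms_to_fps(ms) for ms in skin_ms]

print(skin_fps[0])            # 3704 (Test Sample 1)
print(ms_to_fps(8.46))        # 118  (system execution speed, Test Sample 1)
print(round(mean(skin_fps)))  # 3889 (matches the "average" row)
```

Under this reading, the 3889 fps in the average row corresponds to the mean of the 20 per-sample fps values, not to 1000 divided by the averaged 0.26 ms, which would give 3846.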

| Test Sequence | No. of Actual Determined Actions | No. of Correctly Determined Actions | Accuracy Rate of Identifying Rehabilitation Action |
|---|---|---|---|
| position 1 | 151 | 149 | 98.7% |
| position 2 | 150 | 146 | 97.3% |
| position 3 | 156 | 155 | 99.4% |
| position 4 | 190 | 184 | 96.8% |
| position 5 | 120 | 119 | 99.2% |
| position 6 | 186 | 182 | 97.8% |
| Total | 953 | 935 | 98.1% |
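
The accuracy rates in the table above can be reproduced as correctly determined actions divided by determined actions, expressed as a percentage with one decimal place. The sketch below is an illustration under that assumption, not the authors' evaluation code; the names `counts` and `accuracy_percent` are hypothetical.

```python
# (determined actions, correctly determined actions) per position, from the table.
counts = {
    "position 1": (151, 149),
    "position 2": (150, 146),
    "position 3": (156, 155),
    "position 4": (190, 184),
    "position 5": (120, 119),
    "position 6": (186, 182),
}


def accuracy_percent(determined: int, correct: int) -> float:
    """Return the accuracy as a percentage rounded to one decimal place."""
    if determined <= 0:
        raise ValueError("determined must be positive")
    return round(100.0 * correct / determined, 1)


rates = {name: accuracy_percent(n, c) for name, (n, c) in counts.items()}

# The "Total" row pools all actions before dividing.
total_determined = sum(n for n, _ in counts.values())
total_correct = sum(c for _, c in counts.values())
overall = accuracy_percent(total_determined, total_correct)

print(rates["position 1"])                       # 98.7
print(total_determined, total_correct, overall)  # 953 935 98.1
```

The overall figure is a pooled rate over all 953 actions, so positions with more recorded actions weigh more heavily than they would in a plain average of the six per-position rates.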

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Chen, Y.-L.; Liu, C.-H.; Yu, C.-W.; Lee, P.; Kuo, Y.-W. An Upper Extremity Rehabilitation System Using Efficient Vision-Based Action Identification Techniques. Appl. Sci. 2018, 8, 1161. https://doi.org/10.3390/app8071161
