Structural Low-Level Dynamic Response Analysis Using Deviations of Idealized Edge Profiles and Video Acceleration Magnification

Abstract

1. Introduction

2. Theory and Algorithm

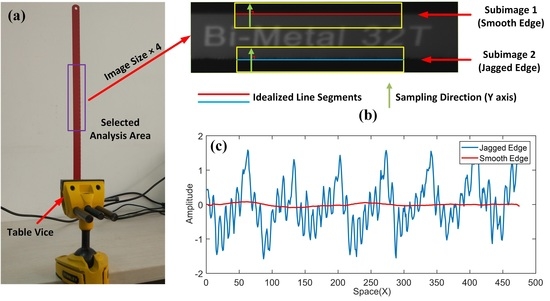

2.1. Deviation Extraction in a Single Image

2.2. Low-Level Variation Magnification

2.3. SVD-Based Variations’ Extraction

3. Experimental Verifications

3.1. Light-Weight Beam Property Analysis

3.2. Vibration Analysis of the Noise Barrier

4. Discussion and Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| | Model 1 | Model 2 | Model 3 | Model 4 |
|---|---|---|---|---|
| Theoretical (Hz) | 6.41 | 40.17 | 112.43 | 220.31 |
| Proposed Method (Hz) | 6.37 | 40.16 | 113.60 | 221.60 |
| Laplacian Kernel () | - | 2.20 | 0.79 | 0.40 |
| Amplification Factor () | - | 8 | 10 | 12 |
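
The agreement between the theoretical and measured frequencies in the table above can be checked with a few lines of arithmetic. The following is a minimal sketch, not part of the authors' method: the signed relative deviation used here is an assumed comparison metric, and the helper name `relative_deviation_percent` is hypothetical. The frequency values are copied from the table.

```python
# Frequencies (Hz) copied from the "Theoretical" and "Proposed Method" rows.
theoretical = [6.41, 40.17, 112.43, 220.31]
measured = [6.37, 40.16, 113.60, 221.60]


def relative_deviation_percent(reference, estimate):
    """Signed deviation of estimate from reference, in percent.

    Assumed metric for illustration; the source does not state
    which error measure the authors used.
    """
    return 100.0 * (estimate - reference) / reference


# One deviation per table column, in column order.
deviations = [
    relative_deviation_percent(t, m) for t, m in zip(theoretical, measured)
]

for column, dev in enumerate(deviations, start=1):
    print(f"column {column}: {dev:+.2f} %")
```

Under this metric the largest deviation is roughly 1.04 %, in the third column; the other three columns stay below 0.7 % in magnitude.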

| | Model A | Model B | Model C |
|---|---|---|---|
| Proposed Method (Hz) | 10.12 | 21.07 | 45.77 |
| Laplacian Kernel () | - | 1.95 | 0.90 |
| Amplification Factor () | - | 6 | 10 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhang, D.; Fang, L.; Wei, Y.; Guo, J.; Tian, B. Structural Low-Level Dynamic Response Analysis Using Deviations of Idealized Edge Profiles and Video Acceleration Magnification. Appl. Sci. 2019, 9, 712. https://doi.org/10.3390/app9040712
