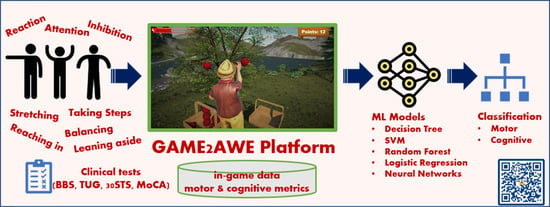

A Machine-Learning-Based Motor and Cognitive Assessment Tool Using In-Game Data from the GAME2AWE Platform

Abstract

1. Introduction

1.1. Background and Motivation

1.2. Related Work

1.2.1. Cognitive Assessment

1.2.2. Physical Health Assessment

1.3. Contribution

2. Materials and Methods

2.1. GAME2AWE Platform

2.1.1. Fruit Harvest Exergame

- i. State change speed: the average time, in seconds, that a fruit takes to transition between its states (green, ripe, and rotten).

- ii. Appearance mode: the side (left/right) on which fruits appear. The harvest target is distributed equally across both sides, with fruits appearing first on one side and then on the other; alternatively, the side alternates continuously, or fruits appear randomly on either side.

- iii. Harvest target: the number of fruits that must be collected in a round of the game.
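The three difficulty parameters above can be pictured as a small configuration object. The following is a minimal sketch, not the platform's implementation: the class name `DifficultyParams`, the function `side_sequence`, and the way each appearance mode is turned into a sequence of sides are all assumptions made for illustration. The mode codes (1, 2, 3) and the side encoding (−1 for left, 1 for right) follow the `dlp_appearance` and `move_side` attributes listed in the attribute table of this article.

```python
import random
from dataclasses import dataclass

LEFT, RIGHT = -1, 1  # side encoding borrowed from the move_side attribute


@dataclass(frozen=True)
class DifficultyParams:
    """Hypothetical container for the three Fruit Harvest difficulty parameters."""
    state_change_speed: float  # average seconds per state transition
    appearance_mode: int       # 1: one side then the other, 2: alternating, 3: random
    harvest_target: int        # fruits to collect in a round


def side_sequence(params, rng=None):
    """Return the side of each fruit for one round (illustrative assumption)."""
    rng = rng or random.Random()
    n = params.harvest_target
    if params.appearance_mode == 1:
        # First half on the left, remainder on the right.
        first = (n + 1) // 2
        return [LEFT] * first + [RIGHT] * (n - first)
    if params.appearance_mode == 2:
        # Strict left/right alternation.
        return [LEFT if i % 2 == 0 else RIGHT for i in range(n)]
    if params.appearance_mode == 3:
        # Independent random choice per fruit (balance is not enforced here).
        return [rng.choice((LEFT, RIGHT)) for _ in range(n)]
    raise ValueError(f"unknown appearance mode: {params.appearance_mode}")


if __name__ == "__main__":
    print(side_sequence(DifficultyParams(2.0, 1, 6)))
    print(side_sequence(DifficultyParams(2.0, 2, 5)))
```

Whether the random mode should also guarantee an equal left/right split is not clear from the description, so the sketch leaves it unconstrained.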

2.1.2. Data Management

- Senior demographic information and account login details.

- Historical data from the game scenarios played by the seniors, including the date, user ID, game ID, game name, difficulty level, score, vitality level, missed sessions, completed movements, performed movement timestamp, and confidence.

- Statistical information on the seniors’ performance in the game, their accomplishment of goals, and their level of engagement.

- Motor and cognitive clinical assessment test results.
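As a rough illustration of the historical gameplay data listed above, one stored record could be modelled as follows. This is only a sketch: the class name `GameRecord`, the field names, and all field types are assumptions, since the storage schema itself is not described here.

```python
import datetime as dt
from dataclasses import dataclass, asdict


@dataclass
class GameRecord:
    """Hypothetical shape of one stored gameplay record (types are assumed)."""
    played_on: dt.date
    user_id: str
    game_id: str
    game_name: str
    difficulty_level: int
    score: int
    vitality_level: float
    missed_sessions: int
    completed_movements: int
    movement_timestamp: dt.datetime
    confidence: float


if __name__ == "__main__":
    # Example values are invented purely to show the structure.
    record = GameRecord(
        played_on=dt.date(2023, 1, 15),
        user_id="u-001",
        game_id="g-01",
        game_name="Fruit Harvest",
        difficulty_level=2,
        score=120,
        vitality_level=0.8,
        missed_sessions=0,
        completed_movements=14,
        movement_timestamp=dt.datetime(2023, 1, 15, 10, 30, 5),
        confidence=0.92,
    )
    print(asdict(record))
```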

2.2. Study Design

2.2.1. Data Characteristics

2.2.2. Demographics of Participants

2.3. Machine Learning Methodology

2.3.1. Data Collection

2.3.2. Data Cleaning, Feature Engineering, and Selection

2.3.3. Target Class Construction and Balancing

2.3.4. Machine Learning Techniques

3. Results

4. Discussion

Limitations and Future Work

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Age Category | Male | Female |
|---|---|---|
| 60–64 | <14 | <12 |
| 65–69 | <12 | <11 |
| 70–74 | <12 | <10 |
| 75–79 | <11 | <10 |
| 80–84 | <10 | <9 |
| 85–89 | <8 | <8 |
| 90–94 | <7 | <4 |
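A lookup against the table above might be sketched as follows. The table carries no caption in this text, so reading it as the age- and sex-specific 30SST thresholds below which a score is flagged is an assumption, as is reading the first age band as 60–64. The names `CUTOFFS` and `is_below_cutoff` are hypothetical.

```python
# (lowest age, highest age) -> (male threshold, female threshold),
# copied from the table; a score strictly below the threshold is flagged.
CUTOFFS = {
    (60, 64): (14, 12),  # first band assumed to be 60-64
    (65, 69): (12, 11),
    (70, 74): (12, 10),
    (75, 79): (11, 10),
    (80, 84): (10, 9),
    (85, 89): (8, 8),
    (90, 94): (7, 4),
}


def is_below_cutoff(age, sex, score):
    """Return True when the score falls below the threshold for this age and sex."""
    if sex not in ("male", "female"):
        raise ValueError(f"unsupported sex value: {sex!r}")
    for (low, high), (male, female) in CUTOFFS.items():
        if low <= age <= high:
            threshold = male if sex == "male" else female
            return score < threshold
    raise ValueError(f"age {age} is outside the tabulated range")


if __name__ == "__main__":
    print(is_below_cutoff(72, "female", 9))   # 9 < 10 for women aged 70-74
    print(is_below_cutoff(72, "female", 10))  # 10 is not below 10
```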

| State | MoCA | BBS | 30SST | TUG |
|---|---|---|---|---|
| Normal | 11 | 12 | 10 | 11 |
| Abnormal | 4 | 3 | 5 | 4 |

| Attribute | Type | Description |
|---|---|---|
| game_score | Number | The score of a game round |
| game_level | Categorical | The difficulty level of a game round: 1: Beginner, 2: Easy, 3: Medium, 4: Hard, and 5: Dynamic |
| game_length | Number | The duration of a game round in seconds |
| dlp_appearance | Categorical | Appearance mode (a difficulty level parameter): 1: One side at a time, 2: Continuous alternation, and 3: Random |
| dlp_speed | Number | State change speed: the average time to change harvest state in seconds |
| dlp_target | Number | Harvest target: number of fruits to collect in a game round |
| vitality_level | Number | The ratio of expected successful movements in a game round, which is also represented in the user interface as the vitality bar level |
| move_conf | Number | The confidence level of movement detection |
| move_side | Categorical | The side of the performed movement: −1: left side, 1: right side |
| idle_time | Number | The idle time between two consecutive movements |
| action_type | Categorical | The type of action performed in the gameplay: 1: harvest too early, 2: harvest on time, and 3: harvest too late |
| reaction_time | Number | The time between a game stimulus and the detection of the corresponding action |
| MoCA | Number | The score of the MoCA test per participant |
| BBS | Number | The score of the BBS test per participant |
| 30SST | Number | The score of the 30SST test per participant |
| TUG | Number | The score of the TUG test per participant |
| motor_state | Categorical | The target binary class for the motor level assessment based on the cut-off values of the motor clinical tests (BBS, 30SST, and TUG): 0: normal, 1: abnormal |
| cognitive_state | Categorical | The target binary class for the cognitive level assessment based on the cut-off values of the MoCA test: 0: normal, 1: abnormal |

| Predictor | Description |
|---|---|
| avg_move_conf | The average value of the move_conf attribute within the context of a round. |
| avg_move_side | The average value of the move_side attribute within the context of a round. |
| avg_idle_time | The average value of the idle_time attribute within the context of a round. |
| avg_reaction_time | The average value of the reaction_time attribute within the context of a round. |
| action1_% | The percentage of type 1 actions within the context of a round. |
| action2_% | The percentage of type 2 actions within the context of a round. |
| action3_% | The percentage of type 3 actions within the context of a round. |
| action1_Q1/Q2/Q3/Q4 | The percentage of type 1 actions in the 1st/2nd/3rd/4th quarter of a round. |
| action1_skew | The skewness of RoE (type 1 actions within the context of a round). |
| action2_skew | The skewness of RoT (type 2 actions within the context of a round). |
| action3_skew | The skewness of RoL (type 3 actions within the context of a round). |
| game_logs | The number of game logs within the context of a round. |
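To make the per-round aggregation concrete, here is a minimal sketch that derives several of the predictors above from a list of movement logs. It is not the authors' pipeline. The function name `round_predictors` and the dictionary-based log format are assumptions. Quarters are taken by log position rather than by elapsed time, and each quarter percentage is computed as the share of that quarter's logs that are type 1 actions; both choices are assumptions. The skewness predictors are left out because RoE, RoT, and RoL are not defined in this text.

```python
from statistics import mean


def round_predictors(logs):
    """Aggregate one round's chronologically ordered logs into predictors.

    Each log is a dict with the keys move_conf, move_side, idle_time,
    reaction_time, and action_type (assumed input format).
    """
    if not logs:
        raise ValueError("a round needs at least one log")
    n = len(logs)
    feats = {
        "avg_move_conf": mean(log["move_conf"] for log in logs),
        "avg_move_side": mean(log["move_side"] for log in logs),
        "avg_idle_time": mean(log["idle_time"] for log in logs),
        "avg_reaction_time": mean(log["reaction_time"] for log in logs),
        "game_logs": n,
    }
    # Percentage of each action type over the whole round.
    for action in (1, 2, 3):
        count = sum(1 for log in logs if log["action_type"] == action)
        feats[f"action{action}_%"] = 100.0 * count / n
    # Share of type 1 actions inside each quarter of the round (by log index).
    for q in range(4):
        chunk = logs[q * n // 4:(q + 1) * n // 4]
        early = sum(1 for log in chunk if log["action_type"] == 1)
        feats[f"action1_Q{q + 1}"] = 100.0 * early / len(chunk) if chunk else 0.0
    return feats


if __name__ == "__main__":
    demo = [
        {"move_conf": 0.8, "move_side": -1, "idle_time": 1.0, "reaction_time": 0.5, "action_type": 1},
        {"move_conf": 0.6, "move_side": 1, "idle_time": 2.0, "reaction_time": 0.7, "action_type": 2},
        {"move_conf": 1.0, "move_side": 1, "idle_time": 3.0, "reaction_time": 0.9, "action_type": 2},
        {"move_conf": 0.6, "move_side": -1, "idle_time": 2.0, "reaction_time": 0.3, "action_type": 3},
    ]
    for name, value in round_predictors(demo).items():
        print(name, value)
```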

| Dataset | MoCA | BBS | 30SST | TUG |
|---|---|---|---|---|
| Original (Normal/Abnormal) | 220/80 | 240/60 | 200/100 | 220/80 |
| SMOTE augmented (total) | 440 | 480 | 400 | 440 |
| Increase (%) | 46.7 | 60.0 | 33.3 | 46.7 |
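The figures in the table above are consistent with oversampling the minority class until it matches the majority class. That reading is inferred from the totals rather than stated here, and the helper name `balance_summary` is hypothetical. The short sketch below reproduces the arithmetic.

```python
def balance_summary(normal, abnormal):
    """Sizes after oversampling the minority class up to the majority class."""
    original = normal + abnormal
    synthetic = abs(normal - abnormal)  # samples needed to equalise the classes
    total = original + synthetic
    return {
        "synthetic": synthetic,
        "total": total,
        "increase_pct": round(100.0 * synthetic / original, 1),
    }


if __name__ == "__main__":
    counts = {"MoCA": (220, 80), "BBS": (240, 60), "30SST": (200, 100), "TUG": (220, 80)}
    for test_name, (normal, abnormal) in counts.items():
        print(test_name, balance_summary(normal, abnormal))
```

For MoCA, for example, 140 synthetic samples bring the 300 original rounds to 440, an increase of 140/300 ≈ 46.7%.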

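| Metric | Description | Formula |
|---|---|---|
| Accuracy | A measure of the overall correctness of a classification model | $\frac{TP + TN}{TP + TN + FP + FN}$ |
| Precision | A measure of the accuracy of positive predictions | $\frac{TP}{TP + FP}$ |
| Recall | A measure of the ability to correctly identify positive instances | $\frac{TP}{TP + FN}$ |
| F1 score | A single metric that combines precision and recall | $\frac{2 \cdot Precision \cdot Recall}{Precision + Recall}$ |
| MCC | A measure of the quality of binary classifications | $\frac{TP \cdot TN - FP \cdot FN}{\sqrt{(TP + FP)(TP + FN)(TN + FP)(TN + FN)}}$ |

Here TP, TN, FP, and FN denote the numbers of true positives, true negatives, false positives, and false negatives, respectively.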
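The five metrics in the table can be computed directly from the four confusion-matrix counts. The sketch below uses the standard definitions; the function name `classification_metrics` and the convention of returning 0.0 when a denominator is zero are choices made for this example, not something specified in the article.

```python
import math


def classification_metrics(tp, tn, fp, fn):
    """Compute accuracy, precision, recall, F1, and MCC from confusion counts."""
    total = tp + tn + fp + fn
    if total == 0:
        raise ValueError("confusion matrix is empty")
    accuracy = (tp + tn) / total
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)) if (precision + recall) else 0.0
    denom = math.sqrt((tp + fp) * (tp + fn) * (tn + fp) * (tn + fn))
    mcc = (tp * tn - fp * fn) / denom if denom else 0.0
    return {
        "accuracy": accuracy,
        "precision": precision,
        "recall": recall,
        "f1": f1,
        "mcc": mcc,
    }


if __name__ == "__main__":
    # Invented counts, used only to exercise the formulas.
    for name, value in classification_metrics(tp=40, tn=45, fp=5, fn=10).items():
        print(f"{name}: {value:.4f}")
```

Unlike accuracy, MCC uses all four counts in both numerator and denominator, which is why it is often reported alongside accuracy when classes are imbalanced.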

| ML Model | Accuracy | Precision | Recall | F1 Score | MCC |
|---|---|---|---|---|---|
| DT | 0.8480 ± 0.0581 | 0.7413 | 0.6425 | 0.6875 | 0.4227 |
| SVM | 0.7957 ± 0.0264 | 0.9303 | 0.6426 | 0.7580 | 0.6235 |
| RF | 0.9232 ± 0.0398 | 0.9362 | 0.9065 | 0.9144 | 0.8450 |
| LR | 0.6371 ± 0.0395 | 0.6490 | 0.5988 | 0.6223 | 0.2755 |
| 3L ANN | 0.8227 ± 0.0000 | 0.8743 | 0.7661 | 0.8110 | 0.6574 |
| 7L ANN | 0.8712 ± 0.0017 | 0.9142 | 0.8194 | 0.8642 | 0.7465 |

| ML Model | Accuracy | Precision | Recall | F1 Score | MCC |
|---|---|---|---|---|---|
| DT | 0.9091 ± 0.0161 | 0.9388 | 0.8745 | 0.9095 | 0.8142 |
| SVM | 0.9200 ± 0.0281 | 0.9840 | 0.8538 | 0.9140 | 0.8478 |
| RF | 0.9561 ± 0.0182 | 0.9671 | 0.9498 | 0.9593 | 0.9044 |
| LR | 0.7555 ± 0.0387 | 0.7825 | 0.7084 | 0.7433 | 0.5136 |
| 3L ANN | 0.9044 ± 0.0016 | 0.9247 | 0.8809 | 0.9021 | 0.8100 |
| 7L ANN | 0.9075 ± 0.0047 | 0.9458 | 0.8652 | 0.9035 | 0.8183 |

| ML Model | Accuracy | Precision | Recall | F1 Score | MCC |
|---|---|---|---|---|---|
| DT | 0.8304 ± 0.0304 | 0.8720 | 0.7890 | 0.8172 | 0.6877 |
| SVM | 0.8296 ± 0.0126 | 0.9228 | 0.7237 | 0.8096 | 0.6769 |
| RF | 0.9119 ± 0.0366 | 0.9471 | 0.8859 | 0.9057 | 0.8143 |
| LR | 0.6437 ± 0.0602 | 0.6931 | 0.5260 | 0.5917 | 0.3000 |
| 3L ANN | 0.8010 ± 0.0329 | 0.8555 | 0.7369 | 0.7888 | 0.6117 |
| 7L ANN | 0.8391 ± 0.0156 | 0.8778 | 0.7889 | 0.8308 | 0.6819 |

| ML Model | Accuracy | Precision | Recall | F1 Score | MCC |
|---|---|---|---|---|---|
| DT | 0.8317 ± 0.0433 | 0.8088 | 0.8700 | 0.8399 | 0.6677 |
| SVM | 0.8042 ± 0.0352 | 0.7520 | 0.9071 | 0.8218 | 0.6236 |
| RF | 0.9133 ± 0.0632 | 0.9066 | 0.9400 | 0.9214 | 0.8522 |
| LR | 0.6633 ± 0.0382 | 0.6507 | 0.7133 | 0.6790 | 0.3301 |
| 3L ANN | 0.8483 ± 0.0150 | 0.7976 | 0.9433 | 0.8618 | 0.7158 |
| 7L ANN | 0.8717 ± 0.0150 | 0.8094 | 0.9733 | 0.8837 | 0.7594 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Danousis, M.; Goumopoulos, C. A Machine-Learning-Based Motor and Cognitive Assessment Tool Using In-Game Data from the GAME2AWE Platform. Informatics 2023, 10, 59. https://doi.org/10.3390/informatics10030059