A Comprehensive Review of Bio-Inspired Optimization Algorithms Including Applications in Microelectronics and Nanophotonics

Abstract

1. Introduction

2. A Possible Taxonomy of Bio-Inspired Algorithms

3. Heuristics

“Basic” Heuristic Algorithms

4. Metaheuristics

4.1. Evolutionary Algorithms (EAs)

4.1.1. Genetic Algorithms (GAs)

4.1.2. Memetic Algorithms (MAs)

- Initializing the Population: Random generation of candidate solutions.

- Evaluation: The fitness of each candidate solution is assessed according to the problem’s fitness criterion (objective function).

- Evolutionary Process: Genetic operators are applied (selection, crossover and mutation) according to the standard evolutionary algorithm rules; thus, the population evolves through generations.

- Local Search: In addition to the evolutionary processes, local search techniques (memes) are applied to refine or improve individual solutions. This local search often utilizes problem-specific knowledge or heuristics to locally explore the solution space more accurately.

- End: The algorithm terminates when a stopping criterion is met, e.g., when a satisfactory solution has been found or the maximum number of iterations has been reached.
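The five steps above can be condensed into a short program. The Python code below is a minimal sketch, not the implementation of any specific published memetic algorithm. It assumes:

- a real-valued minimization problem, using the toy `sphere` function;
- binary tournament selection, arithmetic crossover and Gaussian mutation as the evolutionary operators;
- stochastic hill climbing as the meme (local search).

All function names and parameter values are illustrative.

```python
import random
from typing import Callable, List

Vector = List[float]


def sphere(x: Vector) -> float:
    """Toy objective to minimize: sum of squares, global minimum 0 at the origin."""
    return sum(v * v for v in x)


def tournament(pop: List[Vector], f: Callable[[Vector], float], rng: random.Random) -> Vector:
    """Binary tournament selection: the fitter of two random individuals wins."""
    a, b = rng.sample(pop, 2)
    return a if f(a) < f(b) else b


def local_search(x: Vector, f: Callable[[Vector], float], rng: random.Random,
                 step: float = 0.1, iters: int = 20) -> Vector:
    """The 'meme': stochastic hill climbing that accepts only improvements."""
    best, best_f = x[:], f(x)
    for _ in range(iters):
        cand = [v + rng.gauss(0.0, step) for v in best]
        cand_f = f(cand)
        if cand_f < best_f:
            best, best_f = cand, cand_f
    return best


def memetic(f, dim=5, pop_size=20, max_gens=100, bounds=(-5.0, 5.0),
            mut_rate=0.2, target=1e-6, seed=0):
    rng = random.Random(seed)
    lo, hi = bounds
    # Initialization: random candidate solutions inside the search box
    pop = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(pop_size)]
    for _ in range(max_gens):              # End: maximum number of generations
        # Evaluation: rank by objective value (lower is fitter)
        pop.sort(key=f)
        if f(pop[0]) <= target:            # End: satisfactory solution found
            break
        children = [pop[0][:]]             # elitism keeps the current best
        while len(children) < pop_size:
            # Evolutionary process: selection, arithmetic crossover, Gaussian mutation
            p1, p2 = tournament(pop, f, rng), tournament(pop, f, rng)
            w = rng.random()
            child = [w * a + (1.0 - w) * b for a, b in zip(p1, p2)]
            child = [v + rng.gauss(0.0, 0.5) if rng.random() < mut_rate else v
                     for v in child]
            # Local search: refine each offspring with the meme
            children.append(local_search(child, f, rng))
        pop = children
    best = min(pop, key=f)
    return best, f(best)


best, best_f = memetic(sphere)
print(f"best objective value: {best_f:.2e}")
```

Dropping the `local_search` call turns this back into a plain genetic algorithm. That is the sense in which a memetic algorithm is an evolutionary algorithm augmented with individual learning.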

4.1.3. Differential Evolution (DE)

4.2. Swarm Intelligence (SI) Algorithms

4.2.1. Particle Swarm Optimization (PSO)

- Its current position in the search space;

- The best position it has visited so far, its past best (Pbest);

- The best position found so far within its neighborhood, the local best (Lbest);

- The best position found so far by the whole swarm, the global best (Gbest).
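A minimal sketch of how these quantities drive particle motion is given below. It assumes the common global-best variant with an inertia weight:

- velocity update: v <- w*v + c1*r1*(Pbest - x) + c2*r2*(Gbest - x), with r1 and r2 uniform random numbers in [0, 1);
- position update: x <- x + v;
- coefficients: the widely used values w = 0.7298 and c1 = c2 = 1.49618.

An Lbest variant would replace Gbest with the best Pbest in each particle's neighborhood. The velocity clamp, the box bounds and the immediate (asynchronous) update of Gbest are illustrative choices, not part of the original description.

```python
import random


def pso(f, dim=2, n_particles=30, iters=200, bounds=(-5.0, 5.0),
        w=0.7298, c1=1.49618, c2=1.49618, seed=1):
    """Minimal global-best PSO that minimizes f over a box."""
    rng = random.Random(seed)
    lo, hi = bounds
    vmax = 0.2 * (hi - lo)                    # velocity clamp keeps steps bounded
    x = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    v = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in x]                 # each particle's best position so far
    pbest_f = [f(p) for p in x]
    g = min(range(n_particles), key=lambda i: pbest_f[i])
    gbest, gbest_f = pbest[g][:], pbest_f[g]  # best position found by the whole swarm
    for _ in range(iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                v[i][d] = (w * v[i][d]
                           + c1 * r1 * (pbest[i][d] - x[i][d])   # pull toward Pbest
                           + c2 * r2 * (gbest[d] - x[i][d]))     # pull toward Gbest
                v[i][d] = max(-vmax, min(vmax, v[i][d]))
                x[i][d] = max(lo, min(hi, x[i][d] + v[i][d]))
            fx = f(x[i])
            if fx < pbest_f[i]:               # update the particle's memory
                pbest[i], pbest_f[i] = x[i][:], fx
                if fx < gbest_f:              # asynchronous Gbest update
                    gbest, gbest_f = x[i][:], fx
    return gbest, gbest_f


best_pos, best_val = pso(lambda p: sum(t * t for t in p))
print(best_pos, best_val)
```

The inertia term w*v keeps particles exploring. The two random "pulls" balance exploitation of each particle's own experience (Pbest) against the swarm's shared knowledge (Gbest).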

4.2.2. Ant Colony Optimization (ACO)

4.2.3. Whale Optimization Algorithm (WOA)

4.2.4. Grey Wolf Optimizer (GWO)

4.2.5. Firefly Optimization Algorithm (FOA)

4.2.6. Bat Optimization Algorithm (BOA)

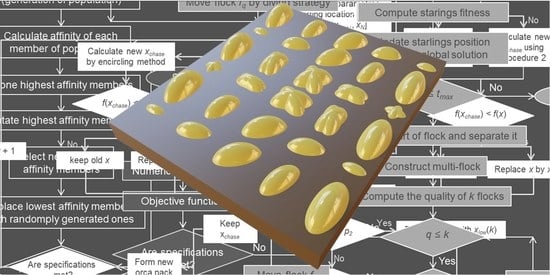

4.2.7. Orca Predation Algorithm (OPA)

4.2.8. Starling Murmuration Optimizer (SMO)

4.3. Metaheuristics Mimicking Human or Zoological Physiological Functions

Artificial Immune Systems (AISs)

4.4. Anthropological Algorithms (Mimicking Human Social Behavior)

Tabu Search Algorithm (TSA)

4.5. Plant-Based Algorithms

Flower Pollination Algorithm (FPA)

5. Hyper-Heuristics

5.1. Selection Hyper-Heuristics

5.2. Generation Hyper-Heuristics

5.3. Ensemble Hyper-Heuristics

6. Hybridization Methods

6.1. Hybrids of Two or More Metaheuristic Algorithms

6.2. Hybrids with Hyper-Heuristics

6.3. Hybrids with Mathematical Programming (MP)

6.4. Hybrids with Machine Learning Techniques

6.5. Hybrids with Fuzzy Logic

7. Multi-Objective Optimization (MOO)

7.1. Multi-Objective Metaheuristics

7.2. Machine Learning in Multi-Objective Optimization

7.2.1. Neural Networks in Multi-Objective Optimization

7.2.2. Surrogate Models in Multi-Objective Optimization

7.2.3. Reinforcement Learning in Multi-Objective Optimization

7.2.4. Gaussian Processes in Multi-Objective Optimization

8. Neural Networks and Multi-Objective Optimization

8.1. Artificial Neural Networks

8.2. Convolutional Neural Networks (CNNs)

8.3. Recurrent Neural Networks (RNNs)

8.4. Radial Basis Function (RBF) Networks

8.5. Generative Adversarial Networks (GANs)

8.6. Autoencoders

9. Applications in Microelectronics

- Circuit Element Parameters [228]: The parameters of the components that make up a microelectronic circuit can be optimized to achieve a targeted circuit performance, e.g., a desired speed or power consumption, or reduced heat dissipation and noise. The optimized quantities include the values of passive components such as resistances, capacitances and inductances [228], as well as various parameters of active devices and blocks (different types of transistors, amplifiers, analog-to-digital converters, etc.).

- Circuit Sizing [231]: Minimizing circuit area is important for practically all microelectronic devices and systems. It is critical wherever circuit area is constrained by design requirements, as in implantable and wearable healthcare devices. Beyond saving area, optimal circuit sizing helps improve overall performance and enhance circuit reliability.

- Power Consumption [229]: Bio-inspired algorithms can be employed to optimize power consumption by minimizing leakage current, optimizing voltage levels and reducing dynamic power dissipation in circuits. Lower dissipation improves circuit reliability by preventing overheating. Keeping power consumption to a minimum is also of paramount importance for all battery-powered circuitry.

- Sensitivity to Design Parameter Variations/Robustness [232]: Designs can be optimized to tolerate wider variations in process and environmental parameters (temperature, atmosphere, material choice and the tolerances of material properties, various technological uncertainties). This maximizes performance robustness and minimizes sensitivity to variations in external parameters.

- Production Yield [233]: Bio-inspired optimization can help decrease faults and increase the percentage of successfully produced chips during planar technology fabrication. By considering process variations, temperature variations and component tolerances, bio-inspired algorithms can optimize circuit designs to achieve robust performance and improve yield.
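To make the yield-oriented items above concrete, the sketch below pairs a Monte Carlo yield estimate with a very simple evolutionary optimizer. Everything in it is a hypothetical toy:

- the circuit is a first-order RC low-pass filter whose cutoff must fall between 900 Hz and 1100 Hz;
- R and C are assumed to have uniform tolerances of ±5% and ±10%, respectively;
- the optimizer is a (1+1) evolution strategy acting on log R and log C.

It illustrates the mechanism only and is not a method taken from the works cited above.

```python
import math
import random

# Hypothetical spec and tolerances, chosen only for illustration.
F_LO, F_HI = 900.0, 1_100.0   # acceptable cutoff band (1 kHz +/- 10 %)
F_TARGET = 1_000.0
TOL_R, TOL_C = 0.05, 0.10     # uniform +/-5 % resistor, +/-10 % capacitor tolerance


def cutoff(r: float, c: float) -> float:
    """Cutoff frequency of a first-order RC low-pass filter, f_c = 1/(2*pi*R*C)."""
    return 1.0 / (2.0 * math.pi * r * c)


def make_yield_fn(n_samples: int = 2000, seed: int = 0):
    """Monte Carlo yield estimator that reuses one fixed set of tolerance draws
    (common random numbers), so a given design always receives the same score."""
    rng = random.Random(seed)
    draws = [(rng.uniform(-TOL_R, TOL_R), rng.uniform(-TOL_C, TOL_C))
             for _ in range(n_samples)]

    def yield_of(r: float, c: float) -> float:
        passed = sum(F_LO <= cutoff(r * (1 + dr), c * (1 + dc)) <= F_HI
                     for dr, dc in draws)
        return passed / n_samples

    return yield_of


def optimize(yield_of, r0=10e3, c0=10e-9, sigma=0.1, iters=300, seed=1):
    """(1+1) evolution strategy in log-space. Score = (yield, -|log(f_c/target)|):
    yield is maximized first; the centering term guides the search while the
    yield is still zero."""
    rng = random.Random(seed)

    def score(lr, lc):
        r, c = math.exp(lr), math.exp(lc)
        return (yield_of(r, c), -abs(math.log(cutoff(r, c) / F_TARGET)))

    lr, lc = math.log(r0), math.log(c0)
    best = score(lr, lc)
    for _ in range(iters):
        cand_lr = lr + rng.gauss(0.0, sigma)   # mutate both parameters
        cand_lc = lc + rng.gauss(0.0, sigma)
        cand = score(cand_lr, cand_lc)
        if cand >= best:                       # elitist (1+1) selection
            lr, lc, best = cand_lr, cand_lc, cand
    return math.exp(lr), math.exp(lc), best[0]


r, c, y = optimize(make_yield_fn())
print(f"R = {r:.0f} ohm, C = {c * 1e9:.2f} nF, f_c = {cutoff(r, c):.0f} Hz, yield = {y:.2f}")
```

In this toy, yield depends only on the product RC, so many (R, C) pairs score equally well. A realistic design problem would add further objectives or constraints (area, noise, power). That is where population-based and multi-objective methods become useful.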

9.1. Optimizing Analog Circuit Sizing

9.2. Optimizing Circuit Routing

9.3. Future Directions

10. Applications in Nanophotonics

10.1. Optimization of Parameters of Nanophotonic Materials

10.2. Optimization of Nanostructural Design of Basic Nanophotonic Building Blocks

10.3. Optimization of Nanophotonic Devices

10.4. Optical Waveguide Optimization

10.5. Optimization of Photonic Circuit Design

10.6. Future Directions

11. Discussion

11.1. Comparative Advantages and Disadvantages of Selected Optimization Algorithms

11.2. Comparative Computational Costs of Selected Optimization Algorithms

12. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Conflicts of Interest

References

- Alanis, A.Y.; Arana-Daniel, N.; López-Franco, C. Bio-inspired Algorithms. In Bio-Inspired Algorithms for Engineering; Alanis, A.Y., Arana-Daniel, N., López-Franco, C., Eds.; Butterworth-Heinemann: Oxford, UK, 2018; pp. 1–14.

- Zhang, B.; Zhu, J.; Su, H. Toward the third generation artificial intelligence. Sci. China Inf. Sci. 2023, 66, 121101.

- Stokel-Walker, C. Can we trust AI search engines? New Sci. 2023, 258, 12.

- Gill, S.S.; Xu, M.; Ottaviani, C.; Patros, P.; Bahsoon, R.; Shaghaghi, A.; Golec, M.; Stankovski, V.; Wu, H.; Abraham, A. AI for next generation computing: Emerging trends and future directions. Internet Things 2022, 19, 100514.

- Stadnicka, D.; Sęp, J.; Amadio, R.; Mazzei, D.; Tyrovolas, M.; Stylios, C.; Carreras-Coch, A.; Merino, J.A.; Żabiński, T.; Navarro, J. Industrial Needs in the Fields of Artificial Intelligence, Internet of Things and Edge Computing. Sensors 2022, 22, 4501.

- Sujitha, S.; Pyari, S.; Jhansipriya, W.Y.; Reddy, Y.R.; Kumar, R.V.; Nandan, P.R. Artificial Intelligence based Self-Driving Car using Robotic Model. In Proceedings of the 2023 Third International Conference on Artificial Intelligence and Smart Energy (ICAIS), Coimbatore, India, 2–4 February 2023; pp. 1634–1638.

- Park, H.; Kim, S. Overviewing AI-Dedicated Hardware for On-Device AI in Smartphones. In Artificial Intelligence and Hardware Accelerators; Mishra, A., Cha, J., Park, H., Kim, S., Eds.; Springer International Publishing: Cham, Switzerland, 2023; pp. 127–150.

- Apell, P.; Eriksson, H. Artificial intelligence (AI) healthcare technology innovations: The current state and challenges from a life science industry perspective. Technol. Anal. Strateg. Manag. 2023, 35, 179–193.

- Yan, L.; Grossman, G.M. Robots and AI: A New Economic Era; Taylor & Francis: Boca Raton, FL, USA, 2023.

- Wakchaure, M.; Patle, B.K.; Mahindrakar, A.K. Application of AI techniques and robotics in agriculture: A review. Artif. Intell. Life Sci. 2023, 3, 100057.

- Pan, Y.; Zhang, L. Integrating BIM and AI for Smart Construction Management: Current Status and Future Directions. Arch. Comput. Methods Eng. 2023, 30, 1081–1110.

- Baburaj, E. Comparative analysis of bio-inspired optimization algorithms in neural network-based data mining classification. Int. J. Swarm Intell. Res. (IJSIR) 2022, 13, 25.

- Taecharungroj, V. “What can ChatGPT do?” analyzing early reactions to the innovative AI chatbot on twitter. Big Data Cogn. Comput. 2023, 7, 35.

- Zhao, B.; Zhan, D.; Zhang, C.; Su, M. Computer-aided digital media art creation based on artificial intelligence. Neural Comput. Appl. 2023.

- Adam, D. The muse in the machine. Proc. Natl. Acad. Sci. USA 2023, 120, e2306000120.

- Kenny, D. Machine Translation for Everyone: Empowering Users in the Age of Artificial Intelligence; Language Science Press: Berlin, Germany, 2022.

- Hassabis, D. Artificial Intelligence: Chess match of the century. Nature 2017, 544, 413–414.

- Kirkpatrick, K. Can AI Demonstrate Creativity? Commun. ACM 2023, 66, 21–23.

- Chamberlain, J. The Risk-Based Approach of the European Union’s Proposed Artificial Intelligence Regulation: Some Comments from a Tort Law Perspective. Eur. J. Risk Regul. 2022, 14, 1–13.

- Rahul, M.; Jayaprakash, J. Mathematical model automotive part shape optimization using metaheuristic method-review. Mater. Today Proc. 2021, 47, 100–103.

- McLean, S.D.; Juul Hansen, E.A.; Pop, P.; Craciunas, S.S. Configuring ADAS Platforms for Automotive Applications Using Metaheuristics. Front. Robot. AI 2022, 8, 762227.

- Champasak, P.; Panagant, N.; Pholdee, N.; Vio, G.A.; Bureerat, S.; Yildiz, B.S.; Yıldız, A.R. Aircraft conceptual design using metaheuristic-based reliability optimisation. Aerosp. Sci. Technol. 2022, 129, 107803.

- Calicchia, M.A.; Atefi, E.; Leylegian, J.C. Creation of small kinetic models for CFD applications: A meta-heuristic approach. Eng. Comput. 2022, 38, 1923–1937.

- Menéndez-Pérez, A.; Fernández-Aballí Altamirano, C.; Sacasas Suárez, D.; Cuevas Barraza, C.; Borrajo-Pérez, R. Metaheuristics applied to the optimization of a compact heat exchanger with enhanced heat transfer surface. Appl. Therm. Eng. 2022, 214, 118887.

- Minzu, V.; Serbencu, A. Systematic Procedure for Optimal Controller Implementation Using Metaheuristic Algorithms. Intell. Autom. Soft Comput. 2020, 26, 663–677.

- Castillo, O.; Melin, P. A Review of Fuzzy Metaheuristics for Optimal Design of Fuzzy Controllers in Mobile Robotics. In Complex Systems: Spanning Control and Computational Cybernetics: Applications: Dedicated to Professor Georgi M. Dimirovski on His Anniversary; Shi, P., Stefanovski, J., Kacprzyk, J., Eds.; Springer International Publishing: Cham, Switzerland, 2022; pp. 59–72.

- Guo, K. Special Issue on Application of Artificial Intelligence in Mechatronics. Appl. Sci. 2023, 13, 158.

- Lu, S.; Li, S.; Habibi, M.; Safarpour, H. Improving the thermo-electro-mechanical responses of MEMS resonant accelerometers via a novel multi-layer perceptron neural network. Measurement 2023, 218, 113168.

- Pertin, O.; Guha, K.; Jakšić, O.; Jakšić, Z.; Iannacci, J. Investigation of Nonlinear Piezoelectric Energy Harvester for Low-Frequency and Wideband Applications. Micromachines 2022, 13, 1399.

- Razmjooy, N.; Ashourian, M.; Foroozandeh, Z. (Eds.) Metaheuristics and Optimization in Computer and Electrical Engineering; Springer Nature Switzerland AG: Cham, Switzerland, 2021.

- Pijarski, P.; Kacejko, P.; Miller, P. Advanced Optimisation and Forecasting Methods in Power Engineering—Introduction to the Special Issue. Energies 2023, 16, 2804.

- Joseph, S.B.; Dada, E.G.; Abidemi, A.; Oyewola, D.O.; Khammas, B.M. Metaheuristic algorithms for PID controller parameters tuning: Review, approaches and open problems. Heliyon 2022, 8, e09399.

- Valencia-Ponce, M.A.; González-Zapata, A.M.; de la Fraga, L.G.; Sanchez-Lopez, C.; Tlelo-Cuautle, E. Integrated Circuit Design of Fractional-Order Chaotic Systems Optimized by Metaheuristics. Electronics 2023, 12, 413.

- Roni, M.H.K.; Rana, M.S.; Pota, H.R.; Hasan, M.M.; Hussain, M.S. Recent trends in bio-inspired meta-heuristic optimization techniques in control applications for electrical systems: A review. Int. J. Dyn. Control 2022, 10, 999–1011.

- Amini, E.; Nasiri, M.; Pargoo, N.S.; Mozhgani, Z.; Golbaz, D.; Baniesmaeil, M.; Nezhad, M.M.; Neshat, M.; Astiaso Garcia, D.; Sylaios, G. Design optimization of ocean renewable energy converter using a combined Bi-level metaheuristic approach. Energy Convers. Manag. X 2023, 19, 100371.

- Qaisar, S.M.; Khan, S.I.; Dallet, D.; Tadeusiewicz, R.; Pławiak, P. Signal-piloted processing metaheuristic optimization and wavelet decomposition based elucidation of arrhythmia for mobile healthcare. Biocybern. Biomed. Eng. 2022, 42, 681–694.

- Rasheed, I.M.; Motlak, H.J. Performance parameters optimization of CMOS analog signal processing circuits based on smart algorithms. Bull. Electr. Eng. Inform. 2023, 12, 149–157.

- de Souza Batista, L.; de Carvalho, L.M. Optimization deployed to lens design. In Advances in Ophthalmic Optics Technology; Monteiro, D.W.d.L., Trindade, B.L.C., Eds.; IOP Publishing: Bristol, UK, 2022; pp. 9-1–9-29.

- Chen, X.; Lin, D.; Zhang, T.; Zhao, Y.; Liu, H.; Cui, Y.; Hou, C.; He, J.; Liang, S. Grating waveguides by machine learning for augmented reality. Appl. Opt. 2023, 62, 2924–2935.

- Edee, K. Augmented Harris Hawks Optimizer with Gradient-Based-Like Optimization: Inverse Design of All-Dielectric Meta-Gratings. Biomimetics 2023, 8, 179.

- Vineeth, P.; Suresh, S. Performance evaluation and analysis of population-based metaheuristics for denoising of biomedical images. Res. Biomed. Eng. 2021, 37, 111–133.

- Nssibi, M.; Manita, G.; Korbaa, O. Advances in nature-inspired metaheuristic optimization for feature selection problem: A comprehensive survey. Comput. Sci. Rev. 2023, 49, 100559.

- AlShathri, S.I.; Chelloug, S.A.; Hassan, D.S.M. Parallel Meta-Heuristics for Solving Dynamic Offloading in Fog Computing. Mathematics 2022, 10, 1258.

- Ghanbarzadeh, R.; Hosseinalipour, A.; Ghaffari, A. A novel network intrusion detection method based on metaheuristic optimisation algorithms. J. Ambient. Intell. Humaniz. Comput. 2023, 14, 7575–7592.

- Darwish, S.M.; Farhan, D.A.; Elzoghabi, A.A. Building an Effective Classifier for Phishing Web Pages Detection: A Quantum-Inspired Biomimetic Paradigm Suitable for Big Data Analytics of Cyber Attacks. Biomimetics 2023, 8, 197.

- Razaghi, B.; Roayaei, M.; Charkari, N.M. On the Group-Fairness-Aware Influence Maximization in Social Networks. IEEE Trans. Comput. Soc. Syst. 2022, 1–9.

- Gomes de Araujo Rocha, H.M.; Schneider Beck, A.C.; Eduardo Kreutz, M.; Diniz Monteiro Maia, S.M.; Magalhães Pereira, M. Using evolutionary metaheuristics to solve the mapping and routing problem in networks on chip. Des. Autom. Embed. Syst. 2023.

- Fan, Z.; Lin, J.; Dai, J.; Zhang, T.; Xu, K. Photonic Hopfield neural network for the Ising problem. Opt. Express 2023, 31, 21340–21350.

- Aldalbahi, A.; Siasi, N.; Mazin, A.; Jasim, M.A. Digital compass for multi-user beam access in mmWave cellular networks. Digit. Commun. Netw. 2022.

- Mohan, P.; Subramani, N.; Alotaibi, Y.; Alghamdi, S.; Khalaf, O.I.; Ulaganathan, S. Improved Metaheuristics-Based Clustering with Multihop Routing Protocol for Underwater Wireless Sensor Networks. Sensors 2022, 22, 1618.

- Bichara, R.M.; Asadallah, F.A.B.; Awad, M.; Costantine, J. Quantum Genetic Algorithm for the Design of Miniaturized and Reconfigurable IoT Antennas. IEEE Trans. Antenn. Propag. 2023, 71, 3894–3904.

- Gharehchopogh, F.S.; Abdollahzadeh, B.; Khodadadi, N.; Mirjalili, S. Metaheuristics for clustering problems. In Comprehensive Metaheuristics; Mirjalili, S., Gandomi, A.H., Eds.; Academic Press: Cambridge, MA, USA, 2023; pp. 379–392.

- Kashani, A.R.; Camp, C.V.; Rostamian, M.; Azizi, K.; Gandomi, A.H. Population-based optimization in structural engineering: A review. Artif. Intell. Rev. 2022, 55, 345–452.

- Sadrossadat, E.; Basarir, H.; Karrech, A.; Elchalakani, M. Multi-objective mixture design and optimisation of steel fiber reinforced UHPC using machine learning algorithms and metaheuristics. Eng. Comput. 2022, 38, 2569–2582.

- Aslay, S.E.; Dede, T. Reduce the construction cost of a 7-story RC public building with metaheuristic algorithms. Archit. Eng. Des. Manag. 2023, 1–16.

- Smetankina, N.; Semenets, O.; Merkulova, A.; Merkulov, D.; Misura, S. Two-Stage Optimization of Laminated Composite Elements with Minimal Mass. In Smart Technologies in Urban Engineering; Arsenyeva, O., Romanova, T., Sukhonos, M., Tsegelnyk, Y., Eds.; Springer International Publishing: Cham, Switzerland, 2023; pp. 456–465.

- Jiang, Y.; Li, H.; Feng, B.; Wu, Z.; Zhao, S.; Wang, Z. Street Patrol Routing Optimization in Smart City Management Based on Genetic Algorithm: A Case in Zhengzhou, China. ISPRS Int. J. Geo-Inf. 2022, 11, 171.

- Jovanović, A.; Stevanović, A.; Dobrota, N.; Teodorović, D. Ecology based network traffic control: A bee colony optimization approach. Eng. Appl. Artif. Intell. 2022, 115, 105262.

- Kaur, M.; Singh, D.; Kumar, V.; Lee, H.N. MLNet: Metaheuristics-Based Lightweight Deep Learning Network for Cervical Cancer Diagnosis. IEEE J. Biomed. Health Inform. 2022, 1–11.

- Aziz, R.M. Cuckoo Search-Based Optimization for Cancer Classification: A New Hybrid Approach. J. Comput. Biol. 2022, 29, 565–584.

- Kılıç, F.; Uncu, N. Modified swarm intelligence algorithms for the pharmacy duty scheduling problem. Expert Syst. Appl. 2022, 202, 117246.

- Luukkonen, S.; van den Maagdenberg, H.W.; Emmerich, M.T.M.; van Westen, G.J.P. Artificial intelligence in multi-objective drug design. Curr. Opin. Struct. Biol. 2023, 79, 102537.

- Amorim, A.R.; Zafalon, G.F.D.; Contessoto, A.d.G.; Valêncio, C.R.; Sato, L.M. Metaheuristics for multiple sequence alignment: A systematic review. Comput. Biol. Chem. 2021, 94, 107563.

- Jain, S.; Bharti, K.K. Genome sequence assembly using metaheuristics. In Comprehensive Metaheuristics; Mirjalili, S., Gandomi, A.H., Eds.; Academic Press: Cambridge, MA, USA, 2023; pp. 347–358.

- Neelakandan, S.; Prakash, M.; Geetha, B.T.; Nanda, A.K.; Metwally, A.M.; Santhamoorthy, M.; Gupta, M.S. Metaheuristics with Deep Transfer Learning Enabled Detection and classification model for industrial waste management. Chemosphere 2022, 308, 136046.

- Alshehri, A.S.; You, F. Deep learning to catalyze inverse molecular design. Chem. Eng. J. 2022, 444, 136669.

- Juan, A.A.; Keenan, P.; Martí, R.; McGarraghy, S.; Panadero, J.; Carroll, P.; Oliva, D. A review of the role of heuristics in stochastic optimisation: From metaheuristics to learnheuristics. Ann. Oper. Res. 2023, 320, 831–861.

- Dhouib, S.; Zouari, A. Adaptive iterated stochastic metaheuristic to optimize holes drilling path in manufacturing industry: The Adaptive-Dhouib-Matrix-3 (A-DM3). Eng. Appl. Artif. Intell. 2023, 120, 105898.

- Para, J.; Del Ser, J.; Nebro, A.J. Energy-Aware Multi-Objective Job Shop Scheduling Optimization with Metaheuristics in Manufacturing Industries: A Critical Survey, Results, and Perspectives. Appl. Sci. 2022, 12, 1491.

- Sarkar, T.; Salauddin, M.; Mukherjee, A.; Shariati, M.A.; Rebezov, M.; Tretyak, L.; Pateiro, M.; Lorenzo, J.M. Application of bio-inspired optimization algorithms in food processing. Curr. Res. Food Sci. 2022, 5, 432–450.

- Khan, A.A.; Shaikh, Z.A.; Belinskaja, L.; Baitenova, L.; Vlasova, Y.; Gerzelieva, Z.; Laghari, A.A.; Abro, A.A.; Barykin, S. A Blockchain and Metaheuristic-Enabled Distributed Architecture for Smart Agricultural Analysis and Ledger Preservation Solution: A Collaborative Approach. Appl. Sci. 2022, 12, 1487.

- Mousapour Mamoudan, M.; Ostadi, A.; Pourkhodabakhsh, N.; Fathollahi-Fard, A.M.; Soleimani, F. Hybrid neural network-based metaheuristics for prediction of financial markets: A case study on global gold market. J. Comput. Des. Eng. 2023, 10, 1110–1125.

- Houssein, E.H.; Dirar, M.; Hussain, K.; Mohamed, W.M. Artificial Neural Networks for Stock Market Prediction: A Comprehensive Review. In Metaheuristics in Machine Learning: Theory and Applications; Oliva, D., Houssein, E.H., Hinojosa, S., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 409–444.

- Quek, S.G.; Selvachandran, G.; Tan, J.H.; Thiang, H.Y.A.; Tuan, N.T.; Son, L.H. A New Hybrid Model of Fuzzy Time Series and Genetic Algorithm Based Machine Learning Algorithm: A Case Study of Forecasting Prices of Nine Types of Major Cryptocurrencies. Big Data Res. 2022, 28, 100315.

- Hosseinalipour, A.; Ghanbarzadeh, R. A novel metaheuristic optimisation approach for text sentiment analysis. Int. J. Mach. Learn. Cybern. 2023, 14, 889–909.

- Wolpert, D.H.; Macready, W.G. No free lunch theorems for optimization. IEEE Trans. Evol. Comput. 1997, 1, 67–82.

- Rajwar, K.; Deep, K.; Das, S. An exhaustive review of the metaheuristic algorithms for search and optimization: Taxonomy, applications, and open challenges. Artif. Intell. Rev. 2023.

- Almufti, S.M.; Marqas, R.B.; Saeed, V.A. Taxonomy of bio-inspired optimization algorithms. J. Adv. Comput. Sci. Technol. 2019, 8, 23–31.

- Fan, X.; Sayers, W.; Zhang, S.; Han, Z.; Ren, L.; Chizari, H. Review and Classification of Bio-inspired Algorithms and Their Applications. J. Bionic Eng. 2020, 17, 611–631.

- Molina, D.; Poyatos, J.; Del Ser, J.; García, S.; Hussain, A.; Herrera, F. Comprehensive Taxonomies of Nature- and Bio-inspired Optimization: Inspiration Versus Algorithmic Behavior, Critical Analysis and Recommendations. Cogn. Comput. 2020, 12, 897–939.

- Beiranvand, V.; Hare, W.; Lucet, Y. Best practices for comparing optimization algorithms. Optim. Eng. 2017, 18, 815–848.

- Halim, A.H.; Ismail, I.; Das, S. Performance assessment of the metaheuristic optimization algorithms: An exhaustive review. Artif. Intell. Rev. 2021, 54, 2323–2409.

- Schneider, P.-I.; Garcia Santiago, X.; Soltwisch, V.; Hammerschmidt, M.; Burger, S.; Rockstuhl, C. Benchmarking Five Global Optimization Approaches for Nano-optical Shape Optimization and Parameter Reconstruction. ACS Photonics 2019, 6, 2726–2733.

- Smith, D.R. Top-down synthesis of divide-and-conquer algorithms. Artif. Intell. 1985, 27, 43–96.

- Jacobson, S.H.; Yücesan, E. Analyzing the Performance of Generalized Hill Climbing Algorithms. J. Heuristics 2004, 10, 387–405.

- Boettcher, S. Inability of a graph neural network heuristic to outperform greedy algorithms in solving combinatorial optimization problems. Nat. Mach. Intell. 2023, 5, 24–25.

- Cheriyan, J.; Cummings, R.; Dippel, J.; Zhu, J. An improved approximation algorithm for the matching augmentation problem. SIAM J. Discret. Math. 2023, 37, 163–190.

- Gao, J.; Tao, X.; Cai, S. Towards more efficient local search algorithms for constrained clustering. Inf. Sci. 2023, 621, 287–307.

- Bahadori-Chinibelagh, S.; Fathollahi-Fard, A.M.; Hajiaghaei-Keshteli, M. Two Constructive Algorithms to Address a Multi-Depot Home Healthcare Routing Problem. IETE J. Res. 2022, 68, 1108–1114.

- Nadel, B.A. Constraint satisfaction algorithms. Comput. Intell. 1989, 5, 188–224.

- Narendra, P.M.; Fukunaga, K. A Branch and Bound Algorithm for Feature Subset Selection. IEEE Trans. Comput. 1977, 26, 917–922.

- Basu, A.; Conforti, M.; Di Summa, M.; Jiang, H. Complexity of branch-and-bound and cutting planes in mixed-integer optimization. Math. Program. 2023, 198, 787–810.

- Dutt, S.; Deng, W. Cluster-aware iterative improvement techniques for partitioning large VLSI circuits. ACM Trans. Des. Autom. Electron. Syst. 2002, 7, 91–121.

- Vasant, P.; Weber, G.-W.; Dieu, V.N. (Eds.) Handbook of Research on Modern Optimization Algorithms and Applications in Engineering and Economics; IGI Global: Hershey, PA, USA, 2016.

- Fávero, L.P.; Belfiore, P. Data Science for Business and Decision Making; Academic Press: Cambridge, MA, USA, 2018.

- Montoya, O.D.; Molina-Cabrera, A.; Gil-González, W. A Possible Classification for Metaheuristic Optimization Algorithms in Engineering and Science. Ingeniería 2022, 27, 1.

- Ma, Z.; Wu, G.; Suganthan, P.N.; Song, A.; Luo, Q. Performance assessment and exhaustive listing of 500+ nature-inspired metaheuristic algorithms. Swarm Evol. Comput. 2023, 77, 101248.

- Del Ser, J.; Osaba, E.; Molina, D.; Yang, X.-S.; Salcedo-Sanz, S.; Camacho, D.; Das, S.; Suganthan, P.N.; Coello Coello, C.A.; Herrera, F. Bio-inspired computation: Where we stand and what’s next. Swarm Evol. Comput. 2019, 48, 220–250.

- Holland, J.H. Adaptation in Natural and Artificial Systems; University of Michigan Press: Ann Arbor, MI, USA, 1975.

- Wilson, A.J.; Pallavi, D.R.; Ramachandran, M.; Chinnasamy, S.; Sowmiya, S. A review on memetic algorithms and its developments. Electr. Autom. Eng. 2022, 1, 7–12.

- Bilal; Pant, M.; Zaheer, H.; Garcia-Hernandez, L.; Abraham, A. Differential Evolution: A review of more than two decades of research. Eng. Appl. Artif. Intell. 2020, 90, 103479.

- Sivanandam, S.N.; Deepa, S.N. Genetic Algorithms; Springer: Berlin/Heidelberg, Germany, 2008.

- Dawkins, R. The Selfish Gene; Oxford University Press: Oxford, UK, 1976.

- Sengupta, S.; Basak, S.; Peters, R.A. Particle Swarm Optimization: A survey of historical and recent developments with hybridization perspectives. Mach. Learn. Knowl. Extr. 2019, 1, 157–191.

- Mirjalili, S.; Lewis, A. The Whale Optimization Algorithm. Adv. Eng. Softw. 2016, 95, 51–67. [Google Scholar] [CrossRef]

- Mirjalili, S.; Mirjalili, S.M.; Lewis, A. Grey Wolf Optimizer. Adv. Eng. Softw. 2014, 69, 46–61. [Google Scholar] [CrossRef]

- Karaboga, D.; Gorkemli, B.; Ozturk, C.; Karaboga, N. A comprehensive survey: Artificial bee colony (ABC) algorithm and applications. Artif. Intell. Rev. 2014, 42, 21–57. [Google Scholar] [CrossRef]

- Dorigo, M.; Stützle, T. Ant Colony Optimization: Overview and Recent Advances. In Handbook of Metaheuristics; Gendreau, M., Potvin, J.-Y., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 311–351. [Google Scholar] [CrossRef]

- Neshat, M.; Sepidnam, G.; Sargolzaei, M.; Toosi, A.N. Artificial fish swarm algorithm: A survey of the state-of-the-art, hybridization, combinatorial and indicative applications. Artif. Intell. Rev. 2014, 42, 965–997. [Google Scholar] [CrossRef]

- Fister, I.; Fister, I.; Yang, X.-S.; Brest, J. A comprehensive review of firefly algorithms. Swarm Evol. Comput. 2013, 13, 34–46. [Google Scholar] [CrossRef]

- Ranjan, R.K.; Kumar, V. A systematic review on fruit fly optimization algorithm and its applications. Artif. Intell. Rev. 2023. [Google Scholar] [CrossRef]

- Yang, X.-S.; Deb, S. Cuckoo search: Recent advances and applications. Neural Comput. Appl. 2014, 24, 169–174. [Google Scholar] [CrossRef]

- Agarwal, T.; Kumar, V. A Systematic Review on Bat Algorithm: Theoretical Foundation, Variants, and Applications. Arch. Comput. Methods Eng. 2022, 29, 2707–2736. [Google Scholar] [CrossRef]

- Selva Rani, B.; Aswani Kumar, C. A Comprehensive Review on Bacteria Foraging Optimization Technique. In Multi-objective Swarm Intelligence: Theoretical Advances and Applications; Dehuri, S., Jagadev, A.K., Panda, M., Eds.; Springer: Berlin/Heidelberg, Germany, 2015; pp. 1–25. [Google Scholar] [CrossRef]

- Luque-Chang, A.; Cuevas, E.; Fausto, F.; Zaldívar, D.; Pérez, M. Social Spider Optimization Algorithm: Modifications, Applications, and Perspectives. Math. Probl. Eng. 2018, 2018, 6843923. [Google Scholar] [CrossRef]

- Cuevas, E.; Fausto, F.; González, A. Locust Search Algorithm Applied to Multi-threshold Segmentation. In New Advancements in Swarm Algorithms: Operators and Applications; Cuevas, E., Fausto, F., González, A., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 211–240. [Google Scholar] [CrossRef]

- Ezugwu, A.E.; Prayogo, D. Symbiotic organisms search algorithm: Theory, recent advances and applications. Expert Syst. Appl. 2019, 119, 184–209. [Google Scholar] [CrossRef]

- Shehab, M.; Abualigah, L.; Al Hamad, H.; Alabool, H.; Alshinwan, M.; Khasawneh, A.M. Moth–flame optimization algorithm: Variants and applications. Neural Comput. Appl. 2020, 32, 9859–9884. [Google Scholar] [CrossRef]

- Hashim, F.A.; Houssein, E.H.; Hussain, K.; Mabrouk, M.S.; Al-Atabany, W. Honey Badger Algorithm: New metaheuristic algorithm for solving optimization problems. Math. Comput. Simul. 2022, 192, 84–110. [Google Scholar] [CrossRef]

- Li, J.; Lei, H.; Alavi, A.H.; Wang, G.-G. Elephant Herding Optimization: Variants, Hybrids, and Applications. Mathematics 2020, 8, 1415. [Google Scholar] [CrossRef]

- Abualigah, L.; Diabat, A. A comprehensive survey of the Grasshopper optimization algorithm: Results, variants, and applications. Neural Comput. Appl. 2020, 32, 15533–15556. [Google Scholar] [CrossRef]

- Alabool, H.M.; Alarabiat, D.; Abualigah, L.; Heidari, A.A. Harris hawks optimization: A comprehensive review of recent variants and applications. Neural Comput. Appl. 2021, 33, 8939–8980. [Google Scholar] [CrossRef]

- Jiang, Y.; Wu, Q.; Zhu, S.; Zhang, L. Orca predation algorithm: A novel bio-inspired algorithm for global optimization problems. Expert Syst. Appl. 2022, 188, 116026. [Google Scholar] [CrossRef]

- Zamani, H.; Nadimi-Shahraki, M.H.; Gandomi, A.H. Starling murmuration optimizer: A novel bio-inspired algorithm for global and engineering optimization. Comput. Methods Appl. Mech. Eng. 2022, 392, 114616.

- Dehghani, M.; Trojovský, P. Serval Optimization Algorithm: A New Bio-Inspired Approach for Solving Optimization Problems. Biomimetics 2022, 7, 204.

- Salcedo-Sanz, S. A review on the coral reefs optimization algorithm: New development lines and current applications. Prog. Artif. Intell. 2017, 6, 1–15.

- Wang, G.-G.; Gandomi, A.H.; Alavi, A.H.; Gong, D. A comprehensive review of krill herd algorithm: Variants, hybrids and applications. Artif. Intell. Rev. 2019, 51, 119–148.

- Agushaka, J.O.; Ezugwu, A.E.; Abualigah, L. Gazelle optimization algorithm: A novel nature-inspired metaheuristic optimizer. Neural Comput. Appl. 2023, 35, 4099–4131.

- Hizarci, H.; Demirel, O.; Turkay, B.E. Distribution network reconfiguration using time-varying acceleration coefficient assisted binary particle swarm optimization. Eng. Sci. Technol. Int. J. 2022, 35, 101230.

- Liu, H.; Zhang, X.; Liang, H.; Tu, L. Stability analysis of the human behavior-based particle swarm optimization without stagnation assumption. Expert Syst. Appl. 2020, 159, 113638.

- Lin, A.; Sun, W.; Yu, H.; Wu, G.; Tang, H. Adaptive comprehensive learning particle swarm optimization with cooperative archive. Appl. Soft Comput. 2019, 77, 533–546.

- Mech, L.D.; Boitani, L. Wolves: Behavior, Ecology, and Conservation; University of Chicago Press: Chicago, IL, USA, 2007.

- Yang, X.-S. A New Metaheuristic Bat-Inspired Algorithm. In Nature Inspired Cooperative Strategies for Optimization (NICSO 2010); González, J.R., Pelta, D.A., Cruz, C., Terrazas, G., Krasnogor, N., Eds.; Springer: Berlin/Heidelberg, Germany, 2010; pp. 65–74.

- Ahmmad, S.N.Z.; Muchtar, F. A review on applications of optimization using bat algorithm. Int. J. Adv. Trends Comput. Sci. Eng. 2020, 9, 212–219.

- Yang, X.-S.; He, X. Bat algorithm: Literature review and applications. Int. J. Bio-Inspired Comput. 2013, 5, 141–149.

- Biyanto, T.R.; Matradji; Irawan, S.; Febrianto, H.Y.; Afdanny, N.; Rahman, A.H.; Gunawan, K.S.; Pratama, J.A.D.; Bethiana, T.N. Killer Whale Algorithm: An Algorithm Inspired by the Life of Killer Whale. Procedia Comput. Sci. 2017, 124, 151–157.

- Golilarz, N.A.; Gao, H.; Addeh, A.; Pirasteh, S. ORCA optimization algorithm: A new meta-heuristic tool for complex optimization problems. In Proceedings of the 17th International Computer Conference on Wavelet Active Media Technology and Information Processing (ICCWAMTIP), Chengdu, China, 18–20 December 2020; pp. 198–204.

- Drias, H.; Drias, Y.; Khennak, I. A new swarm algorithm based on orcas intelligence for solving maze problems. In Trends and Innovations in Information Systems and Technologies; Rocha, Á., Adeli, H., Reis, L.P., Costanzo, S., Orovic, I., Moreira, F., Eds.; Springer International Publishing: Cham, Switzerland, 2020; pp. 788–797.

- Drias, H.; Bendimerad, L.S.; Drias, Y. A Three-Phase Artificial Orcas Algorithm for Continuous and Discrete Problems. Int. J. Appl. Metaheuristic Comput. 2022, 13, 1–20.

- Cavagna, A.; Cimarelli, A.; Giardina, I.; Parisi, G.; Santagati, R.; Stefanini, F.; Viale, M. Scale-free correlations in starling flocks. Proc. Natl. Acad. Sci. USA 2010, 107, 11865–11870.

- Chu, H.; Yi, J.; Yang, F. Chaos Particle Swarm Optimization Enhancement Algorithm for UAV Safe Path Planning. Appl. Sci. 2022, 12, 8977.

- Dasgupta, D.; Yu, S.; Nino, F. Recent Advances in Artificial Immune Systems: Models and Applications. Appl. Soft Comput. 2011, 11, 1574–1587.

- Sadollah, A.; Sayyaadi, H.; Yadav, A. A dynamic metaheuristic optimization model inspired by biological nervous systems: Neural network algorithm. Appl. Soft Comput. 2018, 71, 747–782.

- Mousavirad, S.J.; Ebrahimpour-Komleh, H. Human mental search: A new population-based metaheuristic optimization algorithm. Appl. Intell. 2017, 47, 850–887.

- Bernardino, H.S.; Barbosa, H.J.C. Artificial Immune Systems for Optimization. In Nature-Inspired Algorithms for Optimisation; Chiong, R., Ed.; Springer: Berlin/Heidelberg, Germany, 2009; pp. 389–411.

- Sharma, V.; Tripathi, A.K. A systematic review of meta-heuristic algorithms in IoT based application. Array 2022, 14, 100164.

- Tang, C.; Todo, Y.; Ji, J.; Lin, Q.; Tang, Z. Artificial immune system training algorithm for a dendritic neuron model. Knowl. Based Syst. 2021, 233, 107509.

- Xing, B.; Gao, W.-J. Imperialist Competitive Algorithm. In Innovative Computational Intelligence: A Rough Guide to 134 Clever Algorithms; Xing, B., Gao, W.-J., Eds.; Springer International Publishing: Cham, Switzerland, 2014; pp. 203–209.

- Bozorgi, A.; Bozorg-Haddad, O.; Chu, X. Anarchic Society Optimization (ASO) Algorithm. In Advanced Optimization by Nature-Inspired Algorithms; Bozorg-Haddad, O., Ed.; Springer: Singapore, 2018; pp. 31–38.

- Abdel-Basset, M.; Mohamed, R.; Chakrabortty, R.K.; Sallam, K.; Ryan, M.J. An efficient teaching-learning-based optimization algorithm for parameters identification of photovoltaic models: Analysis and validations. Energy Convers. Manag. 2021, 227, 113614.

- Ray, T.; Liew, K.M. Society and civilization: An optimization algorithm based on the simulation of social behavior. IEEE Trans. Evol. Comput. 2003, 7, 386–396.

- Husseinzadeh Kashan, A. League Championship Algorithm (LCA): An algorithm for global optimization inspired by sport championships. Appl. Soft Comput. 2014, 16, 171–200.

- Moghdani, R.; Salimifard, K. Volleyball Premier League Algorithm. Appl. Soft Comput. 2018, 64, 161–185.

- Biyanto, T.R.; Fibrianto, H.Y.; Nugroho, G.; Hatta, A.M.; Listijorini, E.; Budiati, T.; Huda, H. Duelist algorithm: An algorithm inspired by how duelist improve their capabilities in a duel. In Advances in Swarm Intelligence; Tan, Y., Shi, Y., Niu, B., Eds.; Springer International Publishing: Cham, Switzerland, 2016; pp. 39–47.

- Laguna, M. Tabu Search. In Handbook of Heuristics; Martí, R., Pardalos, P.M., Resende, M.G.C., Eds.; Springer International Publishing: Cham, Switzerland, 2018; pp. 741–758.

- Ghasemian, H.; Ghasemian, F.; Vahdat-Nejad, H. Human urbanization algorithm: A novel metaheuristic approach. Math. Comput. Simul. 2020, 178, 1–15.

- Askari, Q.; Younas, I.; Saeed, M. Political Optimizer: A novel socio-inspired meta-heuristic for global optimization. Knowl. Based Syst. 2020, 195, 105709.

- Muazu, A.A.; Hashim, A.S.; Sarlan, A. Review of Nature Inspired Metaheuristic Algorithm Selection for Combinatorial t-Way Testing. IEEE Access 2022, 10, 27404–27431.

- Abdel-Basset, M.; Shawky, L.A. Flower pollination algorithm: A comprehensive review. Artif. Intell. Rev. 2019, 52, 2533–2557.

- Ibrahim, A.; Anayi, F.; Packianather, M.; Alomari, O.A. New hybrid invasive weed optimization and machine learning approach for fault detection. Energies 2022, 15, 1488.

- Waqar, A.; Subramaniam, U.; Farzana, K.; Elavarasan, R.M.; Habib, H.U.R.; Zahid, M.; Hossain, E. Analysis of Optimal Deployment of Several DGs in Distribution Networks Using Plant Propagation Algorithm. IEEE Access 2020, 8, 175546–175562.

- Gupta, D.; Sharma, P.; Choudhary, K.; Gupta, K.; Chawla, R.; Khanna, A.; Albuquerque, V.H.C.D. Artificial plant optimization algorithm to detect infected leaves using machine learning. Expert Syst. 2021, 38, e12501.

- Cinar, A.C.; Korkmaz, S.; Kiran, M.S. A discrete tree-seed algorithm for solving symmetric traveling salesman problem. Eng. Sci. Technol. Int. J. 2020, 23, 879–890.

- Premaratne, U.; Samarabandu, J.; Sidhu, T. A new biologically inspired optimization algorithm. In Proceedings of the 2009 International Conference on Industrial and Information Systems (ICIIS), Peradeniya, Sri Lanka, 28–31 December 2009; pp. 279–284.

- Yang, X.-S. Flower Pollination Algorithm for Global Optimization. In Unconventional Computation and Natural Computation; Durand-Lose, J., Jonoska, N., Eds.; Springer: Berlin/Heidelberg, Germany, 2012; pp. 240–249.

- Chawla, M.; Duhan, M. Levy Flights in Metaheuristics Optimization Algorithms—A Review. Appl. Artif. Intell. 2018, 32, 802–821.

- Yang, X.-S.; Karamanoglu, M.; He, X. Flower pollination algorithm: A novel approach for multiobjective optimization. Eng. Optim. 2014, 46, 1222–1237.

- Valenzuela, L.; Valdez, F.; Melin, P. Flower Pollination Algorithm with Fuzzy Approach for Solving Optimization Problems. In Nature-Inspired Design of Hybrid Intelligent Systems; Melin, P., Castillo, O., Kacprzyk, J., Eds.; Springer International Publishing: Cham, Switzerland, 2017; pp. 357–369.

- Burke, E.K.; Gendreau, M.; Hyde, M.; Kendall, G.; Ochoa, G.; Özcan, E.; Qu, R. Hyper-heuristics: A survey of the state of the art. J. Oper. Res. Soc. 2013, 64, 1695–1724.

- Cowling, P.; Kendall, G.; Soubeiga, E. A hyperheuristic approach to scheduling a sales summit. In Practice and Theory of Automated Timetabling III, Proceedings of the Third International Conference, PATAT 2000, Konstanz, Germany, 16–18 August 2000; Selected Papers; Burke, E., Erben, W., Eds.; Springer: Berlin/Heidelberg, Germany, 2001; pp. 176–190.

- Moerland, T.M.; Broekens, J.; Plaat, A.; Jonker, C.M. Model-based Reinforcement Learning: A Survey. Found. Trends® Mach. Learn. 2023, 16, 1–118.

- Mazyavkina, N.; Sviridov, S.; Ivanov, S.; Burnaev, E. Reinforcement learning for combinatorial optimization: A survey. Comput. Oper. Res. 2021, 134, 105400.

- Lin, J.; Zhu, L.; Gao, K. A genetic programming hyper-heuristic approach for the multi-skill resource constrained project scheduling problem. Expert Syst. Appl. 2020, 140, 112915.

- Raidl, G.R.; Puchinger, J.; Blum, C. Metaheuristic Hybrids. In Handbook of Metaheuristics; Gendreau, M., Potvin, J.-Y., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 385–417.

- Pellerin, R.; Perrier, N.; Berthaut, F. A survey of hybrid metaheuristics for the resource-constrained project scheduling problem. Eur. J. Oper. Res. 2020, 280, 395–416.

- Laskar, N.M.; Guha, K.; Chatterjee, I.; Chanda, S.; Baishnab, K.L.; Paul, P.K. HWPSO: A new hybrid whale-particle swarm optimization algorithm and its application in electronic design optimization problems. Appl. Intell. 2019, 49, 265–291.

- Mohamed, H.; Korany, R.M.; Mohamed Farhat, O.H.; Salah, S.A.O. Optimal design of vertical silicon nanowires solar cell using hybrid optimization algorithm. J. Photonics Energy 2017, 8, 022502.

- Cruz-Duarte, J.M.; Amaya, I.; Ortiz-Bayliss, J.C.; Conant-Pablos, S.E.; Terashima-Marín, H.; Shi, Y. Hyper-Heuristics to customise metaheuristics for continuous optimisation. Swarm Evol. Comput. 2021, 66, 100935.

- Talbi, E.-G. Combining metaheuristics with mathematical programming, constraint programming and machine learning. Ann. Oper. Res. 2016, 240, 171–215.

- Mahesh, B. Machine learning algorithms—A review. Int. J. Sci. Res. 2020, 9, 381–386.

- Keivanian, F.; Chiong, R. A novel hybrid fuzzy–metaheuristic approach for multimodal single and multi-objective optimization problems. Expert Syst. Appl. 2022, 195, 116199.

- Coello, C.A.C.; Brambila, S.G.; Gamboa, J.F.; Tapia, M.G.C. Multi-Objective Evolutionary Algorithms: Past, Present, and Future. In Black Box Optimization, Machine Learning, and No-Free Lunch Theorems; Pardalos, P.M., Rasskazova, V., Vrahatis, M.N., Eds.; Springer International Publishing: Cham, Switzerland, 2021; pp. 137–162.

- Coello, C.A.C.; Lamont, G.B.; Veldhuizen, D.A.V. Evolutionary Algorithms for Solving Multi-Objective Problems; Springer: New York, NY, USA, 2007.

- Zhang, W.-W.; Qi, H.; Ji, Y.-K.; He, M.-J.; Ren, Y.-T.; Li, Y. Boosting photoelectric performance of thin film GaAs solar cell based on multi-objective optimization for solar energy utilization. Sol. Energy 2021, 230, 1122–1132.

- Xulin, W.; Zhenyuan, J.; Jianwei, M.; Dongxu, H.; Xiaoqian, Q.; Chuanheng, G.; Wei, L. Optimization of nanosecond laser processing for microgroove on TC4 surface by combining response surface method and genetic algorithm. Opt. Eng. 2022, 61, 086103.

- Shunmugathammal, M.; Columbus, C.C.; Anand, S. A nature inspired optimization algorithm for VLSI fixed-outline floorplanning. Analog Integr. Circuits Signal Process. 2020, 103, 173–186.

- Abdi, A.; Zarandi, H.R. A meta heuristic-based task scheduling and mapping method to optimize main design challenges of heterogeneous multiprocessor embedded systems. Microelectron. J. 2019, 87, 1–11.

- Ramírez-Ochoa, D.-D.; Pérez-Domínguez, L.A.; Martínez-Gómez, E.-A.; Luviano-Cruz, D. PSO, a swarm intelligence-based evolutionary algorithm as a decision-making strategy: A review. Symmetry 2022, 14, 455.

- Vinay Kumar, S.B.; Rao, P.V.; Singh, M.K. Optimal floor planning in VLSI using improved adaptive particle swarm optimization. Evol. Intell. 2022, 15, 925–938.

- Kien, N.T.; Hong, I.-P. Application of Metaheuristic Optimization Algorithm and 3D Printing Technique in 3D Bandpass Frequency Selective Structure. J. Electr. Eng. Technol. 2020, 15, 795–801.

- Kritele, L.; Benhala, B.; Zorkani, I. Ant Colony Optimization for Optimal Low-Pass Filter Sizing. In Bioinspired Heuristics for Optimization; Talbi, E.-G., Nakib, A., Eds.; Springer International Publishing: Cham, Switzerland, 2019; pp. 283–299.

- Srinivasan, B.; Venkatesan, R.; Aljafari, B.; Kotecha, K.; Indragandhi, V.; Vairavasundaram, S. A Novel Multicriteria Optimization Technique for VLSI Floorplanning Based on Hybridized Firefly and Ant Colony Systems. IEEE Access 2023, 11, 14677–14692.

- Qin, D.; He, Z.; Zhao, X.; Liu, J.; Zhang, F.; Xiao, L. Area and power optimization for Fixed Polarity Reed–Muller logic circuits based on Multi-strategy Multi-objective Artificial Bee Colony algorithm. Eng. Appl. Artif. Intell. 2023, 121, 105906.

- Ravi, R.V.; Subramaniam, K.; Roshini, T.V.; Muthusamy, S.P.B.; Prasanna Venkatesan, G.K.D. Optimization algorithms, an effective tool for the design of digital filters: A review. J. Ambient. Intell. Humaniz. Comput. 2019.

- Qamar, F.; Siddiqui, M.U.; Hindia, M.N.; Hassan, R.; Nguyen, Q.N. Issues, challenges, and research trends in spectrum management: A comprehensive overview and new vision for designing 6G networks. Electronics 2020, 9, 1416.

- Lorenti, G.; Ragusa, C.S.; Repetto, M.; Solimene, L. Data-Driven Constraint Handling in Multi-Objective Inductor Design. Electronics 2023, 12, 781.

- Brûlé, Y.; Wiecha, P.; Cuche, A.; Paillard, V.; Colas des Francs, G. Magnetic and electric Purcell factor control through geometry optimization of high index dielectric nanostructures. Opt. Express 2022, 30, 20360–20372.

- Liu, B.; Zhang, W.; Li, K.; Duan, W.; Liu, M.; Meng, Q. High-Efficiency Multiobjective Synchronous Modeling and Solution of Analog ICs. Circuits Syst. Signal Process. 2023, 42, 1984–2006.

- Srinivasan, B.; Venkatesan, R. Multi-objective optimization for energy and heat-aware VLSI floorplanning using enhanced firefly optimization. Soft Comput. 2021, 25, 4159–4174.

- Dayana, R.; Kalavathy, G.M. Quantum firefly secure routing for fog based wireless sensor networks. Intell. Autom. Soft Comput. 2022, 31, 1511–1528.

- Asha, A.; Rajesh, A.; Verma, N.; Poonguzhali, I. Multi-objective-derived energy efficient routing in wireless sensor networks using hybrid African vultures-cuckoo search optimization. Int. J. Commun. Syst. 2023, 36, e5438.

- Gude, S.; Jana, K.C.; Laudani, A.; Thanikanti, S.B. Parameter extraction of photovoltaic cell based on a multi-objective approach using nondominated sorting cuckoo search optimization. Sol. Energy 2022, 239, 359–374.

- Acharya, B.R.; Sethi, A.; Das, A.K.; Saha, P.; Pratihar, D.K. Multi-objective optimization in electrochemical micro-drilling of Ti6Al4V using nature-inspired techniques. Mater. Manuf. Process. 2023.

- Saif, F.A.; Latip, R.; Hanapi, Z.M.; Shafinah, K. Multi-Objective Grey Wolf Optimizer Algorithm for Task Scheduling in Cloud-Fog Computing. IEEE Access 2023, 11, 20635–20646.

- Miriyala, S.S.; Mitra, K. Multi-objective optimization of iron ore induration process using optimal neural networks. Mater. Manuf. Process. 2020, 35, 537–544.

- Zhang, J.; Taflanidis, A.A. Multi-objective optimization for design under uncertainty problems through surrogate modeling in augmented input space. Struct. Multidiscip. Optim. 2019, 59, 351–372.

- Barto, A.G. Chapter 2—Reinforcement Learning. In Neural Systems for Control; Omidvar, O., Elliott, D.L., Eds.; Academic Press: San Diego, CA, USA, 1997; pp. 7–30.

- Zou, F.; Yen, G.G.; Tang, L.; Wang, C. A reinforcement learning approach for dynamic multi-objective optimization. Inf. Sci. 2021, 546, 815–834.

- Kim, S.; Kim, I.; You, D. Multi-condition multi-objective optimization using deep reinforcement learning. J. Comput. Phys. 2022, 462, 111263.

- Zhang, X.; Yu, G.; Jin, Y.; Qian, F. An adaptive Gaussian process based manifold transfer learning to expensive dynamic multi-objective optimization. Neurocomputing 2023, 538, 126212.

- Hernández Rodríguez, T.; Sekulic, A.; Lange-Hegermann, M.; Frahm, B. Designing Robust Biotechnological Processes Regarding Variabilities Using Multi-Objective Optimization Applied to a Biopharmaceutical Seed Train Design. Processes 2022, 10, 883.

- Liu, D.; Xue, S.; Zhao, B.; Luo, B.; Wei, Q. Adaptive Dynamic Programming for Control: A Survey and Recent Advances. IEEE Trans. Syst. Man Cybern. Syst. 2021, 51, 142–160.

- Li, Y.; Jia, M.; Han, X.; Bai, X.-S. Towards a comprehensive optimization of engine efficiency and emissions by coupling artificial neural network (ANN) with genetic algorithm (GA). Energy 2021, 225, 120331.

- Bas, E.; Egrioglu, E.; Kolemen, E. Training simple recurrent deep artificial neural network for forecasting using particle swarm optimization. Granul. Comput. 2022, 7, 411–420.

- Xue, Y.; Tong, Y.; Neri, F. An ensemble of differential evolution and Adam for training feed-forward neural networks. Inf. Sci. 2022, 608, 453–471.

- Lendaris, G.G.; Mathia, K.; Saeks, R. Linear Hopfield networks and constrained optimization. IEEE Trans. Syst. Man Cybern. Part B (Cybern.) 1999, 29, 114–118.

- Probst, M.; Rothlauf, F.; Grahl, J. Scalability of using Restricted Boltzmann Machines for combinatorial optimization. Eur. J. Oper. Res. 2017, 256, 368–383.

- Li, X.; Wang, J.; Kwong, S. Boolean matrix factorization based on collaborative neurodynamic optimization with Boltzmann machines. Neural Netw. 2022, 153, 142–151.

- Morales-Hernández, A.; Van Nieuwenhuyse, I.; Rojas Gonzalez, S. A survey on multi-objective hyperparameter optimization algorithms for machine learning. Artif. Intell. Rev. 2022.

- Yang, L.; Shami, A. On hyperparameter optimization of machine learning algorithms: Theory and practice. Neurocomputing 2020, 415, 295–316.

- Kaveh, M.; Mesgari, M.S. Application of Meta-Heuristic Algorithms for Training Neural Networks and Deep Learning Architectures: A Comprehensive Review. Neural Process. Lett. 2022.

- İnik, Ö.; Altıok, M.; Ülker, E.; Koçer, B. MODE-CNN: A fast converging multi-objective optimization algorithm for CNN-based models. Appl. Soft Comput. 2021, 109, 107582.

- Hosseini, A.; Wang, J.; Hosseini, S.M. A recurrent neural network for solving a class of generalized convex optimization problems. Neural Netw. 2013, 44, 78–86.

- Kitayama, S.; Srirat, J.; Arakawa, M.; Yamazaki, K. Sequential approximate multi-objective optimization using radial basis function network. Struct. Multidiscip. Optim. 2013, 48, 501–515.

- Chang, H.; Zhang, G.; Sun, Y.; Lu, S. Using Sequence-Approximation Optimization and Radial-Basis-Function Network for Brake-Pedal Multi-Target Warping and Cooling. Polymers 2022, 14, 2578.

- Jafar-Zanjani, S.; Salary, M.M.; Huynh, D.; Elhamifar, E.; Mosallaei, H. TCO-Based Active Dielectric Metasurfaces Design by Conditional Generative Adversarial Networks. Adv. Theory Simul. 2021, 4, 2000196.

- Liu, X.; Chu, J.; Zhang, Z.; He, M. Data-driven multi-objective molecular design of ionic liquid with high generation efficiency on small dataset. Mater. Des. 2022, 220, 110888.

- Kartci, A.; Agambayev, A.; Farhat, M.; Herencsar, N.; Brancik, L.; Bagci, H.; Salama, K.N. Synthesis and Optimization of Fractional-Order Elements Using a Genetic Algorithm. IEEE Access 2019, 7, 80233–80246.

- Lanchares, J.; Garnica, O.; Fernández-de-Vega, F.; Ignacio Hidalgo, J. A review of bioinspired computer-aided design tools for hardware design. Concurr. Comput. Pract. Exp. 2013, 25, 1015–1036.

- Karthick, R.; Senthil Selvi, A.; Meenalochini, P.; Senthil Pandi, S. An Optimal Partitioning and Floor Planning for VLSI Circuit Design based on a Hybrid Bio-inspired Whale Optimization and Adaptive Bird Swarm Optimization (WO-ABSO) Algorithm. J. Circuits Syst. Comput. 2023, 32, 2350273.

- Devi, S.; Guha, K.; Jakšić, O.; Baishnab, K.L.; Jakšić, Z. Optimized Design of a Self-Biased Amplifier for Seizure Detection Supplied by Piezoelectric Nanogenerator: Metaheuristic Algorithms versus ANN-Assisted Goal Attainment Method. Micromachines 2022, 13, 1104.

- Rayas-Sánchez, J.E.; Koziel, S.; Bandler, J.W. Advanced RF and Microwave Design Optimization: A Journey and a Vision of Future Trends. IEEE J. Microw. 2021, 1, 481–493.

- Chordia, A.; Tripathi, J.N. An Automated Framework for Variability Analysis for Integrated Circuits Using Metaheuristics. IEEE Trans. Signal Power Integr. 2022, 1, 104–111.

- Mallick, S.; Kar, R.; Mandal, D. Optimal design of second generation current conveyor using craziness-based particle swarm optimisation. Int. J. Bio-Inspired Comput. 2022, 19, 87–96.

- Reyes Fernandez de Bulnes, D.; Maldonado, Y.; Trujillo, L. Development of Multiobjective High-Level Synthesis for FPGAs. Sci. Program. 2020, 2020, 7095048.

- Joshi, D.; Dash, S.; Reddy, S.; Manigilla, R.; Trivedi, G. Multi-objective Hybrid Particle Swarm Optimization and its Application to Analog and RF Circuit Optimization. Circuits Syst. Signal Process. 2023.

- Nouri, H. Analyzing and optimizing of electro-thermal MEMS micro-actuator performance by BFA algorithm. Microsyst. Technol. 2022, 28, 1621–1636.

- Razavi, B. Design of Analog CMOS Integrated Circuits, 2nd ed.; McGraw Hill Education (India): Noida, India, 2017.

- Puhan, J.; Burmen, A.; Tuma, T. Analogue integrated circuit sizing with several optimization runs using heuristics for setting initial points. Can. J. Electr. Comput. Eng. 2003, 28, 105–111.

- Fortes, A.; da Silva, L.A., Jr.; Domanski, R.; Girardi, A. Two-Stage OTA Sizing Optimization Using Bio-Inspired Algorithms. J. Integr. Circuits Syst. 2019, 14, 1–10.

- Motlak, H.J.; Mohammed, M.J. Design of self-biased folded cascode CMOS op-amp using PSO algorithm for low-power applications. Int. J. Electron. Lett. 2019, 7, 85–94.

- Fakhfakh, M.; Cooren, Y.; Sallem, A.; Loulou, M.; Siarry, P. Analog circuit design optimization through the particle swarm optimization technique. Analog Integr. Circuits Signal Process. 2010, 63, 71–82.

- Barari, M.; Karimi, H.R.; Razaghian, F. Analog Circuit Design Optimization Based on Evolutionary Algorithms. Math. Probl. Eng. 2014, 2014, 593684.

- Rojec, Ž.; Bűrmen, Á.; Fajfar, I. Analog circuit topology synthesis by means of evolutionary computation. Eng. Appl. Artif. Intell. 2019, 80, 48–65.

- Dendouga, A.; Oussalah, S.; Thienpont, D.; Lounis, A. Multiobjective Genetic Algorithms Program for the Optimization of an OTA for Front-End Electronics. Adv. Electr. Eng. 2014, 2014, 374741.

- Kudikala, S.; Sabat, S.L.; Udgata, S.K. Performance study of harmony search algorithm for analog circuit sizing. In Proceedings of the 2011 International Symposium on Electronic System Design, Kochi, India, 19–21 December 2011; pp. 12–17.

- Majeed, M.A.M.; Rao, P.S. Optimization of CMOS analog circuits using grey wolf optimization algorithm. In Proceedings of the 2017 14th IEEE India Council International Conference (INDICON), Roorkee, India, 15–17 December 2017; pp. 1–6.

- Majeed, M.A.M.; Patri, S.R. A hybrid of WOA and mGWO algorithms for global optimization and analog circuit design automation. COMPEL—Int. J. Comput. Math. Electr. Electron. Eng. 2019, 38, 452–476.

- Bachir, B.; Ali, A.; Abdellah, M. Multiobjective optimization of an operational amplifier by the ant colony optimisation algorithm. Electr. Electron. Eng. 2012, 2, 230–235.

- Benhala, B. An improved ACO algorithm for the analog circuits design optimization. Int. J. Circuits Syst. Signal Process. 2016, 10, 128–133.

- He, Y.; Bao, F.S. Circuit routing using Monte Carlo tree search and deep neural networks. arXiv 2020, arXiv:2006.13607.

- Qi, Z.; Cai, Y.; Zhou, Q. Accurate prediction of detailed routing congestion using supervised data learning. In Proceedings of the IEEE 32nd International Conference on Computer Design (ICCD), Seoul, Republic of Korea, 19–22 October 2014; pp. 97–103.

- Zhou, Q.; Wang, X.; Qi, Z.; Chen, Z.; Zhou, Q.; Cai, Y. An accurate detailed routing routability prediction model in placement. In Proceedings of the 2015 6th Asia Symposium on Quality Electronic Design (ASQED), Kuala Lumpur, Malaysia, 4–5 August 2015; pp. 119–122.

- Xie, Z.; Huang, Y.H.; Fang, G.Q.; Ren, H.; Fang, S.Y.; Chen, Y.; Hu, J. RouteNet: Routability prediction for Mixed-Size Designs Using Convolutional Neural Network. In Proceedings of the 2018 IEEE/ACM International Conference on Computer-Aided Design (ICCAD), San Diego, CA, USA, 5–8 November 2018; pp. 1–8.

- Shi, D.; Davoodi, A.; Linderoth, J. A procedure for improving the distribution of congestion in global routing. In Proceedings of the 2016 Design, Automation & Test in Europe Conference & Exhibition (DATE), Dresden, Germany, 14–18 March 2016; pp. 249–252.

- Liao, H.; Zhang, W.; Dong, X.; Poczos, B.; Shimada, K.; Burak Kara, L. A Deep Reinforcement Learning Approach for Global Routing. J. Mech. Des. 2019, 142, 061701.

- Mandal, T.N.; Banik, A.D.; Dey, K.; Mehera, R.; Pal, R.K. Algorithms for minimizing bottleneck crosstalk in two-layer channel routing. In Computational Advancement in Communication Circuits and Systems; Maharatna, K., Kanjilal, M.R., Konar, S.C., Nandi, S., Das, K., Eds.; Springer: Singapore, 2020; pp. 313–330.

- Kahng, A.B.; Lienig, J.; Markov, I.L.; Hu, J. VLSI Physical Design: From Graph Partitioning to Timing Closure; Springer: Cham, Switzerland, 2022.

- Cai, H.; Srinivasan, S.; Czaplewski, D.A.; Martinson, A.B.F.; Gosztola, D.J.; Stan, L.; Loeffler, T.; Sankaranarayanan, S.K.R.S.; López, D. Inverse design of metasurfaces with non-local interactions. NPJ Comput. Mater. 2020, 6, 116.

- Molesky, S.; Lin, Z.; Piggott, A.Y.; Jin, W.; Vučković, J.; Rodriguez, A.W. Inverse design in nanophotonics. Nat. Photonics 2018, 12, 659–670.

- Wiecha, P.R.; Arbouet, A.; Girard, C.; Muskens, O.L. Deep learning in nano-photonics: Inverse design and beyond. Photon. Res. 2021, 9, B182–B200.

- Barry, M.A.; Berthier, V.; Wilts, B.D.; Cambourieux, M.-C.; Bennet, P.; Pollès, R.; Teytaud, O.; Centeno, E.; Biais, N.; Moreau, A. Evolutionary algorithms converge towards evolved biological photonic structures. Sci. Rep. 2020, 10, 12024.

- Peurifoy, J.; Shen, Y.; Jing, L.; Yang, Y.; Cano-Renteria, F.; DeLacy, B.G.; Joannopoulos, J.D.; Tegmark, M.; Soljačić, M. Nanophotonic particle simulation and inverse design using artificial neural networks. Sci. Adv. 2018, 4, eaar4206.

- Liu, C.; Maier, S.A.; Li, G. Genetic-Algorithm-Aided Meta-Atom Multiplication for Improved Absorption and Coloration in Nanophotonics. ACS Photonics 2020, 7, 1716–1722.

- Corey, T.M.; Chenglong, Y.; Jonathan, P.D.; Georgios, V. Method for simultaneous optimization of the material composition and dimensions of multilayer photonic nanostructures. In Proceedings of the Active Photonic Platforms XI. SPIE, San Diego, CA, USA, 11–15 August 2019; Volume 11081, p. 110812.

- Elsawy, M.M.R.; Lanteri, S.; Duvigneau, R.; Fan, J.A.; Genevet, P. Numerical Optimization Methods for Metasurfaces. Laser Photonics Rev. 2020, 14, 1900445.

- Qiu, C.-W.; Zhang, T.; Hu, G.; Kivshar, Y. Quo Vadis, Metasurfaces? Nano Lett. 2021, 21, 5461–5474.

- Abdelraouf, O.A.M.; Wang, Z.; Liu, H.; Dong, Z.; Wang, Q.; Ye, M.; Wang, X.R.; Wang, Q.J.; Liu, H. Recent Advances in Tunable Metasurfaces: Materials, Design, and Applications. ACS Nano 2022, 16, 13339–13369.

- Li, L.; Zhao, H.; Liu, C.; Li, L.; Cui, T.J. Intelligent metasurfaces: Control, communication and computing. eLight 2022, 2, 7.

- Kudyshev, Z.A.; Kildishev, A.V.; Shalaev, V.M.; Boltasseva, A. Machine learning–assisted global optimization of photonic devices. Nanophotonics 2021, 10, 371–383.

- Nugroho, F.A.A.; Bai, P.; Darmadi, I.; Castellanos, G.W.; Fritzsche, J.; Langhammer, C.; Gómez Rivas, J.; Baldi, A. Inverse designed plasmonic metasurface with parts per billion optical hydrogen detection. Nat. Commun. 2022, 13, 5737.

- Wiecha, P.R.; Majorel, C.; Girard, C.; Cuche, A.; Paillard, V.; Muskens, O.L.; Arbouet, A. Design of plasmonic directional antennas via evolutionary optimization. Opt. Express 2019, 27, 29069–29081.

- Bonod, N.; Bidault, S.; Burr, G.W.; Mivelle, M. Evolutionary Optimization of All-Dielectric Magnetic Nanoantennas. Adv. Opt. Mater. 2019, 7, 1900121.

- Hammond, A.M.; Camacho, R.M. Designing integrated photonic devices using artificial neural networks. Opt. Express 2019, 27, 29620–29638.

- Shiratori, R.; Nakata, M.; Hayashi, K.; Baba, T. Particle swarm optimization of silicon photonic crystal waveguide transition. Opt. Lett. 2021, 46, 1904–1907.

- Jakšić, Z.; Maksimović, M.; Jakšić, O.; Vasiljević-Radović, D.; Djurić, Z.; Vujanić, A. Fabrication-induced disorder in structures for nanophotonics. Microelectron. Eng. 2006, 83, 1792–1797.

- Dinc, N.U.; Saba, A.; Madrid-Wolff, J.; Gigli, C.; Boniface, A.; Moser, C.; Psaltis, D. From 3D to 2D and back again. Nanophotonics 2023, 12, 777–793.

- Shen, Y.; Harris, N.C.; Skirlo, S.; Prabhu, M.; Baehr-Jones, T.; Hochberg, M.; Sun, X.; Zhao, S.; Larochelle, H.; Englund, D.; et al. Deep learning with coherent nanophotonic circuits. Nat. Photonics 2017, 11, 441–446.

- Wetzstein, G.; Ozcan, A.; Gigan, S.; Fan, S.; Englund, D.; Soljačić, M.; Denz, C.; Miller, D.A.B.; Psaltis, D. Inference in artificial intelligence with deep optics and photonics. Nature 2020, 588, 39–47.

- Lin, X.; Rivenson, Y.; Yardimci, N.T.; Veli, M.; Luo, Y.; Jarrahi, M.; Ozcan, A. All-optical machine learning using diffractive deep neural networks. Science 2018, 361, 1004–1008.

| Algorithm Name | The Main Properties of the Algorithm | Ref. |
|---|---|---|
| Divide and Conquer Algorithm | The problem is decomposed into smaller, manageable sub-problems that are first solved independently, in an approximate manner, and then merged into the final solution. | [84] |
| Hill Climbing | The algorithm explores neighboring solutions and moves to the best of them, so that it constantly “climbs” toward better solutions until no neighbor improves on the current one. | [85] |
| Greedy Algorithms | Immediate local improvements are prioritized without taking into account their effect on the global optimum. The underlying assumption is that such “greedy” choices will result in an acceptable approximation. | [86] |
| Approximation Algorithms | Solutions are sought within provable bounds around the optimal solution, with the aim of achieving maximum efficiency. This is convenient for hard (NP-hard) problems. | [87] |
| Local Search Algorithms | An initial solution is assumed and iteratively improved by exploring its immediate vicinity and making small local modifications. No completely new solutions are constructed. | [88] |
| Constructive Algorithms | Solutions are built part by part, starting from an empty set and adding one building block at a time. The procedure is iterative and uses heuristics to choose the building blocks. | [89] |
| Constraint Satisfaction Algorithms | A set of constraints is defined at the beginning. The solution space is then searched locally, applying the constraints at each step until all of them are satisfied. | [90] |
| Branch and Bound Algorithm | The solution space is systematically divided into smaller sub-problems (branching), each sub-problem is bounded according to problem-specific criteria, and branches that cannot yield a better solution than the best one found so far are pruned. | [91] |
| Cutting Plane Algorithm | An optimization method for linear and integer linear programming problems. It finds the optimal solution by iteratively adding new constraints (cutting planes), thus gradually tightening the region of feasible solutions and converging toward the optimum. | [92] |
| Iterative Improvement Algorithms | The goal is to iteratively improve an initially proposed solution. Systematic adjustments are made to the initial set, targeting the predefined objectives: values may be reordered, retuned, or swapped until the desired optimization is achieved. | [93] |
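Several of the heuristics in the table above (hill climbing, local search, iterative improvement) share the same basic loop: evaluate the neighborhood of the current solution and move only if a neighbor is better. The following Python snippet is a minimal sketch of steepest-descent hill climbing under our own illustrative assumptions: the objective is minimized, and the neighborhood of a point consists of all points obtained by shifting one coordinate by ±`step`. The names `hill_climb` and `step` are hypothetical and do not come from the cited works.

```python
from typing import Callable, Tuple

Point = Tuple[float, ...]


def hill_climb(f: Callable[[Point], float], x0: Point, step: float = 1.0,
               max_iter: int = 10_000) -> Tuple[Point, float]:
    """Steepest-descent hill climbing (minimization form).

    Hypothetical helper: the neighborhood of x is every point obtained by
    shifting exactly one coordinate by +step or -step.
    """
    x = tuple(x0)
    fx = f(x)
    for _ in range(max_iter):
        # Enumerate the immediate neighborhood of the current solution.
        neighbors = [x[:i] + (x[i] + d,) + x[i + 1:]
                     for i in range(len(x)) for d in (-step, step)]
        # Pick the best neighbor (the "steepest" move).
        best = min(neighbors, key=f)
        f_best = f(best)
        if f_best >= fx:
            # No neighbor improves the objective: a local optimum was reached.
            break
        x, fx = best, f_best
    return x, fx


if __name__ == "__main__":
    # Toy objective with its minimum at (3, -1).
    bowl = lambda p: (p[0] - 3) ** 2 + (p[1] + 1) ** 2
    print(hill_climb(bowl, (7, -4), step=1))  # ((3, -1), 0)
```

Because the loop stops at the first point with no improving neighbor, on a multimodal objective it returns whichever local optimum lies closest to the starting point; escaping such optima is one of the main motivations for the metaheuristics discussed in Section 4.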

| Algorithm Name | Abbr. | Proposed by, Year | Ref. |
|---|---|---|---|
| Genetic Algorithm | GA | Holland, 1975 | [99] |
| Memetic Algorithm | MA | Moscato, 1989 | [100] |
| Differential Evolution | DE | Storn and Price, 1995 | [101] |

| Algorithm Name | Abbr. | Proposed by, Year | Ref. |
|---|---|---|---|
| Particle Swarm Optimization | PSO | Eberhart, Kennedy, 1995 | [104] |
| Whale Optimization Algorithm | WOA | Mirjalili, Lewis, 2016 | [105] |
| Grey Wolf Optimizer | GWO | Mirjalili, Mirjalili, and Lewis, 2014 | [106] |
| Artificial Bee Colony Algorithm | ABCA | Karaboga, 2005 | [107] |
| Ant Colony Optimization | ACO | Dorigo, 1992 | [108] |
| Artificial Fish Swarm Algorithm | AFSA | Li, Qian, 2003 | [109] |
| Firefly Algorithm | FA | Yang, 2009 | [110] |
| Fruit Fly Optimization Algorithm | FFOA | Pan, 2012 | [111] |
| Cuckoo Search Algorithm | CS | Yang and Deb, 2009 | [112] |
| Bat Algorithm | BA | Yang, 2010 | [113] |
| Bacterial Foraging | BFA | Passino, 2002 | [114] |
| Social Spider Optimization | SSO | Kaveh et al., 2013 | [115] |
| Locust Search Algorithm | LS | Cuevas et al., 2015 | [116] |
| Symbiotic Organisms Search | SOS | Cheng and Prayogo, 2014 | [117] |
| Moth-Flame Optimization | MFOA | Mirjalili, 2015 | [118] |
| Honey Badger Algorithm | HBA | Hashim et al., 2022 | [119] |
| Elephant Herding Optimization | EHO | Wang, Deb, Coelho, 2015 | [120] |
| Grasshopper Optimization Algorithm | GOA | Saremi, Mirjalili, Lewis, 2017 | [121] |
| Harris Hawks Optimization | HHO | Heidari et al., 2019 | [122] |
| Orca Predation Algorithm | OPA | Jiang, Wu, Zhu, Zhang, 2022 | [123] |
| Starling Murmuration Optimizer | SMO | Zamani, Nadimi-Shahraki, Gandomi, 2022 | [124] |
| Serval Optimization Algorithm | SOA | Dehghani, Trojovský, 2022 | [125] |
| Coral Reefs Optimization Algorithm | CROA | Salcedo-Sanz et al., 2014 | [126] |
| Krill Herd Algorithm | KH | Gandomi, Alavi, 2012 | [127] |
| Gazelle Optimization Algorithm | GOA | Agushaka, Ezugwu, Abualigah, 2023 | [128] |

| Algorithm Name | Abbr. | Proposed by, Year | Ref. |
|---|---|---|---|
| Artificial Immune System | AIS | Dasgupta, Ji, Gonzalez, 2003 | [142] |
| Neural Network Algorithm | NNA | Sadollah, Sayyaadi, and Yadav, 2018 | [143] |
| Human Mental Search | HMS | Mousavirad, Ebrahimpour-Komleh, 2017 | [144] |

| Algorithm Name | Abbr. | Proposed by, Year | Ref. |
|---|---|---|---|
| Imperialist Competitive Algorithm | ICA | Atashpaz-Gargari et al., 2007 | [148] |
| Anarchic Society Optimization | ASO | Ahmadi-Javid, 2012 | [149] |
| Teaching-Learning-Based Optimization | TLBO | Rao, Savsani, and Vakharia, 2011 | [150] |
| Society and Civilization Optimization | SC | Ray et al., 2003 | [151] |
| League Championship Algorithm | LCA | Kashan, 2009 | [152] |
| Volleyball Premier League Algorithm | VPL | Moghdani, Salimifard, 2018 | [153] |
| Duelist Algorithm | DA | Biyanto et al., 2016 | [154] |
| Tabu Search | TS | Glover, Laguna, 1986 | [155] |
| Human Urbanization Algorithm | HUA | Ghasemian, Ghasemian, Vahdat-Nejad, 2020 | [156] |
| Political Optimizer | PO | Askari, Younas, Saeed, 2020 | [157] |

| Algorithm Name | Abbr. | Proposed by, Year | Ref. |
|---|---|---|---|
| Flower Pollination Algorithm | FPA | Yang, 2012 | [159] |
| Invasive Weed Optimization | IWO | Mehrabian, Lucas, 2006 | [160] |
| Plant Propagation Algorithm | PPA | Salhi, Fraga, 2011 | [161] |
| Plant Growth Optimization | PGO | Cai, Yang, Chen, 2008 | [162] |
| Tree Seed Algorithm | TSA | Kiran, 2015 | [163] |
| Paddy Field Algorithm | PFA | Premaratne, Samarabandu, Sidhu, 2009 | [164] |

| Algorithm Name | Some Applications, References |
|---|---|
| Multi-Objective (MO) Genetic Algorithm | Improvement of the photoelectric performance of thin-film solar cells [184]; optimization of nanosecond laser processing [185]; VLSI floor planning optimization with respect to area, wire length, and dead space between modules [186]; lifetime reliability, performance, and power consumption of heterogeneous multiprocessor embedded systems [187] |
| MO Particle Swarm Optimization | Review of many applications of MO PSO in diverse areas [188]; VLSI floor planning and layout area minimization using MO PSO [189] |
| MO Ant Colony Optimization | A 3D-printed bandpass frequency-selective surface structure with the desired center frequency and bandwidth [190]; analog filter design [191]; multi-criteria optimization for VLSI floor planning [192] |
| Artificial Bee Colony | Area and power optimization for logic circuit design [193]; design of digital filters [194] |
| Artificial Immune System | Spectrum management and design of 6G networks [195]; multi-objective design of an inductor for a DC-DC buck converter [196] |
| Differential Evolution | Geometry optimization of high-index dielectric nanostructures [197]; multi-objective synchronous modeling and optimal solving of an analog IC [198] |
| Firefly Algorithm | Reducing heat generation, sizing, and interconnect length for VLSI floor planning [199]; secure routing for fog-based wireless sensor networks [200] |
| Cuckoo Search | Multi-objective-derived energy-efficient routing in wireless sensor networks [201]; parameter extraction of photovoltaic cells based on a multi-objective approach [202] |
| MO Grey Wolf Optimizer | Electrochemical micro-drilling in MEMS [203]; multi-objective task scheduling in cloud-fog computing [204] |
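The multi-objective (MO) variants in the table above optimize several competing criteria at once, for example area versus wire length in VLSI floor planning. A common building block of such methods is Pareto dominance; the NSGA-II genetic algorithm, for instance, ranks its population by non-dominated sorting. The Python snippet below is a minimal, naive sketch of a Pareto filter, assuming all objectives are minimized; the function names and the (area, wire length) values are hypothetical and are not taken from the cited studies.

```python
from typing import List, Sequence, Tuple


def dominates(a: Sequence[float], b: Sequence[float]) -> bool:
    """True if `a` Pareto-dominates `b` when every objective is minimized:
    `a` is no worse in all objectives and strictly better in at least one."""
    return (all(ai <= bi for ai, bi in zip(a, b))
            and any(ai < bi for ai, bi in zip(a, b)))


def pareto_front(points: List[Tuple[float, ...]]) -> List[Tuple[float, ...]]:
    """Keep only the non-dominated candidates (naive O(n^2) filter).

    A point never dominates itself, so no self-exclusion check is needed.
    """
    return [p for p in points if not any(dominates(q, p) for q in points)]


if __name__ == "__main__":
    # Hypothetical floorplans scored as (area, wire length); both minimized.
    candidates = [(10, 50), (12, 40), (11, 55), (15, 35), (14, 45)]
    print(pareto_front(candidates))  # [(10, 50), (12, 40), (15, 35)]
```

The quadratic filter is adequate for illustration; practical MO algorithms use faster non-dominated sorting and add diversity measures such as NSGA-II's crowding distance to spread solutions along the front.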


| Algorithm | Advantages | Disadvantages |
|---|---|---|
| Genetic Algorithm (GA) | | |
| Memetic Algorithm (MA) | | |
| Differential Evolution (DE) | | |

| Algorithm | Advantages | Disadvantages |
|---|---|---|
| Particle Swarm Optimization (PSO) | | |
| Ant Colony Optimization (ACO) | | |
| Whale Optimization Algorithm (WOA) | | |
| Grey Wolf Optimizer (GWO) | | |
| Firefly Optimization Algorithm (FOA) | | |
| Bat Optimization Algorithm (BOA) | | |
| Orca Predation Algorithm (OPA) | | |
| Starling Murmuration Optimizer (SMO) | | |

| Algorithm | Advantages | Disadvantages |
|---|---|---|
| Artificial Immune System (AIS) | | |

| Algorithm | Advantages | Disadvantages |
|---|---|---|
| Tabu Search Algorithm (TSA) | | |

| Algorithm | Advantages | Disadvantages |
|---|---|---|
| Flower Pollination Algorithm (FPA) | | |

| Algorithm | Advantages | Disadvantages |
|---|---|---|
| Hyper-Heuristic Algorithms | | |

| Algorithm | Advantages | Disadvantages |
|---|---|---|
| Hybrid Algorithms | | |

| Algorithm | Advantages | Disadvantages |
|---|---|---|
| Artificial Neural Networks (ANNs) | | |
| Convolutional Neural Networks (CNNs) | | |

| Algorithm | Time Complexity | Memory Efficiency | Parallelizability | Scalability | Convergence and Accuracy |
|---|---|---|---|---|---|
| Genetic Algorithm | Limited | Good | Good | Good | Moderate |
| Memetic Algorithm | Moderate | Good | Good | Good | Limited |
| Differential Evolution | Moderate | Good | Good | Moderate | Good |
| Particle Swarm Optimization | Moderate | Good | Limited | Moderate | Moderate to good |
| Ant Colony Optimization | Moderate | Good | Limited | Good | Moderate to limited |
| Whale Optimization Algorithm | Moderate | Good | Limited | Good | Moderate to good |
| Grey Wolf Optimizer | Moderate | Good | Limited | Good | Moderate to good |
| Firefly Optimization Algorithm | Moderate | Good | Limited | Good | Moderate to good |
| Bat Optimization Algorithm | Moderate | Good | Limited | Good | Moderate to good |
| Orca Predation Algorithm | Moderate to good | Good | Limited to moderate | Good | Moderate |
| Starling Murmuration Optimizer | Moderate | Moderate to good | Limited | Limited | Good |
| Artificial Immune System | Moderate | Good | Limited | Good | Moderate |
| Tabu Search Algorithm | Moderate | Good | Limited | Good | Moderate |
| Flower Pollination Algorithm | Good to moderate | Good | Limited | Good | Moderate |
| Hyper-Heuristics | Moderate | Good | Limited | Moderate to good | Moderate to good |