1. Introduction

Music is a structured sonic event for listening. This description is inclusive of a listener who is an actor in musical interaction. Music without a listener is ontologically incomplete. Composers and performers model listening experiences by being listeners themselves in planned or on-the-fly production of musical events. In some ways, music models a meaning that precedes logos. While music and language processing share neural resources, as shown in [1,2,3,4], a musical experience is not readily and effectively describable in words. Therefore, we can state that music, in its initial encounters with listeners, is relatively free from, or defers, the kind of syntactic and semantic probing required for language processing. At the same time, music appeals to language when a listener wanders through a musical landscape exploring how to describe their listening experiences, to convey meaning to themselves and others by ‘figuring things out’. The ‘things’ here are perceived musical elements or features from a background event, and to ‘figure … out’ means to draw distinctions among musical elements. This process engages neurophysiological pathways from low-level sensorimotor mechanisms to cognition, as well as social and cultural reference frameworks. Music inherently evokes multimodal interaction, and musical interaction research can potentially catalyze a community of practice when applied to the field of multimodal technologies and interaction (MTI).

This MTI special issue on Musical Interactions (Volume I) is a response to an emerging research opportunity among multiple disciplines that share a growing history of conversations around three foundational topics: music, technologies, and interaction. For musical interaction, the research agenda is yet to be refined, and calls for understanding the complexity of coordinated dynamics that form a circuit between technologies and human action perception in musical liveness. Presented as a research theme, “musical” is the active qualifier and “interaction” is the designated subject. This is inverse to the research perspective where “interaction” is a qualifier, such as “interactive music” or “interactive technologies”. For a quarter century, increased computing power has opened many possibilities for musical information encoding and retrieval, applying real-time digital signal processing (DSP) and interactive input-output processing, which are also utilised for conditioning the state of interaction. This era has welcomed novel applications with interactive music systems engineering which exploit multiple forms of technology appropriation in musical practice. Prolific publication has trended with human-centric or machine-centric investigations, including audience-user studies producing data that is both self-reported and psychologically measured. User Interface and User Experience (UIUX), real-time DSP signal flow, interactive system architecture and prototyping and related system performance demonstration and evaluation are also relevant for musical interaction. Among these, two MTI-centric phenomena remain underexplored: (1) Musical experiences are ever contextualized by crossmodal perception and cognition, and by listeners’ situated encounters. How do the interactions with multimodal technologies impact people’s multimodal perceptual processing and subsequent musical experience? 
(2) Many situated interactions arise in the context of technology applications in daily life, where musical experiences may have broader impact on the quality of interaction. What kind of musical interaction is desirable with technologies in daily life and how do we recognize when there is one? For musical interaction, it seems to be clear that the concept of music can also be broadened and devised with respect to fundamental aspects of human perception and cognition, beyond music specialisation, and this presents challenges as well as opportunities in the current research landscape. Whether applied to scientific testing or aesthetic experience, musical interaction with multimodal technologies will always require an instrumentation, a process for connecting human subjects and devices to enable information exchange and data flow.

With multimodal technologies, it is essential the meaning of music and interaction be mutually modified to satisfy emerging requirements and diversity of human experiences with evolving technological capacity. Multimodality of music has been recognised in research across modelling [5,6], music information retrieval [7,8], music therapy [9,10,11,12], and multisensory and crossmodal interaction [13,14], many of these informing the context of embodiment and mediation [15]. Music Supported Therapy (MST) especially in conjunction with neurological data has demonstrated positive impact on brain recovery [16,17] and on motor recovery as is evidenced by clinical measures. Wan et al. [18] and Ghai et al. [19] inform MST by investigating underlying auditory motor connectivity and coupling [18,19]. Neuroscientific findings in conjunction with brain imaging also inform the impacts of music on brain development through a structural adaptation by long-term training [20,21,22], and the impact of musical multisensory and motor experience on neural plasticity [23,24,25].

Scientific findings from the above literature bring an increasing awareness of the multimodal nature of music. The implications from research design in this literature also point to the importance of instrumentation. There is a promising space with both depth and breadth in the topic of musical interaction. The goal of this issue is to be inclusive and mindful to cultivate coherent breadth for that depth. Identifying the loci of musical interaction poses significant challenges, which may require new approaches to how we behold methods and evaluate both music and systems for studying purposeful, planned, intended, or even straying actions emerging through a musical experience, in ways that are inclusive of interactive and listening experiences of both human and technological actors. In this regard, it is valuable to see the range of original research in this collection. This special issue on Musical Interaction includes topics wide-ranging yet connected, from engaging mobile devices to supercomputers, from prototyping pedagogy to production of musical events, from tactile music sensing to communicating musical control by eye gaze, from literature survey to development perspectives. Leveraging the diverse paths demonstrated by the authors, we can expand horizons in musical interaction research and application areas with more inclusive multidisciplinary teams. New horizons will also deepen our perspective on how to anchor musical interaction as a research theme, to influence ways of engaging devices and technologies for mediating human activities and experiences in daily life. The purpose of this article is twofold: (1) To provide an exposure to the depth of discourse undertaken in a community of practice evolved around music as a central focus, and (2) to consider a conceptual framework to discuss and assess musical interaction in MTI context whereby the contributing articles are contextually surveyed.

Organization of this Article and Rationale

This article is organized in two parts for a twofold purpose. Part one includes Section 2 and Section 3 where a context of musical interaction and theoretical framework are discussed with respect to a community of practice. Part two includes Section 4 and Section 5, which introduce and discuss each of the contributing articles and present a summary and conclusion. For a deeper perspective on musical practice, readers may consult Appendix A which provides a succinct survey of the European historical Common Practice Period (c.1650–1900).

Appendix A is developed to foreshadow our emerging community of practice by illustrating a handful of examples and referential frameworks that minimally disambiguate what it means to interact musically. It is intended to motivate (1) imagination of what it is like to form an emerging practice with the qualifying criteria of music, and (2) strategic thinking towards future poles and markers proper to the MTI agenda. It is important to be cognizant that the musical origins we share are likely to belong to a past Western classical music tuned to certain ethnic and social groups; informed reflection on our assumptions of what it means to be music is therefore in order.

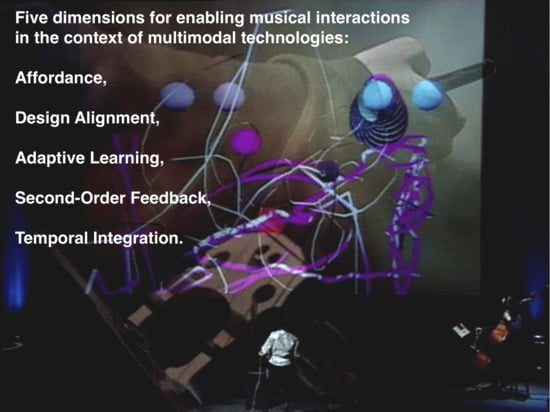

Section 3 considers a conceptual framework for research and design with five enabling dimensions for musical interaction. The 1st dimension, Affordance, adopted from Gibson’s theory of ecology [26,27], denotes (inter)action possibilities perceived by an agent through the relationship between the agent and its environment. The 2nd dimension, Design Alignment, denotes the process of identifying design criteria for gathering requirements tuned to musical criteria so that the design resources and music resources in an interactive system can be structurally coupled. The 3rd dimension, Adaptive Learning, denotes the system capacity to facilitate a user’s learning pathway as well as their learning capacity. The 4th dimension, Second-Order Feedback, is a term adopted from general systems theory (general control theory) [28,29] and second-order cybernetics [30]. Here, it is applied to multisensory feedback processed with top-down auditory attention (see Section 3.4) by listeners to observe and guide their own sensory-motor performances. The 5th dimension, Temporal Integration, denotes the requirement for time critical data transmission in a multimodal system’s architectural flow between users and system components to facilitate musical interaction. In Section 3, each dimension is presented in its own sub-section and the discussion focuses on the MTI context.

Section 4 introduces, surveys, and discusses the contributing articles with respect to the five dimensions.

Section 5 presents a summary and conclusion.

2. Musical Interaction as a Community of Practice

A community reflects a referential framework comprised of a shared skill set and expertise, methodologies, assumptions informed by literature and theories, and a set of criteria for evaluating outputs, all together characterizing an ecosystem of the field [31,32,33]. Accordingly, music of the Common Practice Period (Appendix A) evolved around literature (in the form of musical scores with implicit performance practice and music theory), performance repertoire (a canon of compositions written for an instrument or ensemble) and instrument design aligned with literature and performance idioms (for example viola da gamba vs. violin). In current music research involving technologies, a topic relatively unexplored is what may constitute an ecosystem, meaning a system of interconnected ideas or elements shared in a community of practice, that would catalyze concurrent and mutually supporting developments across literature, repertoire, instrument design, and their equivalents, through which a coherent research pathway is foreseeable. Musical interaction research in the context of MTI requires a contextual shift with an interdisciplinary research team beyond music specializations. In that sense, a community of practice for MTI musical interaction takes a different turn: literature will include scientific discoveries as referenced in Section 1; repertoire will include neuroscientific findings on multimodality in music production and perception, demonstration of case studies, data, interaction patterns, and use cases where instrument design can be analogous to designing DMI (Digital Music Instruments) and will extend to designing interactive systems and prototypes.

What constitutes musical interaction? When is an interaction musical and how does it come about? In recent Eurocentric tradition, music is identified as a persistent memory in a notated form so that a musical event can be reconstructed by enacting that memory. In the Eurocentric tradition, music is often approached as synonymous to its notated musical score. In a crude digital analogy, a musical score is more akin to a Memory Address Register than to musical data stored in that memory. Music notation is a placeholder aiding performers to execute tone, which requires interpreting a represented code of musical information. This case holds for music performed from a memorized score and music improvised from a simplified score such as a commercial music lead sheet. Horsley defines a score as a visual representation of musical coordination [34]. Musical coordination includes temporal execution for both sequence and synchrony of tone production, managing speed and regularity of meters and beats, applying expressive dynamics to tones and phrases, and teamwork in an ensemble. A score is a kind of performance manual for executing and coordinating musical information, but the instruction is implicit because the musical score is representational, and interpretation refers to a tradition in a community of practice. This means, even with our highly developed notation system, performance is implicitly aware of the oral tradition specific to Eurocentric culture. To facilitate the persistent memory from generation to generation and from instrument to instrument, the European notation system evolved over 1000 years before its use in a fully developed form during the Common Practice Period. This era recognizes a widespread Western art music tradition from the mid-17th to early 20th centuries, centered around a shared tuning system, a system of tonality, a common notation, and a theory of harmony. Together these constitute an ecosystem where a theoretical framework for common practice matured, as illustrated in Appendix A.

Establishing a common practice of European art music reinforced music as a specialized domain requiring highly tuned skills to compose, which is to register the representation of memory, and to perform, which is to enact the memory for artful retrieval. The expertise of a composer or virtuoso cannot be acquired in any measurable magnitude of investment. Music virtuosi dedicate their entire lives to one thing only: performing on their instruments. In this tradition, the word “music” carries a specific meaning requiring a finely tuned tonal acculturation [35] for a listener to apprehend the idiomatic expressions. It is noteworthy that the Common Practice system of tonality affords musical idioms built around the stability and instability in tonal relationships, which are directly linked to perceptual consequences in listening. Composers of the Common Practice Period artfully played with this link. Wagner’s well-known Tristan chord marks an extreme boundary by fully exploiting and exhausting the use of this link with prolonged tension-building musical schema, without violating the rules of play. This tradition portrays a well-developed and established ecosystem, which provides an affordance for a common reference framework for diverse idioms and expressions. These references suggest the long-term relevance of an alternative and informed concept of music applied to MTI.

3. Musical Interaction Research and Design: A Conceptual Framework with Five Enabling Dimensions

The complex ecology of the Common Practice Period constituted a system of references, notation, theory of harmony and musical forms, which evolved through a long discourse primarily concerned with ratios in sonic events that are directly linked to human senses and cognition. Today, we are advanced with new tools and techniques, and we are no longer bound to the Common Practice paradigm. Whatever assumptions we may have for what it means to be musical, we can step far back in an attempt to look at the very foundational conditions of music, ‘going back to the drawing board’ if you will.

First, the concept and idea of music is sensorially concrete but linguistically elusive. The potential semantic space for the word “music” is vast, perhaps much larger than that of many other words. The concept of music is highly subject to variance according to culture and epoch as well as individual memory. For example, we cannot experience the phenomenon of the Greek chorus of its time; not only is it nearly impossible to reconstruct the music from remaining papyrus fragments [36], but modern people also have no clue how to decode the musical information registered in the papyrus, which can be interpreted only with an a priori oral tradition [37]. In terms of musical experience, we live in a world of connectivity through technological devices and infrastructure where multicultural or diachronic proximity cannot be reduced into the sociological or historical concept of “cultural diversity” or an “origin” [38], because every culture generates its own lived experience [39,40], and individuals’ memory formations are also influenced by their cultural backgrounds [41,42,43,44]. Altogether, these imply the need to understand the relationship between people and music in radically different ways with multicultural perspectives, thinking through what it means to be musical in the context of MTI.

Second, music is an ephemeral phenomenon. No live musical instance can be exactly repeated, due to the emergent behaviours among all interacting bodies involved in the phenomenon. This aspect of music has been underrepresented historically but can be highly relevant for MTI. Effectively, MTI presents a multitude of affordances to explore musical liveness. Understanding the interaction dynamics is critical whether the interaction is for composing, performing, or listening, and especially for experiencing technologies with musical interaction. This implication of technological interaction upon music can be traced to the early 1950s and the history of the experimental marriage between music and computing machinery. Early experimenters include Lejaren Hiller with the ILLIAC 1 [45], Max Mathews with the IBM 704 & 7094 [46], and Alan Turing with the Mark II [47]. They endured non-real-time workflows with laborious programming and waiting hours or days for the results. Most of the experimenters were not professional musicians and some were not accepted as legitimate musicians in recognized fields. Nonetheless, their experimental outcomes catalysed a new discipline called computer music, which became an agency of the results and techniques used in new millennium platforms such as web, games, and mobile applications. These widespread outcomes of early investigations suggest that it is not prudent to assume that ‘music is music’, and it makes more sense to ask, “What constitutes musical interaction?” and “When is interaction musical and how does it come about?”. Let us also address what the early experimenters did with the same questions. The following discussion is based on the hypothesis that, unless we do so, we may not be able to articulate the deeper relationship between music and technologies in terms of any form of interaction reflecting human input, choice, cognition, machine processing and mediation, outputs, and experiences, which altogether constitute a contextual adaptation in musical interaction, not a causal link, yet informed of causality.

Third, musical interactions are designed, whether for a concert, gameplay, pedagogy, therapy, exercise, or psychological testing. This research collective suggests an agenda to conceive of a coherent path for characterising musical interaction for MTI with a frame of reference that we can consult for designing and assessing a project. The following discussion identifies five enabling dimensions to consider for musical interaction on which a theoretical framework can be explored:

Affordance

Design Alignment

Adaptive Learning

Second-Order Feedback

Temporal Integration

The choice of these terms is informed by design practice, Human Computer Interaction (HCI), AI, music composition and engineering.

3.1. Affordance

Multimodal technologies afford musical interaction by situating expectation. An actor anticipates how an action will produce a musical outcome, and the way this expectation is resolved contributes to the formation of a mental model of musical interaction. Researchers and practitioners investigate how people utilize their senses when interacting with technologies, asking what properties appeal to people suggesting repertoires of actions or set expectations for experience potential. The role of expectation in musical interaction can be approached by investigating affordance and mental model.

The concept of affordance was conceived by J.J. Gibson [26,27,48] for describing an ecological relationship between an organism and an environment, where the organism perceives its environment as offering potential resources. In 1988, D. Norman introduced the term to the design community [49,50]. In 1991, W. Gaver introduced the term to the HCI community, proposing that affordance be considered for designing perceptible objects or features that offer information on how they may be acted upon or explored for complex actions [51]. Reybrouck [52] exercises two terms, “music users” and “sonic environment”, the former denoting the observers and the latter standing for the broadened concept of music. Music users explore musical affordance in a sonic environment. Menin and Schiavio [53] propose to investigate musical affordance around the sensory motor experience that is pre-linguistic, involving intrinsically-motor-based intentionality. Consistent with this line of thinking, Krueger [54] describes listeners as active perceivers and solicitors of musical affordances. In this issue, Rowe [55] discusses an affordance in relation to representation. The term affordance resists a simple conceptual model. Interpretation and adaptation of the concept differ across research communities and can be confusing, making it hard to see how it can be applied to research and practice. Affordance is also deeply related to the concept of mental model, which is another term often used with over-simplifications or over-complications. The following discusses these concepts as interrelated by referring to their original sources.

Gibson constructed the word affordance from the verb “afford” and he “…coined this word as a substitute for values…” to “mean simply what things furnish, for good or ill. What they afford the observer, after all, depends on their properties.” [48] (p. 285). Therefore, affordance is what an environment offers. At the time of prevalent behavioural science and the beginnings of cognitive psychology, Gibson authored The Senses Considered as Perceptual Systems [48]. In the preface, he expresses his attempt to reformulate old theories such as stimulus-response theory, Gestalt phenomenology and psychophysics to extract new theorems. Gibson’s theory of senses denotes senses as active and outreaching perceptual systems to acquire perceptual information about the world, therefore senses are “to detect something” (active) rather than “to have a sensation” (passive). Note the information is perceptual, meaning it is internal to the observer. In this formulation, affordance is something that is perceived and explored by an observer. Sensing an affordance is preceded by another state of observation that occurs “When the constant properties of constant objects are perceived (the shape, size, color, texture, composition, motion, animation and position relative to other objects), the observer can go on to detect their affordances.” [48] (p. 285); “The properties of perceived … are nutritive values or affordances.” [48] (p. 139). As our senses are active perceptual systems and that activeness coordinates movement for exploring affordances, to perceive is to obtain information about an environment and about what affordances (possibly value propositions) it may offer. In that sense the perceptual information about an affordance is a perceived value potential. By using the information, an organism orients itself to action, whether to explore or exploit the detected affordance. Based on the outcome, an organism’s perceptual information as well as its own action can be assessed and updated with respect to the previous state of its perceptual information. In this regard, an observer’s actions performed upon the perceived affordances assume a perceived set of invariants from an environment, and that becomes a basis for anticipations or expectations.

Gibsonian affordance characterises the nontrivial circularity built into the relationship between an observer and an environment in which the observer is part of their environment. Belonging to the environment provides an essential dimension for musical interaction, for characterizing the relationship between people and systems. The term is also intended to bypass the dichotomic division between subjectivity and objectivity, which Gibson considers to be inadequate for describing the (entity) relationships in an ecology [48]. The Gibsonian perspective relates to What the Frog’s Eye Tells the Frog’s Brain [56]; what senses are registered in an organism’s perceptual mechanism determines how the environment is perceived and what affordance it may present. For design orientation, affordance leads us to attend to the conditions of interaction, not to the system features and properties or to an affordance directly, but to how the features and properties can contribute to the relationship between systems and users. One cannot design an interaction or an affordance without attending to the conditions from which the affordances can be perceived by users for action-interaction potential. For musical interaction, an affordance can be envisioned by creating a set of propositions through which a system may appeal to users as meaningful, that is, how the system is designed to suggest to users what they may gain or experience. A problem is that affordance may not be assessable with a quantitative measure when prototyping and testing user experiences. Here, the mental model comes in as a pragmatic tool, not as a means of representing or modeling users’ cognitive states, but as a design tool to work with users on what value potentials they recognized, what expectations they had, and what action-interaction they thought possible when they encountered and interacted with the system. Both qualitative and quantitative assessments become possible by measuring the discrepancy between design intents and users’ descriptions, and the discrepancy between users’ descriptions (what users understood they were doing) and system data (what the system recorded users actually did).
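As a minimal sketch of how the two discrepancies described above might be quantified, assume that design intents, users' descriptions, and system logs can each be coded as a set of action-outcome labels. The labels, the imaginary gesture-controlled instrument, and the choice of Jaccard distance are illustrative assumptions and are not taken from the contributing articles.

```python
from __future__ import annotations


def jaccard_distance(a: set[str], b: set[str]) -> float:
    """Return 1 - |a & b| / |a | b|; 0.0 means the two sets are identical."""
    union = a | b
    if not union:
        return 0.0
    return 1.0 - len(a & b) / len(union)


# Hypothetical coded labels for an imaginary gesture-controlled instrument.
design_intent = {"tilt_changes_pitch", "shake_triggers_note", "squeeze_changes_volume"}
user_description = {"tilt_changes_pitch", "shake_triggers_note", "tap_triggers_note"}
system_log = {"tilt_changes_pitch", "shake_triggers_note"}

# Discrepancy between what designers intended and what users said they could do.
intent_vs_description = jaccard_distance(design_intent, user_description)

# Discrepancy between what users said they did and what the system recorded.
description_vs_log = jaccard_distance(user_description, system_log)

print(f"intent vs. description: {intent_vs_description:.2f}")
print(f"description vs. log:    {description_vs_log:.2f}")
```

A set-based distance is only one possible choice; it ignores ordering and timing of actions, which a study of musical interaction would likely also need to capture.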

The concept of mental model in its original conception can be traced to Kenneth Craik in 1943 [57]. The Artificial Neural Network (Warren McCulloch and Walter Pitts) was also introduced in that year, inspired by biological neural mechanisms, especially the associative nature and causal links, which also had an impact on Craik’s thinking on the relationship between human operators and technologies. Craik describes a kind of thought model simulated in an organism’s head, a ‘small-scale model’ of external reality and possible actions in it (and with it). In this conception, the model means a system, whether physical or chemical, that “…has a relation-structure similar to (or parallel to) that of the process it imitates” [57] (p. 51). The similarity is in the structural relationship and does not hinge on a pictorial resemblance. Craik uses the example of a tide predictor that has no resemblance to tides, but produces oscillatory patterns to imitate the variations in tide level. An implication for designing an artificial system is to inquire into the structure and processes for implementing a system that can imitate and predict external processes, that is, processes external to the machine such as the state changes of input signals from people, or to anticipate what users may do. For people to know how to input signals or what to do next, they use mental models. Therefore, a system needs to be presented so as to facilitate the (in)formation of a mental model analogous to or compatible with what it can offer.
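Craik's tide predictor example can be illustrated with a short sketch: a sum of cosines bears no pictorial resemblance to the sea, yet its relation-structure parallels the periodic variation of tide level. The two periods below are the standard principal lunar (M2) and principal solar (S2) semidiurnal constituents; the amplitudes and phases are hypothetical values chosen only for illustration.

```python
import math

# Periods in hours of the principal lunar (M2) and solar (S2) semidiurnal constituents.
M2_PERIOD_H = 12.4206012
S2_PERIOD_H = 12.0


def tide_level(t_hours, constituents):
    """Sum of cosine constituents given as (amplitude_m, period_h, phase_rad)."""
    return sum(
        amplitude * math.cos(2.0 * math.pi * t_hours / period - phase)
        for amplitude, period, phase in constituents
    )


# Hypothetical amplitudes (metres) and phases (radians) for an imaginary harbour.
constituents = [(1.0, M2_PERIOD_H, 0.0), (0.3, S2_PERIOD_H, 0.0)]

# Predicted level relative to mean sea level, sampled hourly over two days.
levels = [tide_level(t, constituents) for t in range(48)]
print(" ".join(f"{level:+.2f}" for level in levels[:12]))
```

The slow drift between the two periods produces the spring and neap pattern over roughly two weeks, a structural property of the modelled process that the model reproduces without depicting water at all.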

Johnson-Laird describes the process of constructing and using mental models as different from formal reasoning based on a set of beliefs: mental models represent distinct possibilities, or a kinematic sequence unfolding in time, on which we base our conclusions [58]. The formative process of a mental model allows people to handle uncertainties, such as what their actions may entail or what to do next, when working for the first time with a system of representation standing for a set of functional premises coded in the system. Consistent with Johnson-Laird’s description [58], a process such as working with interactive systems, especially learning to play or work with novel systems, is very different from formal reasoning with a set of beliefs, due to the constant adaptation process through an exploration of affordances. It also means making use of working memory to perform tasks and handle counterexamples. Through experiences, people can modify, improve, or refine their mental models. Appendix B illustrates the relationship between mental model and affordance.

3.2. Design Alignment

Aligning the variety of the resources between music and design determines the quality of musical interaction. Design tasks tend to involve identifying problems and solutions whereas music is more about generating structured sound events. While both require an aptitude of creativity to complete the tasks, the orientation of the tasks in each field consults different criteria. While musical instruments evolved over centuries in the musically specific ecosystem, musical interaction in MTI needs to consider diverse applications and the translation of principles from music to a system of interaction through design implementation. A multimodal interaction requires an instrumentation with multimodal technologies for engineering a site for an actor, which introduces the investigation of interface and affordances. The general HCI human-in-the-loop and gameplay models are good references but insufficient for music. Musical interaction requires musical propositions. Musical interaction with MTI requires technological propositions. The values musical interaction may offer are not obvious, largely due to the lack of existing mental models, while the values music may offer are more familiar through the mental model of a listener being seated and physically inactive, regardless of the state of the listener’s mental activity in perceptual and cognitive engagement. With MTI, audiences are invited to generate musical experiences by proactively experiencing technologies, which means that MTI musical interaction proposes one interaction, which is musical, for two experiences, those of music and of technology. Designers and researchers for musical interaction cannot take such things for granted. First, user interface (UI) components and system interfaces need to be temporally aligned to support time critical interaction. Second, musical agenda and design directives need to be well aligned with a shared vision of what kind of relationship to people a system may afford, what kind of active participation a system suggests, and what exposure a system presents to access control, all together mobilizing the formation of a mental model. Third, the system implementation needs to be guided by a structural relationship between musical agenda and design directives, so that task domains for generating music resources and design resources are compatible with the target range of musical outcomes and design solutions.

Three priorities are (1) Defining design tasks for a system of interaction to be musical; (2) defining the repertoire, variety, and features of musical resources so that musical interaction can grow alongside the formative process of a mental model; (3) aligning musical resources and design resources so that interactivity can be conveyed to people as action possibilities, with the intended ranges of action perception cycle and the degree of controllability. Musical interaction in MTI requires good control strategies for generating sounds through UIs that embody an affordance; both system and user interface define the variety of control, the interaction repertoire, and the access to hierarchy of information granularity.

For musical interaction, an affordance can be envisioned by creating a set of design propositions. Aiming for or assessing affordance in design can be simplified by a target-set of constructs measured against hypotheses and outcomes. Affordance cues users to orient toward an interaction possibility; affordance is therefore related to, but not the same as, a mental model. What values users may gain, and how to achieve them, can be only suggestive. Beyond this phase it is a mental model that leads to more specific design directives. In a design process, mental models are consulted to determine what kind of interaction pattern is suitable for which task, or an overall workflow for a complex task. The compatibility between system and people’s mental models is assessable from a user’s perspective by soliciting a set of use cases and workflows in their models, then measuring against the design intent, implementation, and system performance (see

Section 3.1 for related discussion).

The term,

requisite variety, is a useful concept applicable to the structural relationship between design and music: Ashby’s law of requisite variety states, “Only variety can control variety” [

59]. The design tasks and music tasks need to be mutually informed of the possible variety, and at some point, define the scope within which the two tasks optimize the alignment. For example, in terms of system interface, musical interaction is, by definition, time critical, which requires highly synchronized system feedback to support the user’s action perception cycle (see

Section 3.4 and

Section 3.5). Both UI design and music tasks can provide and receive information about how system architecture and instrumentation are to be aligned to account for which modality is synchronized with which channels of interaction data. Accordingly, system designers will allocate resources for concurrent signal processing and scheduling, including asynchronous data buffer management, to optimize the real-time data flows among various component interfaces. These processes ensure that the variety in the domain of system control is compatible with the desired variety in the range of musical outcomes.
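
As a reading aid, Ashby’s law is commonly written in a logarithmic form. The inequality below is a sketch of that standard formulation; the symbols are introduced here for illustration and do not appear in the cited discussion. Variety is measured as the logarithm of the number of distinguishable states, with V_D the variety of disturbances the system must absorb, V_R the variety of responses available to the regulator, and V_O the residual variety of outcomes.

```latex
V_O \;\geq\; V_D - V_R
```

Under the assumption that the interaction repertoire offered by the UI and system interfaces plays the role of V_R, the inequality restates the point above: the range of musical outcomes can be held within a desired scope only if the variety of control is large enough to match the variety the system has to handle.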

3.3. Adaptive Learning

We are all novices in the first conception of digital technologies. Musical instruments were designed for virtuosi. Digital interfaces are designed for novices. A musical instrument is an interface between a performer and sound events. In recent trends, some HCI researchers in digital interfaces have been inspired by an analogy between musical instruments and new interfaces. While the analogy may evoke a compelling intuition, it has been a source of misconception in the field of digital music interfaces and interactive music software. Historically, musical instruments have evolved with virtuosi, the masters of certain musical instruments in a certain era. J.S. Bach was a driving force behind the adoption of Well-Temperament, a tuning system applying finely tuned string ratios to keyboard instruments, which enabled him to play in all 24 major and minor keys, as demonstrated in his collection The Well-Tempered Clavier. These instruments and tunings are precursors of the piano as we know it now, and of the modern tuning system called Equal Temperament (see

Appendix A). Virtuosi were the forces and users for whom the modifications and improvements were made as instrument makers tailored and tested their product. The process of perfecting a musical instrument was like a prolonged physical coding exercise, an iteration upon eliciting expert knowledge, changing the instrument’s physical structure, and testing with mature skills.

Except in the case of an expert system, consulting virtuosi may risk biasing the conception of digital technologies. We are all novices for digital interfaces and for interaction in its first conception, even for professional digital tools. Most musical instruments offer few playful entry points below a certain skill level. In contrast, MTI with musical interaction is open to consider a system that affords (1) a playful entry point for all skill levels, and (2) a trajectory or capacity to evolve along maturing skills. To advance a musical interaction paradigm with MTI, we can envision not only facilitating novices’ playful interaction but also designing a system of interaction that can afford a mutually supportive learning between people and machines with AI and machine learning techniques. A playful entry point can facilitate a user’s orientation to the system from the very beginning by forming a crude mental model. Beyond that point, it is desirable for a system to learn to sense and keep pace with a user’s skill level to support an adaptive learning pathway, and to help users advance by dynamically maturing and refining their mental models. Otherwise, a progressive level design can suffice, as is common in game design.

3.4. Second-Order Feedback

We observe our own actions by observing the result of our actions. Music performance is a sensorimotor coordination flow guided by auditory perception to achieve a musical goal. In second-order cybernetics, von Foerster describes an observer as an observing system that observes itself while accounting for itself as a part of its observation, whereby observation affects the observed [

60]. This is consistent with music performance where a performer’s state is constantly affected by the performer observing her own performance. In this context, Second-Order Feedback sensitivity refers to the physiological and psychological condition of a performer engaged in a time critical action perception cycle, interacting with an instrument or a device in a certain environmental setting. Fuster describes the perception action cycle as the circular flow of information between the environment and an organism’s sensory structures, in which an organism is engaged in sequences of sensory guided actions with a goal directed behaviour [

61]. What is implicit here is the environmental feedback that influences an organism’s sensory guided actions which in turn influence the environmental changes or responses. For musical interaction, the circular flow is an important concept, and it is more proper to call it action perception cycle because a performer always initiates an action to perceive her own action and its outcomes. The idea of circular flow was already seeded through the well-known 1940s and 1950s Macy Foundation conferences on an interdisciplinary topic “Circular Causal and Feedback Mechanisms in Biological and Social Systems”, chaired by Warren McCulloch. Notable participants included Gregory Bateson, Norbert Wiener, Margaret Mead, and W. Ross Ashby, catalysing the meta-discipline known as Cybernetics. This class of cyclical model for embodied action is reflected in a broader context of discussions in cognitive science and philosophy of mind [

62,

63,

64,

65].

To illustrate this dimension, let us take a familiar example. A violinist performs an action of bowing on strings and this introduces an excitatory energy into the resonating body. The subsequent sound quality is its resonance response to the input patterns (excitatory signal patterns) further shaped by the violinist’s left-hand control. By engaging an external body, which is a violin in this case, the interactive feedback cycle involves the second-order circular flows extending from the performer’s body to the instrumental body. In effect, a performer acts and perceives her own sensory motor coordination, which constitutes the first-order circular flow, and she also assesses how her action entails the resonating responses of the external body, which constitutes the second-order circular flow. For the performer to anticipate and to project a following action, it is critical that the second-order circular flow feeds back multisensory information including auditory feedback, a series of audible complex waveforms that conveys the quality of sounds. For a violinist, the auditory feedback follows the immediate tactile vibratory sensation through her chin from the violin as a resonating body as well as the friction sensed from bowing, whereby she perceives and confirms her own action assisted by proprioceptive feedback. Krueger describes this as “ongoing mutually regulatory integration” involving motor entrainment [

54]. Particularly, music performers are trained to use auditory feedback to assess their own performances engaging auditory attention, attention regulation, and expectancy, which are higher-cognitive processes in auditory perception as explained in [

66,

67,

68], the processes engaged in the second-order circular flow. Further, an acute situational awareness is required for a performer to assess complex environmental responses such as resonant frequency and amplification characteristics of a concert hall so that she can fine tune her performance to achieve an optimal projection of sounds to bloom in a particular hall. In sum, what determines the quality of a performance is an artful execution of sensory motor coordination in an effective and timely manner mastering the circular flow in musical interaction with an instrument and her own situational awareness.

To account for the time critical nature of musical interaction, the term, Second-Order Feedback is adopted from the general control theory [

28,

29] and cybernetics [

30,

69,

70]. To respect a performer’s second-order feedback sensitivity, the most important factor is timing. For musical interaction in MTI, the timely coordination between the temporal granularity of computing and the performer’s multimodal action perception cycle is the most challenging problem. It requires a careful temporal alignment between DSP units for computing sounds and the desired composition of interactive signal granularities applied both to control sounds and to communicate with various interface units. Musical interaction, by definition, is driven by auditory feedback along which performers (users) transition from one state to the next by assessing multisensory feedback with directed attention to sounds, thereby influencing their actions. Perceiving music involves a multilayer temporal structure for processing pitch (related to frequency), timbre (related to frequency spectra), and rhythms (composed of unit durations of note relationship and the patterns of disposition). Accordingly, musical interaction applied to MTI requires a multilayer DSP compatible with human perception and real-time feedback capacity, to support a user’s fluent action perception cycle. In the author’s multimodal musical interaction practices, the timing represented in the LIDA model (Learning Intelligent Distribution Agent) [

71,

72] offers a useful temporal framework compatible with the cascading flow of action perception cycles in multimodal music performances [

73,

74].

Figure 1 illustrates Second-Order Feedback and the LIDA model related to temporal integration.

3.5. Temporal Integration

Any system of musical interaction is a complex system that requires a temporal integration in its architecture. Musical interaction agenda in MTI may note a special emphasis on temporal requirements for designing and engineering real-time signal and information flow among all components in a complex system with respect to end users’ second-order feedback sensitivity. The choice of parallelization to compute concurrent real-time processing, for the holistic signal flow through all multimodal components, may result in downsampling some perceptual features, and this choice is prioritized by second-order feedback sensitivity. People’s perception affords a contextual adaptation within an acceptable temporal range. Three main properties subjected to temporal integration are (1) the unit definition of human performance gesture, (2) signal mapping and navigation responses of designed components, across UI and system interfaces, and (3) DSP component responses. Requirements for temporal integration are contingent on the design alignment in terms of the definition of temporal granularity and mapping between control gestures and musical outcomes (through whichever modalities the control inputs are channeled), as well as the degree of indirection from action to perceived musical results.

Further details can be found in [

73,

74] with multimodal performance examples applying temporal integration among multimodal components and an architecture for musical interaction.

Figure 1 (adapted and modified from [

74]) illustrates a general architecture for temporal integration, based upon implementations of multimodal performance systems. Parallel streams of multimodal interaction generate first-order and second-order feedback through image/video and sound/music streams. Digital processing generating interactive media streams maintains temporal symmetry with micro, meso and macro timescales of user perception and cognition, parsing the user’s continuous actions into the three temporal control bands. Temporal integration anticipates the user’s mental model of governing the pacing of action.

Referring again to Craik, his original conception of mental model describes a kind of servo-control mechanism with built in prediction of when the necessity arises so that “…sensory-feedback must take the form of delayed modification of the amplitude of subsequent movement” where “…the sensory control can alter the amplification of the operator with a time lag and determines whether subsequent corrective movements will be made [

75] (p. 87).” While such a servo-control mechanism has been advanced in many mechanical systems, especially for sensory-driven multimodal coordination in robotics, this is still a challenging problem in time-critical multimodal music performances due to a performer’s second-order feedback sensitivity. In highly time critical interactive music performance, even with powerful CPUs and parallel computing, a 10 ms delay can cause a disruption. This is critical because the mental model for musical interaction is also a model of time, requiring consistent support for anticipation, meaning predicting in time, with clear presentation of accessibility and variability of unit control and temporal granularity. Some time lag is prone to happen in experimental real-time multimodal performances that require intensive computing resources. A system affordance in such a case is to secure tolerable temporal variations within a predictable range so that a performer can develop tolerance and recovery skill to counteract small inconsistencies. When a performer is committed to such experimental systems, especially for prototyping, a flexible mental model is helpful. When performing with a multimedia or multimodal system, by trusting the reliability of synchronization between input and output signals, a performer may explore the available affordance of performance gestural repertoire.
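
The latency concern above can be made concrete with a little arithmetic. The following is a minimal sketch and is not drawn from the performance systems discussed here. It assumes a block-based audio pipeline in which each processing stage holds one buffer of samples, so that each stage adds buffer_size / sample_rate seconds of delay. The function names, the buffer sizes, and the use of the 10 ms figure as a budget are illustrative assumptions.

```python
def buffer_latency_ms(buffer_size: int, sample_rate: int) -> float:
    """Delay in milliseconds introduced by holding one buffer of audio samples."""
    return 1000.0 * buffer_size / sample_rate


def total_latency_ms(stage_buffer_sizes, sample_rate: int) -> float:
    """Sum the buffering delay over a chain of stages (hypothetical pipeline)."""
    return sum(buffer_latency_ms(size, sample_rate) for size in stage_buffer_sizes)


def within_budget(stage_buffer_sizes, sample_rate: int, budget_ms: float = 10.0) -> bool:
    """True if the accumulated buffering delay stays below the assumed budget."""
    return total_latency_ms(stage_buffer_sizes, sample_rate) < budget_ms


if __name__ == "__main__":
    # Assumed example: input, DSP, and output stages running at 48 kHz.
    small = [128, 128, 128]
    large = [256, 256, 256]
    print(total_latency_ms(small, 48000), within_budget(small, 48000))
    print(total_latency_ms(large, 48000), within_budget(large, 48000))
```

The sketch only counts buffering delay. A real system would add sensing, transport, and rendering delays, and, as noted above, the consistency of the delay matters to a performer as much as its size.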

4. The Contributing Articles

This section is dedicated to introducing and surveying the eight contributing articles, detailing and positioning them as future propositions. It is noteworthy that the authors who contributed to this issue perform highly interdisciplinary research and found ways to contribute from many different perspectives. The presentation of their work is ordered in recognition of the articles’ diverse orientations. The first and second articles, authored by creative practitioners, are discussed in sequence to illuminate similarities and differences in aims and approaches, artistic dimension, maturity of detail, ways of engaging technologies, and implications for MTI. The third article is a literature survey proposing a framework for classifying creative outputs of interactive sound installations. From this trio of articles, readers may draw further implications on the relationship between documentation, representation, and creative practices. The fourth article is a perspective essay which pivots between the initial trio of creative investigations and a following set of scientific investigations. The latter offer innovative inquiries, presenting research questions and proposing solutions for musical instrument online learning, mobile computing, communicative gestures in music performance and cross-modal musical interaction. For each of these articles,

Section 5 shows the distribution of research in each article as aligned with the five enabling dimensions of musical interaction.

4.1. Using High-Performance Computers to Enable Collaborative and Interactive Composition with DISSCO, by S. Tipei, A. B. Craig, and P. F. Rodriguez

By engaging High Performance Computing (HPC) in music composition, the first author, Sever Tipei [

76] sustains the Illinois School of experimental music, active since 1956 when Lejaren Hiller produced the first algorithmic composition (

Quartet No. 4 for Strings ‘

Illiac Suite’, 1956 [

45]) utilizing the early Illiac supercomputer. Hiller, originally trained as a chemist, pioneered a kind of non-real-time musical interaction using computational processes for generating musical instances, which gave rise to what we call algorithmic compositions. Given a set of rules and instructions as inputs, computation returns instances of outputs, which Hiller translated into musical notation. Tipei et al. [

76] address how 21st century HPC may be engaged in the algorithmic processes applied to both musical structure and sound synthesis. The rules of the interaction, or the rules of the game played with a computer if you like, stipulate that to preserve algorithmic integrity the composers should not change the output to create arbitrary musical effects. Situating a machine process in the middle of a creative workflow entails an alternative human behaviour for creative pursuit in the presence of large-scale computation.

A kind of discourse, “from musical ideas to computers and back” as eloquently expressed by Herbert Brün [

77] is necessitated by the constraints and integrity imposed by time-bound state of the art HPC. Often, the discourse works in parallel for both optimising use cases and defining the next generation of HPC tools and methods. Perhaps, those with a fine taste of traditional music must be invited to experience a kind of “music that I don’t like, at least not yet”, meaning that such musical outputs may need time to mature in the listener’s expectations. This approach implies a kind of musical interaction where a listener has awareness of the input actions that were applied to produce musical outputs.

A participant’s awareness of input actions is a foundation of all musical interactions. In Tipei et al. musical interaction extends from sound production into the domain of composition. For those who work with an algorithmic process, this consideration is in part philosophical and in part ethical. What “I don’t like yet” is the result of what I did, meaning the choice can be made: Either change what I did as input, rather than change the output to imitate something I did not do, or I learn to understand the system and my inputs and keep discovering what the result may offer.

Tipei is a highly acclaimed composer and pianist who is committed to algorithmic compositions. His use of HPC is due to the intense computing power and parallel processing required to compute the massive number of oscillators for additive sound synthesis, granular synthesis, and stochastic processes to achieve coherent structure from sound grains to musical form. Note that a high level of computing power brings musical interaction to confront the vast number of instructions required to generate 20,000 samples per second for each monophonic sound source, in addition to instructions for rendering the musical events from notes to rhythms, from voices to harmonies, from phrases to sections and large forms.
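
To give a sense of the scale involved, the following is a minimal sketch of additive synthesis as a sum of sine partials, using the 20,000 samples per second quoted above. It is not DISSCO code. The function names, the choice of partial frequencies and amplitudes, and the durations are assumptions made for illustration.

```python
import math

SAMPLE_RATE = 20_000  # samples per second, the figure quoted in the text


def additive_sample(partials, t: float) -> float:
    """One output sample: the sum of sine partials given as (frequency_hz, amplitude)."""
    return sum(amp * math.sin(2.0 * math.pi * freq * t) for freq, amp in partials)


def render(partials, duration_s: float, sample_rate: int = SAMPLE_RATE):
    """Render a monophonic source sample by sample."""
    n_samples = round(duration_s * sample_rate)
    return [additive_sample(partials, n / sample_rate) for n in range(n_samples)]


def oscillator_evaluations(n_partials: int, duration_s: float,
                           sample_rate: int = SAMPLE_RATE) -> int:
    """Number of sine evaluations needed: one per partial per sample."""
    return n_partials * round(duration_s * sample_rate)


if __name__ == "__main__":
    # Hypothetical tone: 10 harmonics of 220 Hz with amplitudes falling off as 1/k.
    partials = [(220.0 * k, 1.0 / k) for k in range(1, 11)]
    samples = render(partials, duration_s=0.01)
    print(len(samples))                        # 200 samples for 10 ms of sound
    print(oscillator_evaluations(1000, 60.0))  # 1200000000 for 1000 partials over one minute
```

Even this toy count reaches over a billion oscillator evaluations for one minute of a single source with a thousand partials, before any compositional structure is computed, which suggests why parallel processing becomes attractive.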

Due to the experimental conditions of interacting with HPC, for composers like Tipei, there is no comfort zone for the habit of falling back on established styles and accepted aesthetics. Interacting with computers to create algorithmic compositions forces composers to focus creative attention on inquiries, not on styles. Tipei et al. represents a long history of working towards more sustainable ways of managing the compositional processes, in terms of generating massive instruction sets without relinquishing creative control, streamlining the time it takes, modularizing computing resources as reusable assets, and being able to work in teams. The result is an HPC collaborative platform applied to music. Due to the multiple skill requirements of working with HPC, collaboration is an important feature of the platform.

The article presents two main topics: (1) the platform called DISSCO (Digital Instrument for Sound Synthesis and Composition) which runs on the Comet supercomputer: the authors describe the components and technical details of implementation, and (2) the collaboration: the authors describe teamwork and collaboration management. Often, sound synthesis and musical forms are processed using different tools, incorporating either structured random functions or deterministic means. DISSCO is an integrated system that handles both sound synthesis and compositional structure. The latter is “…the implementation of an acyclic directed graph, a rooted tree, whose vertices or nodes represent “Events” at different structural levels.” Then the events from DISSCO need to be translated into the sequentially playable sound events, and the authors state, “…facilitating this translation for multiple users is what makes this platform implementation very different than organizing an online group of musicians with physical instruments.” Three modules constitute the architecture of DISSCO: a library of sound synthesis instruments, a composition module, and a Graphic User Interface (GUI). DISSCO runs nearly in real-time while affording multiple users working concurrently. Significant contributions of this paper include a benchmarking and sorting solution with optimal window size to solve the problem between parallel computing and the serial (temporal) nature of sounds. Here the project investigates a fundamental affordance of HPC. The design tasks involve asynchronous parallelization that is required to return signal outputs applying timely ‘hold and release’ for synchronization for musical requirements.
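
The translation step described above, from a rooted tree of events to sequentially playable sound events, can be sketched as follows. This is a hypothetical illustration and not DISSCO’s implementation: the `Event` class, its `offset` field (a start time relative to the parent), and the function names are assumptions introduced here.

```python
from dataclasses import dataclass, field
from typing import List, Tuple


@dataclass
class Event:
    """A node in a rooted tree; 'offset' is its start time relative to its parent."""
    name: str
    offset: float = 0.0
    children: List["Event"] = field(default_factory=list)


def flatten(event: Event, parent_start: float = 0.0) -> List[Tuple[float, str]]:
    """Collect leaf events as (absolute_start_time, name) pairs."""
    start = parent_start + event.offset
    if not event.children:
        return [(start, event.name)]
    leaves: List[Tuple[float, str]] = []
    for child in event.children:
        leaves.extend(flatten(child, start))
    return leaves


def to_timeline(root: Event) -> List[Tuple[float, str]]:
    """Sort leaves by start time so that they can be played back sequentially."""
    return sorted(flatten(root))


if __name__ == "__main__":
    # Branches are deliberately listed out of temporal order.
    piece = Event("piece", children=[
        Event("section B", offset=8.0, children=[Event("sound 3", offset=0.5)]),
        Event("section A", offset=0.0, children=[
            Event("sound 2", offset=4.0),
            Event("sound 1", offset=1.0),
        ]),
    ])
    for start, name in to_timeline(piece):
        print(f"{start:5.1f}  {name}")
```

Because branches of the tree can be computed independently and in any order, the final sort stands in for the synchronization step: parallel results are held until they can be released in temporal order.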

A further contribution is the explication of collaboration in composition necessitated by skill requirements, which touches upon another dimension of interaction dynamics for compositional activities. Studies of collaborative composition in cases, articulations, and knowledge are rare and beneficial for musical interaction research. A human-to-human interaction demands behavioral adaptation to sustain a collaboration until a mutually satisfactory musical output is achieved. While these topics are not fully developed in this article, it serves as an overture for widening a scope of discourse over decision-making processes. By using a platform like DISSCO, we can observe more systematically why and how choices of computing techniques are made and how those choices are based on musical intents. It also involves optimizing computational resources (parallel processes and multicore distribution) to support the complexity of instruction sets for sound synthesis, which requires high-definition signal processing to produce detailed musical qualities. Tipei et al. increases the relevance of musical practice migrating from the experimental field of computer music to contemporary technological practices, serving as a conduit to deepen collaborative insight, to spin an evolving aesthetics with respect to computing intervention and to question the values musical practice may offer.

4.2. Promoting Contemplative Culture through Media Arts, by J. Wu

Jiayue Wu’s article [

78] is an invitation to view the landscape of the author’s creative practice engaging multimodal technologies. It can be considered as an individual practitioner’s autoethnographic case study of how MTI can facilitate what can be described as an experience transfer, through technological appropriation for a cultural practice, in Wu’s case by transferring sensorial resources from the Tibetan spiritual practice. The article compiles three creative outputs produced over several years under a common theme of contemplative cultural practice. Presented as case studies, each project is elaborated by the aims, artistic goals, techniques, process descriptions, collaboration, and results. Due to the complexity of combined technologies and ethno-musical dimensions, the three projects involved various collaborators with a common goal as the author states, “…to address the questions of how media arts technology and new artistic expressions can expand the human repertoire, and how to promote underrepresented culture and cross-cultural communication through these new expressions.”

The first case study presents the multimedia performance piece, The Virtual Mandala. The interactive piece utilizes both live voice and electronic instruments, particle simulation for visualising the sand-like formation of Mandala, motion tracking, physical to virtual space mapping, MIDI activated real-time control input to sound synthesis module and interactive 3D object files for creating atmospheric ambience. The form follows the traditional Mandala process of construction, climax, and deconstruction. The second case study presents Tibetan Singing Prayer Wheel, a “haptic-audio system” engaging a physical controller for a multimedia experience emulating the circular motion inputs to a singing bowl. Three input channels pass signals from the voice, gestures from the prayer wheel, and a set of trigger onsets to activate a virtual singing bowl, voice processing, and synthesis modules. The result is a sound installation where an audience can ease into an interactive exploration leveraging the interface shapes and gesture inputs, intuitively mapped into the resulting visual and sound experiences. The third case study presents Resonance of the Hearts, which utilizes a pattern recognition system for a set of hand forms called “Mudra” which are used as control gestures to trigger and manipulate sounds. For continuous gesture to continuous sound generation mapping, a special fractal rendering technique is appropriated in real-time, with a machine learning technique that anticipates the next state of hand trajectories while recognising the current state, and with sensors that accommodate bare-hand mobility, allowing the flexibility to convey “the beauty of the ancient form” in unobtrusive ways. The result is multifaceted as it was used for teaching and learning in classroom situations, real-time performance and an interactive installation.

Wu’s work touches on the enabling dimensions of integrated design and music as well as second-order feedback. These are discussed in informal ways describing technical challenges. Her work appeals to general audiences for its artistic presentation of alternative cultural experience with easy-to-engage interface experiences. Through the three case studies, the author states that her goal is to create “Embodied Sonic Meditation”. In terms of scholarly presentation, the writing style of the article combines the style of artist statements regarding the source of inspirations, artistic goals, an aesthetic motivation, and the style of describing the work that was completed, the dissemination channel, and the audience responses. The inclusion of Wu’s article in this issue provides for a bidirectional discourse between an authentic voice of a creative practitioner and a scientific norm of a scholarly assessment. Rather than formal user studies, Wu adopts artist-centered subjective observations and informal descriptions of audience responses. The paper eschews technical details and methodologies, focusing on personal discoveries rather than foundational relationships to prior art.

As a final remark, it is worthwhile to discuss a creative impulse and motivation with respect to the wisdom and anticipated cost of working with technologies. Wu states, “…from these case studies, I also discovered that sometimes even a cutting-edge technology may not achieve the original goal that an artist planned.” Often a cutting-edge technology presents more challenges than the solutions it offers, and this is what mobilizes the creative impulse for those experimental composers and artists who address challenges more than opportunities and niches. Perhaps, had they known from the very beginning what may come out of the creative tunnel and the costs through the process, some creative outputs might have never seen the light of day. Yet often, those practitioners engage the challenges one after another, not because what came out of the tunnel from the previous project was recognized and rewarded by others, but because what came out of the tunnel differs not only in its kinds of reward but also in its further possibilities. How a discourse unfolds between and within the process of creation and the culture that hosts it is an open question. To begin with, creative practitioners need to be critical about their own artistic goals: what are they pursuing, an aesthetic effect or a creative cause?

4.3. Comprehensive Framework for Describing Interactive Sound Installations: Highlighting Trends through a Systematic Review, by V. Fraisse, M. Wanderley, and C. Guastavino

In terms of a creative engagement of sounds, we can consider two broad modes: sound as an exclusive medium and sound as a primary medium alongside other modalities. In Western European tradition, the former is more familiar to the contemporary audience who will likely turn on a music channel or go to concerts to hear music. For the latter, human societies have always engaged sounds in various activities such as farming, rituals, hunting and social play. However, in the Western European tradition, the origin of the latter can be traced to the early 20th Century Dada and Avant Garde movements as a manifestation of breaking out of the concert tradition. This backdrop is foregrounded to suggest that sitting in a concert hall to listen to sounds offers less certain engagement compared to actively doing something with or along with sounds or making sounds. Concert-goers may immerse themselves for hours and hours watching how kinesthetic patterns and coordination among orchestra members play out in time and how such interactions constitute sounds. At the same time, we should not overlook the consideration that active and participatory ways of engaging sounds may be more natural for people. The last two decades saw prolific practices of sound as a primary medium under the label of “public art”, “interactive sounds”, “sound installation” or “site-specific audio art”. Those art forms often deploy sensors with varying degrees of intelligence to encourage and process audiences’ participatory behaviors. Despite the prevalence of sound installations, there have been relatively few systematic inquiries on how these practices come together, what technologies and methodologies are used, what the artists think they are doing and whether their intentions resonate with audiences. Previous research provides useful contributions mostly focusing on interfaces, yet often these accounts are anecdotal without structural analyses linking systems and aesthetic outcomes. 
In that regard, this article is highly relevant for this issue because it is one of the first aiming at an overarching framework, inclusive of prior works with specificity, that is extensible for describing musical interaction.

Fraisse et al. [

79] explore systematic approaches for describing interactive sound installations, regardless of whether the purpose is engineering or artistic. The researchers used literature review methodology adopting the curatorial protocol called PRISMA, and indirectly investigated 195 interactive sound installations to extract descriptors from 181 publications where the installations were discussed. Clearly this methodology will result in exclusion of all sound installation works that were not discussed in the 181 sources. However, the authors are very clear about inclusion and exclusion criteria, search processes, and further curatorial processes involving qualitative assessment as well as manual coding. A further consequence is that more relevant work in the Scopus database would have been excluded from the search process, simply because the publications lacked the search terms. There are also several merits: First, the corpus is a collection of publications that went through peer review where obscure terms and jargon have been scrutinised. Second, by limiting the corpus to research-oriented documents, the process is likely less overburdened by artists’ statements that have different communication protocols and goals. Third, since the methodology is relatively transparent, the limitations and omissions are clear; what is omitted and why are obvious, and the omissions are not a shortcoming of the methodology or of the authors’ research design. If anything, the last point speaks to how and why works of art will benefit by being informed of and aligned with a kind of literacy, as compiled by Fraisse’s team as an example, encouraging artists’ responsibility of authenticating their descriptions.

The result of the literature survey is a taxonomy with a maximum of four layers in the hierarchy across 111 taxa. The root level consists of three nodes: artistic intention, interaction, and system design, which are described as the three complementary perspectives. Nodes in the middle and leaf layers are organized according to the three perspectives and constitute a conceptual framework for describing interactive installations. The authors present the resulting taxonomy as the proposed framework, which can be considered more than a taxonomy because it is framed to enable further insights, encouraging readers to explore an interactive data visualization on their website. For exploration and interpretation of the data, one should always bear in mind that the data is corpus bound and does not represent sound installation practice at large, as the authors note. The organization of the article is effective and includes peripheral data such as bibliometrics showing a steep rise in publications between 2000 and 2006, which may indicate the increased accessibility of technologies and prototyping opportunities with integrated circuits, sensors and actuators, and LAN bitrates. Other informative data include the landscape of research fields around the topic with diverse focus and motivation: music and computer science applications are equal top contributors, followed by software fields. While expected, this can be interpreted as a concentration on the implementation of prototypes or artworks exploiting technological and application opportunities, with less focus on user studies and little on explanatory frameworks. In that context, the article by Fraisse et al. is a timely contribution, especially with its systematic method eliciting a clean set of terminology. Implicit in their framework is an ecology of the musical interaction research community, various constituents in interdisciplinarity, and diverse profiles in terms of project motivations. 
Throughout the article, the authors meticulously present their findings, and it is worthwhile to stress that some findings are valuable indicators of limitations and challenges directed to practitioners, primarily in how they purpose the documentation of their creative practices, and secondarily in how they choose descriptors compatible with the semantics they wish to associate with their creative practices.

Despite, or perhaps because of, its simplicity, the framework’s three organizing perspectives are both complementary and comprehensive. It seems unnecessary to arrive at additions or alternative perspectives even with a larger corpus of literature and bespoke vocabularies. An adaptive framework may be considered by increasing the affordance of the framework to avoid a closed reinforcement cycle between the inclusion criteria and the existing taxa. At the same time, it may be challenging to align arts- and humanities-oriented language with physical science- and engineering-oriented language. Perhaps a cleanly defined scope of language is a choice that needs to be respected.

4.4. Representations, Affordances, and Interactive Systems, by R. Rowe

Robert Rowe’s article [55] provides a deep perspective on the relationship between system and musical practice, through the concept of representation and how it affects musical activities from conceiving an interactive system to composing and performing. Underlying Rowe’s perspective is the conjecture that creative outputs are not separable from the systems with which they are produced. While this may be obvious for some readers, in music practice, systems cannot be taken for granted as means and tools for some unarticulated higher priority that one desires, or for a mere pursuit of a musical effect to evoke an affective state, without closely examining the compatibility between the musical information one wishes to encode and the system to encode it. The article ends with an inspirational note indicating that, by thinking through “the issues of representation, abstraction, and computation”, artists are positioned to make a central contribution now (more than ever before), for “Artificial intelligence has great utility and has made rapid progress, but still has a long way to go”.

In discussing symbolic and sub-symbolic representation, Rowe presents music notation (from the Common Practice Period) as an example of symbolic representation, as compared to raw samples from audio recording as an example of sub-symbolic representation. The discussion of MIDI is nuanced considering how much it dominated the computer music community with its “standardized representation” as a communication protocol between keyboard and sound synthesizer. For electro-acoustic music, a progenitor of MIDI, the relationship between control and output signals is at the heart of music creation. Before MIDI, readers may imagine, there were two primary ways of coding the relationship between control signals and an output signal: either electrical, through wiring complex patterns of patch cables in analog synthesizers such as Buchla or Moog; or digital, through classical software programming languages (such as Fortran or C). Compared to these precedents, MIDI offered an efficient and convenient way of setting the relationship between control signals and musical output signals. With the use of MIDI, however, Rowe systematically draws out the implication that users, in exchange for convenience, may unwittingly commit to accepting hidden layers of processing over which they have little control. For example, consider the hidden processing where the system registers time stamps from MIDI messages and organizes them into Western-style musical time units. This resonates with the discourse between the French and Italian schools of the 13th and 14th centuries over encoding the medieval rhythmic modes into the system of notation (Appendix A). Rowe is referring to the age of MIDI when he states, “The wild success and proliferation of the MIDI standard engendered an explosion of applications and systems that are still based today on a 35-year-old conception of music”, but the Western keyboard paradigm that informs the discrete control and symbolic levels of representation in the MIDI protocol dates far earlier than the Common Practice Period.
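
The hidden processing mentioned above, in which time stamps are organized into Western-style time units, can be illustrated with a minimal Python sketch. It assumes a fixed tempo and a sixteenth-note grid; the function name `quantize_onsets` and its parameters are hypothetical and are not taken from Rowe’s article or from the MIDI specification.

```python
def quantize_onsets(onsets_sec, tempo_bpm=120.0, subdivisions_per_beat=4):
    """Snap onset times (in seconds) to the nearest metrical grid position.

    Assumption: a constant tempo and an even subdivision of the beat.
    Whatever deviation the performer played is discarded by the rounding.
    """
    grid_sec = 60.0 / tempo_bpm / subdivisions_per_beat  # duration of one grid step
    return [round(t / grid_sec) * grid_sec for t in onsets_sec]


if __name__ == "__main__":
    played = [0.02, 0.26, 0.49, 0.80]  # hypothetical performed onsets
    snapped = quantize_onsets(played)
    for before, after in zip(played, snapped):
        # The difference is the timing nuance lost to the representation.
        print(f"played {before:.2f} s -> grid {after:.3f} s")
```

In this sketch the choice of grid is made once, by whoever sets `subdivisions_per_beat`, and every later use of the data inherits it. That is one small instance of a representation deciding in advance what information is kept.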

As Rowe critically examines, the determination of “what information is sufficient to encode” hinges on a system of representation. Young composers in our digital age will benefit by deeply internalizing this reality; although the acquisition of musical expertise requires guidance and support with well-established traditions, there is no obvious tradition for musical tasks engaging modern technologies. As discussed earlier, the tradition of common practice symbolizes the long history of evolution from practice to theory; in turn the theory provides prescriptive functions for the practice, and this cycle continues until the system is exhaustively exploited. For better or worse, in the absence of such a tradition for the use of computation in the late 20th century, we are in a complex landscape of many systems and musical practices taking idiosyncratic paths, where no coherent ecology can be seen other than the exemplary traces of thought and activity of composers like Koenig, Hiller, Xenakis, and Eno as described in Rowe’s article. In this regard, the article carries us further by introducing the current challenges in Artificial Neural Networks (ANNs), their black-box quality and the difficulties in representing multimodal signals. Despite ANNs’ sub-symbolic capacity for encoding training sets, their internal representation of the learning process as weight functions is far from grounded in the ways we understand how knowledge formation may occur. Certainly, this concern is shared by the explainable AI community, which seeks to improve the transparency of the internal processes of black boxes and the explanation of deep network representation [80, 81, 82]. With Rowe’s train of thought and articulation, it is logical that he arrives at Gibson’s ecological perception and the concept of affordance. Rowe presents affordance in relation to accessibility of system control, as he states “…the exposed control parameters present an explicit set of affordances”. This makes sense if an affordance is perceivable by artists so as to expose its representation of available spaces for making artistic choices, and if that explicitness in the set of affordances is engineered by a system designer through a system of representation. Therefore, which one comes first, affordance or representation, is subject to further thought.

Rowe’s article raises three considerations that are imperative for future discourse on musical interaction: mapping, navigation, and representation. Referring to user control of computer-generated sound, Rowe presents the difference between mapping and navigation, stating “The difference appears as a change in orientation toward the underlying representations: mapping creates point-to-point correspondences between input features, or groups of features, and output behaviours. Navigation (or sailing) suggests the exploration of a high-dimensional space of possibilities whose complex interactions will emerge as we move through them.” Here, Rowe’s use of “mapping” is implicitly aligned with a specific use in the computer music community. Rowe cites the work of Chadabe [83], who introduces a focused use of “mapping” to refer to the creation of a type of audible relationship between control signal and sound. Musical output is sometimes criticised when a control mapping produces an invariant audible signature in the musical flow. By contrast, the general use of “mapping” is synonymous with implementing a transfer function for scaling a control signal to a range of synthesis parameter values. It is unlikely that a navigation paradigm can be implemented without the use of a general transfer function. Mapping in this sense is a useful technique for defining choices and constraints to discover emerging control spaces in a continuous and multi-dimensional exploration. The general concept describes a necessary condition for information encoding and resolution capacity.
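
The general sense of mapping as a transfer function can be sketched in a few lines of Python. The example assumes a 7-bit controller range (0 to 127) and a frequency parameter in hertz; the function names and ranges are illustrative assumptions and are not taken from Rowe or Chadabe.

```python
def _normalize(value, in_min, in_max):
    """Scale a control value to 0..1, clamping values outside the input range."""
    norm = (value - in_min) / (in_max - in_min)
    return min(1.0, max(0.0, norm))


def map_linear(value, in_min, in_max, out_min, out_max):
    """Linear transfer function: equal control steps give equal parameter steps."""
    return out_min + _normalize(value, in_min, in_max) * (out_max - out_min)


def map_exponential(value, in_min, in_max, out_min, out_max):
    """Exponential transfer function: equal control steps give equal ratios.

    Requires out_min > 0; often preferred for frequency-like parameters.
    """
    return out_min * (out_max / out_min) ** _normalize(value, in_min, in_max)


if __name__ == "__main__":
    # Hypothetical controller sweep mapped to a 100-1600 Hz frequency range.
    for cc in (0, 32, 64, 96, 127):
        print(cc, round(map_linear(cc, 0, 127, 100.0, 1600.0), 1),
              round(map_exponential(cc, 0, 127, 100.0, 1600.0), 1))
```

Both functions are mappings in the general sense: they define the range and resolution of a control space without fixing what is done within it. Moving through a multi-dimensional parameter space would still call on functions of this kind, one per dimension, which is the sense in which a general transfer function underlies navigation.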

In terms of navigation, if navigation also engages a mode of exploration, then the very nature of exploration is not to “aimlessly circle through undifferentiated choices”, because an exploration is inherently based on a what-if scenario. Human perception and cognition can only defer aimlessness in attention even at the level of simple awareness. To resist limitations imposed on compositional orientation is understandable, but the general function of mapping is also exploration-enabling.

For representation, we may further investigate the relationship and the order of representation and affordance as Rowe has put forward. Recent neuroscientific findings indicate that a genome does not encode representations or strategies based on ANN-type optimization principles. Genomes encode wiring rules and patterns from which instances of behaviors and representations are generated [84]. This ties in well with Gibson’s theory of senses as active and outreaching perceptual systems to acquire perceptual information about the world (see Section 3 above: “to detect something” rather than “to have a sensation”). As our perceptual systems are highly interrelated and their activity level coordinates movement and sensing, this is an area we need to examine further on two sides: what affordance musical interaction may bring to MTI and what affordance MTI may bring to musical interaction, in ways that the two sides mutually expand the margins. Perhaps this activity contributes to the plasticity of neuronal wiring, which creates further affordances or alternative ones, and this should inspire the future perspectives of musical interaction involving AI.

In sum, Rowe’s perspective article provides both critical insight and new orientation for younger generations of composers, with highly informed interdisciplinary concepts. Deeply committed to the future of interactive systems informed by computer music literacy, Rowe brings interdisciplinary connections to refresh the foundational inquiries for musical information encoding and representation. The above discussion is much indebted to the authenticity of Rowe’s article and the maturity of his enduring creative practices.

4.5. What Early User Involvement Could Look like—Developing Technology Applications for Piano Teaching and Learning, by T. Bobbe, L. Oppici, L.-M. Lüneburg, O. Münzberg, S-C. Li, S. Narciss, K-H. Simon, J. Krzywinski, and E. Muschter

Bobbe et al. [85] propose an application of Tactile Internet with Human-in-the-Loop (TaHIL) to piano lessons and present their studies exploring online teaching and learning scenarios. One can argue that the concept of TaHIL [86, 87] is not new considering many elements housed in that idea have been around for a long time, especially from the era of telepresence and teleoperation [

88,