Parallel and Distributed Computing: Algorithms and Applications

A topical collection in Algorithms (ISSN 1999-4893). This collection belongs to the section "Parallel and Distributed Algorithms".

Editors

Interests: design and analysis of algorithms; parallel and distributed computing; mobile ad hoc networks; sensor networks

Interests: design of algorithms; parallel and distributed computing; pervasive computing; sensor networks; security and privacy issues in pervasive environments

Topical Collection Information

Dear Colleagues,

Parallel and distributed computing is now ubiquitous in nearly all computational settings, from mainstream computing to high-performance and/or distributed architectures such as clouds and supercomputers. The ever-increasing complexity of parallel and distributed systems calls for effective algorithmic techniques that can unleash their enormous computational power and deliver the performance that parallel and distributed computing promises. Moreover, the new possibilities offered by high-performance systems pave the way for a new genre of applications that were considered far-fetched only a short while ago.

This Topical Collection is focused on all algorithmic aspects of parallel and distributed computing and applications. Essentially, every scenario where multiple operations or tasks are executed at the same time is within the scope of this Topical Collection. Topics of interest include (but are not limited to) the following:

- Theoretical aspects of parallel and distributed computing;

- Design and analysis of parallel and distributed algorithms;

- Algorithm engineering in parallel and distributed computing;

- Load balancing and scheduling techniques;

- Green computing;

- Algorithms and applications for big data, machine learning and artificial intelligence;

- Game-theoretic approaches in parallel and distributed computing;

- Algorithms and applications on GPUs and multicore or manycore platforms;

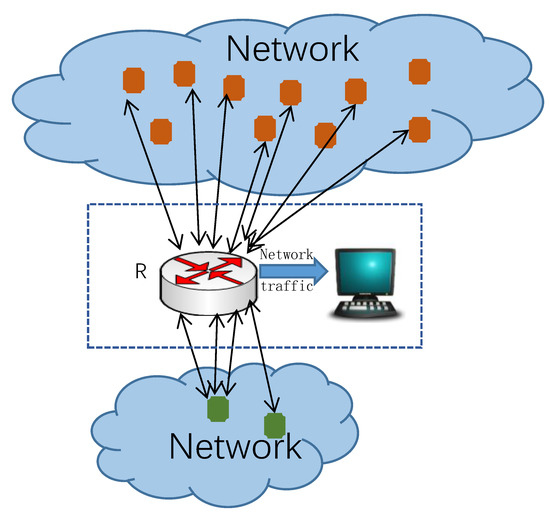

- Cloud computing, edge/fog computing, IoT and distributed computing;

- Scientific computing;

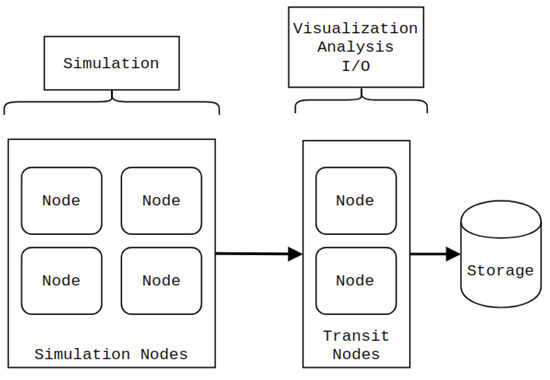

- Simulation and visualization;

- Graph and irregular applications.

Dr. Charalampos Konstantopoulos

Prof. Dr. Grammati Pantziou

Collection Editors

Manuscript Submission Information

Manuscripts should be submitted online at www.mdpi.com by registering and logging in to this website. Once registered, authors can access the submission form. Manuscripts can be submitted until the deadline. All submissions that pass pre-check are peer-reviewed. Accepted papers will be published continuously in the journal (as soon as accepted) and will be listed together on the collection website. Research articles, review articles, and short communications are invited. For planned papers, a title and short abstract (about 100 words) can be sent to the Editorial Office for announcement on this website.

Submitted manuscripts should not have been published previously, nor be under consideration for publication elsewhere (except conference proceedings papers). All manuscripts are thoroughly refereed through a single-blind peer-review process. A guide for authors and other relevant information for submission of manuscripts is available on the Instructions for Authors page. Algorithms is an international peer-reviewed open access monthly journal published by MDPI.

Please visit the Instructions for Authors page before submitting a manuscript. The Article Processing Charge (APC) for publication in this open access journal is 1600 CHF (Swiss Francs). Submitted papers should be well formatted and written in good English. Authors may use MDPI's English editing service prior to publication or during author revisions.

Keywords

- Parallel algorithms

- Distributed algorithms

- GPUs

- Multicore and manycore architectures

- Supercomputing

- Data centers

- Big data

- Cloud architectures

- IoT