Sensors and Robotics for Digital Agriculture

A topical collection in Sensors (ISSN 1424-8220). This collection belongs to the section "Sensors and Robotics".

Editors

Interests: operation management; supply chain automation; agri-robotics; ICT-agri

Interests: precision agriculture; remote sensing; sensor networks; IoT; digital farming; decision support systems; agricultural engineering; agricultural automations


Topical Collection Information

Dear Colleagues,

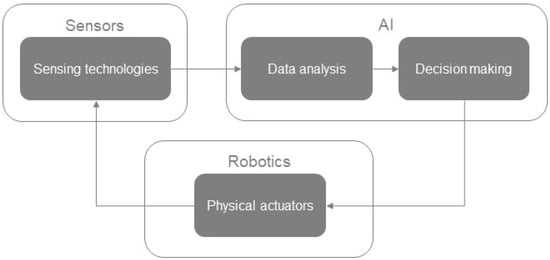

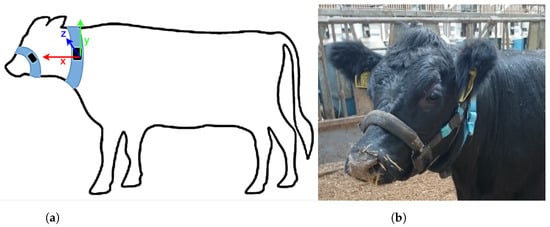

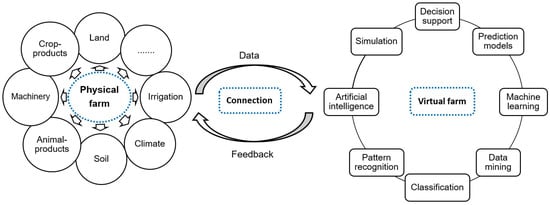

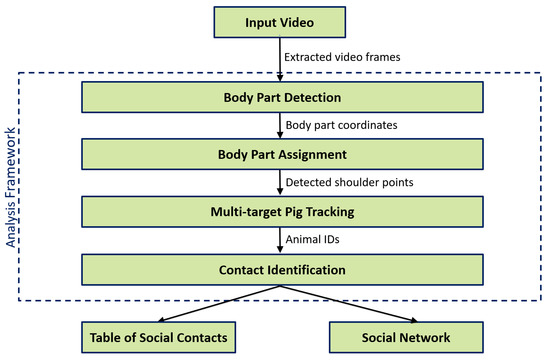

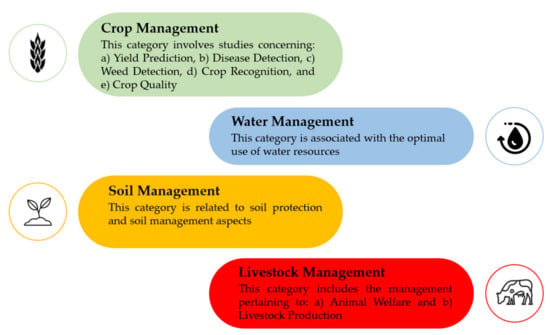

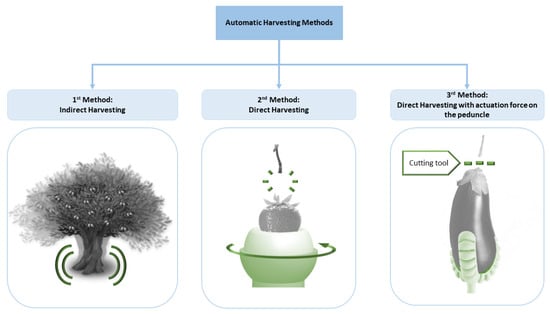

In recent years, there has been growing interest in sensors and robotic systems as part of the digitalization of agriculture, which has the potential to greatly increase the efficiency and sustainability of agricultural systems. Agricultural robots (including automation and advanced intelligent IT systems) can accomplish various tasks that can lead to more efficient farm management and improved profitability. Sensors deployed on agricultural robots are essential both for the robots' autonomy and for their agronomic functions. For autonomy, these include navigation sensors, context- and situation-awareness sensors, and sensors that ensure the safe execution of operations. For agronomic functions, they include sensor technologies for yield mapping and measurement, soil sensing, nutrient and pesticide application, irrigation control, selective harvesting, and similar tasks, all within the framework of precision agriculture applications.

The purpose of this Topical Collection is to publish research articles, as well as review articles, addressing recent advances in systems and processes in the field of sensors and robotics within the context of precision agriculture. We seek original, high-quality contributions that have not yet been published and are not currently under review by other journals or peer-reviewed conferences.

Research topics include, but are not limited to:

- Human–robot interaction;

- Computer vision;

- Robot sensing systems;

- Artificial intelligence and machine learning;

- Sensor fusion in agri-robotics;

- Variable rate applications;

- Farm management information systems;

- Remote sensing;

- ICT applications;

- UAVs in agriculture;

- Agri-robotics navigation and awareness;

- SLAM (simultaneous localization and mapping);

- Resource-constrained navigation in agricultural environments;

- Mapping and obstacle avoidance in agricultural environments.

Prof. Dr. Dionysis Bochtis

Dr. Aristotelis C. Tagarakis

Guest Editors

Manuscript Submission Information

Manuscripts should be submitted online at www.mdpi.com by registering and logging in to this website. Once you are registered, proceed to the submission form. Manuscripts can be submitted until the deadline. All submissions that pass the pre-check are peer-reviewed. Accepted papers will be published continuously in the journal as soon as they are accepted and will be listed together on the collection website. Research articles, review articles, and short communications are invited. For planned papers, a title and short abstract (about 100 words) can be sent to the Editorial Office for announcement on this website.

Submitted manuscripts should not have been published previously, nor be under consideration for publication elsewhere (except conference proceedings papers). All manuscripts are thoroughly refereed through a single-blind peer-review process. A guide for authors and other relevant information on manuscript submission are available on the Instructions for Authors page. Sensors is an international, peer-reviewed, open access, semimonthly journal published by MDPI.

Please visit the Instructions for Authors page before submitting a manuscript. The Article Processing Charge (APC) for publication in this open access journal is 2600 CHF (Swiss Francs). Submitted papers should be well formatted and use good English. Authors may use MDPI's English editing service prior to publication or during author revisions.