Cloud Classification in Wide-Swath Passive Sensor Images Aided by Narrow-Swath Active Sensor Data

Abstract

1. Introduction

2. Sensors and Data

2.1. Sensors

2.1.1. MODIS

2.1.2. CPR

2.2. Data

2.2.1. MODIS Data

2.2.2. CPR Data

3. Similar Radiance Matching Hypothesis

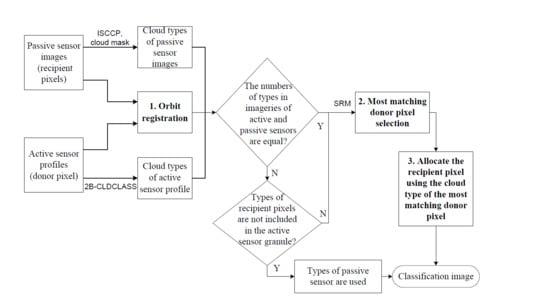

4. Cloud Classification Strategy

4.1. Orbit Registration Process

4.2. Most Matching Donor Pixel Selection

5. Results

5.1. SRM Hypothesis Analysis Results

5.2. Orbit Registration

5.3. Cloud Classification

6. Discussion

7. Conclusions

Author Contributions

Acknowledgments

Conflicts of Interest

Appendix A. Boundary Selection of the Orbit Registration Criterion

| Cloud type | Cloud-top height, CTH (km) | Cloud-base height, CBH (km) |
|---|---|---|
| Cirrus (Ci) | 12.77 ± 2.27 | 10.41 ± 2.64 |
| Altostratus (As) | 6.36 ± 2.66 | 4.01 ± 3.01 |
| Altocumulus (Ac) | 4.31 ± 1.63 | 3.14 ± 1.41 |
| Stratus (St) | 1.06 ± 0.65 | 0.71 ± 0.54 |
| Stratocumulus (Sc) | 1.66 ± 0.81 | 0.88 ± 0.68 |
| Cumulus (Cu) | 2.19 ± 1.62 | 0.81 ± 1.42 |
| Nimbostratus (Ns) | 4.43 ± 2.10 | 0.47 ± 2.34 |
| Deep convective clouds (Dc) | 5.42 ± 1.90 | 0.56 ± 1.54 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wang, H.; Xu, X. Cloud Classification in Wide-Swath Passive Sensor Images Aided by Narrow-Swath Active Sensor Data. Remote Sens. 2018, 10, 812. https://doi.org/10.3390/rs10060812
