A Novel Object-Based Supervised Classification Method with Active Learning and Random Forest for PolSAR Imagery

Abstract

1. Introduction

2. Materials and Methods

2.1. Polarimetric Feature Extraction

2.2. Generalized Statistical Region Merging (GSRM)

2.2.1. Merging Predicate

2.2.2. Merging Order

2.3. Active Learning (AL)

| Algorithm 1. The general process of AL. |

|---|

| Input: labeled sample set L, unlabeled sample set U, the required number of training samples |

| 1. Initialize the training sample set by random selection from the labeled sample set L |

| 2. While the size of the training sample set is below the required number do |

| 3. Learn a model with classifier C from the training sample set |

| 4. Select the most informative samples based on query function Q |

| 5. Label the most informative samples from the unlabeled sample set U |

| 6. Update the unlabeled sample set and the new labeled sample set |

| 7. |

| 8. End while |

| Output: the training sample set of the most informative samples |
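The loop in Algorithm 1 can be sketched in a few lines of Python. This is only an illustrative sketch under stated assumptions, not the authors' implementation: the classifier C is replaced by a simple nearest-centroid stand-in so the example needs no external libraries (the paper itself uses random forest), the query function Q is assumed to be a breaking-ties style score (the gap between the two largest class posteriors), and the names `active_learning`, `centroid_posteriors`, `breaking_ties`, `oracle`, `init_size`, and `batch` are hypothetical.

```python
import math
import random


def centroid_posteriors(train, x):
    """Stand-in for classifier C: pseudo-posteriors from distances to class centroids."""
    sums, counts = {}, {}
    for features, label in train:
        acc = sums.setdefault(label, [0.0] * len(features))
        for i, value in enumerate(features):
            acc[i] += value
        counts[label] = counts.get(label, 0) + 1
    dists = {
        label: math.dist([v / counts[label] for v in acc], x)
        for label, acc in sums.items()
    }
    nearest = min(dists.values())  # shift distances so exp() cannot underflow to all zeros
    scores = {label: math.exp(-(d - nearest)) for label, d in dists.items()}
    total = sum(scores.values())
    return {label: s / total for label, s in scores.items()}


def breaking_ties(posteriors):
    """Assumed query function Q: gap between the two largest posteriors (small = informative)."""
    ranked = sorted(posteriors.values(), reverse=True)
    return ranked[0] - ranked[1] if len(ranked) > 1 else 1.0


def active_learning(labeled, unlabeled, oracle, target_size, init_size=2, batch=1, seed=0):
    if not labeled:
        raise ValueError("at least one labeled sample is required")
    rng = random.Random(seed)
    # Step 1: random initial training set drawn from the labeled set L.
    train = rng.sample(labeled, k=min(init_size, len(labeled)))
    pool = list(unlabeled)
    # Step 2: iterate until the training set reaches the requested size.
    while len(train) < target_size and pool:
        # Steps 3-4: score every pool sample with the model built from the
        # current training set; the most ambiguous samples sort first.
        pool.sort(key=lambda x: breaking_ties(centroid_posteriors(train, x)))
        take = min(batch, target_size - len(train))
        picked, pool = pool[:take], pool[take:]
        # Steps 5-6: the oracle labels the picked samples, which then move
        # from the unlabeled pool into the training set.
        train.extend((x, oracle(x)) for x in picked)
    return train
```

With two labeled one-dimensional samples at 0 and 10, a pool sample near the midpoint has the smallest posterior gap and is queried first, which is the behaviour an uncertainty-based query function is meant to produce. Swapping the stand-in for a random forest only requires replacing `centroid_posteriors` with a function that returns per-class probabilities.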

2.3.1. The Mutual Information (MI)-Based Criterion

2.3.2. Breaking Ties (BT) Algorithm

2.3.3. The Modified Breaking Ties (MBT) Algorithm

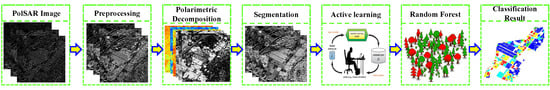

2.4. The Procedure of the Proposed Method

3. Experiments and Results

3.1. Description of the Image Datasets

3.2. Experiments and Results

3.2.1. Experiments with AIRSAR Image

3.2.2. Experiments with UAVSAR Image

3.2.3. Experiments with RadarSat-2 Image

4. Discussion

5. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Polarimetric Parameters | Physical Description |

|---|---|

| $\sigma_{HH}$, $\sigma_{HV}$, $\sigma_{VV}$ | The backscattering coefficients of HH ($\sigma_{HH}$), HV ($\sigma_{HV}$), and VV ($\sigma_{VV}$) |

| $\phi_{HH-VV}$ | The copolarized phase difference between HH and VV |

| C3 | The nine elements of covariance matrix C3 |

| T3 | The nine elements of coherence matrix T3 |

| H/A/Alpha | The entropy (H), anisotropy (A), and alpha angle (Alpha) obtained from Cloude decomposition |

| Freeman_Odd Freeman_Dbl Freeman_Vol | The parameters of surface scattering, double scattering, and volume scattering obtained from Freeman–Durden three-component decomposition |

| VanZyl3_Odd VanZyl3_Dbl VanZyl3_Vol | The parameters of surface scattering, double scattering, and volume scattering obtained from van Zyl decomposition |

| Yamaguchi4_Odd Yamaguchi4_Dbl Yamaguchi4_Vol Yamaguchi4_Hlx | The parameters of surface scattering, double scattering, volume scattering, and helix scattering obtained from Yamaguchi four-component decomposition |

| TSVM_psi TSVM_tau TSVM_phi TSVM_alpha | The sixteen decomposition parameters from TSVM decomposition |

| Arii3_NNED_Odd Arii3_NNED_Dbl Arii3_NNED_Vol | The parameters of surface scattering, double scattering, and volume scattering obtained from Arii decomposition |

| Categories | MBT_pixel | MBT_T3 | RS | MI | BT | MBT |

|---|---|---|---|---|---|---|

| Rape seed | 0.7891 ± 0.0183 | 0.9325 ± 0.0071 | 0.9658 ± 0.0025 | 0.9997 ± 0.0002 | 0.9608 ± 0.0034 | 0.9985 ± 0.0007 |

| Stem beans | 0.9441 ± 0.0095 | 0.9607 ± 0.0053 | 0.9350 ± 0.0047 | 0.7747 ± 0.0214 | 0.9949 ± 0.0005 | 0.9958 ± 0.0012 |

| Bare soil | 0.2694 ± 0.0212 | 0.7284 ± 0.0115 | 0.9116 ± 0.0102 | 0.9998 ± 0.0001 | 0.9910 ± 0.0010 | 0.9998 ± 0.0001 |

| Water | 0.9636 ± 0.0064 | 0.9637 ± 0.0087 | 0.9876 ± 0.0034 | 0.9997 ± 0.0002 | 0.9916 ± 0.0004 | 0.9996 ± 0.0001 |

| Forest | 0.8947 ± 0.0120 | 0.9572 ± 0.0046 | 0.8219 ± 0.0072 | 0.9969 ± 0.0024 | 0.9791 ± 0.0011 | 0.9974 ± 0.0010 |

| Wheat C | 0.8975 ± 0.0074 | 0.9802 ± 0.0027 | 0.9926 ± 0.0012 | 0.9996 ± 0.0001 | 0.9988 ± 0.0002 | 0.9989 ± 0.0004 |

| Lucerne | 0.9101 ± 0.0038 | 0.9917 ± 0.0013 | 0.9993 ± 0.0007 | 0.8615 ± 0.0216 | 0.9991 ± 0.0005 | 0.9997 ± 0.0002 |

| Wheat A | 0.8876 ± 0.0167 | 0.9909 ± 0.0011 | 0.9954 ± 0.0014 | 0.9698 ± 0.0117 | 0.9976 ± 0.0011 | 0.9827 ± 0.0040 |

| Peas | 0.9601 ± 0.0078 | 0.9925 ± 0.0004 | 0.9908 ± 0.0003 | 0.9998 ± 0.0001 | 0.9814 ± 0.0018 | 0.9720 ± 0.0037 |

| Wheat B | 0.7901 ± 0.0147 | 0.9169 ± 0.0029 | 0.9493 ± 0.0041 | 0.9964 ± 0.0013 | 0.9879 ± 0.0025 | 0.9902 ± 0.0010 |

| Beet | 0.9308 ± 0.0201 | 0.4568 ± 0.0146 | 0.9597 ± 0.0023 | 0.9884 ± 0.0024 | 0.8275 ± 0.0033 | 0.9969 ± 0.0002 |

| Potatoes | 0.9124 ± 0.0094 | 0.9997 ± 0.0001 | 0.9775 ± 0.0017 | 0.9738 ± 0.0011 | 0.9168 ± 0.0101 | 0.9891 ± 0.0011 |

| Barley | 0.8654 ± 0.0182 | 0.9891 ± 0.0016 | 0.9692 ± 0.0051 | 0.9989 ± 0.0007 | 0.9983 ± 0.0020 | 0.9997 ± 0.0002 |

| Building | 0.9943 ± 0.0024 | 0.9989 ± 0.0007 | 0.9624 ± 0.0011 | 0.9981 ± 0.0003 | 0.8273 ± 0.0007 | 0.9981 ± 0.0004 |

| Grass | 0.8484 ± 0.0102 | 0.9662 ± 0.0035 | 0.9814 ± 0.0014 | 0.9888 ± 0.0010 | 0.9935 ± 0.0004 | 0.9917 ± 0.0017 |

| OA | 0.9014 ± 0.0111 | 0.9442 ± 0.0052 | 0.9816 ± 0.0016 | 0.9862 ± 0.0022 | 0.9924 ± 0.0017 | 0.9974 ± 0.0012 |

| Kappa | 0.8915 ± 0.0181 | 0.9388 ± 0.0057 | 0.9798 ± 0.0015 | 0.9848 ± 0.0024 | 0.9916 ± 0.0019 | 0.9971 ± 0.0015 |

| Classification Algorithm | OA | Kappa |

|---|---|---|

| KNN | 0.8332 ± 0.0143 | 0.8173 ± 0.0139 |

| Wishart | 0.8680 ± 0.0024 | 0.8571 ± 0.0028 |

| LOR-LBP | 0.9557 ± 0.0039 | 0.9512 ± 0.0041 |

| RF | 0.9974 ± 0.0012 | 0.9971 ± 0.0015 |

| Categories | MBT_pixel | MBT_T3 | RS | MI | BT | MBT |

|---|---|---|---|---|---|---|

| Paddy 1 | 0.9080 ± 0.0075 | 0.9229 ± 0.0021 | 0.9371 ± 0.0020 | 0.9257 ± 0.0025 | 0.9072 ± 0.0031 | 0.8730 ± 0.0023 |

| Paddy 2 | 0.8697 ± 0.0124 | 0.9021 ± 0.0030 | 0.9187 ± 0.0044 | 0.8251 ± 0.0091 | 0.8714 ± 0.0020 | 0.7836 ± 0.0102 |

| Paddy 3 | 0.8670 ± 0.0100 | 0.9567 ± 0.0017 | 0.9534 ± 0.0017 | 0.9617 ± 0.0010 | 0.9835 ± 0.0004 | 0.9731 ± 0.0009 |

| Paddy 4 | 0.5489 ± 0.0089 | 0.7855 ± 0.0041 | 0.7774 ± 0.0059 | 0.7897 ± 0.0028 | 0.8503 ± 0.0011 | 0.8145 ± 0.0012 |

| Paddy 5 | 0.5989 ± 0.0102 | 0.7671 ± 0.0033 | 0.7606 ± 0.0043 | 0.8193 ± 0.0027 | 0.7333 ± 0.0102 | 0.8723 ± 0.0007 |

| OA | 0.7704 ± 0.0060 | 0.8725 ± 0.0041 | 0.8794 ± 0.0052 | 0.8856 ± 0.0043 | 0.8873 ± 0.0043 | 0.9093 ± 0.0012 |

| Kappa | 0.7516 ± 0.0063 | 0.8317 ± 0.0069 | 0.8207 ± 0.0070 | 0.8349 ± 0.0043 | 0.8372 ± 0.0042 | 0.8509 ± 0.0025 |

| Classification Algorithm | OA | Kappa |

|---|---|---|

| KNN | 0.8391 ± 0.0092 | 0.7717 ± 0.0084 |

| Wishart | 0.8492 ± 0.0051 | 0.7890 ± 0.0039 |

| LOR-LBP | 0.8747 ± 0.0022 | 0.8210 ± 0.0020 |

| RF | 0.9093 ± 0.0012 | 0.8509 ± 0.0025 |

| Categories | MBT_pixel | MBT_T3 | RS | MI | BT | MBT |

|---|---|---|---|---|---|---|

| Building | 0.6045 ± 0.0164 | 0.6096 ± 0.0093 | 0.6593 ± 0.0141 | 0.7347 ± 0.0072 | 0.6606 ± 0.0047 | 0.6537 ± 0.0047 |

| Forest | 0.5928 ± 0.0138 | 0.8294 ± 0.0037 | 0.7825 ± 0.0133 | 0.6754 ± 0.0124 | 0.8573 ± 0.0028 | 0.8360 ± 0.0021 |

| Water | 0.9286 ± 0.0019 | 0.9450 ± 0.0014 | 0.9483 ± 0.0020 | 0.9599 ± 0.0010 | 0.9287 ± 0.0011 | 0.9583 ± 0.0023 |

| Soil | 0.2997 ± 0.0022 | 0.1501 ± 0.0077 | 0.1309 ± 0.0083 | 0.1446 ± 0.0049 | 0.1374 ± 0.0106 | 0.2591 ± 0.0104 |

| OA | 0.8226 ± 0.0031 | 0.8486 ± 0.0038 | 0.8179 ± 0.0113 | 0.8382 ± 0.0116 | 0.8462 ± 0.0040 | 0.8623 ± 0.0039 |

| Kappa | 0.6959 ± 0.0064 | 0.7398 ± 0.0054 | 0.6936 ± 0.0154 | 0.7180 ± 0.0205 | 0.7345 ± 0.0073 | 0.7590 ± 0.0068 |

| Classification Algorithm | OA | Kappa |

|---|---|---|

| KNN | 0.8109 ± 0.0149 | 0.6786 ± 0.0112 |

| Wishart | 0.8201 ± 0.0103 | 0.6927 ± 0.0191 |

| LOR-LBP | 0.8469 ± 0.0064 | 0.7351 ± 0.0101 |

| RF | 0.8623 ± 0.0039 | 0.7590 ± 0.0068 |

© 2018 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Liu, W.; Yang, J.; Li, P.; Han, Y.; Zhao, J.; Shi, H. A Novel Object-Based Supervised Classification Method with Active Learning and Random Forest for PolSAR Imagery. Remote Sens. 2018, 10, 1092. https://doi.org/10.3390/rs10071092