Figure 1.

(a) Location of the study site (in black), Catalonia, Spain; (b) Sentinel-1 footprints over Catalonia used in the study; (c) digital elevation model (DEM) from Shuttle Radar Topography Mission (SRTM) data; (d) agricultural areas of Catalonia derived from the geographical information system for agricultural parcels (SIGPAC) data. The hatched area represents the zone ultimately used for classification.

Figure 2.

Precipitation and temperature records from a local meteorological station in Tornabous, in the interior plain of Catalonia, Spain.

Figure 3.

Distribution of the number of agricultural plots per class of crop type.

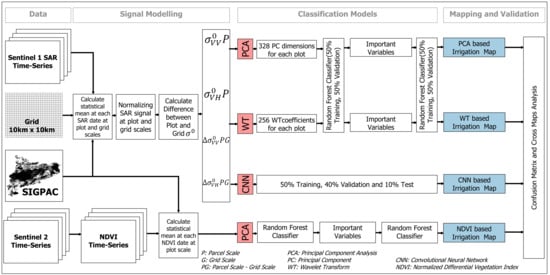

Figure 4.

Overview of the workflow using the random forest (RF) and convolutional neural network (CNN) classifiers.

Figure 5.

Architecture of the one-dimensional (1D) CNN model (CNN1D) used to classify plots as irrigated or non-irrigated from SAR and optical data.
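The caption does not list the layer hyperparameters, so the following is only a generic NumPy sketch of the operations a 1D CNN applies to one plot's time series: a valid 1D convolution (implemented as cross-correlation, as deep-learning frameworks do), ReLU, global max pooling over time, and a sigmoid output for the irrigated class. The function names, the two-channel input (e.g. VV and VH), the kernel size, the number of filters, and the random weights are all hypothetical; this is not the CNN1D of Figure 5.

```python
import numpy as np


def conv1d_valid(x, kernels, bias):
    """Valid 1D cross-correlation.

    x: (time, in_channels); kernels: (k, in_channels, out_channels);
    bias: (out_channels,). Returns an array of shape (time - k + 1, out_channels).
    """
    k = kernels.shape[0]
    t_out = x.shape[0] - k + 1
    out = np.empty((t_out, kernels.shape[2]))
    for t in range(t_out):
        window = x[t:t + k]  # (k, in_channels) slice of the time series
        # Sum over kernel positions and input channels for every output filter
        out[t] = np.tensordot(window, kernels, axes=([0, 1], [0, 1])) + bias
    return out


def relu(z):
    return np.maximum(z, 0.0)


def predict_irrigated_probability(x, params):
    """Hypothetical one-layer CNN1D forward pass returning P(irrigated)."""
    h = relu(conv1d_valid(x, params["kernels"], params["conv_bias"]))
    pooled = h.max(axis=0)  # global max pooling over time -> (out_channels,)
    logit = pooled @ params["dense_w"] + params["dense_b"]
    return 1.0 / (1.0 + np.exp(-logit))  # sigmoid


rng = np.random.default_rng(0)
n_dates, n_channels, n_filters, kernel_size = 82, 2, 8, 5  # hypothetical sizes
params = {
    "kernels": rng.normal(scale=0.1, size=(kernel_size, n_channels, n_filters)),
    "conv_bias": np.zeros(n_filters),
    "dense_w": rng.normal(scale=0.1, size=n_filters),
    "dense_b": 0.0,
}
series = rng.normal(size=(n_dates, n_channels))  # stand-in for one plot's VV/VH series
p = predict_irrigated_probability(series, params)
```

In practice the weights would be learned by back-propagation in a framework such as Keras or PyTorch; the explicit loop is kept only to make the sliding-window computation visible.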

Figure 6.

Temporal evolution of the SAR backscattering coefficient σ° in VV polarization at plot scale (green curve) and at 10 km grid scale (red curve), together with precipitation recorded at a local meteorological station, for (a) the non-irrigated plot and (b) the irrigated plot.
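Figure 6 contrasts σ° at plot scale with σ° at a 10 km grid scale. The captions do not state how pixels were aggregated, so this sketch simply assumes a common convention: average the pixel values in linear power units inside the plot (or the grid cell) and convert the mean back to decibels. The function names and toy pixel values are hypothetical.

```python
import numpy as np


def db_to_linear(sigma0_db):
    return 10.0 ** (np.asarray(sigma0_db, dtype=float) / 10.0)


def linear_to_db(sigma0_linear):
    return 10.0 * np.log10(sigma0_linear)


def mean_sigma0_db(pixels_db, axis=-1):
    """Average sigma0 over pixels in linear units and return the mean in dB.

    pixels_db: array of shape (..., n_pixels), e.g. (n_dates, n_pixels) for a time series.
    """
    return linear_to_db(np.mean(db_to_linear(pixels_db), axis=axis))


# Toy example: 3 dates; pixels of one plot and of its surrounding grid cell (values in dB)
plot_pixels = np.array([[-9.0, -10.0, -11.0],
                        [-7.5, -8.0, -8.5],
                        [-12.0, -12.5, -13.0]])
grid_pixels = np.array([[-11.0, -12.0, -13.0, -10.0],
                        [-11.5, -12.0, -12.5, -11.0],
                        [-12.0, -12.5, -13.0, -12.5]])
plot_series = mean_sigma0_db(plot_pixels)  # plot-scale sigma0 per date
grid_series = mean_sigma0_db(grid_pixels)  # grid-scale sigma0 per date
```

Averaging directly in dB would instead give the geometric mean of the power, which is never larger than the linear-domain average.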

Figure 7.

Scatter plots of a random sample of 2000 irrigated and 2000 non-irrigated plots using different combinations of important principal component (PC) variables. Irrigated plots are shown in blue and non-irrigated plots in red. (a) PC1 of with PC1 of , (b) PC16 of with PC1 of , (c) PC1 of with PC16 of , (d) PC1 of with PC2 of , (e) PC16 of with PC2 of and (f) PC5 of with PC2 of . = − and = − . “P” denotes plot scale and “G” denotes grid scale.
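As a minimal sketch of how PC variables such as those in Figure 7 can be derived, assuming each row of the input matrix is one plot and each column one acquisition date, PCA can be computed from a singular value decomposition of the column-centred matrix. The matrix size and random data are placeholders, and `pca_scores` is a hypothetical helper, not the authors' code.

```python
import numpy as np


def pca_scores(X, n_components):
    """Project the rows of X (n_plots, n_dates) onto the leading principal components.

    Returns (scores, components, explained_variance_ratio).
    """
    X = np.asarray(X, dtype=float)
    Xc = X - X.mean(axis=0)  # centre each date (column)
    U, S, Vt = np.linalg.svd(Xc, full_matrices=False)
    scores = U[:, :n_components] * S[:n_components]  # equals Xc @ Vt[:n_components].T
    var = S ** 2 / (X.shape[0] - 1)  # variance carried by each component
    return scores, Vt[:n_components], (var / var.sum())[:n_components]


rng = np.random.default_rng(42)
X = rng.normal(size=(500, 82))  # placeholder: 500 plots x 82 dates
scores, components, ratio = pca_scores(X, n_components=16)
pc1, pc16 = scores[:, 0], scores[:, 15]  # e.g. "PC1" and "PC16" style variables
```

Each PC variable is a linear combination of the dates: PC1 captures the dominant temporal pattern across plots, and higher-order PCs capture progressively smaller variations.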

Figure 8.

Reconstruction of the SAR signal in VV polarization at plot scale from linear combinations of ‘Haar’ wavelet coefficients using (a) 2, (b) 4, (c) 8, (d) 16, (e) 32, and (f) 64 coefficients.
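The reconstructions in Figure 8 keep only the first coefficients of a Haar decomposition. The sketch below implements an orthonormal Haar transform in NumPy for a series whose length is a power of two (64 samples is assumed here; how the Sentinel-1 dates were brought to such a length is not stated in these captions) and reconstructs the signal from the k coarsest coefficients. Keeping k = 2^j coefficients yields a piecewise-constant curve of 2^j block averages, and keeping all 64 recovers the series exactly. The function names are hypothetical; PyWavelets offers equivalent multilevel transforms.

```python
import numpy as np


def haar_dwt(signal):
    """Full orthonormal Haar decomposition of a series whose length is a power of two.

    Coefficients are ordered coarse to fine: [overall approximation,
    1 coarsest detail, 2 details, 4 details, ..., n/2 finest details].
    """
    x = np.asarray(signal, dtype=float)
    n = x.size
    if n == 0 or n & (n - 1):
        raise ValueError("series length must be a power of two")
    details = []
    while x.size > 1:
        even, odd = x[0::2], x[1::2]
        details.append((even - odd) / np.sqrt(2))  # finest level is appended first
        x = (even + odd) / np.sqrt(2)  # approximation passed to the next level
    return np.concatenate([x] + details[::-1])


def haar_idwt(coeffs):
    """Inverse of haar_dwt."""
    c = np.asarray(coeffs, dtype=float)
    x = c[:1]
    pos = 1
    while pos < c.size:
        detail = c[pos:pos + x.size]
        pos += x.size
        out = np.empty(2 * x.size)
        out[0::2] = (x + detail) / np.sqrt(2)
        out[1::2] = (x - detail) / np.sqrt(2)
        x = out
    return x


def reconstruct_from_first_k(signal, k):
    """Keep the k coarsest Haar coefficients, zero the rest, and invert."""
    coeffs = haar_dwt(signal)
    kept = np.zeros_like(coeffs)
    kept[:k] = coeffs[:k]
    return haar_idwt(kept)


rng = np.random.default_rng(1)
vv_db = -12.0 + np.cumsum(rng.normal(scale=0.5, size=64))  # toy 64-sample VV series (dB)
reconstructions = {k: reconstruct_from_first_k(vv_db, k) for k in (2, 4, 8, 16, 32, 64)}
```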

Figure 9.

Scatter plots of a random sample of 2000 irrigated and 2000 non-irrigated plots using different combinations of important wavelet transform (WT) coefficients. Irrigated plots are shown in blue and non-irrigated plots in red. (a) WC61 of with WC62 of , (b) WC62 of with WC53 of , (c) WC62 of with WC53 of . = − and = − . “P” denotes plot scale, “G” denotes grid scale, and WC denotes wavelet coefficient.

Figure 10.

Irrigation maps obtained with (a) the WT-RF model, (b) the PC-RF model, and (c) the CNN model. Irrigated areas are shown in blue and non-irrigated areas in red. A zoomed-in view of the yellow box in each map is provided to better show the differences between the classification results.

Figure 11.

Comparison of accuracy indices between the RF and CNN classifications in three scenarios: (a) using Sentinel-1 (S1) SAR data, (b) using Sentinel-2 (S2) optical data, and (c) using combined S1 SAR and S2 optical data.

Table 1.

Summary of the remote sensing data used.

| Data | Type | Satellite | Spatial Resolution | Number of Images | Temporal Frequency Used | Calibration Performed | Bands/Polarization |
|---|---|---|---|---|---|---|---|
| Satellite images | Synthetic aperture radar (SAR) | Sentinel-1 | 10 m | 82 | 6 days | Geometric/radiometric | VV, VH |
| | Optical | Sentinel-2 | 10 m | 17 | One month | Ortho-rectified reflectance | Near infrared, red |
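The Sentinel-2 near-infrared and red bands listed above are the inputs of the NDVI, defined as (NIR − Red)/(NIR + Red); at 10 m these correspond to Sentinel-2 bands B8 and B4. The sketch below computes NDVI per pixel and averages it per plot. The plot-averaging step, the function names, and the toy reflectances are assumptions for illustration only.

```python
import numpy as np


def ndvi(nir, red):
    """Per-pixel NDVI = (NIR - Red) / (NIR + Red); NaN where the denominator is 0."""
    nir = np.asarray(nir, dtype=float)
    red = np.asarray(red, dtype=float)
    denom = nir + red
    with np.errstate(divide="ignore", invalid="ignore"):
        values = (nir - red) / denom
    return np.where(denom != 0, values, np.nan)


def plot_mean_ndvi(nir_pixels, red_pixels):
    """Hypothetical plot-level value: mean NDVI over the plot's pixels, ignoring NaN."""
    return float(np.nanmean(ndvi(nir_pixels, red_pixels)))


# Toy surface reflectances (B8 = NIR, B4 = red) for the pixels of one plot on one date
b8 = np.array([0.42, 0.45, 0.40, 0.38])
b4 = np.array([0.06, 0.05, 0.07, 0.08])
plot_value = plot_mean_ndvi(b8, b4)
```

Repeating this for each of the 17 monthly images gives one NDVI time series per plot.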

Table 2.

Accuracy indices calculated for each classifier. TP is the number of irrigated plots correctly classified as irrigated, TN is the number of non-irrigated plots correctly classified as non-irrigated, FP is the number of non-irrigated plots incorrectly classified as irrigated, FN is the number of irrigated plots incorrectly classified as non-irrigated, N is the total number of plots, and EA is the expected accuracy.

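| Index | Equation |
|---|---|
| OA | $\mathrm{OA} = \frac{TP + TN}{N}$ |
| EA | $\mathrm{EA} = \frac{(TP + FN)(TP + FP) + (FP + TN)(FN + TN)}{N^2}$ |
| Kappa | $\kappa = \frac{\mathrm{OA} - \mathrm{EA}}{1 - \mathrm{EA}}$ |
| F1 score | $F_1 = \frac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$ |
| Precision | $\mathrm{Precision} = \frac{TP}{TP + FP}$ |
| Recall | $\mathrm{Recall} = \frac{TP}{TP + FN}$ |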
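The indices in Table 2 follow directly from the confusion-matrix counts, with irrigated as the positive class. A minimal Python sketch, in which the function names and the example counts are hypothetical:

```python
def f1_score(precision, recall):
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)


def accuracy_indices(tp, tn, fp, fn):
    """Accuracy indices of Table 2, with 'irrigated' as the positive class."""
    n = tp + tn + fp + fn
    oa = (tp + tn) / n
    # Expected (chance) agreement from the row and column totals of the confusion matrix
    ea = ((tp + fn) * (tp + fp) + (fp + tn) * (fn + tn)) / n ** 2
    kappa = (oa - ea) / (1 - ea)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return {"OA": oa, "EA": ea, "Kappa": kappa, "Precision": precision,
            "Recall": recall, "F1": f1_score(precision, recall)}


example = accuracy_indices(tp=40, tn=50, fp=5, fn=5)
```

For instance, the irrigated-class precision and recall of the CNN on combined SAR and optical data (0.94 and 0.90, Table 9) give `f1_score(0.94, 0.90)` of about 0.92, matching the reported F-score.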

Table 3.

The values of the overall accuracy (OA), Kappa, F-score, precision, and recall obtained from the RF classifier using all 328 SAR PC variables and the 15 most important SAR PC variables.

| Method | Class | Precision | Recall | F-Score |
|---|---|---|---|---|
| PC-RF (328 variables) | Irrigated | 0.95 | 0.79 | 0.86 |
| | Non-irrigated | 0.90 | 0.98 | 0.94 |
| | OA | 91.2% | | |
| | Kappa | 0.79 | | |
| | F-score | 0.91 | | |
| PC-RF (15 important variables) | Irrigated | 0.92 | 0.81 | 0.86 |
| | Non-irrigated | 0.90 | 0.96 | 0.93 |
| | OA | 90.7% | | |
| | Kappa | 0.79 | | |
| | F-score | 0.91 | | |
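As a consistency check on the table above, the per-class F-scores follow from the listed precision and recall through their harmonic mean, for example for the 328-variable PC-RF model:

$$
\begin{aligned}
F_{\text{irrigated}} &= \frac{2 \times 0.95 \times 0.79}{0.95 + 0.79} = \frac{1.501}{1.74} \approx 0.86,\\
F_{\text{non-irrigated}} &= \frac{2 \times 0.90 \times 0.98}{0.90 + 0.98} = \frac{1.764}{1.88} \approx 0.94.
\end{aligned}
$$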

Table 4.

The values of the overall accuracy (OA), Kappa, F-score, precision, and recall obtained from the RF classifier using all 256 wavelet coefficients and the 16 most important wavelet coefficients.

| Method | Class | Precision | Recall | F-Score |
|---|---|---|---|---|
| WT-RF (256 variables) | Irrigated | 0.94 | 0.81 | 0.87 |
| | Non-irrigated | 0.90 | 0.97 | 0.94 |
| | OA | 91.4% | | |
| | Kappa | 0.81 | | |
| | F-score | 0.91 | | |
| WT-RF (18 important variables) | Irrigated | 0.89 | 0.78 | 0.83 |
| | Non-irrigated | 0.89 | 0.95 | 0.92 |
| | OA | 89.1% | | |
| | Kappa | 0.75 | | |
| | F-score | 0.89 | | |

Table 5.

The values of the overall accuracy (OA), Kappa, F-score, precision, and recall obtained from the RF classifier using all 17 normalized difference vegetation index (NDVI) PC variables and the seven most important NDVI PC variables.

| Method | Class | Precision | Recall | F-Score |
|---|---|---|---|---|
| NDVI-RF (17 variables) | Irrigated | 0.94 | 0.78 | 0.85 |
| | Non-irrigated | 0.89 | 0.97 | 0.93 |
| | OA | 90.5% | | |
| | Kappa | 0.78 | | |
| | F-score | 0.91 | | |
| NDVI-RF (7 important variables) | Irrigated | 0.92 | 0.76 | 0.84 |
| | Non-irrigated | 0.88 | 0.96 | 0.92 |
| | OA | 89.5% | | |
| | Kappa | 0.76 | | |
| | F-score | 0.88 | | |

Table 6.

The values of the overall accuracy (OA), Kappa, F-score, precision, and recall obtained from the RF classifier using the 15 most important SAR PC variables combined with the seven most important NDVI PC variables.

| Method | Class | Precision | Recall | F-Score |
|---|---|---|---|---|
| 15-variable SAR PC-RF + 7-variable NDVI-RF | Irrigated | 0.95 | 0.82 | 0.88 |
| | Non-irrigated | 0.91 | 0.98 | 0.94 |
| | OA | 92.3% | | |
| | Kappa | 0.82 | | |
| | F-score | 0.91 | | |

Table 7.

The values of the overall accuracy (OA), Kappa, F-score, precision, and recall obtained with the CNN applied to SAR data.

| Method | Class | Precision | Recall | F-Score |
|---|---|---|---|---|
| CNN on SAR data | Irrigated | 0.93 | 0.89 | 0.91 |
| | Non-irrigated | 0.95 | 0.96 | 0.96 |
| | OA | 94.1% ± 0.06 | | |
| | Kappa | 0.87 ± 0.0014 | | |
| | F-score | 0.94 ± 0.0006 | | |

Table 8.

The values of the overall accuracy (OA), Kappa, F-score, precision, and recall obtained with the CNN applied to NDVI data.

| Method | Class | Precision | Recall | F-Score |
|---|---|---|---|---|
| CNN on optical data | Irrigated | 0.93 | 0.81 | 0.87 |
| | Non-irrigated | 0.91 | 0.97 | 0.94 |
| | OA | 91.6% ± 0.06 | | |
| | Kappa | 0.81 ± 0.0016 | | |
| | F-score | 0.91 ± 0.0006 | | |

Table 9.

The values of the overall accuracy (OA), Kappa, F-score, precision, and recall obtained with the CNN applied to combined SAR and optical data.

| Method | Class | Precision | Recall | F-Score |
|---|---|---|---|---|
| CNN on combined SAR and optical data | Irrigated | 0.94 | 0.90 | 0.92 |
| | Non-irrigated | 0.95 | 0.97 | 0.96 |
| | OA | 94.5% ± 0.05 | | |
| | Kappa | 0.88 ± 0.0016 | | |
| | F-score | 0.95 ± 0.0005 | | |