Enhancement of Component Images of Multispectral Data by Denoising with Reference

Abstract

1. Introduction

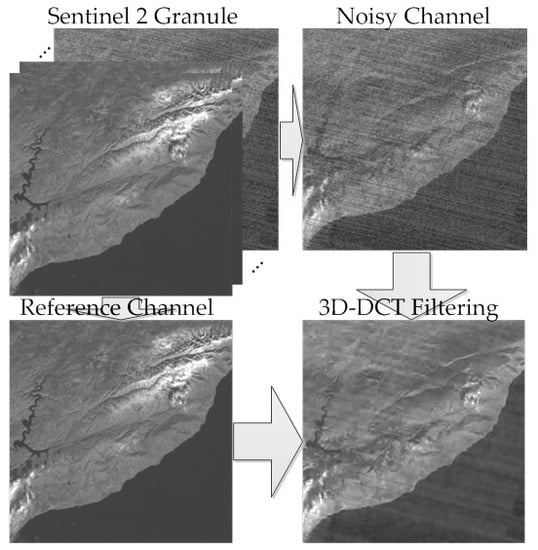

2. Materials and Methods

2.1. Image/Noise Model and Basic Principles of Image Denoising with Reference

2.2. Performance Criteria

3. Results

3.1. Analysis of Simulation Data

3.2. Application to Real Life Images

4. Conclusions

Author Contributions

Funding

Conflicts of Interest


Granule 1

| Channel Name | 01 | 02 | 03 | 04 | 05 | 06 | 07 | 08 | 8A | 09 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | 218.0 | 75.7 | 26.4 | 53.3 | 27.5 | 42.9 | 71.7 | 92.8 | 103.1 | 38.2 | 9.8 | 31.7 | 54.7 |
| | 0.042 | 0.024 | 0.015 | 0.027 | 0.013 | 0.019 | 0.030 | 0.028 | 0.035 | 0.052 | 0.010 | 0.011 | 0.022 |
| | 291.5 | 109.5 | 43.5 | 82.4 | 42.0 | 68.1 | 114.9 | 132.4 | 156.3 | 65.6 | 10.0 | 45.2 | 72.3 |
| | 45.7 | 51.6 | 56.0 | 54.7 | 57.9 | 56.2 | 54.1 | 53.1 | 52.9 | 46.9 | 11.6 | 54.7 | 50.3 |
| R#10 | 0.18 | 0.21 | 0.29 | 0.36 | 0.42 | 0.49 | 0.52 | 0.53 | 0.56 | 0.570 | 1.00 | 0.782 | 0.772 |

Granule 2

| Channel Name | 01 | 02 | 03 | 04 | 05 | 06 | 07 | 08 | 8A | 09 | 10 | 11 | 12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| | 110.9 | 66.45 | 1.87 | 37.66 | 9.47 | 10.15 | 57.14 | 53.42 | 35.65 | 42.51 | 7.68 | 15.27 | 36.98 |
| | 0.003 | 0.026 | 0.023 | 0.030 | 0.017 | 0.048 | 0.025 | 0.040 | 0.059 | 0.014 | 0.024 | 0.017 | 0.044 |
| | 114.7 | 96.46 | 22.61 | 61.05 | 25.21 | 78.23 | 97.37 | 114.5 | 139.1 | 49.50 | 7.77 | 44.77 | 94.28 |
| | 29.36 | 32.72 | 41.55 | 41.31 | 46.90 | 51.58 | 52.40 | 51.38 | 51.47 | 44.11 | 11.91 | 52.48 | 47.89 |
| R#10 | 0.366 | 0.384 | 0.412 | 0.467 | 0.490 | 0.243 | 0.229 | 0.243 | 0.261 | 0.308 | 1.00 | 0.651 | 0.618 |
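In both granules the R#10 row equals 1.00 exactly at channel 10, which suggests it holds the correlation coefficient between each channel and channel 10. Assuming it is the ordinary Pearson correlation coefficient computed over co-registered pixels (the tables do not state the estimator), such a row could be produced by a sketch like the one below. `pearson_r` and `correlation_row` are hypothetical helper names, and the bands are assumed to be already resampled to a common grid and passed as flat pixel lists.

```python
import math
from typing import Dict, Sequence


def pearson_r(band: Sequence[float], reference: Sequence[float]) -> float:
    """Pearson correlation coefficient between two co-registered bands.

    Both bands are flat pixel sequences of equal length (assumption: they
    have already been resampled to a common grid).
    """
    n = len(band)
    if n != len(reference) or n < 2:
        raise ValueError("bands must have the same length (at least 2 pixels)")
    mean_b = sum(band) / n
    mean_r = sum(reference) / n
    # Unnormalized covariance and variances; the 1/n factors cancel in the ratio.
    cov = sum((b - mean_b) * (r - mean_r) for b, r in zip(band, reference))
    var_b = sum((b - mean_b) ** 2 for b in band)
    var_r = sum((r - mean_r) ** 2 for r in reference)
    if var_b == 0 or var_r == 0:
        raise ValueError("correlation is undefined for a constant band")
    return cov / math.sqrt(var_b * var_r)


def correlation_row(bands: Dict[str, Sequence[float]], ref_name: str) -> Dict[str, float]:
    """Hypothetical helper: correlation of every band with the band named
    ref_name (the reference band correlates with itself at 1.0)."""
    ref = bands[ref_name]
    return {name: pearson_r(pixels, ref) for name, pixels in bands.items()}


# Toy 4-pixel "bands" keyed by channel name, for illustration only.
toy = {
    "09": [1.0, 2.0, 3.0, 4.0],
    "10": [2.0, 4.0, 6.0, 8.0],
    "11": [1.0, 3.0, 2.0, 4.0],
}
print(correlation_row(toy, "10"))  # {'09': 1.0, '10': 1.0, '11': 0.8}
```

Read against the real data, the channels most correlated with channel 10 are 11 (0.782) and 12 (0.772) in Granule 1, and 11 (0.651) and 12 (0.618) in Granule 2.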

| Variants | FR01 PSNR | FR01 PHVSM | FR02 PSNR | FR02 PHVSM | FR03 PSNR | FR03 PHVSM | FR04 PSNR | FR04 PHVSM | RS1 PSNR | RS1 PHVSM | RS2 PSNR | RS2 PHVSM |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| σ² = 10 | | | | | | | | | | | | |
| Input | 38.14 | 45.66 | 38.15 | 45.65 | 38.12 | 45.99 | 38.13 | 44.89 | 38.13 | 42.33 | 38.13 | 41.96 |
| 2D | 39.20 | 46.15 | 39.28 | 46.44 | 38.87 | 46.20 | 39.18 | 46.01 | 42.16 | 43.70 | 42.15 | 42.78 |
| BM3D | 39.69 | 46.64 | 39.72 | 46.76 | 39.26 | 46.45 | 39.59 | 46.40 | 42.68 | 44.41 | 42.69 | 43.68 |
| 3DC1 | 42.06 | 48.93 | 42.06 | 49.34 | 42.22 | 49.73 | 42.23 | 48.69 | 45.51 | 48.13 | 45.59 | 47.99 |
| 3DC2 | 44.13 | 51.79 | 44.24 | 51.85 | 43.98 | 52.12 | 44.27 | 51.37 | 45.95 | 49.10 | 45.87 | 48.53 |
| σ² = 25 | | | | | | | | | | | | |
| Input | 34.15 | 40.28 | 34.16 | 40.27 | 34.16 | 40.43 | 34.14 | 39.72 | 34.13 | 37.52 | 34.15 | 37.25 |
| 2D | 35.95 | 41.15 | 35.94 | 41.36 | 35.52 | 40.86 | 35.76 | 40.89 | 39.68 | 39.57 | 39.82 | 38.84 |
| BM3D | 36.60 | 41.65 | 36.59 | 42.00 | 36.06 | 41.44 | 36.27 | 41.47 | 40.17 | 40.31 | 40.26 | 39.60 |
| 3DC1 | 39.19 | 44.31 | 39.14 | 44.69 | 39.19 | 44.89 | 39.31 | 44.29 | 42.79 | 43.93 | 42.85 | 43.66 |
| 3DC2 | 40.53 | 46.83 | 40.61 | 46.82 | 40.29 | 46.79 | 40.60 | 46.54 | 43.01 | 44.44 | 43.00 | 43.84 |
| σ² = 100 | | | | | | | | | | | | |
| Input | 28.15 | 32.55 | 28.14 | 32.49 | 28.12 | 32.51 | 28.14 | 32.17 | 28.13 | 30.71 | 28.12 | 30.54 |
| 2D | 31.50 | 34.08 | 31.37 | 34.28 | 31.04 | 33.62 | 31.12 | 33.72 | 36.31 | 34.15 | 36.88 | 34.12 |
| BM3D | 32.35 | 34.78 | 32.21 | 35.08 | 31.68 | 34.25 | 31.71 | 34.29 | 36.78 | 34.81 | 37.28 | 34.73 |
| 3DC1 | 34.99 | 38.10 | 34.78 | 38.20 | 34.67 | 38.26 | 34.74 | 38.03 | 38.98 | 38.10 | 39.05 | 37.54 |
| 3DC2 | 35.55 | 39.47 | 35.52 | 39.61 | 35.15 | 39.23 | 35.33 | 39.20 | 39.01 | 38.17 | 39.11 | 37.50 |
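The Input rows above agree, to within about 0.02 dB, with the standard definition PSNR = 10 log10(255² / MSE) when the MSE equals the noise variance σ², i.e., assuming 8-bit images (peak value 255) corrupted by zero-mean additive noise of variance σ². The sketch below, with hypothetical function names `psnr_from_mse` and `psnr`, reproduces that check. It covers PSNR only; PSNR-HVS-M (PHVSM) additionally weights errors in the DCT domain and accounts for contrast masking, which is not reproduced here.

```python
import math
from typing import Sequence


def psnr_from_mse(mse: float, peak: float = 255.0) -> float:
    """PSNR in dB for a given mean squared error; peak=255 assumes 8-bit data."""
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(peak ** 2 / mse)


def psnr(reference: Sequence[float], test: Sequence[float], peak: float = 255.0) -> float:
    """PSNR in dB between a noise-free reference image and a test image,
    both given as flat pixel sequences of equal length."""
    if len(reference) != len(test) or not reference:
        raise ValueError("images must be non-empty and of equal size")
    mse = sum((a - b) ** 2 for a, b in zip(reference, test)) / len(reference)
    return psnr_from_mse(mse, peak)


# For zero-mean additive noise of variance sigma2, the expected MSE of the
# noisy input equals sigma2, so the expected input PSNR follows directly.
for sigma2 in (10, 25, 100):
    print(sigma2, round(psnr_from_mse(sigma2), 2))
# 10 38.13
# 25 34.15
# 100 28.13
```

Because PSNR is logarithmic in MSE, a PSNR gain maps to an MSE ratio: for FR01 at σ² = 10, 3DC2 raises PSNR from 38.14 dB to 44.13 dB, a 5.99 dB gain, which corresponds to an MSE reduced by a factor of about 10^0.599 ≈ 4.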

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Abramov, S.; Uss, M.; Lukin, V.; Vozel, B.; Chehdi, K.; Egiazarian, K. Enhancement of Component Images of Multispectral Data by Denoising with Reference. Remote Sens. 2019, 11, 611. https://doi.org/10.3390/rs11060611
