A New Remote Sensing Images and Point-of-Interest Fused (RPF) Model for Sensing Urban Functional Regions

Abstract

1. Introduction

2. Data Source

2.1. Functional Classification

2.2. Remote Sensing Images

2.3. Point-of-Interest

3. Methods

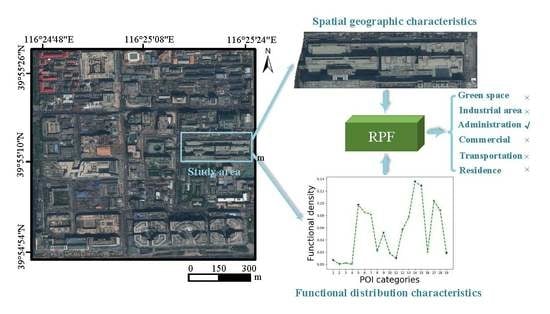

3.1. The Proposed Framework

3.2. Urban Functional Regions Classification Based on Remote Sensing Images

3.3. Urban Functional Regions Classification Based on POI

3.4. Urban Functional Regions Classification by Integrating Remote Sensing Images and POI

4. Results

4.1. Experiments and Settings

4.1.1. Experimental Data Description

4.1.2. Settings

4.1.3. Experiments

4.2. Classification Results Based on Single Remote Sensing Images

4.3. Classification Results Based on Single POI Data

4.3.1. Statistical Analysis of POI Data for Different Urban Functional Regions

4.3.2. Sensing Results Based on POI Data

4.4. Classification Results Based on Data Fused

4.5. Examples of Sensing Urban Functional Regions

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Cohen, B. Urbanization in developing countries: Current trends, future projections, and key challenges for sustainability. Technol. Soc. 2006, 28, 63–80.

- Plimmer, F.; Olawore, A. Land Administration for Sustainable Development. Prop. Manag. 2009, 29, 324.

- Jie, Y.; Yin, Z.; Zhong, H.; Xu, S.; Hu, X.; Wang, J.; Wu, J. Monitoring urban expansion and land use/land cover changes of Shanghai metropolitan area during the transitional economy (1979–2009) in China. Environ. Monit. Assess. 2011, 177, 609–621.

- Liu, X.; Ma, L.; Li, X.; Ai, B.; Li, S.; He, Z. Simulating urban growth by integrating landscape expansion index (LEI) and cellular automata. Int. J. Geogr. Inf. Sci. 2014, 28, 148–163.

- Regan, C. Real options analysis for land use management: Methods, application, and implications for policy. J. Environ. Manag. 2015, 161, 144–152.

- Yao, Y.; Xia, L.; Liu, X.; Liu, P.; Ke, M. Sensing spatial distribution of urban land use by integrating points-of-interest and Google Word2Vec model. Int. J. Geogr. Inf. Sci. 2016, 31, 1–24.

- Yuan, J.; Zheng, Y.; Xie, X. Discovering regions of different functions in a city using human mobility and POIs. In Proceedings of the 18th ACM SIGKDD international conference on Knowledge Discovery and Data Mining, Beijing, China, 12–16 August 2012; pp. 186–194.

- Li, M.; Shen, Z.; Hao, X. Revealing the relationship between spatio-temporal distribution of population and urban function with social media data. Geojournal 2016, 81, 1–17.

- Huang, W.; Fan, H.; Qiu, Y.; Cheng, Z.; Xu, P.; Qian, Y. Causation mechanism analysis for haze pollution related to vehicle emission in Guangzhou, China by employing the fault tree approach. Chemosphere 2016, 151, 9–16.

- Tonne, C.; Halonen, J.I.; Beevers, S.D.; Dajnak, D.; Anderson, H.R. Long-term traffic air and noise pollution in relation to mortality and hospital readmission among myocardial infarction survivors. Int. J. Hyg. Environ. Health 2015, 219, 72–78.

- Richter, M.; Weiland, U. Monitoring Urban Land Use Changes with Remote Sensing Techniques; Wiley-Blackwell: Oxford, UK, 2011.

- Herold, M.; Couclelis, H.; Clarke, K.C. The role of spatial metrics in the analysis and modeling of urban land use change. Comput. Environ. Urban Syst. 2005, 29, 369–399.

- Bratasanu, D.; Nedelcu, I.; Datcu, M. Bridging the Semantic Gap for Satellite Image Annotation and Automatic Mapping Applications. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2011, 4, 193–204.

- Zhang, X.; Du, S. A Linear Dirichlet Mixture Model for decomposing scenes: Application to analyzing urban functional zonings. Remote Sens. Environ. 2015, 169, 37–49.

- Kaliraj, S.; Chandrasekar, N.; Ramachandran, K.; Srinivas, Y.; Saravanan, S. Coastal landuse and land cover change and transformations of Kanyakumari coast, India using remote sensing and GIS. Egypt. J. Remote Sens. Space Sci. 2017, 20, 169–185.

- Li, W.; Liu, H.; Wang, Y.; Li, Z.; Jia, Y.; Gui, G. Deep Learning-Based Classification Methods for Remote Sensing Images in Urban Built-up Areas. IEEE Access 2019, 7, 36274–36284.

- Pei, T.; Sobolevsky, S.; Ratti, C.; Shaw, S.L.; Li, T.; Zhou, C. A new insight into land use classification based on aggregated mobile phone data. Int. J. Geogr. Inf. Sci. 2014, 28, 1988–2007.

- Coulter, L.L.; Stow, D.A.; Tsai, Y.H.; Ibanez, N.; Shih, H.c.; Kerr, A.; Benza, M.; Weeks, J.R.; Mensah, F. Classification and assessment of land cover and land use change in southern Ghana using dense stacks of Landsat 7 ETM+ imagery. Remote Sens. Environ. 2016, 184, 396–409.

- Gómez, C.; White, J.C.; Wulder, M.A. Optical remotely sensed time series data for land cover classification: A review. ISPRS J. Photogramm. Remote Sens. 2016, 116, 55–72.

- Pagliarella, M.C.; Sallustio, L.; Capobianco, G.; Conte, E.; Corona, P.; Fattorini, L.; Marchetti, M. From one- to two-phase sampling to reduce costs of remote sensing-based estimation of land-cover and land-use proportions and their changes. Remote Sens. Environ. 2016, 184, 410–417.

- Peng, F.; Weng, Q. A time series analysis of urbanization induced land use and land cover change and its impact on land surface temperature with Landsat imagery. Remote Sens. Environ. 2016, 175, 205–214.

- Comber, A.J.; Brunsdon, C.; Farmer, C. Community detection in spatial networks: Inferring land use from a planar graph of land cover objects. Int. J. Appl. Earth Obs. Geoinf. 2012, 18, 274–282.

- Li, M.; Stein, A.; Bijker, W.; Zhan, Q. Urban land use extraction from Very High Resolution remote sensing imagery using a Bayesian network. ISPRS J. Photogramm. Remote Sens. 2016, 122, 192–205.

- Pacifici, F.; Chini, M.; Emery, W.J. A neural network approach using multi-scale textural metrics from very high-resolution panchromatic imagery for urban land-use classification. Remote Sens. Environ. 2009, 113, 1276–1292.

- Voltersen, M.; Berger, C.; Hese, S.; Schmullius, C. Object-based land cover mapping and comprehensive feature calculation for an automated derivation of urban structure types at block level. Remote Sens. Environ. 2014, 154, 192–201.

- Liu, Y.; Liu, X.; Gao, S.; Gong, L.; Kang, C.; Zhi, Y.; Chi, G.; Shi, L. Social Sensing: A New Approach to Understanding Our Socioeconomic Environments. Ann. Assoc. Am. Geogr. 2015, 105, 512–530.

- Yang, H.; Wolfson, O.; Zheng, Y.; Capra, L. Urban Computing: Concepts, Methodologies, and Applications. ACM Trans. Intell. Syst. Technol. 2014, 5, 38.

- Hu, Y.; Han, Y. Identification of Urban Functional Areas Based on POI Data: A Case Study of the Guangzhou Economic and Technological Development Zone. Sustainability 2019, 11, 1385.

- Chi, M.; Sun, Z.; Qin, Y.; Shen, J.; Benediktsson, J.A. A Novel Methodology to Label Urban Remote Sensing Images Based on Location-Based Social Media Photos. Proc. IEEE 2017, 105, 1926–1936.

- Gao, S.; Janowicz, K.; Couclelis, H. Extracting urban functional regions from points of interest and human activities on location-based social networks. Trans. GIS 2017, 21, 446–467.

- Hobel, H.; Abdalla, A.; Fogliaroni, P.; Frank, A.U. A Semantic Region Growing Algorithm: Extraction of Urban Settings. In AGILE 2015: Geographic Information Science as an Enabler of Smarter Cities and Communities; Fernando, B., Maribel, Y.S., Eds.; Springer International Publishing: Cham, Switzerland, 2015.

- Song, J.; Lin, T.; Li, X.; Prishchepov, A.V. Mapping Urban Functional Zones by Integrating Very High Spatial Resolution Remote Sensing Imagery and Points of Interest: A Case Study of Xiamen, China. Remote Sens. 2018, 10, 1737.

- Liu, X.; He, J.; Yao, Y.; Zhang, J.; Ye, H. Classifying urban land use by integrating remote sensing and social media data. Int. J. Geogr. Inf. Sci. 2017, 31, 1675–1696.

- Hu, T.; Yang, J.; Li, X.; Gong, P. Mapping Urban Land Use by Using Landsat Images and Open Social Data. Remote Sens. 2016, 8, 151.

- Zhang, Y.; Li, Q.; Huang, H.; Wu, W.; Du, X.; Wang, H. The Combined Use of Remote Sensing and Social Sensing Data in Fine-Grained Urban Land Use Mapping: A Case Study in Beijing, China. Remote Sens. 2017, 9, 865.

- Benza, M.; Weeks, J.R.; Stow, D.A.; López-Carr, D.; Clarke, K.C. A pattern-based definition of urban context using remote sensing and GIS. Remote Sens. Environ. 2016, 183, 250–264.

- Jia, Y.; Ge, Y.; Ling, F.; Guo, X.; Wang, J.; Wang, L.; Cheng, Y.; Li, X. Urban land use mapping by combining remote sensing imagery and mobile phone positioning data. Remote Sens. 2018, 10, 446.

- Haiyan, X.; Wangfeng, Z.; Huailu, C.; Shouqian, C. Comparative Analysis of New and Old “Code for Classification of Urban Land Use and Planning Standards of Development Land”. Modern Urban Res. 2015, 11, 69–75.

- Krylov, V.A.; Martino, M.D.; Moser, G.; Serpico, S.B. Large urban zone classification on SPOT-5 imagery with convolutional neural networks. In Proceedings of the IGARSS 2016—2016 IEEE International Geoscience and Remote Sensing Symposium, Beijing, China, 10–15 July 2016.

- Kampffmeyer, M.; Salberg, A.B.; Jenssen, R. Urban Land Cover Classification with Missing Data Modalities Using Deep Convolutional Neural Networks. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2018, 11, 1758–1768.

- Maggiori, E.; Tarabalka, Y.; Charpiat, G.; Alliez, P. Fully convolutional neural networks for remote sensing image classification. In Proceedings of the IGARSS 2016—2016 IEEE International Geoscience and Remote Sensing Symposium, Beijing, China, 10–15 July 2016.

- Yi, S.; Wang, X.; Tang, X. Deep Convolutional Network Cascade for Facial Point Detection. In Proceedings of the 26th IEEE Conference on Computer Vision and Pattern Recognition, Portland, OR, USA, 23–28 June 2013.

- Hinton, G.; Salakhutdinov, R. Reducing the Dimensionality of Data with Neural Networks. Science 2006, 313, 504–507.

- Ouyang, W.; Luo, P.; Zeng, X.; Qiu, S.; Tian, Y.; Li, H. DeepID-Net: Deformable Deep Convolutional Neural Networks for Object Detection. IEEE Trans. Pattern Anal. Mach. Intell. 2016, 39, 1320–1334.

- Sun, K.; Xiao, B.; Liu, D.; Wang, J. Deep High-Resolution Representation Learning for Human Pose Estimation. In Proceedings of the 2019 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Long Beach, CA, USA, 16–20 June 2019.

- France, S.; Carroll, D. Is the Distance Compression Effect Overstated? Some Theory and Experimentation. In International Workshop on Machine Learning and Data Mining in Pattern Recognition; Springer: Berlin/Heidelberg, Germany, 2009; pp. 280–294.

- Strehl, A.; Ghosh, J.; Mooney, R. Impact of similarity measures on web-page clustering. In Workshop on Artificial Intelligence for Web Search (AAAI); AAAI: Menlo Park, CA, USA, 2000; Volume 58, p. 64.

- Gong, C.; Junwei, H.; Xiaoqiang, L. Remote Sensing Image Scene Classification: Benchmark and State of the Art. Proc. IEEE 2017, 105, 1865–1883.

- He, K.; Zhang, X.; Ren, S.; Jian, S. Deep Residual Learning for Image Recognition. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

- Christian, S.; Vincent, V.; Sergey, I.; Jonathon, S.; Zbigniew, W. Rethinking the inception architecture for computer vision. In Proceedings of the 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016.

| First Level | Second Level | Third Level |
|---|---|---|
| Residence | / | Residential areas |
| Industrial area | / | Factory, Industrial zone |
| Transportation zone | Traffic hinge land | Airport, Railway station, Bus station |
| Administration and public service | Administrative land | Party and government organizations, Public institution |
| Administration and public service | Cultural facilities land | Science and technology museum, Library, Art museum |
| Administration and public service | Education and research land | Colleges and universities, Middle schools, Primary schools |
| Administration and public service | Medical and sanitary land | General hospital, Specialized hospital |
| Commercial and business facility | Commercial facility land | Catering and other services land, Commercial and hotel |
| Commercial and business facility | Commercial neighborhood | Business office building |
| Green space and square | Public park | Park, Botanical garden |
| Green space and square | Scenic spots land | Scenic area, National park |

| Code | POI Category | Code | POI Category |
|---|---|---|---|
| 1 | Car service | 11 | Famous scenery |
| 2 | Car sale | 12 | Business residence |
| 3 | Vehicle maintenance | 13 | Government agency and Social organization |
| 4 | Motorcycle service | 14 | Science and Education culture |
| 5 | Food and Beverage | 15 | Transport facility service |
| 6 | Shopping service | 16 | Financial and Insurance service |
| 7 | Life service | 17 | Company business |
| 8 | Sports leisure service | 18 | Place name address information |
| 9 | Healthcare service | 19 | Communal facility |
| 10 | Accommodation service | | |

| Model | Accuracy |
|---|---|
| AlexNet [48] | 56.7% |
| ResNet50 [49] | 64.0% |
| ResNet101 [49] | 65.7% |
| InceptionV3 [50] | 67.7% |
| Ours | 71.8% |

| POI Attribute | Metric Method | Accuracy |
|---|---|---|
| Frequency density | Euclidean distance | 51.5% |
| Frequency density | Chebyshev distance | 54.6% |
| Frequency density | Pearson coefficient | 63.6% |
| Frequency density | Cosine distance | 60.6% |
| Functional density | Euclidean distance | 63.6% |
| Functional density | Chebyshev distance | 57.6% |
| Functional density | Pearson coefficient | 66.7% |
| Functional density | Cosine distance | 69.7% |
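
The four similarity measures compared in the table above can be sketched as follows. This is a minimal illustration, not the authors' implementation: the function names are hypothetical, and the two example vectors are made-up stand-ins for per-region POI feature vectors (one entry per POI category).

```python
import numpy as np


def euclidean_distance(a, b):
    """Straight-line (L2) distance between two feature vectors."""
    return float(np.linalg.norm(a - b))


def chebyshev_distance(a, b):
    """Largest absolute difference in any single dimension (L-infinity)."""
    return float(np.max(np.abs(a - b)))


def pearson_coefficient(a, b):
    """Pearson correlation in [-1, 1]; higher means more similar.

    Returns NaN if either vector is constant (zero variance).
    """
    return float(np.corrcoef(a, b)[0, 1])


def cosine_distance(a, b):
    """One minus the cosine of the angle between the vectors.

    Assumes neither vector is all zeros.
    """
    cosine = np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b))
    return 1.0 - float(cosine)


if __name__ == "__main__":
    # Hypothetical POI feature vectors for two regions (illustrative values only).
    region_a = np.array([0.10, 0.40, 0.05, 0.30, 0.15])
    region_b = np.array([0.20, 0.35, 0.10, 0.25, 0.10])
    for measure in (euclidean_distance, chebyshev_distance,
                    pearson_coefficient, cosine_distance):
        print(measure.__name__, measure(region_a, region_b))
```

Note that the two distances shrink as vectors become more alike, whereas the Pearson coefficient grows, so a nearest-match rule would minimize the former and maximize the latter.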

| POI Attribute | Normalization Method | Accuracy |
|---|---|---|
| Frequency density | SoftMax | 72.1% |
| Frequency density | Minmax-pro | 59.3% |
| Frequency density | Minmax | 72.2% |
| Functional density | SoftMax | 76.7% |
| Functional density | Minmax-pro | 58.9% |
| Functional density | Minmax | 77.8% |
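
For reference, the standard definitions of two of the normalization methods named in the table above are sketched below. The table does not say which quantities are normalized, so the input here is a generic score vector with made-up values, and the function names are hypothetical. "Minmax-pro" is not defined in this text and is deliberately left out.

```python
import numpy as np


def softmax(scores):
    """Map scores to positive values that sum to 1."""
    shifted = scores - np.max(scores)  # subtract the max for numerical stability
    exp = np.exp(shifted)
    return exp / exp.sum()


def min_max(scores):
    """Rescale scores linearly so the minimum maps to 0 and the maximum to 1."""
    low, high = scores.min(), scores.max()
    if high == low:
        # All scores are equal; return zeros rather than divide by zero.
        return np.zeros_like(scores, dtype=float)
    return (scores - low) / (high - low)


if __name__ == "__main__":
    # Hypothetical per-class scores for one region (illustrative values only).
    scores = np.array([2.0, 4.0, 6.0])
    print("softmax:", softmax(scores))
    print("min-max:", min_max(scores))
```

Softmax preserves the ranking but exaggerates gaps between scores, while min-max keeps their relative spacing; which behaves better is an empirical question of the kind the table reports.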

| Method | Accuracy |
|---|---|
| Remote sensing image-only | 71.8% |
| POI-only | 69.7% |
| Data fuse | 77.8% |
| Data fuse (assign weights) | 82.1% |

| Urban Functional Regions | Commercial and Business Facility | Industrial Area | Green Space and Square | Administration and Public Service | Residence | Transportation | Producer Accuracy |
|---|---|---|---|---|---|---|---|
| Commercial and business facility | 86 | 125 | 2 | 14 | 0 | 1 | 38% |
| Industrial area | 1 | 326 | 0 | 78 | 0 | 0 | 80% |
| Green space and square | 21 | 0 | 285 | 27 | 32 | 12 | 76% |
| Administration and public service | 0 | 0 | 35 | 332 | 1 | 0 | 90% |
| Residence | 1 | 0 | 0 | 2 | 373 | 2 | 99% |
| Transportation | 0 | 0 | 11 | 2 | 20 | 131 | 80% |
| User Accuracy | 79% | 72% | 86% | 73% | 88% | 90% | |
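
The producer and user accuracies in the confusion matrix above can be recomputed from the counts. The sketch below assumes that rows are reference classes and columns are predicted classes, which is consistent with the row-wise percentages in the last column; the function names are hypothetical.

```python
import numpy as np

# Counts copied from the confusion matrix above.
# Assumed layout: rows = reference class, columns = predicted class.
CLASSES = [
    "Commercial and business facility",
    "Industrial area",
    "Green space and square",
    "Administration and public service",
    "Residence",
    "Transportation",
]
CONFUSION = np.array([
    [86, 125, 2, 14, 0, 1],
    [1, 326, 0, 78, 0, 0],
    [21, 0, 285, 27, 32, 12],
    [0, 0, 35, 332, 1, 0],
    [1, 0, 0, 2, 373, 2],
    [0, 0, 11, 2, 20, 131],
])


def producer_accuracy(cm):
    """Correct predictions divided by the row total (all samples of that class)."""
    return np.diag(cm) / cm.sum(axis=1)


def user_accuracy(cm):
    """Correct predictions divided by the column total (all predictions of that class)."""
    return np.diag(cm) / cm.sum(axis=0)


if __name__ == "__main__":
    producer = producer_accuracy(CONFUSION)
    user = user_accuracy(CONFUSION)
    for name, p, u in zip(CLASSES, producer, user):
        print(f"{name}: producer {p:.0%}, user {u:.0%}")
```

For example, 86 of the 228 commercial samples in the first row are on the diagonal, which gives the 38% producer accuracy shown, while 125 of them fall in the industrial column.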

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Xu, S.; Qing, L.; Han, L.; Liu, M.; Peng, Y.; Shen, L. A New Remote Sensing Images and Point-of-Interest Fused (RPF) Model for Sensing Urban Functional Regions. Remote Sens. 2020, 12, 1032. https://doi.org/10.3390/rs12061032
