A Method of Segmenting Apples Based on Gray-Centered RGB Color Space

Abstract

1. Introduction

2. Materials and Methods

2.1. Apple Image Acquisition

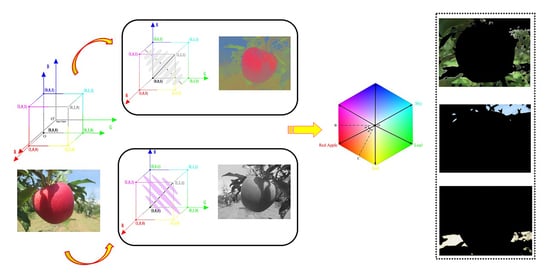

2.2. Gray-Centered RGB Color Space

2.3. Color Features Extraction

2.3.1. Quaternion

2.3.2. Color Features Decomposition of the Apple Image

2.3.3. Choice of COI and Features

2.4. A Patch-Based Feature Segmentation Algorithm

| Algorithm 1. K-means clustering algorithm based on pixel blocks |
|---|
| Input: Original apple images |
| Segmentation region |
| Initialization: Randomly initialize, |
| Iteration: According to Equation (6), calculate |
| According to Equation (7), calculate |
| Until |
| Output: |
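The symbols in the algorithm box did not survive extraction, and Equations (6) and (7) are not reproduced in this section. The sketch below therefore only illustrates the general idea of K-means over pixel blocks, assuming the two equations are the usual nearest-center assignment and mean-update steps. The names `patch_features`, `kmeans` and `segment`, the 3×3 patch size, the reflect padding and the stopping tolerance are all hypothetical choices made for illustration, not the authors' settings.

```python
import numpy as np


def patch_features(feature_map, patch=3):
    """Describe each pixel by the values in its patch x patch neighbourhood."""
    pad = patch // 2
    padded = np.pad(feature_map, pad, mode="reflect")
    h, w = feature_map.shape
    # One column per offset inside the patch, one row per pixel.
    cols = [padded[dy:dy + h, dx:dx + w].ravel()
            for dy in range(patch) for dx in range(patch)]
    return np.stack(cols, axis=1)  # shape (h * w, patch * patch)


def kmeans(x, k=2, max_iter=100, tol=1e-6, seed=0):
    """Plain K-means: random initial centers, then assign/update until stable."""
    rng = np.random.default_rng(seed)
    centers = x[rng.choice(len(x), size=k, replace=False)]
    for _ in range(max_iter):
        # Assumed form of the assignment step: nearest center in squared distance.
        dist = ((x[:, None, :] - centers[None, :, :]) ** 2).sum(axis=2)
        labels = dist.argmin(axis=1)
        # Assumed form of the update step: mean of the members of each cluster.
        # An empty cluster keeps its previous center.
        new_centers = np.array([
            x[labels == j].mean(axis=0) if np.any(labels == j) else centers[j]
            for j in range(k)
        ])
        shift = np.abs(new_centers - centers).max()
        centers = new_centers
        if shift < tol:  # stop once the centers no longer move
            break
    return labels, centers


def segment(feature_map, patch=3, k=2):
    """Cluster patch features and return a label image of the input's shape."""
    x = patch_features(np.asarray(feature_map, dtype=float), patch)
    labels, _ = kmeans(x, k=k)
    return labels.reshape(np.shape(feature_map))
```

Here `feature_map` stands for a single-channel color feature image (for example one of the features chosen in Section 2.3.3). Cluster indices are arbitrary, so deciding which label corresponds to the apple region needs a separate rule that this sketch does not cover.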

2.5. Criteria Methods

3. Experimental Results and Analysis

3.1. Visualization of Segmentation Results

3.2. Comparison and Quantitative Analysis of the Results of Segmentation

3.3. Double and Multi-Fruit Split Results

4. Discussion

4.1. Segmentation Result and Analysis with Different COI

4.2. Further Research Perspectives

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Method | Method Source | Recall | Precision | FPR | FNR |
|---|---|---|---|---|---|
| Fuzzy 2-partition Entropy | Fuzzy 2-partition entropy | 87.75% | 84.87% | 9.36% | 12.44% |
| Fuzzy C-means | Superpixel-based fast fuzzy C-means clustering | 94.34% | 96.87% | 1.37% | 2.97% |
| Deep-learning | Mask R-CNN | 97.02% | 98.16% | 0.47% | 2.54% |
| Proposed algorithm | - | 98.69% | 99.26% | 0.06% | 1.44% |
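The four columns of the comparison table are pixel-level quality measures. The paper's own definitions (Section 2.5) are not reproduced in this section, so the following is only a sketch using the standard confusion-matrix definitions, which may differ from the ones the authors used (for instance in how results are averaged over images). The function name `segmentation_metrics` and the boolean-mask inputs are assumptions made for illustration.

```python
import numpy as np


def segmentation_metrics(pred, truth):
    """Pixel-wise recall, precision, FPR and FNR for two binary masks."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)

    tp = np.sum(pred & truth)    # apple pixels labelled as apple
    fp = np.sum(pred & ~truth)   # background pixels labelled as apple
    fn = np.sum(~pred & truth)   # apple pixels labelled as background
    tn = np.sum(~pred & ~truth)  # background pixels labelled as background

    def ratio(num, den):
        # Return NaN instead of dividing by zero when a class is absent.
        return float(num) / float(den) if den else float("nan")

    return {
        "recall": ratio(tp, tp + fn),
        "precision": ratio(tp, tp + fp),
        "fpr": ratio(fp, fp + tn),
        "fnr": ratio(fn, tp + fn),
    }
```

Under these definitions recall and FNR sum to one for a single image; values aggregated over a test set need not satisfy that exactly.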

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Fan, P.; Lang, G.; Yan, B.; Lei, X.; Guo, P.; Liu, Z.; Yang, F. A Method of Segmenting Apples Based on Gray-Centered RGB Color Space. Remote Sens. 2021, 13, 1211. https://doi.org/10.3390/rs13061211