Orthomosaicking Thermal Drone Images of Forests via Simultaneously Acquired RGB Images

Abstract

1. Introduction

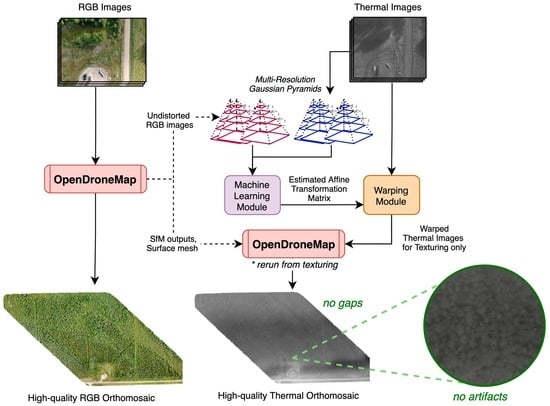

- We propose an integrated RGB and thermal orthomosaic generation workflow that bypasses the need for thermal SfM by leveraging intermediate RGB orthomosaicking outputs and co-registering RGB and thermal images through an automated intensity-based technique.

- We show that our workflow overcomes common issues associated with thermal-only orthomosaicking workflows while preserving the radiometric information (absolute temperature values) in the thermal imagery.

- We demonstrate the effectiveness of the geometrically aligned orthomosaics generated from our proposed workflow by utilizing an existing deep learning-based tree crown detector, showing how the RGB-detected bounding boxes can be directly applied to the thermal orthomosaic to extract thermal tree crowns.

- We develop an open-source tool with a GUI that implements our workflow to aid practitioners.
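Because the RGB and thermal orthomosaics are geometrically aligned, a box detected on the RGB orthomosaic indexes the same ground area in the thermal one. The following is a minimal sketch of that idea, not the tool's implementation: the function name `crown_temperatures`, the `(xmin, ymin, xmax, ymax)` box layout with exclusive upper bounds, and the use of NaN for no-data pixels are all assumptions made for illustration, and both rasters are assumed to share one pixel grid.

```python
import numpy as np


def crown_temperatures(thermal, boxes):
    """Return the mean temperature inside each bounding box.

    thermal: 2D float array (rows, cols) of temperatures, assumed to share
             the pixel grid of the RGB orthomosaic the boxes were detected on.
    boxes:   iterable of (xmin, ymin, xmax, ymax) in pixel coordinates,
             with xmax/ymax exclusive (an assumption of this sketch).
    """
    height, width = thermal.shape
    means = []
    for xmin, ymin, xmax, ymax in boxes:
        # Clip the box to the raster so boxes that overhang the edge still work.
        x0, x1 = max(0, int(xmin)), min(width, int(xmax))
        y0, y1 = max(0, int(ymin)), min(height, int(ymax))
        if x0 >= x1 or y0 >= y1:
            means.append(float("nan"))  # box lies entirely outside the raster
            continue
        crop = thermal[y0:y1, x0:x1]
        # nanmean skips no-data pixels stored as NaN.
        means.append(float(np.nanmean(crop)))
    return means


# Hypothetical 4 x 6 thermal raster with one warm 2 x 2 "crown".
thermal = np.full((4, 6), 20.0)
thermal[1:3, 2:4] = 25.0
print(crown_temperatures(thermal, [(2, 1, 4, 3), (0, 0, 2, 1)]))
```

Averaging the raw temperature values, rather than a colour-mapped rendering, is what keeps the extracted crown statistics in physical units.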

2. Materials and Proposed Workflow

2.1. Cynthia Cutblock Study Site

2.2. Proposed Integrated Orthomosaicking Workflow

2.2.1. RGB Orthomosaic Generation

2.2.2. Thermal Image Conversion

2.2.3. RGB–Thermal Image Co-Registration

2.2.4. Thermal Orthomosaic Generation

2.3. Downstream Tree Crown Detection Task

2.4. Proposed Open-Source Tool

2.5. Performance Assessment

3. Experimental Results

3.1. Quantitative Results

3.2. Qualitative Results

3.3. Robustness of Transformation Matrix Computation

3.4. Radiometric Analysis

3.5. Downstream Task Performance

3.6. Processing Time

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CoV | Coefficient of Variation |
| CPU | Central Processing Unit |
| DOAJ | Directory of Open Access Journals |
| DSM | Digital Surface Model |
| ECC | Enhanced Correlation Coefficient |
| EXIF | Exchangeable Image File Format |
| FOV | Field of View |
| FPN | Feature Pyramid Network |
| GIS | Geographic Information System |
| GPU | Graphics Processing Unit |
| GUI | Graphical User Interface |
| JPEG | Joint Photographic Experts Group (image format) |
| MDPI | Multidisciplinary Digital Publishing Institute |
| MI | Mutual Information |
| NGF | Normalized Gradient Fields |
| ODM | OpenDroneMap |
| RGB | Red Green Blue |
| RJPEG | Radiometric JPEG |
| SfM | Structure from Motion |
| TIFF | Tagged Image File Format |


| | 20 Jul | 26 Jul | 9 Aug | 17 Aug | 30 Aug |
|---|---|---|---|---|---|
| Number of RGB–Thermal Image Pairs | 827 | 828 | 820 | 825 | 814 |
| Average Air Temperature (°C) | 20.3 | 20.8 | 19.8 | 24.5 | 25.4 |
| Average Relative Humidity (%) | 42.7 | 61.0 | 53.0 | 40.7 | 46.3 |

| Design Choice | 20 Jul | 26 Jul | 9 Aug | 17 Aug | 30 Aug | Mean |
|---|---|---|---|---|---|---|
| Unregistered | 0.0539 | 0.0473 | 0.0695 | 0.0589 | 0.0658 | 0.0591 |
| Manual | 0.2417 | 0.1781 | 0.2612 | 0.2247 | 0.2003 | 0.2212 |
| ECC | 0.1658 | 0.2141 | 0.1742 | 0.2051 | 0.2079 | 0.1934 |
| NGF | 0.2999 | 0.2003 | 0.3181 | 0.2715 | 0.3038 | 0.2787 |
| Perspective | 0.2994 | 0.1995 | 0.3176 | 0.2707 | 0.3052 | 0.2785 |
| Affine | 0.2999 | 0.2003 | 0.3181 | 0.2715 | 0.3038 | 0.2787 |
| Single-resolution | 0.0239 | 0.0323 | 0.0271 | 0.0262 | 0.0247 | 0.0268 |
| Multi-resolution | 0.2999 | 0.2003 | 0.3181 | 0.2715 | 0.3038 | 0.2787 |
| Batch size = 1 | 0.0420 | 0.0396 | 0.0602 | 0.0506 | 0.0477 | 0.0480 |
| Batch size = 4 | 0.1420 | 0.0759 | 0.1053 | 0.1343 | 0.1036 | 0.1122 |
| Batch size = 16 | 0.3003 | 0.1966 | 0.3176 | 0.2672 | 0.3017 | 0.2767 |
| Batch size = 32 | 0.2966 | 0.1982 | 0.3182 | 0.2679 | 0.2999 | 0.2762 |
| Batch size = 64 | 0.2999 | 0.2003 | 0.3181 | 0.2715 | 0.3038 | 0.2787 |
| Random sampling | 0.3000 | 0.1963 | 0.3177 | 0.2682 | 0.3023 | 0.2769 |
| Systematic sampling | 0.2999 | 0.2003 | 0.3181 | 0.2715 | 0.3038 | 0.2787 |

| Component | Average | Minimum | Maximum | CoV (%) |
|---|---|---|---|---|
| | 1.01442 | 1.01380 | 1.015200 | 0.05 |
| | 0.00618 | 0.00579 | 0.006600 | 4.45 |
| | 0.02537 | 0.02075 | 0.030240 | 12.19 |
| | −0.00861 | −0.01397 | −0.005990 | 33.69 |
| | 0.94546 | 0.94442 | 0.946730 | 0.08 |
| | −0.06670 | −0.08146 | −0.049870 | 16.40 |
| | 1.04259 | 1.04153 | 1.043970 | 0.09 |
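The CoV (%) column in the table above expresses how much a component varies relative to its average. As a hedged illustration, the sketch below uses the conventional definition (standard deviation divided by the absolute mean, times 100). Whether the reported values use the population or the sample standard deviation is not stated here, so the population form is an assumption, and the input values are hypothetical because the per-flight values are not listed in the table.

```python
import statistics


def coefficient_of_variation(values):
    """Return the coefficient of variation of `values` as a percentage.

    Uses the population standard deviation (an assumption of this sketch)
    and the absolute mean, so components with a negative average still
    yield a positive percentage.
    """
    mean = statistics.fmean(values)
    if mean == 0:
        raise ValueError("CoV is undefined when the mean is zero")
    return 100.0 * statistics.pstdev(values) / abs(mean)


# Hypothetical values of one transformation component over three flights.
example = [1.0, 1.1, 0.9]
print(round(coefficient_of_variation(example), 2))
```

A small CoV indicates that the component is nearly constant across flights, while a large CoV on a component whose average is close to zero can still correspond to a small absolute spread.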

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kapil, R.; Castilla, G.; Marvasti-Zadeh, S.M.; Goodsman, D.; Erbilgin, N.; Ray, N. Orthomosaicking Thermal Drone Images of Forests via Simultaneously Acquired RGB Images. Remote Sens. 2023, 15, 2653. https://doi.org/10.3390/rs15102653
