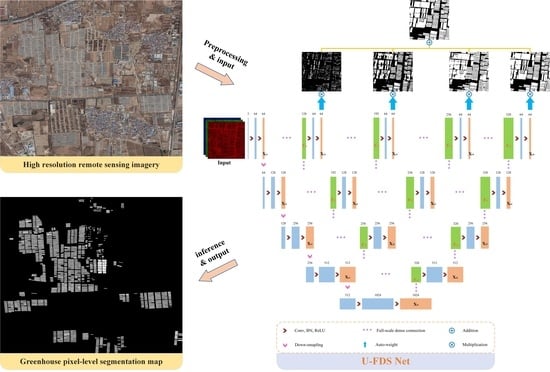

Extracting Plastic Greenhouses from Remote Sensing Images with a Novel U-FDS Net

Abstract

1. Introduction

2. Materials and Methods

2.1. Network Structure

2.1.1. Full-Scale Dense Connection

2.1.2. Adaptive Deep Supervision Block

2.1.3. Lightweight Full-Scale Feature Aggregation Networks

2.2. Self-Annotated Dataset of Plastic Greenhouses

2.3. Experiments

- TP (true positive): the real category of a sample is greenhouse, and the model also predicts greenhouse;

- FN (false negative): the real category of a sample is greenhouse, but the model predicts background;

- FP (false positive): the real category of a sample is background, but the model predicts greenhouse;

- TN (true negative): the real category of a sample is background, and the model also predicts background.
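The four counts defined above can be tallied directly from a pair of binary masks. The following is a minimal sketch, not the authors' evaluation code: it assumes the masks are flattened sequences with 1 for greenhouse and 0 for background, and the helper names `confusion_counts` and `derived_metrics` are hypothetical. Precision, recall, and IoU are the standard definitions built on these counts; which metrics the paper reports is not stated in this section.

```python
from typing import Dict, Sequence


def confusion_counts(truth: Sequence[int], pred: Sequence[int]) -> Dict[str, int]:
    """Tally TP, FN, FP, TN for binary labels (1 = greenhouse, 0 = background)."""
    if len(truth) != len(pred):
        raise ValueError("truth and pred must have the same length")
    counts = {"TP": 0, "FN": 0, "FP": 0, "TN": 0}
    for t, p in zip(truth, pred):
        if t == 1 and p == 1:
            counts["TP"] += 1  # greenhouse predicted as greenhouse
        elif t == 1 and p == 0:
            counts["FN"] += 1  # greenhouse predicted as background
        elif t == 0 and p == 1:
            counts["FP"] += 1  # background predicted as greenhouse
        else:
            counts["TN"] += 1  # background predicted as background (labels assumed 0/1)
    return counts


def derived_metrics(counts: Dict[str, int]) -> Dict[str, float]:
    """Standard precision, recall, and IoU for the greenhouse class."""
    tp, fn, fp = counts["TP"], counts["FN"], counts["FP"]
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    iou = tp / (tp + fp + fn) if (tp + fp + fn) else 0.0
    return {"precision": precision, "recall": recall, "iou": iou}


if __name__ == "__main__":
    # Toy example with eight pixels (made-up values, for illustration only).
    truth = [1, 1, 1, 0, 0, 0, 1, 0]
    pred = [1, 0, 1, 1, 0, 0, 1, 0]
    counts = confusion_counts(truth, pred)
    print(counts)  # {'TP': 3, 'FN': 1, 'FP': 1, 'TN': 3}
    print(derived_metrics(counts))
```

In the toy example, three greenhouse pixels are found, one is missed, and one background pixel is wrongly flagged, so precision and recall are both 3/4 and IoU is 3/5.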

3. Results and Analysis

4. Discussion

4.1. Advantages

4.2. Limitations and Future Perspectives

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Region | Size (pixels) | Training | Evaluation | Test | Total |
|---|---|---|---|---|---|
| Shandong | 512 × 512 | 4849 | 606 | 606 | 6061 |
| Beijing | 256 × 256 | 5472 | 684 | 684 | 6840 |
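As a quick arithmetic check on the table above, the sketch below recomputes each region's total and split fractions from the listed counts. The dictionary layout and the function name `split_fractions` are illustrative assumptions; the counts themselves are copied from the table.

```python
from typing import Dict

# Sample counts per split, copied from the dataset table.
SPLITS: Dict[str, Dict[str, int]] = {
    "Shandong": {"training": 4849, "evaluation": 606, "test": 606},
    "Beijing": {"training": 5472, "evaluation": 684, "test": 684},
}


def split_fractions(counts: Dict[str, int]) -> Dict[str, float]:
    """Return each split's share of the region's total sample count."""
    total = sum(counts.values())
    return {name: n / total for name, n in counts.items()}


if __name__ == "__main__":
    for region, counts in SPLITS.items():
        total = sum(counts.values())
        shares = ", ".join(
            f"{name}={frac:.3f}" for name, frac in split_fractions(counts).items()
        )
        print(f"{region}: total={total}, {shares}")
```

The totals match the table (6061 and 6840), and both regions follow an approximately 8:1:1 training/evaluation/test split.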

| Confusion matrix | Predicted: True | Predicted: False |
|---|---|---|
| Real: True | TP (true positive) | FN (false negative) |
| Real: False | FP (false positive) | TN (true negative) |

| Model | Params | Shandong dataset | Beijing dataset |
|---|---|---|---|
| U-Net | 12.77 M | 88.9266 | 92.4881 |
| U-Net++ | 44.99 M | 89.3119 | 92.7878 |
| U-Net+++ | 25.72 M | 90.1137 | 93.0096 |
| Attention U-Net | 38.25 M | 89.3293 | 93.7484 |
| En-U-FDS Net | 37.36 M | 90.0888 | 94.0418 |
| De-U-FDS Net | 37.36 M | 90.1263 | 94.0531 |
| Narrow-U-FDS Net | 10.67 M | 90.1381 | 94.1244 |
| U-FDS Net | 42.65 M | 90.5190 | 94.3499 |
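To make the size-versus-score trade-off in the table above concrete, the sketch below compares two rows by parameter count and by score on each dataset. The metric behind the two dataset columns is not named in this section, so the code simply calls it a score; the tuple layout and the helper name `compare` are hypothetical, and the numbers are copied from the table.

```python
from typing import Dict, Tuple

# (parameters in millions, Shandong score, Beijing score), copied from the table.
RESULTS: Dict[str, Tuple[float, float, float]] = {
    "U-Net": (12.77, 88.9266, 92.4881),
    "U-Net++": (44.99, 89.3119, 92.7878),
    "U-Net+++": (25.72, 90.1137, 93.0096),
    "Attention U-Net": (38.25, 89.3293, 93.7484),
    "En-U-FDS Net": (37.36, 90.0888, 94.0418),
    "De-U-FDS Net": (37.36, 90.1263, 94.0531),
    "Narrow-U-FDS Net": (10.67, 90.1381, 94.1244),
    "U-FDS Net": (42.65, 90.5190, 94.3499),
}


def compare(small: str, large: str) -> Dict[str, float]:
    """Parameter ratio (small / large) and score gaps (large - small)."""
    p_small, s_small, b_small = RESULTS[small]
    p_large, s_large, b_large = RESULTS[large]
    return {
        "param_ratio": p_small / p_large,
        "shandong_gap": s_large - s_small,
        "beijing_gap": b_large - b_small,
    }


if __name__ == "__main__":
    best_shandong = max(RESULTS, key=lambda name: RESULTS[name][1])
    best_beijing = max(RESULTS, key=lambda name: RESULTS[name][2])
    print(best_shandong, best_beijing)
    print(compare("Narrow-U-FDS Net", "U-FDS Net"))
```

With the table's numbers, U-FDS Net has the highest score on both datasets, while Narrow-U-FDS Net uses about a quarter of its parameters and scores roughly 0.38 (Shandong) and 0.23 (Beijing) lower.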

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Mo, Y.; Zhou, W.; Chen, W. Extracting Plastic Greenhouses from Remote Sensing Images with a Novel U-FDS Net. Remote Sens. 2023, 15, 5736. https://doi.org/10.3390/rs15245736
