Increasing the Accuracy and Automation of Fractional Vegetation Cover Estimation from Digital Photographs

Abstract

1. Introduction

2. Materials and Methods

2.1. Data Collection

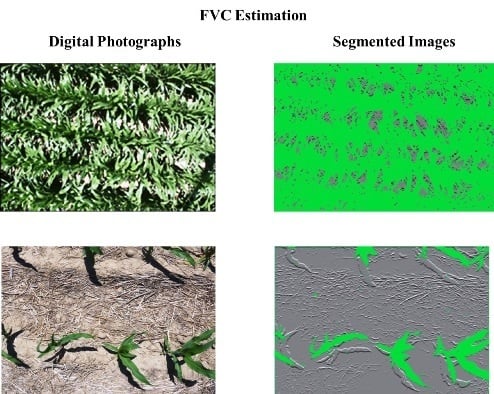

2.2. Image Segmentation

Threshold Determination

3. Results

3.1. Image Segmentation

3.2. Fractional Vegetation Cover Estimation

3.2.1. Ground Truth Image Segmentation

3.2.2. Comparison of Image Segmentation Techniques

3.2.3. Fractional Vegetation Cover Estimates

3.2.4. Segmentation and FVC Estimates for Remotely Sensed Corn

4. Discussion

4.1. Comparative Advantages of ACE

4.2. Implications for Remote Sensing

4.3. Implications for Senescent Crop Cover

4.4. Application of ACE to Crop Modelling

5. Conclusions

Software Availability

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Fiala, A.C.S.; Garman, S.L.; Gray, A.N. Comparison of five canopy cover estimation techniques in the western Oregon Cascades. For. Ecol. Manag. 2006, 232, 188–197.

- Steduto, P.; Hsiao, T.C.; Raes, D.; Fereres, E. AquaCrop—The FAO model to simulate yield response to water. I. Concepts and underlying principles. Agron. J. 2009, 101, 426–437.

- Behrens, T.; Diepenbrock, W. Using digital image analysis to describe canopies of winter oilseed rape (Brassica napus L.) during vegetative developmental stages. J. Agron. Crop Sci. 2006, 192, 295–302.

- Pan, G.; Li, F.; Sun, G. Digital camera based measurement of crop cover for wheat yield prediction. In Proceedings of the IEEE International Geoscience and Remote Sensing Symposium, Barcelona, Spain, 23–28 July 2007; pp. 797–800.

- Richardson, M.D.; Karcher, D.E.; Purcell, L.C. Quantifying turfgrass cover using digital image analysis. Crop Sci. 2001, 41, 1884–1888.

- Jia, L.; Chen, X.; Zhang, F. Optimum nitrogen fertilization of winter wheat based on color digital camera image. Commun. Soil Sci. Plant Anal. 2007, 38, 1385–1394.

- Li, Y.; Chen, D.; Walker, C.N.; Angus, J.F. Estimating the nitrogen status of crops using a digital camera. Field Crop. Res. 2010, 118, 221–227.

- Sakamoto, T.; Gitelson, A.A.; Nguy-Robertson, A.L.; Arkebauer, T.J.; Wardlow, B.D.; Suyker, A.E.; Verma, S.B.; Shibayama, M. An alternative method using digital cameras for continuous monitoring of crop status. Agric. For. Meteorol. 2012, 154–155, 113–126.

- Ding, Y.; Zheng, X.; Zhao, K.; Xin, X.; Liu, H. Quantifying the impact of NDVIsoil determination methods and NDVIsoil variability on the estimation of fractional vegetation cover in Northeast China. Remote Sens. 2016, 8, 29.

- Liu, J.; Pattey, E. Retrieval of leaf area index from top-of-canopy digital photography over agricultural crops. Agric. For. Meteorol. 2010, 150, 1485–1490.

- Nielsen, D.; Miceli-Garcia, J.J.; Lyon, D.J. Canopy cover and leaf area index relationships for wheat, triticale, and corn. Agron. J. 2012, 104, 1569–1573.

- Córcoles, J.I.; Ortega, J.F.; Hernández, D.; Moreno, M.A. Estimation of leaf area index in onion (Allium cepa L.) using an unmanned aerial vehicle. Biosyst. Eng. 2013, 115, 31–42.

- Wang, Y.; Wang, D.J.; Zhang, G.; Wang, J. Estimating nitrogen status of rice using the image segmentation of G-R thresholding method. Field Crop. Res. 2013, 149, 33–39.

- Booth, D.T.; Cox, S.E.; Fifield, C.; Phillips, M.; Williamson, N. Image analysis compared with other methods for measuring ground cover. Arid Land Res. Manag. 2005, 19, 91–100.

- Guevara-Escobar, A.; Tellez, J.; Gonzalez-Sosa, E. Use of digital photography for analysis of canopy closure. Agrofor. Syst. 2005, 65, 1–11.

- Laliberte, A.S.; Rango, A.; Herrick, J.E.; Fredrickson, E.L.; Burkett, L. An object-based image analysis approach for determining fractional cover of senescent and green vegetation with digital plot photography. J. Arid Environ. 2007, 69, 1–14.

- Lee, K.; Lee, B. Estimating canopy cover from color digital camera image of rice field. J. Crop Sci. Biotechnol. 2011, 14, 151–155.

- Yu, Z.-H.; Cao, Z.-G.; Wu, X.; Bai, X.; Qin, Y.; Zhuo, W.; Xiao, Y.; Zhang, X.; Xue, H. Automatic image-based detection technology for two critical growth stages of maize: Emergence and three-leaf stage. Agric. For. Meteorol. 2013, 174, 65–84.

- Sui, R.X.; Thomasson, J.A.; Hanks, J.; Wooten, J. Ground-based sensing system for weed mapping in cotton. Comput. Electron. Agric. 2008, 60, 31–38.

- Abbasgholipour, M.; Omid, M.; Keyhani, A.; Mohtasebi, S.S. Color image segmentation with genetic algorithm in a raisin sorting system based on machine vision in variable conditions. Expert Syst. Appl. 2011, 38, 3671–3678.

- Przeszlowska, A.; Trlica, M.; Weltz, M. Near-ground remote sensing of green area index on the shortgrass prairie. Rangel. Ecol. Manag. 2006, 59, 422–430.

- Zelikova, T.J.; Williams, D.G.; Hoenigman, R.; Blumenthal, D.M.; Morgan, J.A.; Pendall, E. Seasonality of soil moisture mediates responses of ecosystem phenology to elevated CO2 and warming in a semi-arid grassland. J. Ecol. 2015, 103, 1119–1130.

- Mu, X.; Hu, M.; Song, W.; Ruan, G.; Ge, Y.; Wang, J.; Huang, S.; Yan, G. Evaluation of sampling methods for validation of remotely sensed fractional vegetation cover. Remote Sens. 2015, 7, 16164–16182.

- Ding, Y.; Zheng, X.; Jiang, T.; Zhao, K. Comparison and validation of long time serial global GEOV1 and regional Australian MODIS fractional vegetation cover products over the Australian continent. Remote Sens. 2015, 7, 5718–5733.

- Chianucci, F.; Disperati, L.; Guzzi, D.; Bianchini, D.; Nardino, V.; Lastri, C.; Rindinella, A.; Corona, P. Estimation of canopy attributes in beech forests using true colour digital images from a small fixed-wing UAV. Int. J. Appl. Earth Obs. Geoinf. 2016, 47, 60–68.

- Booth, D.T.; Cox, S.E.; Meikle, T.W.; Fitzgerald, C. The accuracy of ground-cover measurements. Rangel. Ecol. Manag. 2006, 59, 179–188.

- Kataoka, T.; Kaneko, T.; Okamoto, H.; Hata, S. Crop growth estimation system using machine vision. In Proceedings of the IEEE/ASME International Conference on Advanced Intelligent Mechatronics (AIM 2003), Kobe, Japan, 20–24 July 2003; Volume 2, pp. b1079–b1083.

- Neto, J.C. A Combined Statistical-Soft Computing Approach for Classification and Mapping Weed Species in Minimum-Tillage Systems. Ph.D. Thesis, University of Nebraska, Lincoln, NE, USA, 2006.

- Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

- Bai, X.D.; Cao, Z.G.; Wang, Y.; Yu, Z.H.; Zhang, X.F.; Li, C.N. Crop segmentation from images by morphology modeling in the CIE L*a*b* color space. Comput. Electron. Agric. 2013, 99, 21–34.

- Song, W.; Mu, X.; Yan, G.; Huang, S. Extracting the green fractional vegetation cover from digital images using a shadow-resistant algorithm (SHAR-LABFVC). Remote Sens. 2015, 7, 10425–10443.

- Tian, L.F.; Slaughter, D.C. Environmentally adaptive segmentation algorithm for outdoor image segmentation. Comput. Electron. Agric. 1998, 21, 153–168.

- Nielsen, D.C.; Lyon, D.J.; Hergert, G.W.; Higgins, R.K.; Calderon, F.J.; Vigil, M.F. Cover crop mixtures do not use water differently than single-species plantings. Agron. J. 2015, 107, 1025–1038.

- DeJonge, K.C.; Taghvaeian, S.; Trout, T.J.; Comis, L.H. Comparison of canopy temperature-based water stress indices for maize. Agric. Water Manag. 2015, 156, 51–62.

- Tkalčič, M.; Tasič, J.F. Colour spaces: Perceptual, historical and applicational background. In Proceedings of the IEEE Region 8 EUROCON 2003, Computer as a Tool, Ljubljana, Slovenia, 22–24 September 2003; Volume 1, pp. 304–308.

- O’Haver, T. Peak Finding and Measurement. Custom Scripts for the MATLAB Platform. 2006. Available online: http://www.wam.umd.edu/~toh/spectrum/PeakFindingandMeasurement.htm (accessed on 15 June 2014).

- Booth, D.T.; Cox, S.E.; Berryman, R.D. Point sampling digital imagery with ‘SamplePoint’. Environ. Monit. Assess. 2006, 123, 97–108.

- Ponti, M.P. Segmentation of low-cost remote sensing images combining vegetation indices and mean shift. IEEE Geosci. Remote Sens. Lett. 2013, 10, 67–70.

- Seefeldt, S.S.; Booth, D.T. Measuring plant cover in sagebrush steppe rangelands: A comparison of methods. Environ. Manag. 2006, 37, 703–711.

- Booth, D.T.; Cox, S.E.; Meikle, T.; Zuuring, H.R. Ground-cover measurements: Assessing correlation among aerial and ground-based methods. Environ. Manag. 2008, 42, 1091–1100.

- Jia, K.; Liang, S.; Gu, X.; Baret, F.; Wei, X.; Wang, X.; Yao, Y.; Yang, L.; Li, Y. Fractional vegetation cover estimation algorithm for Chinese GF-1 wide field view data. Remote Sens. Environ. 2016, 177, 184–191.

- Steduto, P.; Hsiao, T.C.; Fereres, E.; Raes, D. Crop Yield Response to Water; FAO Irrigation and Drainage Paper 66; Food and Agriculture Organization of the United Nations (FAO): Rome, Italy, 2012.

- Rankine, D.R.; Cohen, J.E.; Taylor, M.A.; Coy, A.D.; Simpson, L.A.; Stephenson, T.; Lawrence, J.L. Parameterizing the FAO AquaCrop model for rainfed and irrigated field-grown sweet potato. Agron. J. 2015, 107, 375–387.

| Algorithm | Corn µ (%) | Corn σ (%) | Oat µ (%) | Oat σ (%) | Flax µ (%) | Flax σ (%) | Rapeseed µ (%) | Rapeseed σ (%) | Overall µ (%) | Overall σ (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| CIVE | 40.0 | 18.0 | 63.0 | 8.0 | 60.0 | 18.0 | 51.0 | 1.5 | 52.6 | 17.3 |
| ExG | 67.0 | 8.0 | 58.0 | 9.0 | 63.0 | 16.0 | 50.0 | 1.5 | 59.6 | 13.6 |
| VVI | 30.0 | 8.0 | 45.0 | 9.0 | 48.4 | 10.0 | 39.4 | 10.0 | 40.0 | 10.9 |
| MS | 35.0 | 9.0 | 54.0 | 7.0 | 48.0 | 10.0 | 43.1 | 13.0 | 44.2 | 11.7 |
| MSCIVE | 85.4 | 6.0 | 61.0 | 25.0 | 74.0 | 6.0 | 75.4 | 10.0 | 74.4 | 15.8 |
| MSExG | 85.0 | 7.0 | 62.0 | 25.0 | 73.0 | 6.0 | 76.0 | 8.0 | 74.4 | 15.2 |
| MSVVI | 32.3 | 9.0 | 55.0 | 7.0 | 44.0 | 10.0 | 42.0 | 12.0 | 42.5 | 11.9 |
| Bai et al. | 88.0 | 5.0 | 85.0 | 6.4 | 84.4 | 8.0 | 87.0 | 8.1 | 86.1 | 7.0 |
| SHAR-LAB | 87.0 | 5.0 | 82.3 | 11.6 | 81.3 | 6.0 | 85.0 | 10.0 | 82.3 | 9.0 |
| ACE | 89.2 | 2.6 | 89.1 | 4.3 | 89.8 | 5.1 | 90.4 | 4.5 | 89.6 | 4.5 |
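Several baselines in the table above (ExG, CIVE, VVI) segment an image by thresholding a per-pixel greenness index. The Python sketch below illustrates only the ExG case and is not the authors' implementation: it assumes the widely used chromatic-coordinate definition ExG = 2g − r − b, where r, g and b are each channel divided by R + G + B, and it picks the threshold with Otsu's method (Otsu, 1979) on a 256-bin histogram. The bin count, the function names and the treatment of black pixels are illustrative assumptions; the threshold rule used for these baselines in the paper may differ.

```python
def excess_green(pixels):
    """Return ExG = 2g - r - b for each (R, G, B) pixel, using chromatic coordinates.

    Assumption: the common normalised-RGB definition of ExG; black pixels get 0.0.
    """
    values = []
    for R, G, B in pixels:
        total = R + G + B
        if total == 0:
            values.append(0.0)  # no chromatic information in a black pixel
            continue
        r, g, b = R / total, G / total, B / total
        values.append(2 * g - r - b)
    return values


def otsu_threshold(values, bins=256):
    """Otsu's method on a list of floats: choose the cut maximising between-class variance."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return lo
    width = (hi - lo) / bins
    hist = [0] * bins
    for v in values:
        hist[min(int((v - lo) / width), bins - 1)] += 1
    centers = [lo + (i + 0.5) * width for i in range(bins)]
    total = len(values)
    sum_all = sum(h * c for h, c in zip(hist, centers))
    best_t, best_var = lo, -1.0
    w0, sum0 = 0, 0.0
    for i in range(bins - 1):
        w0 += hist[i]  # pixels in bins 0..i form the lower class
        sum0 += hist[i] * centers[i]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue
        mu0, mu1 = sum0 / w0, (sum_all - sum0) / w1
        between = w0 * w1 * (mu0 - mu1) ** 2
        if between > best_var:
            best_var = between
            best_t = lo + (i + 1) * width  # upper edge of bin i
    return best_t


def segment_exg(pixels):
    """Label a pixel as vegetation (True) when its ExG is at or above the Otsu threshold."""
    exg = excess_green(pixels)
    t = otsu_threshold(exg)
    return [v >= t for v in exg]


# Hypothetical pixels: three soil-coloured, two leaf-coloured.
soil, leaf = (120, 100, 80), (60, 140, 50)
print(segment_exg([soil, soil, soil, leaf, leaf]))  # [False, False, False, True, True]
```

Here the soil pixels have ExG close to 0 and the leaf pixels about 0.68, so Otsu's cut falls between the two groups. Single-index thresholding of this kind is what tends to struggle under shadow and senescence, which is the motivation for the mean-shift and CIE L*a*b* variants listed alongside it.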

| Algorithm | Corn RMSE | Corn σ (%) | Oat RMSE | Oat σ (%) | Flax RMSE | Flax σ (%) | Rapeseed RMSE | Rapeseed σ (%) | Overall RMSE | Overall σ (%) |
|---|---|---|---|---|---|---|---|---|---|---|
| CIVE | 33.7 | 17.4 | 17.9 | 14.3 | 35.0 | 14.5 | 31.1 | 31.8 | 30.2 | 24.8 |
| ExG | 11.7 | 7.1 | 18.6 | 7.0 | 14.8 | 7.4 | 33.3 | 12.4 | 21.3 | 11.9 |
| VVI | 20.0 | 17.3 | 21.8 | 13.8 | 15.4 | 15.8 | 36.1 | 29.3 | 24.6 | 21.3 |
| MS | 16.8 | 16.0 | 15.2 | 15.3 | 19.4 | 18.9 | 34.3 | 31.4 | 22.7 | 22.8 |
| MSCIVE | 4.7 | 4.8 | 29.1 | 22.7 | 8.6 | 8.6 | 20.9 | 14.3 | 18.6 | 16.3 |
| MSExG | 5.7 | 5.7 | 28.4 | 22.8 | 8.2 | 8.1 | 19.7 | 12.8 | 18.0 | 15.6 |
| MSVVI | 16.6 | 16.2 | 15.4 | 15.4 | 18.0 | 18.4 | 34.7 | 31.2 | 22.6 | 22.5 |
| Bai et al. | 2.1 | 2.2 | 8.2 | 8.4 | 5.6 | 4.2 | 5.3 | 5.0 | 5.7 | 5.6 |
| SHAR-LAB | 9.2 | 6.4 | 16.0 | 11.4 | 11.6 | 6.0 | 8.7 | 4.7 | 11.7 | 7.7 |
| ACE | 2.7 | 2.2 | 5.8 | 6.0 | 5.7 | 5.8 | 5.3 | 4.3 | 5.0 | 4.9 |
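The table above reports FVC error as an RMSE against reference cover. As an illustration only, the sketch below assumes that FVC is the percentage of pixels labelled vegetation in a binary mask and that RMSE is taken over paired per-image estimates and reference values in the same units; the function names and the example numbers are hypothetical, and the σ columns are not modelled.

```python
import math


def fvc_from_mask(mask):
    """Fractional vegetation cover (%) = vegetation pixels / total pixels * 100."""
    if not mask:
        raise ValueError("mask must contain at least one pixel")
    return 100.0 * sum(1 for is_veg in mask if is_veg) / len(mask)


def rmse(estimates, reference):
    """Root-mean-square error between paired FVC estimates and reference values."""
    if len(estimates) != len(reference) or not estimates:
        raise ValueError("need two non-empty sequences of equal length")
    squared = [(e - r) ** 2 for e, r in zip(estimates, reference)]
    return math.sqrt(sum(squared) / len(squared))


# Hypothetical per-image values, in percent cover.
estimated = [42.0, 55.0, 61.0]
reference = [40.0, 58.0, 60.0]
print(round(rmse(estimated, reference), 2))  # errors 2, -3, 1 -> sqrt(14 / 3), prints 2.16
```

Because RMSE squares each error before averaging, a few badly segmented images raise it sharply, which is one reason the index-only baselines show much larger values than ACE or Bai et al. in the table.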

| Algorithm | Segmentation Accuracy µ (%) | Segmentation Accuracy σ (%) | FVC RMSE | FVC σ (%) |
|---|---|---|---|---|
| CIVE | 53.7 | 19.7 | 29.9 | 22.2 |
| ExG | 41.0 | 18.9 | 31.2 | 16.5 |
| VVI | 41.7 | 18.8 | 20.0 | 20.3 |
| MS | 44.2 | 17.6 | 22.6 | 23.2 |
| MSCIVE | 65.4 | 11.7 | 16.8 | 17.1 |
| MSExG | 65.5 | 11.5 | 15.3 | 15.6 |
| MSVVI | 43.2 | 17.7 | 22.3 | 22.9 |
| Bai et al. | 74.9 | 17.7 | 13.1 | 8.4 |
| SHAR-LAB | 81.1 | 11.4 | 4.5 | 4.2 |
| ACE | 88.7 | 5.4 | 4.1 | 4.1 |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Coy, A.; Rankine, D.; Taylor, M.; Nielsen, D.C.; Cohen, J. Increasing the Accuracy and Automation of Fractional Vegetation Cover Estimation from Digital Photographs. Remote Sens. 2016, 8, 474. https://doi.org/10.3390/rs8070474
