Visual Sensors

A topical collection in Sensors (ISSN 1424-8220). This collection belongs to the section "Physical Sensors".

Editors

Interests: computer vision; robotics; cooperative robotics


Interests: computer vision; omnidirectional imaging; appearance descriptors; image processing; mobile robotics; environment modeling; visual localization


Topical Collection Information

Dear Colleagues,

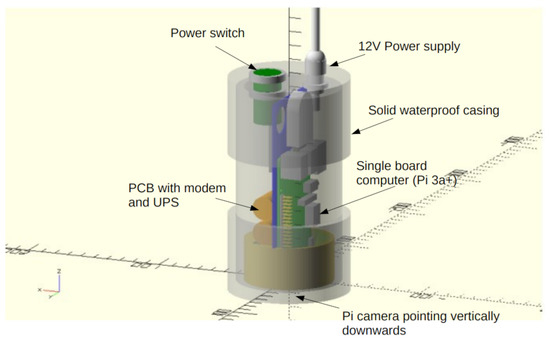

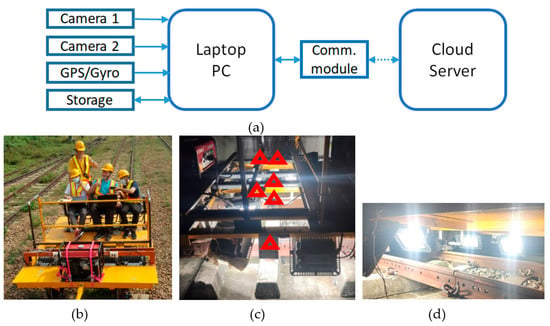

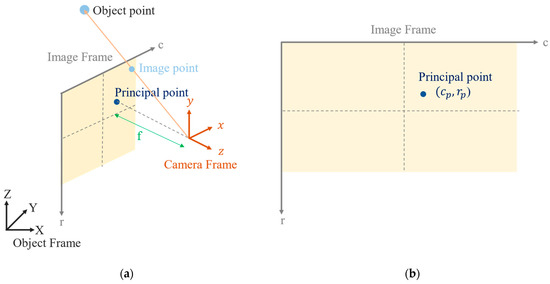

Visual sensors capture a large quantity of information from the environment around them. A wide variety of visual systems is now available, from classical monocular systems to omnidirectional, RGB-D and more sophisticated 3D systems. Each configuration has specific characteristics that make it suitable for different problems, whether it is used as a single vision system, as part of a network of such systems, or with its visual information fused with other sources of information. The range of applications of visual sensors is wide and varied, and includes robotics, industry, security, medicine, agriculture, quality control, visual inspection, surveillance, autonomous driving and navigation aid systems.

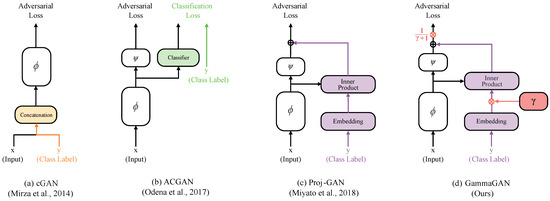

Visual information can be processed using a variety of approaches to extract relevant and useful information. Among them, machine learning and deep learning have developed considerably over the past few years and have provided robust solutions to complex problems. The aim of this collection is to gather new research works and developments that present some of the possibilities that vision systems offer, focusing on the different configurations that can be used, new image processing algorithms and novel applications in any field. Reviews presenting an in-depth analysis of a specific problem and the use of vision systems to address it are also welcome.

This Topical Collection invites contributions on topics including, but not limited to, the following:

- Image processing;

- Visual pattern recognition;

- Object recognition by visual sensors;

- Movement estimation or registration from images;

- Visual sensors in robotics;

- Visual sensors in industrial applications;

- Computer vision for quality evaluation;

- Visual sensors in agriculture;

- Computer vision in medical applications;

- Computer vision in autonomous or computer-aided driving;

- Environment modeling and reconstruction from images;

- Visual localization;

- Visual SLAM;

- Deep learning from images.

Prof. Dr. Oscar Reinoso Garcia

Prof. Dr. Luis Payá

Collection Editors

Manuscript Submission Information

Manuscripts should be submitted online at www.mdpi.com by registering and logging in to this website. Once you are registered, go to the submission form. Manuscripts can be submitted until the deadline. All submissions that pass pre-check are peer-reviewed. Accepted papers will be published continuously in the journal (as soon as accepted) and will be listed together on the collection website. Research articles, review articles and short communications are invited. For planned papers, a title and short abstract (about 100 words) can be sent to the Editorial Office for announcement on this website.

Submitted manuscripts should not have been published previously, nor be under consideration for publication elsewhere (except conference proceedings papers). All manuscripts are thoroughly refereed through a single-blind peer-review process. A guide for authors and other relevant information for submission of manuscripts are available on the Instructions for Authors page. Sensors is an international, peer-reviewed, open access, semimonthly journal published by MDPI.

Please visit the Instructions for Authors page before submitting a manuscript. The Article Processing Charge (APC) for publication in this open access journal is 2600 CHF (Swiss Francs). Submitted papers should be well formatted and use good English. Authors may use MDPI's English editing service prior to publication or during author revisions.

Keywords

- 3D imaging

- Stereo visual systems

- Omnidirectional visual systems

- Quality assessment

- Pattern recognition

- Visual registration

- Visual navigation

- Visual mapping

- LiDAR/vision system

- Multi-visual sensors

- RGB-D cameras

- Fusion of visual information

- Fusion with other sources of information

- Networks of visual sensors

- Machine learning

- Deep learning