Future Internet, Volume 14, Issue 2 (February 2022) – 38 articles

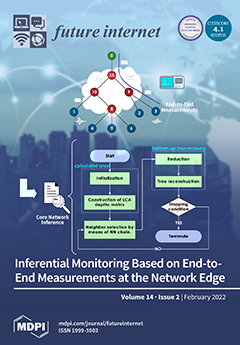

This work presents a distance-based agglomerative clustering algorithm for inferential monitoring based on end-to-end measurements obtained at the network edge. In particular, we extend the bottom-up clustering method with nearest-neighbor (NN) chains and reciprocal nearest neighbors (RNNs) to infer the topology of the examined network (i.e., the logical and the physical routing trees) and to estimate link performance characteristics (i.e., loss rate and jitter). Going beyond network monitoring itself, we design and implement a concrete application of the proposed algorithm that combines network tomography with change point analysis to detect performance anomalies. We validate our ideas experimentally in a fully controlled, large-scale testbed built on bare-metal hardware.
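The clustering step rests on NN chains and reciprocal nearest neighbors. As a rough illustration only, here is the textbook nearest-neighbor chain algorithm applied to a generic distance matrix. The function name `nn_chain_average_linkage`, the choice of average linkage (UPGMA), and the input matrix are all assumptions made for this sketch. This is not the authors' implementation, and how the paper derives distances from end-to-end loss or jitter measurements is not specified here.

```python
from typing import List, Tuple

Merge = Tuple[int, int, float, int]  # (cluster_i, cluster_j, distance, merged_size)


def nn_chain_average_linkage(dist: List[List[float]]) -> List[Merge]:
    """Hypothetical sketch: agglomerative clustering via the NN-chain algorithm.

    `dist` is a symmetric matrix of pairwise distances between leaves
    (assumed here to be measurement-derived distances between edge receivers).
    Leaves are numbered 0..n-1; the k-th merge creates cluster n+k.
    """
    n = len(dist)
    # D[i][j]: current average-linkage distance between active clusters i and j.
    D = {i: {j: float(dist[i][j]) for j in range(n) if j != i} for i in range(n)}
    size = {i: 1 for i in range(n)}
    active = set(range(n))
    chain: List[int] = []
    merges: List[Merge] = []
    next_id = n

    while len(active) > 1:
        if not chain:
            chain.append(min(active))  # start a new chain from any active cluster
        a = chain[-1]
        prev = chain[-2] if len(chain) >= 2 else None
        # Nearest neighbor of a; on ties prefer the previous chain element,
        # so the chain cannot cycle (valid for reducible linkages such as UPGMA).
        b = min((c for c in active if c != a),
                key=lambda c: (D[a][c], c != prev, c))
        if b != prev:
            chain.append(b)  # extend the chain toward a closer cluster
            continue

        # a and b are reciprocal nearest neighbors (RNNs): merge them.
        chain.pop()
        chain.pop()
        new = next_id
        next_id += 1
        size[new] = size[a] + size[b]
        D[new] = {}
        for k in active - {a, b}:
            # Lance-Williams update for average linkage.
            d = (size[a] * D[a][k] + size[b] * D[b][k]) / size[new]
            D[new][k] = d
            D[k][new] = d
            del D[k][a], D[k][b]
        merges.append((min(a, b), max(a, b), D[a][b], size[new]))
        del D[a], D[b]
        active -= {a, b}
        active.add(new)
    return merges


if __name__ == "__main__":
    points = [0.0, 1.0, 5.0, 6.0]
    dist = [[abs(p - q) for q in points] for p in points]
    print(nn_chain_average_linkage(dist))
    # [(0, 1, 1.0, 2), (2, 3, 1.0, 2), (4, 5, 5.0, 4)]
```

Each merge can be read as an internal node of a candidate tree over the leaves. Mapping such a dendrogram onto logical or physical routing trees is the paper's own contribution and is not reproduced here. Merges are reported in the order the chain discovers them, which is not necessarily sorted by distance.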
