Selected Papers from the PETRA Conference Series

A topical collection in Technologies (ISSN 2227-7080).

Viewed by 287154

Editor

Prof. Dr. Fillia Makedon

Collection Editor

Department of Computer Sciences & Engineering, University of Texas at Arlington, 701 S Nedderman Drive, Arlington, TX 76019, USA

Interests: human–computer interaction; human–robot interaction; user interfaces; cognitive computing; virtual reality; mixed reality

Topical Collection Information

Dear Colleagues,

We are planning to publish a Topical Collection related to the PETRA conference series. The latest events can be found at http://www.petrae.org/cfp.html. All participants of the PETRA conference series and their colleagues are encouraged to submit their work to this Topical Collection.

The PETRA conference publishes its proceedings in the ACM digital library, and the US National Science Foundation has supported the conference through its Doctoral Consortium Program for 12 years in a row, giving hundreds of student authors the opportunity to participate through generous travel awards.

Research areas of interest include, but are not limited to, the following:

- Healthcare informatics;

- Big data management;

- Data privacy and remote health monitoring;

- Games for physical therapy and rehabilitation;

- User interface design and usability;

- Reasoning systems and machine learning;

- Affective computing;

- Cyberlearning: theory, methods, and technologies;

- Human–robot interaction;

- Human-centered computing;

- Human monitoring;

- Haptics;

- Gesture and motion tracking;

- Cognitive modeling;

- Wearable computing;

- Interactions and the Internet of Things (IoT);

- Cognitive computing;

- Disability computing.

Applications include, but are not limited to, the following:

- Sensor networks for pervasive healthcare;

- Mobile and wireless technologies;

- Healthcare privacy and data security;

- Smart rehabilitation systems;

- Game design for cognitive assessment and social interaction;

- Behavior monitoring systems;

- Computer vision in healthcare;

- Virtual and augmented reality environments;

- Ambient assisted living;

- Navigation systems;

- Collaboration and data sharing;

- Wearable devices;

- Drug delivery evaluation;

- Vocational safety and health monitoring;

- Eye tracking;

- Telemedicine and biotechnology;

- Technologies for senior living;

- Social impact of pervasive technologies;

- Intelligent assistive environments;

- Technologies to provide assessment and intervention for stroke recovery, spinal cord injury, and multiple sclerosis;

- Technologies for improving quality of daily living;

- Robotics research for rehabilitation and tele-rehabilitation;

- Innovative design for smart wheelchairs and smart canes;

- Computer-based training systems for artificial limbs and prosthetics;

- Disability computing: smart systems to assist persons with visual or hearing impairments or loss of limb function;

- Smart cities of the future;

- Assistive technologies for urban environments.

Prof. Dr. Fillia Makedon

Guest Editor

Manuscript Submission Information

Manuscripts should be submitted online at www.mdpi.com by registering and logging in to this website. Once you are registered, proceed to the submission form. Manuscripts can be submitted until the deadline. All submissions that pass pre-check are peer-reviewed. Accepted papers will be published continuously in the journal (as soon as accepted) and will be listed together on the collection website. Research articles, review articles, and short communications are invited. For planned papers, a title and short abstract (about 100 words) can be sent to the Editorial Office for announcement on this website.

Submitted manuscripts should not have been published previously, nor be under consideration for publication elsewhere (except conference proceedings papers). All manuscripts are thoroughly refereed through a single-blind peer-review process. A guide for authors and other relevant information for submission of manuscripts is available on the Instructions for Authors page. Technologies is an international peer-reviewed open access monthly journal published by MDPI.

Please visit the Instructions for Authors page before submitting a manuscript.

The Article Processing Charge (APC) for publication in this open access journal is 1600 CHF (Swiss Francs).

Submitted papers should be well formatted and use good English. Authors may use MDPI's English editing service prior to publication or during author revisions.

Published Papers (40 papers)

Open Access | Article

MIRA: Multi-Joint Imitation with Recurrent Adaptation for Robot-Assisted Rehabilitation

by

Ali Ashary, Ruchik Mishra, Madan M. Rayguru and Dan O. Popa

Viewed by 1997

Abstract

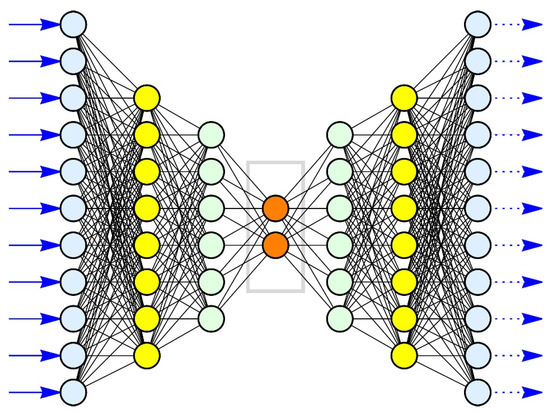

This work proposes a modular learning framework (MIRA) for rehabilitation robots based on a new deep recurrent neural network (RNN) that achieves adaptive multi-joint motion imitation. The RNN is fed with the fundamental frequencies as well as the ranges of the joint trajectories, in order to predict the future joint trajectories of the robot. The proposed framework also uses a Segment Online Dynamic Time Warping (SODTW) algorithm to quantify the closeness between the robot and patient motion. The SODTW cost decides the amount of modification needed in the inputs to our deep RNN network, which in turn adapts the robot movements. By keeping the prediction mechanism (RNN) and adaptation mechanism (SODTW) separate, the framework achieves modularity, flexibility, and scalability. We tried both Long Short-Term Memory (LSTM) and Gated Recurrent Unit (GRU) RNN architectures within our proposed framework. Experiments involved a group of 15 human subjects performing a range of motion tasks in conjunction with our social robot, Zeno. Comparative analysis of the results demonstrated the superior performance of the LSTM RNN across multiple task variations, highlighting its enhanced capability for adaptive motion imitation.
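As a rough illustration of how a warping cost can quantify the closeness between a robot trajectory and a patient trajectory, the sketch below implements textbook dynamic time warping on two one-dimensional sequences. It is not the Segment Online DTW (SODTW) variant named in the abstract, whose details are not given here; the function name `dtw_cost` and the sample joint-angle values are hypothetical.

```python
def dtw_cost(a, b):
    """Classic dynamic time warping cost between two 1-D sequences.

    Illustrative only: this is plain DTW, not the SODTW algorithm
    described in the abstract.
    """
    n, m = len(a), len(b)
    inf = float("inf")
    # cost[i][j] = minimal accumulated cost of aligning a[:i] with b[:j]
    cost = [[inf] * (m + 1) for _ in range(n + 1)]
    cost[0][0] = 0.0
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            d = abs(a[i - 1] - b[j - 1])  # local distance between samples
            cost[i][j] = d + min(
                cost[i - 1][j],      # a advances, b repeats its sample
                cost[i][j - 1],      # b advances, a repeats its sample
                cost[i - 1][j - 1],  # both advance
            )
    return cost[n][m]


# Hypothetical joint-angle samples (degrees): the patient holds one pose longer.
robot = [10.0, 20.0, 30.0]
patient = [10.0, 20.0, 20.0, 30.0]
cost = dtw_cost(robot, patient)
```

A low cost means the two motions follow the same shape even when their timing differs; under the framework described above, a larger cost would call for a larger change to the network inputs.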

Open Access | Review

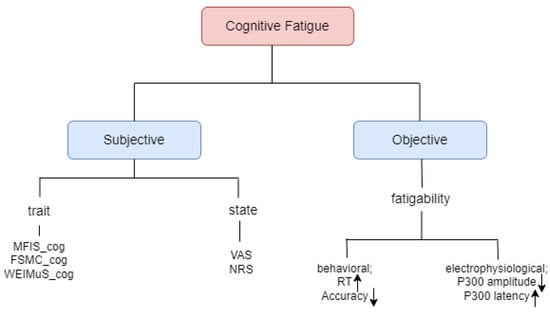

Examining the Landscape of Cognitive Fatigue Detection: A Comprehensive Survey

by

Enamul Karim, Hamza Reza Pavel, Sama Nikanfar, Aref Hebri, Ayon Roy, Harish Ram Nambiappan, Ashish Jaiswal, Glenn R. Wylie and Fillia Makedon

Cited by 2 | Viewed by 7245

Abstract

Cognitive fatigue, a state of reduced mental capacity arising from prolonged cognitive activity, poses significant challenges in various domains, from road safety to workplace productivity. Accurately detecting and mitigating cognitive fatigue is crucial for ensuring optimal performance and minimizing potential risks. This paper presents a comprehensive survey of the current landscape in cognitive fatigue detection. We systematically review various approaches, encompassing physiological, behavioral, and performance-based measures, for robust and objective fatigue detection. The paper further analyzes different challenges, including the lack of standardized ground truth and the need for context-aware fatigue assessment. This survey aims to serve as a valuable resource for researchers and practitioners seeking to understand and address the multifaceted challenge of cognitive fatigue detection.

Open Access | Editor’s Choice | Review

A Survey of the Diagnosis of Peripheral Neuropathy Using Intelligent and Wearable Systems

by

Muhammad Talha, Maria Kyrarini and Ehsan Ali Buriro

Cited by 2 | Viewed by 3243

Abstract

In recent years, the usage of wearable systems in healthcare has gained much attention, as they can be easily worn by the subject and provide a continuous source of data required for the tracking and diagnosis of multiple kinds of abnormalities or diseases in the human body. Wearable systems can be made useful in improving a patient’s quality of life and at the same time reducing the overall cost of caring for individuals including the elderly. In this survey paper, the recent research in the development of intelligent wearable systems for the diagnosis of peripheral neuropathy is discussed. The paper provides detailed information about recent techniques based on different wearable sensors for the diagnosis of peripheral neuropathy including experimental protocols, biomarkers, and other specifications and parameters such as the type of signals and data processing methods, locations of sensors, the scales and tests used in the study, and the scope of the study. It also highlights challenges that are still present in order to make wearable devices more effective in the diagnosis of peripheral neuropathy in clinical settings.

Open Access | Editor’s Choice | Communication

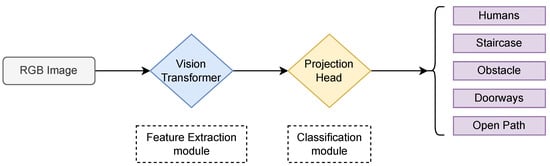

Towards Safe Visual Navigation of a Wheelchair Using Landmark Detection

by

Christos Sevastopoulos, Mohammad Zaki Zadeh, Michail Theofanidis, Sneh Acharya, Nishi Patel and Fillia Makedon

Cited by 1 | Viewed by 2186

Abstract

This article presents a method for extracting high-level semantic information through successful landmark detection using 2D RGB images. In particular, the focus is placed on the presence of particular labels (open path, humans, staircase, doorways, obstacles) in the encountered scene, which can be a fundamental source of information enhancing scene understanding and paving the path towards the safe navigation of the mobile unit. Experiments are conducted using a manual wheelchair to gather image instances from four indoor academic environments consisting of multiple labels. Afterwards, the fine-tuning of a pretrained vision transformer (ViT) is conducted, and the performance is evaluated through an ablation study versus well-established state-of-the-art deep architectures for image classification such as ResNet. Results show that the fine-tuned ViT outperforms all other deep convolutional architectures while achieving satisfactory levels of generalization.

Open Access | Communication

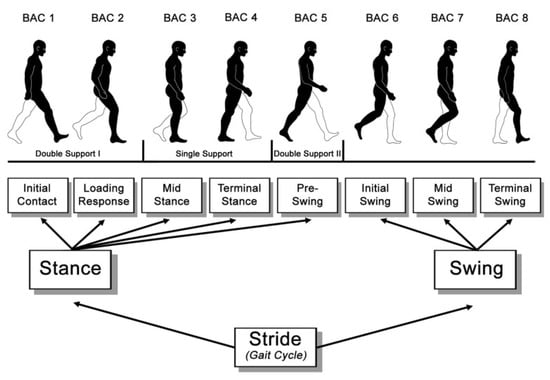

Assessment of Cognitive Fatigue from Gait Cycle Analysis

by

Hamza Reza Pavel, Enamul Karim, Ashish Jaiswal, Sneh Acharya, Gaurav Nale, Michail Theofanidis and Fillia Makedon

Cited by 4 | Viewed by 2811

Abstract

Cognitive Fatigue (CF) is the decline in cognitive abilities due to prolonged exposure to mentally demanding tasks. In this paper, we used gait cycle analysis, a biometric method related to human locomotion, to identify cognitive fatigue in individuals. The proposed system takes two asynchronous videos of the gait of individuals to classify whether they are cognitively fatigued. We leverage the pose estimation library OpenPose to extract the body keypoints from the frames in the videos. To capture the spatial and temporal information of the gait cycle, a CNN-based model is used in the system to extract the embedded features, which are then used to classify the cognitive fatigue level of individuals. To train and test the model, a gait dataset is built from 21 participants by collecting walking data before and after inducing cognitive fatigue using clinically used games. The proposed model can classify cognitive fatigue from the gait data of an individual with an accuracy of 81%.

Open Access | Article

Determination of “Neutral”–“Pain”, “Neutral”–“Pleasure”, and “Pleasure”–“Pain” Affective State Distances by Using AI Image Analysis of Facial Expressions

by

Hermann Prossinger, Tomáš Hladký, Silvia Boschetti, Daniel Říha and Jakub Binter

Cited by 1 | Viewed by 2255

Abstract

(1) Background: In addition to verbalizations, facial expressions advertise one’s affective state. There is an ongoing debate concerning the communicative value of the facial expressions of pain and of pleasure, and to what extent humans can distinguish between these. We introduce a novel method of analysis by replacing human ratings with outputs from image analysis software. (2) Methods: We use image analysis software to extract feature vectors of the facial expressions neutral, pain, and pleasure displayed by 20 actresses. We dimension-reduced these feature vectors, used singular value decomposition to eliminate noise, and then used hierarchical agglomerative clustering to detect patterns. (3) Results: The vector norms for pain–pleasure were rarely less than the distances pain–neutral and pleasure–neutral. The pain–pleasure distances were Weibull-distributed and noise contributed 10% to the signal. The noise-free distances clustered in four clusters and two isolates. (4) Conclusions: AI methods of image recognition are superior to human abilities in distinguishing between facial expressions of pain and pleasure. Statistical methods and hierarchical clustering offer possible explanations as to why humans fail. The reliability of commercial software, which attempts to identify facial expressions of affective states, can be improved by using the results of our analyses.

Open Access | Article

STAMINA: Bioinformatics Platform for Monitoring and Mitigating Pandemic Outbreaks

by

Nikolaos Bakalos, Maria Kaselimi, Nikolaos Doulamis, Anastasios Doulamis, Dimitrios Kalogeras, Mathaios Bimpas, Agapi Davradou, Aggeliki Vlachostergiou, Anaxagoras Fotopoulos, Maria Plakia, Alexandros Karalis, Sofia Tsekeridou, Themistoklis Anagnostopoulos, Angela Maria Despotopoulou, Ilaria Bonavita, Katrina Petersen, Leonidas Pelepes, Lefteris Voumvourakis, Anastasia Anagnostou, Derek Groen, Kate Mintram, Arindam Saha, Simon J. E. Taylor, Charon van der Ham, Patrick Kaleta, Dražen Ignjatović and Luca Rossi

Viewed by 3732

Abstract

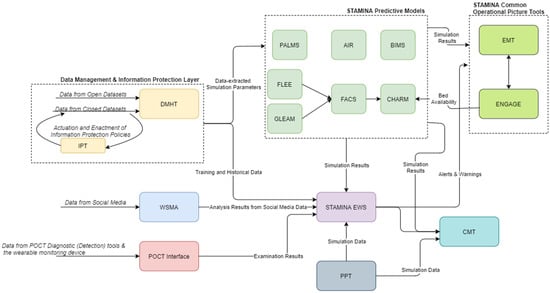

This paper presents the components and integrated outcome of a system that aims to achieve early detection, monitoring and mitigation of pandemic outbreaks. The architecture of the platform aims at providing a number of pandemic-response-related services, on a modular basis, that allows for the easy customization of the platform to address users’ needs per case. This customization is achieved through its ability to deploy only the necessary, loosely coupled services and tools for each case, and by providing a common authentication, data storage and data exchange infrastructure. This way, the platform can provide the necessary services without the burden of additional services that are not of use in the current deployment (e.g., predictive models for pathogens that are not endemic to the deployment area). All the decisions taken for the communication and integration of the tools that compose the platform adhere to this basic principle. The tools presented here, as well as their integration, are part of the project STAMINA.

Open Access | Editor’s Choice | Article

Continuous Emotion Recognition for Long-Term Behavior Modeling through Recurrent Neural Networks

by

Ioannis Kansizoglou, Evangelos Misirlis, Konstantinos Tsintotas and Antonios Gasteratos

Cited by 30 | Viewed by 4713

Abstract

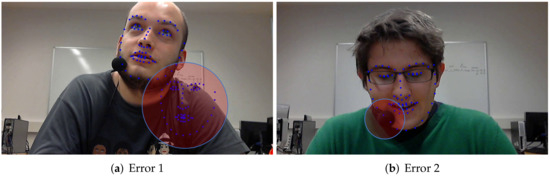

One’s internal state is mainly communicated through nonverbal cues, such as facial expressions, gestures and tone of voice, which in turn shape the corresponding emotional state. Hence, emotions can be effectively used, in the long term, to form an opinion of an individual’s overall personality. The latter can be capitalized on in many human–robot interaction (HRI) scenarios, such as in the case of an assisted-living robotic platform, where a human’s mood may entail the adaptation of a robot’s actions. To that end, we introduce a novel approach that gradually maps and learns the personality of a human, by conceiving and tracking the individual’s emotional variations throughout their interaction. The proposed system extracts the facial landmarks of the subject, which are used to train a suitably designed deep recurrent neural network architecture. The above architecture is responsible for estimating the two continuous coefficients of emotion, i.e., arousal and valence, following the broadly known Russell’s model. Finally, a user-friendly dashboard is created, presenting both the momentary and the long-term fluctuations of a subject’s emotional state. Therefore, we propose a handy tool for HRI scenarios where adaptation of the robot’s activity is needed for enhanced interaction performance and safety.

Open Access | Editor’s Choice | Article

Reliable Ultrasonic Obstacle Recognition for Outdoor Blind Navigation

by

Apostolos Meliones, Costas Filios and Jairo Llorente

Cited by 15 | Viewed by 5843

Abstract

A reliable state-of-the-art obstacle detection algorithm is proposed for a mobile application that will analyze in real time the data received by an external sonar device and decide the need to audibly warn the blind person about near field obstacles. The proposed algorithm can equip an orientation and navigation device that allows the blind person to walk safely autonomously outdoors. The smartphone application and the microelectronic external device will serve as a wearable that will help the safe outdoor navigation and guidance of blind people. The external device will collect information using an ultrasonic sensor and a GPS module. Its main objective is to detect the existence of obstacles in the path of the user and to provide information, through oral instructions, about the distance at which it is located, its size and its potential motion and to advise how it could be avoided. Subsequently, the blind can feel more confident, detecting obstacles via hearing before sensing them with the walking cane, including hazardous obstacles that cannot be sensed at the ground level. Besides presenting the micro-servo-motor ultrasonic obstacle detection algorithm, the paper also presents the external microelectronic device integrating the sonar module, the impulse noise filtering implementation, the power budget of the sonar module and the system evaluation. The presented work is an integral part of a state-of-the-art outdoor blind navigation smartphone application implemented in the MANTO project.

Open Access | Editor’s Choice | Article

The NESTORE e-Coach: Designing a Multi-Domain Pathway to Well-Being in Older Age

by

Leonardo Angelini, Mira El Kamali, Elena Mugellini, Omar Abou Khaled, Christina Röcke, Simone Porcelli, Alfonso Mastropietro, Giovanna Rizzo, Noemi Boqué, Josep Maria del Bas, Filippo Palumbo, Michele Girolami, Antonino Crivello, Canan Ziylan, Paula Subías-Beltrán, Silvia Orte, Carlo Emilio Standoli, Laura Fernandez Maldonado, Maurizio Caon, Martin Sykora, Suzanne Elayan, Sabrina Guye and Giuseppe Andreoni

Viewed by 4230

Abstract

This article describes the coaching strategies of the NESTORE e-coach, a virtual coach for promoting healthier lifestyles in older age. The novelty of the NESTORE project is the definition of a multi-domain personalized pathway where the e-coach accompanies the user throughout different structured and non-structured coaching activities and recommendations. The article also presents the design process of the coaching strategies, carried out including older adults from four European countries and experts from the different health domains, and the results of the tests carried out with 60 older adults in Italy, Spain and The Netherlands.

Open Access | Editor’s Choice | Article

Verifiable Surface Disinfection Using Ultraviolet Light with a Mobile Manipulation Robot

by

Alan G. Sanchez and William D. Smart

Cited by 3 | Viewed by 3311

Abstract

Robots are being increasingly used in the fight against highly infectious diseases such as the Novel Coronavirus (SARS-CoV-2). By using robots in place of human health care workers in disinfection tasks, we can reduce the exposure of these workers to the virus and, as a result, often dramatically reduce their risk of infection. Since healthcare workers are often disproportionately affected by large-scale infectious disease outbreaks, this risk reduction can profoundly affect our ability to fight these outbreaks. Many robots currently available for disinfection, however, are little more than mobile platforms for ultraviolet lights, do not allow fine-grained control over how the disinfection is performed, and do not allow verification that it was done as the human supervisor intended. In this paper, we present a semi-autonomous system, originally designed for the disinfection of surfaces in the context of Ebola Virus Disease (EVD), that allows a human supervisor to direct an autonomous robot to disinfect contaminated surfaces to a desired level, and to subsequently verify that this disinfection has taken place. We describe the overall system, the user interface, and how our calibration and modeling allow for reliable disinfection, and offer directions for future work to address open space disinfection tasks.

Open Access | Article

Fall Detection Using Multi-Property Spatiotemporal Autoencoders in Maritime Environments

by

Iason Katsamenis, Nikolaos Bakalos, Eleni Eirini Karolou, Anastasios Doulamis and Nikolaos Doulamis

Cited by 7 | Viewed by 3360

Abstract

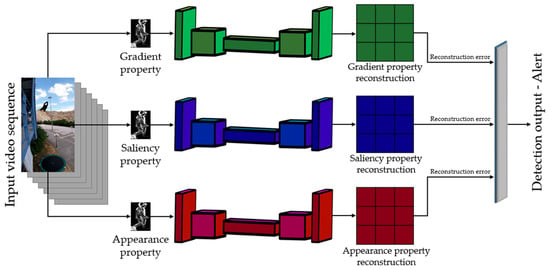

Man overboard is an emergency in which fast and efficient detection of the critical event is the key factor for the recovery of the victim. Its severity urges the utilization of intelligent video surveillance systems that monitor the ship’s perimeter in real time and trigger the relative alarms that initiate the rescue mission. In terms of deep learning analysis, since man overboard incidents occur rarely, they present a severe class imbalance problem, and thus, supervised classification methods are not suitable. To tackle this obstacle, we follow an alternative philosophy and present a novel deep learning framework that formulates man overboard identification as an anomaly detection task. The proposed system, in the absence of training data, utilizes a multi-property spatiotemporal convolutional autoencoder that is trained only on the normal situation. We explore the use of RGB video sequences to extract specific properties of the scene, such as gradient and saliency, and utilize the autoencoders to detect anomalies. To the best of our knowledge, this is the first time that man overboard detection is made in a fully unsupervised manner while jointly learning the spatiotemporal features from RGB video streams. The algorithm achieved 97.30% accuracy and a 96.01% F1-score, surpassing the other state-of-the-art approaches significantly.

Open Access | Article

Negotiating Learning Goals with Your Future Learning-Self

by

Konstantinos Tsiakas, Deborah Cnossen, Timothy H. C. Muyrers, Danique R. C. Stappers, Romain H. A. Toebosch and Emilia I. Barakova

Viewed by 2883

Abstract

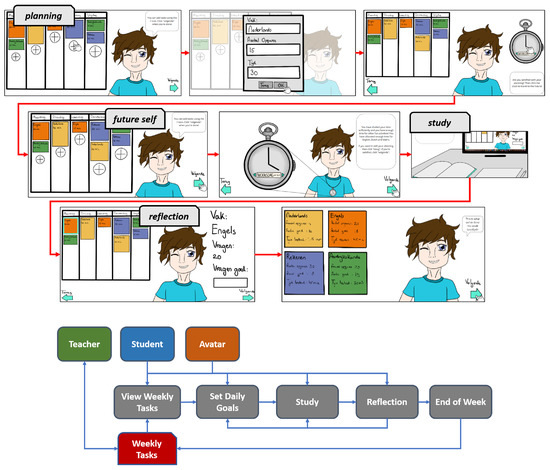

This paper discusses the challenges towards designing an educational avatar which visualizes the future learning-self of a student in order to promote their self-regulated learning skills. More specifically, the avatar follows a negotiation-based interaction with the student during the goal-setting process of self-regulated learning. The goal of the avatar is to help the student get insights of their possible future learning-self based on their daily goals. Our approach utilizes a Recurrent Neural Network as the underlying prediction model for expected learning outcomes and goal feasibility. In this paper, we present our ongoing work and design process towards an explainable and personalized educational avatar, focusing both on the avatar design and the human-algorithm interactions.

Open Access | Review

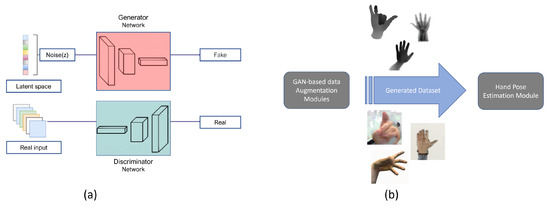

A Survey on GAN-Based Data Augmentation for Hand Pose Estimation Problem

by

Farnaz Farahanipad, Mohammad Rezaei, Mohammad Sadegh Nasr, Farhad Kamangar and Vassilis Athitsos

Cited by 16 | Viewed by 6770

Abstract

Deep learning solutions for hand pose estimation are now very reliant on comprehensive datasets covering diverse camera perspectives, lighting conditions, shapes, and pose variations. While acquiring such datasets is a challenging task, several studies circumvent this problem by exploiting synthetic data, but this does not guarantee that they will work well in real situations, mainly due to the gap between the distributions of synthetic and real data. One recent popular solution to the domain shift problem is learning the mapping function between different domains through generative adversarial networks. In this study, we present a comprehensive survey of effective hand pose estimation approaches that leverage generative adversarial networks (GANs) to provide a comprehensive training dataset with different modalities. Benefiting from GANs, these algorithms can augment data to a variety of hand shapes and poses where data manipulation is intuitively controlled and highly realistic. Next, we present related hand pose datasets and a performance comparison of some of these methods for the hand pose estimation problem. The quantitative and qualitative results indicate that the state-of-the-art hand pose estimators can be greatly improved with the aid of the training data generated by these GAN-based data augmentation methods. These methods are able to beat the baseline approaches with better visual quality and higher values in most of the metrics (PCK and ME) on both the STB and NYU datasets. Finally, the limitations of the current methods and future directions are discussed.

Open Access | Editor’s Choice | Article

Detection of Physical Strain and Fatigue in Industrial Environments Using Visual and Non-Visual Low-Cost Sensors

by

Konstantinos Papoutsakis, George Papadopoulos, Michail Maniadakis, Thodoris Papadopoulos, Manolis Lourakis, Maria Pateraki and Iraklis Varlamis

Cited by 14 | Viewed by 6124

Abstract

The detection and prevention of workers’ body-straining postures and other stressing conditions within the work environment support establishing occupational safety and promoting well-being and sustainability at work. Methods developed towards this aim typically rely on combining highly ergonomic workplaces with expensive monitoring mechanisms, including wearable devices. In this work, we demonstrate how the input from low-cost sensors, specifically passive camera sensors installed in a real manufacturing workplace and smartwatches worn by the workers, can provide useful feedback on the workers’ conditions and can yield key indicators for the prevention of work-related musculoskeletal disorders (WMSD) and physical fatigue. To this end, we study the ability to assess the risk of physical strain of workers online during work activities based on the classification of ergonomically sub-optimal working postures using visual information, the correlation and fusion of these estimations with synchronous worker heart rate data, and the prediction of near-future heart rate using deep learning-based techniques. Moreover, a new multi-modal dataset of video and heart rate data captured in a real manufacturing workplace during car door assembly activities is introduced. The experimental results show the efficiency of the proposed approach, which exceeds a 70% classification rate based on the F1 score measure using a set of over 300 annotated video clips of real line workers during work activities. In addition, a time-lagged correlation between the estimated ergonomic risks for physical strain and high heart rate was assessed using a larger dataset of synchronous visual and heart rate data sequences. The statistical analysis revealed that imposing increased strain on body parts results in an increase in heart rate after 100–120 s. This finding is used to improve the short-term forecasting of workers’ cardiovascular activity for the next 10 to 30 s by fusing the heart rate data with the estimated ergonomic risks for physical strain and, ultimately, to train better predictive models for worker fatigue.
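As a rough illustration of the time-lagged correlation analysis mentioned in this abstract, the following Python sketch scans candidate lags and reports the one at which a per-second ergonomic risk score correlates best with heart rate. It is a minimal sketch and not the authors' pipeline: the function names, the one-sample-per-second rate, the synthetic data, and the 110 s delay built into the toy signal are all assumptions made for illustration.

```python
import random
from statistics import mean, pstdev


def pearson(x, y):
    """Pearson correlation of two equal-length numeric sequences."""
    mx, my = mean(x), mean(y)
    sx, sy = pstdev(x), pstdev(y)
    if sx == 0 or sy == 0:
        return 0.0  # correlation is undefined for a constant series
    cov = mean((a - mx) * (b - my) for a, b in zip(x, y))
    return cov / (sx * sy)


def best_lag(risk, heart_rate, max_lag):
    """Return (lag, r) maximizing corr(risk[t], heart_rate[t + lag])."""
    best = (0, pearson(risk, heart_rate))
    for lag in range(1, max_lag + 1):
        r = pearson(risk[:-lag], heart_rate[lag:])
        if r > best[1]:
            best = (lag, r)
    return best


# Synthetic data (hypothetical): one sample per second, and the heart rate
# follows the risk score with an assumed delay of 110 s.
rng = random.Random(0)
risk = [rng.random() for _ in range(900)]
delay = 110
heart_rate = [70.0] * delay + [70.0 + 20.0 * r for r in risk[:-delay]]

lag, r = best_lag(risk, heart_rate, max_lag=180)
print(lag, round(r, 3))
```

On this toy signal the scan recovers the delay that was built in. On real recordings the peak would be broader and noisier, so the lag is better read as a range than as a single value.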

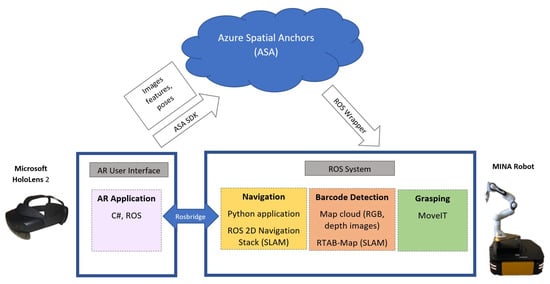

Open Access | Editor’s Choice | Article

MINA: A Robotic Assistant for Hospital Fetching Tasks

by

Harish Ram Nambiappan, Stephanie Arevalo Arboleda, Cody Lee Lundberg, Maria Kyrarini, Fillia Makedon and Nicholas Gans

Cited by 5 | Viewed by 5034

Abstract

In this paper, a robotic Multitasking Intelligent Nurse Aid (MINA) is proposed to assist nurses with everyday object fetching tasks. MINA consists of a manipulator arm on an omni-directional mobile base. Before the operation, an augmented reality interface was used to place waypoints. Waypoints can indicate the location of a patient, supply shelf, and other locations of interest. When commanded to retrieve an object, MINA uses simultaneous localization and mapping to map its environment and navigate to the supply shelf waypoint. At the shelf, MINA builds a 3D point cloud representation of the shelf and searches for barcodes to identify and localize the object it was sent to retrieve. Upon grasping the object, it returns to the user. Collision avoidance is incorporated during the mobile navigation and grasping tasks. We performed experiments to evaluate MINA’s efficacy including with obstacles along the path. The experimental results showed that MINA can repeatedly navigate to the specified waypoints and successfully perform the grasping and retrieval task.

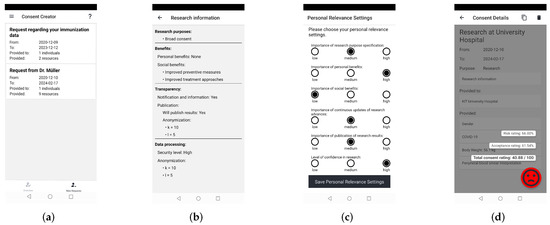

Open Access | Editor’s Choice | Article

Sovereign Digital Consent through Privacy Impact Quantification and Dynamic Consent

by

Arno Appenzeller, Marina Hornung, Thomas Kadow, Erik Krempel and Jürgen Beyerer

Cited by 6 | Viewed by 3575

Abstract

Digitization is becoming more and more important in the medical sector. Through electronic health records and the growing amount of digital patient data available, big data research finds an increasing number of use cases. The rising amount of data and the imposing privacy risks can be overwhelming for patients, who may feel that they have lost control of their data. Several previous studies on digital consent have tried to solve this problem and empower the patient. However, there is no complete solution for the arising questions yet. This paper presents the concept of Sovereign Digital Consent, which combines a consent privacy impact quantification with a technology for proactive sovereign consent. The privacy impact quantification helps the patient comprehend the potential risk of sharing the data and considers personal preferences regarding acceptance of a research project. The proactive dynamic consent implementation provides fine-granular digital consent using medical data categorization terminology. This gives patients the ability to control their consent decisions dynamically and is research friendly through the automatic enforcement of the patients’ consent decisions. Both technologies are evaluated and implemented in a prototypical application. With the combination of those technologies, a promising step towards patient empowerment through Sovereign Digital Consent can be made.

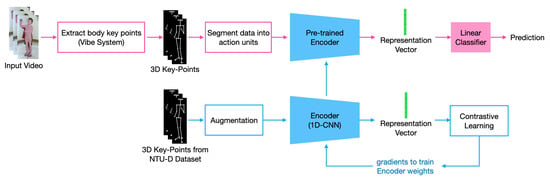

Open Access | Editor’s Choice | Article

Self-Supervised Human Activity Representation for Embodied Cognition Assessment

by

Mohammad Zaki Zadeh, Ashwin Ramesh Babu, Ashish Jaiswal and Fillia Makedon

Cited by 6 | Viewed by 3286

Abstract

Physical activities, according to the embodied cognition theory, are an important manifestation of cognitive functions. As a result, in this paper, the Activate Test of Embodied Cognition (ATEC) system is proposed to assess various cognitive measures. It consists of physical exercises with different variations and difficulty levels designed to provide assessment of executive and motor functions. This work focuses on obtaining human activity representation from recorded videos of ATEC tasks in order to automatically assess embodied cognition performance. A self-supervised approach is employed that can exploit a small set of annotated data to obtain an effective human activity representation. The performance of different self-supervised approaches, along with a supervised method, is investigated for automated cognitive assessment of children performing ATEC tasks. The results show that the performance of the supervised learning approach decreases as the training set becomes smaller, whereas the self-supervised methods maintain their performance by taking advantage of unlabeled data.

Open Access | Article

Insights on the Effect and Experience of a Diet-Tracking Application for Older Adults in a Diet Trial

by

Laura M. van der Lubbe, Michel C. A. Klein, Marjolein Visser, Hanneke A. H. Wijnhoven and Ilse Reinders

Viewed by 2846

Abstract

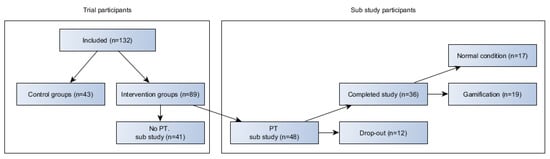

With an ageing population, healthy ageing becomes more important. Healthy nutrition is part of this process and can be supported in many ways. The PROMISS trial studies the effect of increasing protein intake in older adults on their physical functioning. Within this trial, a sub-study was performed, researching the added effect of using a diet-tracking app enhanced with persuasive and (optional) gamification techniques. The goal was to see how older adult participants received such technology within their diet program. There were 48 participants included in this sub-study, of which 36 completed the study period of 6 months. Our results on adherence and user evaluation show that a dedicated app used within the PROMISS trial is a feasible way to engage older adults in diet tracking. On average, participants used the app 83% of the days, during a period of on average 133 days. User-friendliness was evaluated with an average score of 4.86 (out of 7), and experienced effectiveness was evaluated with an average score of 4.57 (out of 7). However, no effect of the technology on protein intake was found. The added gamification elements did not have a different effect compared with the version without those elements. However, some participants did like the added gamification elements, and it can thus be nice to add them as additional features for participants that like them. This article also studies whether personal characteristics correlate with any of the other results. Although some significant results were found, this does not give a clear view on which types of participants like or benefit from this technology.

Open Access | Article

Adapt or Perish? Exploring the Effectiveness of Adaptive DoF Control Interaction Methods for Assistive Robot Arms

by

Kirill Kronhardt, Stephan Rübner, Max Pascher, Felix Ferdinand Goldau, Udo Frese and Jens Gerken

Cited by 8 | Viewed by 3584

Abstract

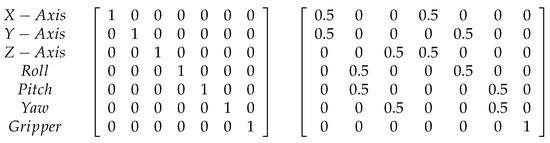

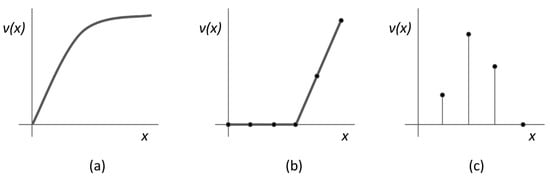

Robot arms are one of many assistive technologies used by people with motor impairments. Assistive robot arms can allow people to perform activities of daily living (ADL) involving grasping and manipulating objects in their environment without the assistance of caregivers. Suitable input devices (e.g., joysticks) mostly have two Degrees of Freedom (DoF), while most assistive robot arms have six or more. This results in time-consuming and cognitively demanding mode switches to change the mapping of DoFs to control the robot. One option to decrease the difficulty of controlling a high-DoF assistive robot arm using a low-DoF input device is to assign different combinations of movement-DoFs to the device’s input DoFs depending on the current situation (adaptive control). To explore this method of control, we designed two adaptive control methods for a realistic virtual 3D environment. We evaluated our methods against a commonly used non-adaptive control method that requires the user to switch controls manually. This was conducted in a simulated remote study that used Virtual Reality and involved 39 non-disabled participants. Our results show that the number of mode switches necessary to complete a simple pick-and-place task decreases significantly when using an adaptive control type. In contrast, the task completion time and workload stay the same. A thematic analysis of qualitative feedback of our participants suggests that a longer period of training could further improve the performance of adaptive control methods.

Open Access | Article

Improving Effectiveness of a Coaching System through Preference Learning

by

Martin Žnidaršič, Aljaž Osojnik, Peter Rupnik and Bernard Ženko

Viewed by 2243

Abstract

The paper describes an approach for indirect data-based assessment and use of user preferences in an unobtrusive sensor-based coaching system with the aim of improving coaching effectiveness. The preference assessments are used to adapt the reasoning components of the coaching system in a way to better align with the preferences of its users. User preferences are learned based on data that describe user feedback as reported for different coaching messages that were received by the users. The preferences are not learned directly, but are assessed through a proxy—classifications or probabilities of positive feedback as assigned by a predictive machine learned model of user feedback. The motivation and aim of such an indirect approach is to allow for preference estimation without burdening the users with interactive preference elicitation processes. A brief description of the coaching setting is provided in the paper, before the approach for preference assessment is described and illustrated on a real-world example obtained during the testing of the coaching system with elderly users.

Open Access | Article

An Affordable Upper-Limb Exoskeleton Concept for Rehabilitation Applications

by

Emanuele Palazzi, Luca Luzi, Eldison Dimo, Matteo Meneghetti, Rudy Vicario, Rafael Ferro Luzia, Rocco Vertechy and Andrea Calanca

Cited by 21 | Viewed by 6998

Abstract

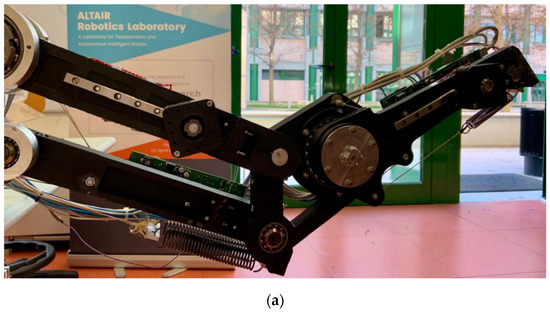

In recent decades, many researchers have focused on the design and development of exoskeletons. Several strategies have been proposed to develop increasingly more efficient and biomimetic mechanisms. However, existing exoskeletons tend to be expensive and only available for a few people. This paper introduces a new gravity-balanced upper-limb exoskeleton suited for rehabilitation applications and designed with the main objective of reducing the cost of the components and materials. Regarding mechanics, the proposed design significantly reduces the motor torque requirements, because a high cost is usually associated with high-torque actuation. Regarding the electronics, we aim to exploit the microprocessor peripherals to obtain parallel and real-time execution of communication and control tasks without relying on expensive RTOSs. Regarding sensing, we avoid the use of expensive force sensors. Advanced control and rehabilitation features are implemented, and an intuitive user interface is developed. To experimentally validate the functionality of the proposed exoskeleton, a rehabilitation exercise in the form of a pick-and-place task is considered. Experimentally, peak torques are reduced by 89% for the shoulder and by 84% for the elbow.

Open Access | Editor’s Choice | Article

Results of Preliminary Studies on the Perception of the Relationships between Objects Presented in a Cartesian Space

by

Ira Woodring and Charles Owen

Viewed by 2283

Abstract

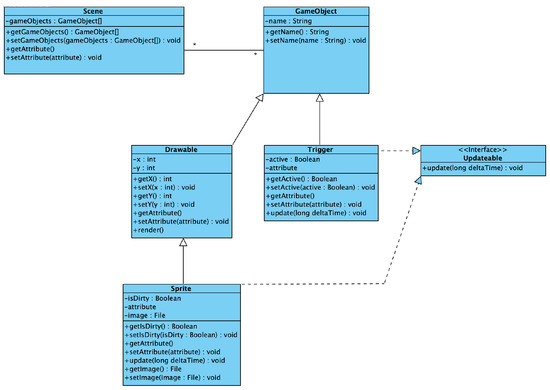

Visualizations often use the paradigm of a Cartesian space for the presentation of objects and information. Unified Modeling Language (UML) is a visual language used to describe relationships in processes and systems and is heavily used in computer science and software engineering. Visualizations are a powerful development tool, but are not necessarily accessible to all users, as individuals may differ in their level of visual ability or perceptual biases. Sonification methods can be used to supplement or, in some cases, replace visual models. This paper describes two studies created to determine the ability of users to perceive relationships between objects in a Cartesian space when presented in a sonified form. Results from these studies will be used to guide the creation of sonified UML software.

Open Access | Editor’s Choice | Article

On the Exploration of Automatic Building Extraction from RGB Satellite Images Using Deep Learning Architectures Based on U-Net

by

Anastasios Temenos, Nikos Temenos, Anastasios Doulamis and Nikolaos Doulamis

Cited by 13 | Viewed by 4231

Abstract

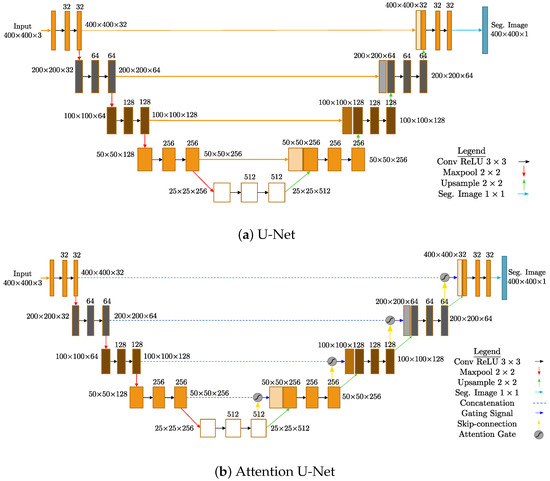

Detecting and localizing buildings is of primary importance in urban planning tasks. Automating the building extraction process, however, has become attractive given the dominance of Convolutional Neural Networks (CNNs) in image classification tasks. In this work, we explore the effectiveness of the CNN-based architecture U-Net and its variations, namely, the Residual U-Net, the Attention U-Net, and the Attention Residual U-Net, in automatic building extraction. We showcase their robustness in feature extraction and information processing using exclusively RGB images, as they are a low-cost alternative to multi-spectral and LiDAR ones, selected from the SpaceNet 1 dataset. The experimental results show that introducing residual blocks, attention gates, or a combination of both improves the accuracy of the vanilla U-Net. Finally, the comparison between U-Net architectures and typical deep learning approaches from the literature highlights their increased performance in accurate building localization around corners and edges.

Open Access | Article

Self-Organizing and Self-Explaining Pervasive Environments by Connecting Smart Objects and Applications

by

Börge Kordts, Bennet Gerlach and Andreas Schrader

Viewed by 2778

Abstract

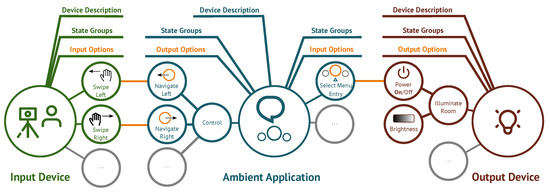

In the past decade, pervasive environments have progressed from promising research concepts to available products present in our everyday lives. By connecting multiple smart objects, device ensembles can be formed to assist users in performing tasks. Furthermore, smart objects can be used to control applications that, in turn, can be used to control other smart objects. As manual configuration is often time-consuming, an automatic connection of the components may be a useful tool, provided it takes various aspects into account. While dynamically connecting these components allows for solutions tailored to the needs and respective tasks of a user, it obscures how the ensemble is handled and may ultimately decrease usability. Self-descriptions have been proposed to overcome this issue for ensembles of smart objects. For a more extensive approach, descriptions of applications in pervasive environments need to be addressed as well. Based on previous research in the context of self-explainability of smart objects, we propose a description language as well as a framework to support self-explaining ambient applications (applications that are used within smart environments). The framework can be used to manually or automatically connect smart objects as well as ambient applications and to realize self-explainability for these interconnected device and application ensembles.

Open Access | Editor’s Choice | Article

A Simulated Environment for Robot Vision Experiments

by

Christos Sevastopoulos, Stasinos Konstantopoulos, Keshav Balaji, Mohammad Zaki Zadeh and Fillia Makedon

Cited by 5 | Viewed by 3214

Abstract

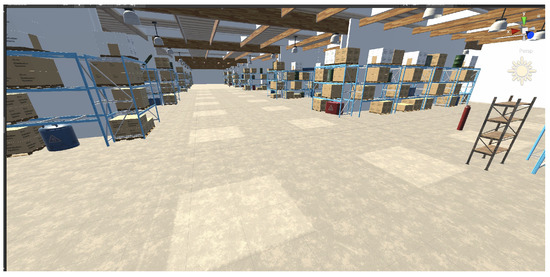

Training on simulation data has proven invaluable in applying machine learning in robotics. However, when looking at robot vision in particular, simulated images cannot be directly used no matter how realistic the image rendering is, as many physical parameters (temperature, humidity, wear and tear over time) vary and affect texture and lighting in ways that cannot be encoded in the simulation. In this article, we propose a different approach for extracting value from simulated environments: although the trained models cannot be used directly, and evaluation scores are not expected to be the same on simulated and physical data, the conclusions drawn from simulated experiments might still be valid. If this is the case, then simulated environments can be used in early-stage experimentation with different network architectures and features. This will expedite the early development phase before moving to (harder to conduct) physical experiments in order to evaluate the most promising approaches. In order to test this idea, we created two simulated environments for the Unity engine, acquired simulated visual datasets, and used them to reproduce experiments originally carried out in a physical environment. The comparison of the conclusions drawn in the physical and the simulated experiments is promising regarding the validity of our approach.

Open Access | Article

Does One Size Fit All? A Case Study to Discuss Findings of an Augmented Hands-Free Robot Teleoperation Concept for People with and without Motor Disabilities

by

Stephanie Arévalo Arboleda, Marvin Becker and Jens Gerken

Cited by 4 | Viewed by 3180

Abstract

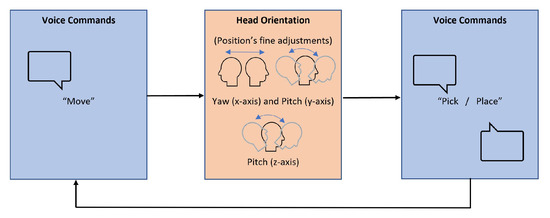

Hands-free robot teleoperation and augmented reality have the potential to create an inclusive environment for people with motor disabilities. They may allow them to teleoperate robotic arms to manipulate objects. However, the experiences evoked by the same teleoperation concept and augmented reality can vary significantly for people with motor disabilities compared to those without disabilities. In this paper, we report the experiences of Miss L., a person with multiple sclerosis, when teleoperating a robotic arm in a hands-free multimodal manner using a virtual menu and visual hints presented through the Microsoft HoloLens 2. We discuss our findings and compare her experiences to those of people without disabilities using the same teleoperation concept. Additionally, we present three learning points from comparing these experiences: re-evaluating the metrics used to measure performance, being aware of bias, and considering variability in abilities, which evokes different experiences. We consider that these learning points can be extrapolated to carrying out human–robot interaction evaluations with mixed groups of participants with and without disabilities.

Open Access | Editor’s Choice | Article

Visual Robotic Perception System with Incremental Learning for Child–Robot Interaction Scenarios

by

Niki Efthymiou, Panagiotis Paraskevas Filntisis, Gerasimos Potamianos and Petros Maragos

Cited by 4 | Viewed by 3445

Abstract

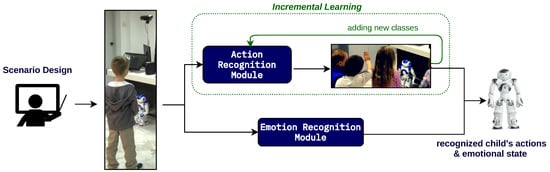

This paper proposes a novel lightweight visual perception system with Incremental Learning (IL), tailored to child–robot interaction scenarios. Specifically, this encompasses both an action and emotion recognition module, with the former wrapped around an IL system, allowing novel actions to be easily added. This IL system enables the tutor aspiring to use robotic agents in interaction scenarios to further customize the system according to children’s needs. We perform extensive evaluations of the developed modules, achieving state-of-the-art results on both the children’s action BabyRobot dataset and the children’s emotion EmoReact dataset. Finally, we demonstrate the robustness and effectiveness of the IL system for action recognition by conducting a thorough experimental analysis for various conditions and parameters.

Open Access | Editor’s Choice | Article

Multiclass Confusion Matrix Reduction Method and Its Application on Net Promoter Score Classification Problem

by

Ioannis Markoulidakis, Ioannis Rallis, Ioannis Georgoulas, George Kopsiaftis, Anastasios Doulamis and Nikolaos Doulamis

Cited by 121 | Viewed by 15577

Abstract

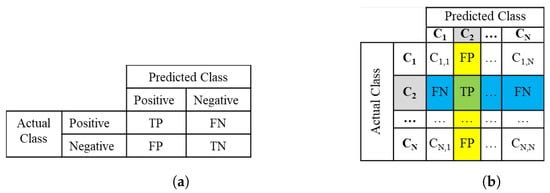

The current paper presents a novel method for reducing a multiclass confusion matrix into a 2 × 2 version, enabling the exploitation of the relevant performance metrics and methods, such as the receiver operating characteristic and area under the curve, for the assessment of different classification algorithms. The reduction method is based on class grouping and leads to a special type of matrix called the reduced confusion matrix. The developed method is then exploited for the assessment of state-of-the-art machine learning algorithms applied to the net promoter score classification problem in the field of customer experience analytics, indicating the value of the proposed method in real-world classification problems.
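To make the idea of reduction by class grouping more concrete, the Python sketch below collapses a multiclass confusion matrix into a binary one by treating a chosen group of classes as "positive" and all remaining classes as "negative". This is only one simple reading of class grouping and is not claimed to reproduce the paper's reduced confusion matrix; the function name, the row/column convention (rows are true classes, columns are predicted classes), and the three-class counts are assumptions for illustration.

```python
def reduce_confusion_matrix(cm, positive_classes):
    """Collapse a K x K confusion matrix into [[TP, FN], [FP, TN]].

    cm[i][j] counts samples of true class i predicted as class j.
    Classes in `positive_classes` form the positive group; the rest
    form the negative group.
    """
    pos = set(positive_classes)
    tp = fn = fp = tn = 0
    for i, row in enumerate(cm):
        for j, count in enumerate(row):
            if i in pos and j in pos:
                tp += count
            elif i in pos:
                fn += count
            elif j in pos:
                fp += count
            else:
                tn += count
    return [[tp, fn], [fp, tn]]


# Hypothetical 3-class counts: 0 = detractor, 1 = passive, 2 = promoter.
cm = [
    [50, 8, 2],
    [10, 30, 10],
    [3, 7, 80],
]

reduced = reduce_confusion_matrix(cm, positive_classes=[2])
(tp, fn), (fp, tn) = reduced
true_positive_rate = tp / (tp + fn)
false_positive_rate = fp / (fp + tn)
print(reduced, true_positive_rate, false_positive_rate)
```

Once the matrix is binary, quantities such as the true positive rate and false positive rate are defined in the usual way, which is what makes ROC-style analysis applicable.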

Open Access | Article

Image-Label Recovery on Fashion Data Using Image Similarity from Triple Siamese Network

by

Debapriya Banerjee, Maria Kyrarini and Won Hwa Kim

Cited by 2 | Viewed by 4361

Abstract

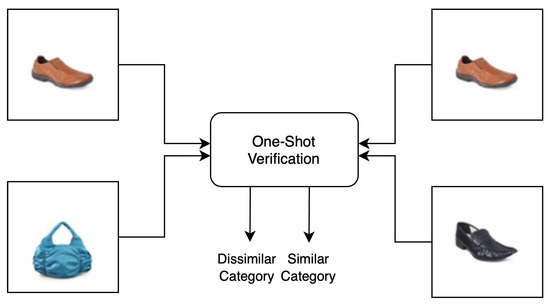

Weakly labeled data are inevitable in various research areas in artificial intelligence (AI) where one has a modicum of knowledge about the complete dataset. One of the reasons for weakly labeled data in AI is insufficient accurately labeled data. Strict privacy control or accidental loss may also cause missing-data problems. However, supervised machine learning (ML) requires accurately labeled data in order to successfully solve a problem. Data labeling is difficult and time-consuming as it requires manual work, perfect results, and sometimes the involvement of human experts (e.g., medical labeled data). In contrast, unlabeled data are inexpensive and easily available. Because labeled training data are scarce, researchers sometimes obtain only one or a few data points per category or label. Training a supervised ML model from such a small set of labeled data is a challenging task. The objective of this research is to recover missing labels from the dataset using a state-of-the-art semisupervised ML approach. In this work, a novel convolutional neural network-based framework is trained with a few instances of a class to perform metric learning. The dataset is then converted into a graph signal, which is recovered using a recover algorithm (RA) in graph Fourier transform. The proposed approach was evaluated on a Fashion dataset for accuracy and precision and performed significantly better than graph neural networks and other state-of-the-art methods.

Open Access | Editor’s Choice | Review

A Survey of Robots in Healthcare

by

Maria Kyrarini, Fotios Lygerakis, Akilesh Rajavenkatanarayanan, Christos Sevastopoulos, Harish Ram Nambiappan, Kodur Krishna Chaitanya, Ashwin Ramesh Babu, Joanne Mathew and Fillia Makedon

Cited by 231 | Viewed by 39543

Abstract

In recent years, with the current advancements in Robotics and Artificial Intelligence (AI), robots have the potential to support the field of healthcare. Robotic systems are often introduced in the care of the elderly, children, and persons with disabilities, in hospitals, in rehabilitation and walking assistance, and other healthcare situations. In this survey paper, the recent advances in robotic technology applied in the healthcare domain are discussed. The paper provides detailed information about state-of-the-art research in care, hospital, assistive, rehabilitation, and walking assisting robots. The paper also discusses the open challenges healthcare robots face to be integrated into our society.

Open Access | Feature Paper | Editor’s Choice | Review

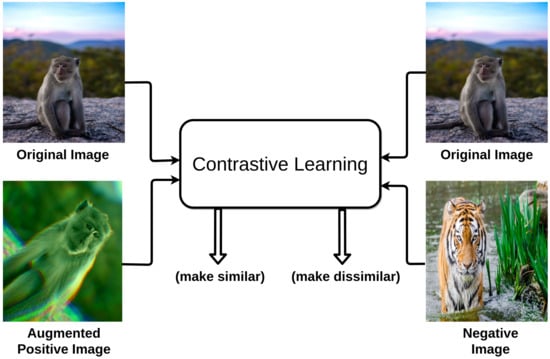

A Survey on Contrastive Self-Supervised Learning

by

Ashish Jaiswal, Ashwin Ramesh Babu, Mohammad Zaki Zadeh, Debapriya Banerjee and Fillia Makedon

Cited by 910 | Viewed by 55790

Abstract

Self-supervised learning has gained popularity because of its ability to avoid the cost of annotating large-scale datasets. It is capable of adopting self-defined pseudolabels as supervision and using the learned representations for several downstream tasks. Specifically, contrastive learning has recently become a dominant component in self-supervised learning for computer vision, natural language processing (NLP), and other domains. It aims at embedding augmented versions of the same sample close to each other while trying to push away embeddings from different samples. This paper provides an extensive review of self-supervised methods that follow the contrastive approach. The work explains commonly used pretext tasks in a contrastive learning setup, followed by different architectures that have been proposed so far. Next, we present a performance comparison of different methods for multiple downstream tasks such as image classification, object detection, and action recognition. Finally, we conclude with the limitations of the current methods and the need for further techniques and future directions to make meaningful progress.
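The pull-together, push-apart objective described in this abstract can be sketched with an InfoNCE-style loss, one common formulation of a contrastive loss. The Python example below is a minimal illustration for a single anchor and is not taken from the survey: the function names, the use of cosine similarity, the temperature of 0.1, and the toy 2D embeddings are assumptions.

```python
import math


def cosine(u, v):
    """Cosine similarity between two non-zero vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    return dot / (norm_u * norm_v)


def info_nce(anchor, positive, negatives, temperature=0.1):
    """InfoNCE-style loss for one anchor.

    Computes -log( exp(s_pos / T) / sum_k exp(s_k / T) ), where the sum
    runs over the positive and all negatives.
    """
    logits = [cosine(anchor, positive) / temperature]
    logits += [cosine(anchor, n) / temperature for n in negatives]
    m = max(logits)  # subtract the max for numerical stability
    log_sum = m + math.log(sum(math.exp(l - m) for l in logits))
    return log_sum - logits[0]


# Toy embeddings (hypothetical): the anchor and its augmented view point
# in nearly the same direction, while the other samples do not.
anchor = [1.0, 0.0]
loss_close = info_nce(anchor, [0.9, 0.1], negatives=[[0.0, 1.0], [-1.0, 0.0]])
loss_far = info_nce(anchor, [0.0, 1.0], negatives=[[0.9, 0.1], [-1.0, 0.0]])
print(loss_close, loss_far)
```

The loss is small when the positive pair is the most similar pair and large when a negative is closer to the anchor than the positive, which is the behavior a contrastive objective is meant to reward and penalize, respectively.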

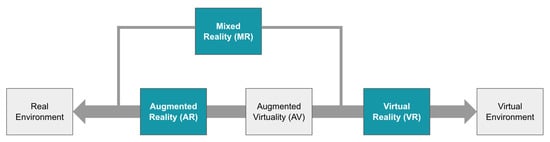

Open Access | Editor’s Choice | Article

A Review of Extended Reality (XR) Technologies for Manufacturing Training

by

Sanika Doolani, Callen Wessels, Varun Kanal, Christos Sevastopoulos, Ashish Jaiswal, Harish Nambiappan and Fillia Makedon

Cited by 168 | Viewed by 22984

Abstract

Recently, the use of extended reality (XR) systems has been on the rise in various domains such as training, education, and safety. With the recent advances in augmented reality (AR), virtual reality (VR), and mixed reality (MR) technologies and the availability of high-end commercial hardware, the manufacturing industry has seen a rise in the use of advanced XR technologies to train its workforce. While several research publications exist on applications of XR in manufacturing training, a comprehensive review of recent works and applications that presents clear progress in using such advanced technologies is lacking. To this end, we present a review of the current state of the art in the use of XR technologies for training personnel in the field of manufacturing. First, we put forth the need for XR in manufacturing. We then present several key application domains where XR is currently being applied, notably in maintenance training and in performing assembly tasks. We also review the applications of XR in other vocational domains and how they can be leveraged in the manufacturing industry. Finally, we present some current barriers to XR adoption in manufacturing training and highlight the current limitations that should be considered when looking to develop and apply practical applications of XR.

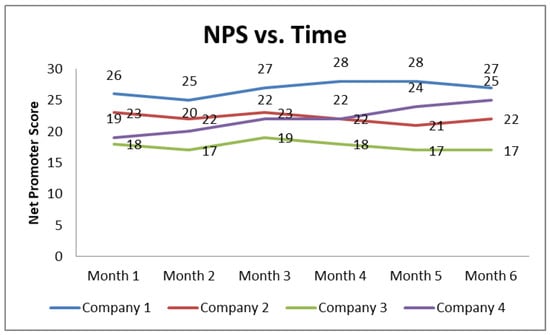

Open AccessEditor’s ChoiceArticle

A Machine Learning Based Classification Method for Customer Experience Survey Analysis

by

Ioannis Markoulidakis, Ioannis Rallis, Ioannis Georgoulas, George Kopsiaftis, Anastasios Doulamis and Nikolaos Doulamis

Cited by 11 | Viewed by 8710

Abstract

Customer Experience (CX) is monitored through market research surveys, based on metrics like the Net Promoter Score (NPS) and customer satisfaction with certain experience attributes (e.g., call center, website, billing, service quality, tariff plan). The objective of companies is to maximize NPS through the improvement of the most important CX attributes. However, statistical analysis suggests that there is a lack of clear and accurate association between NPS and the CX attributes’ scores. In this paper, we address the aforementioned deficiency using a novel classification approach, which was developed based on logistic regression and tested with several state-of-the-art machine learning (ML) algorithms. The proposed method was applied to an extended data set from the telecommunication sector and the results were quite promising, showing a significant improvement in most statistical metrics.
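
For readers unfamiliar with the metric, the sketch below computes NPS under its conventional definition: on a 0 to 10 "likelihood to recommend" scale, the percentage of promoters (9 or 10) minus the percentage of detractors (0 to 6). The abstract does not spell out this definition, so it is an assumption here. The function name and the ratings are hypothetical, and the classification method proposed in the paper is not reproduced.

```python
def net_promoter_score(ratings):
    """Conventional NPS: % promoters (9-10) minus % detractors (0-6)."""
    if not ratings:
        raise ValueError("at least one rating is required")
    promoters = sum(1 for r in ratings if r >= 9)
    detractors = sum(1 for r in ratings if r <= 6)
    return 100.0 * (promoters - detractors) / len(ratings)


# Made-up survey responses, for illustration only.
sample_ratings = [10, 9, 8, 7, 6, 3, 10, 9, 5, 8]
nps = net_promoter_score(sample_ratings)
print(nps)  # 4 promoters, 3 detractors, 10 responses -> 10.0
```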

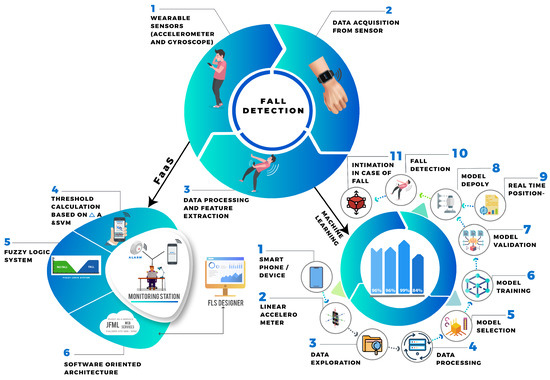

Open AccessEditor’s ChoiceArticle

Comparative Analysis of Real-Time Fall Detection Using Fuzzy Logic Web Services and Machine Learning

by

Bhavesh Pandya, Amir Pourabdollah and Ahmad Lotfi

Cited by 12 | Viewed by 3673

Abstract

Falls are the main cause of severe injuries for many people, especially older adults aged 65 and over. Typically, falls go unnoticed and are interpreted as mere inevitable accidents. Various wearable fall warning devices have been created recently for older people. However, most of these devices depend on local data processing. Various algorithms are used in wearable sensors to track falls effectively in real time; this study focuses on fall detection via fuzzy-as-a-service based on IEEE 1855–2016, Java Fuzzy Markup Language (FML) and service-oriented architecture. Moreover, several approaches are used to detect a fall using machine learning techniques via human movement positional data to avert any accidents. For fuzzy logic web services, analysis is performed using wearable accelerometer and gyroscope sensors, whereas in machine learning techniques, k-NN, decision tree, random forest and extreme gradient boosting are used to differentiate between a fall and a non-fall. This study carries out a comparative analysis of real-time fall detection using fuzzy logic web services and machine learning techniques to determine which is better suited to real-time fall detection. The findings show that the proposed fuzzy-as-a-service could easily differentiate between fall and non-fall occurrences in a real-time environment with an accuracy, sensitivity and specificity of 90%, 88.89% and 91.67%, respectively, while the random forest algorithm achieved 99.19%, 98.53% and 99.63%, respectively.
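
The three metrics quoted in this abstract follow from a binary confusion matrix, as the sketch below illustrates. The counts are hypothetical: they were chosen only so that the arithmetic lands on the percentages reported for fuzzy-as-a-service, and they are not the study's actual confusion matrix, which the abstract does not give. The function name is also an assumption.

```python
def binary_metrics(tp, fn, tn, fp):
    """Return (accuracy, sensitivity, specificity) for a fall detector.

    tp: falls detected as falls        fn: falls missed
    tn: non-falls detected as non-falls fp: non-falls flagged as falls
    """
    accuracy = (tp + tn) / (tp + fn + tn + fp)
    sensitivity = tp / (tp + fn)   # share of real falls that are caught
    specificity = tn / (tn + fp)   # share of non-falls correctly ignored
    return accuracy, sensitivity, specificity


# Hypothetical counts (NOT from the study), for illustration only.
accuracy, sensitivity, specificity = binary_metrics(tp=16, fn=2, tn=11, fp=1)

for name, value in [("accuracy", accuracy),
                    ("sensitivity", sensitivity),
                    ("specificity", specificity)]:
    print(f"{name}: {value * 100:.2f}%")
```

Sensitivity matters most when a missed fall is costly, while specificity governs how often a wearer is bothered by false alarms. This is why the abstract reports both alongside accuracy.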

Open AccessEditor’s ChoiceArticle

Deep Learning Based Fall Detection Algorithms for Embedded Systems, Smartwatches, and IoT Devices Using Accelerometers

by

Dimitri Kraft, Karthik Srinivasan and Gerald Bieber

Cited by 28 | Viewed by 6484

Abstract

A fall of an elderly person often leads to serious injuries or even death. Many falls occur in the home environment and remain unrecognized. Therefore, reliable fall detection is absolutely necessary for fast help. Wrist-worn accelerometer-based fall detection systems have been developed, but their accuracy and precision are not standardized, comparable, or sometimes even known. In this work, we present an overview of existing public databases with sensor-based fall datasets and harmonize existing wrist-worn datasets for a broader and more robust evaluation. Furthermore, we analyze the currently achievable recognition rate of fall detection using deep learning algorithms for mobile and embedded systems. The presented results and databases can be used for further research and optimization to increase the recognition rate and enhance the independent life of the elderly. Finally, we give an outlook on a convenient application and wrist device.

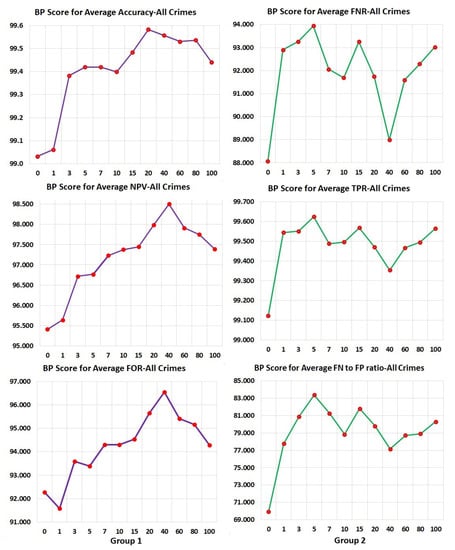

Open AccessArticle

Using Bias Parity Score to Find Feature-Rich Models with Least Relative Bias

by

Bhanu Jain, Manfred Huber, Ramez Elmasri and Leonidas Fegaras

Cited by 5 | Viewed by 3366

Abstract

Machine learning-based decision support systems bring relief and support to the decision-maker in many domains such as loan application acceptance, dating, hiring, granting parole, insurance coverage, and medical diagnoses. These support systems facilitate processing tremendous amounts of data to decipher the patterns embedded in them. However, these decisions can also absorb and amplify bias embedded in the data. To address this, the work presented in this paper introduces a new fairness measure as well as an enhanced, feature-rich representation derived from the temporal aspects in the data set that permits the selection of the lowest-bias model among the set of models learned on various versions of the augmented feature set. Specifically, our approach uses neural networks to forecast recidivism from many unique feature-rich models created from the same raw offender dataset. We create multiple records from one summarizing criminal record per offender in the raw dataset. This is achieved by grouping each set of arrest-to-release information into a unique record. We use offenders’ criminal history, substance abuse, and treatments taken during imprisonment in different numbers of past arrests to enrich the input feature vectors for the prediction models generated. We propose a fairness measure called Bias Parity (BP) score to measure a quantifiable decrease in bias in the prediction models. BP score leverages an existing intuition of bias awareness and summarizes it in a single measure. We demonstrate how BP score can be used to quantify bias for a variety of statistical quantities and how to associate disparate impact with this measure. By using our feature enrichment approach, we could increase the accuracy of predicting recidivism for the same dataset from 77.8% in another study to 89.2% in the current study while achieving an improved BP score computed for average accuracy of 99.4, where a value of 100 means no bias for the two subpopulation groups compared. Moreover, an analysis of the accuracy and BP scores for various levels of our feature augmentation method shows consistent trends among scores for a range of fairness measures, illustrating the benefit of the method for picking fairer models without significant loss of accuracy.

Open AccessArticle

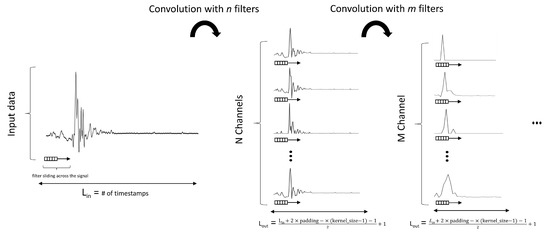

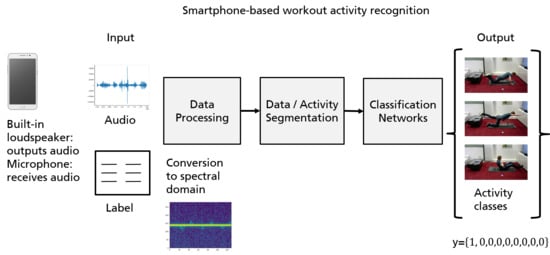

Performing Realistic Workout Activity Recognition on Consumer Smartphones

by

Biying Fu, Florian Kirchbuchner and Arjan Kuijper

Cited by 2 | Viewed by 2856

Abstract

Smartphones have become an essential part of our lives. In particular, their computing power and current specifications make a modern smartphone a powerful device for human activity recognition tasks. Equipped with various integrated sensors, a modern smartphone can be leveraged for many smart applications. We have already investigated the possibility of using an unmodified commercial smartphone to recognize eight strength-based exercises. App-based workouts have become popular in the last few years. The advantage of using a mobile device is that you can practice anywhere at any time. In our previous work, we showed that a commercial smartphone can be turned into an active sonar device to leverage the echo reflected from exercising movement close to the device. By conducting a test study with 14 participants, we showed the first results for cross-person evaluation and the generalization ability of our inference models on disjoint participants. In this work, we extended another model to further improve the model generalizability and provided a thorough comparison of our proposed system with other existing state-of-the-art approaches. Finally, a concept for counting repetitions is also provided in this study as a parallel task to classification.

Open AccessArticle

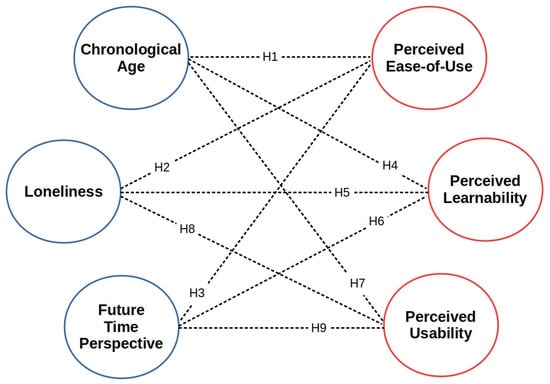

Connections between Older Greek Adults’ Implicit Attributes and Their Perceptions of Online Technologies

by

Diogenis Alexandrakis, Konstantinos Chorianopoulos and Nikolaos Tselios

Viewed by 3346

Abstract

Older Greek adults make use of web technologies much less than the majority of their peers in Europe. Given that psychosocial attributes can also affect technology usage, this exploratory quantitative research focuses on the implicit factors related to older Greek adults’ perceived usability, learnability, and ease-of-use of web technologies. To this end, a web 2.0 storytelling prototype was demonstrated to 112 participants and an online questionnaire was used for data collection. According to the results, distinct correlations emerged between older adults’ characteristics (chronological age, loneliness, future time perspective) and the perceived usability, learnability, and ease-of-use of the presented prototype. These outcomes contribute to the limited literature in the field by probing the connections between older people’s implicit attributes and their evaluative perceptions of online technologies.

Open AccessEditor’s ChoiceArticle

ExerTrack—Towards Smart Surfaces to Track Exercises

by

Biying Fu, Lennart Jarms, Florian Kirchbuchner and Arjan Kuijper

Cited by 9 | Viewed by 5707

Abstract

The concept of the quantified self has gained popularity in recent years with the hype of miniaturized gadgets to monitor vital fitness levels. Smartwatches or smartphone apps and other fitness trackers are overwhelming the market. Most aerobic exercises such as walking, running, or

[...] Read more.